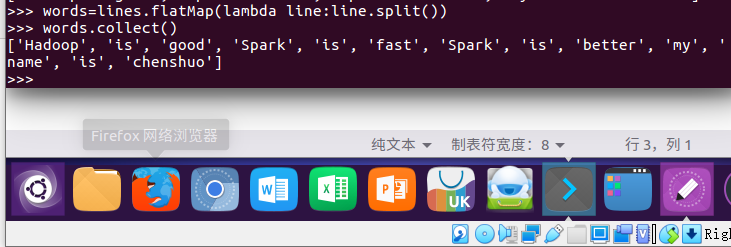

I. filter, map, and flatMap exercises:

1. Read a text file into an RDD named `lines`

    lines = sc.textFile("file:///home/hadoop/word.txt")  # read a local file
    lines.collect()

2. Split each line of text into words (`words`)

    words = lines.flatMap(lambda line: line.split())  # split lines into words
    words.collect()
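
To make the difference between map and flatMap concrete, here is a plain-Python sketch (no Spark needed) that imitates both operations on a made-up list of lines; the sample text is an assumption, not the real contents of word.txt. Calling split inside map gives one list per line, while flatMap flattens those lists into a single sequence of words.

```python
# Plain-Python stand-ins for RDD.map and RDD.flatMap on an in-memory list.
# The sample lines are hypothetical, chosen only for illustration.
sample_lines = ["Hadoop is good", "Spark is fast", "Spark is better"]

# map: exactly one output element per input line, so the result is a list of lists
mapped = [line.split() for line in sample_lines]

# flatMap: each line's word list is flattened into one list of words
flat_mapped = [word for line in sample_lines for word in line.split()]

print(mapped)
print(flat_mapped)
```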

3. Convert all words to lowercase

    words = words.map(lambda word: word.lower())  # convert to lowercase
    words.collect()

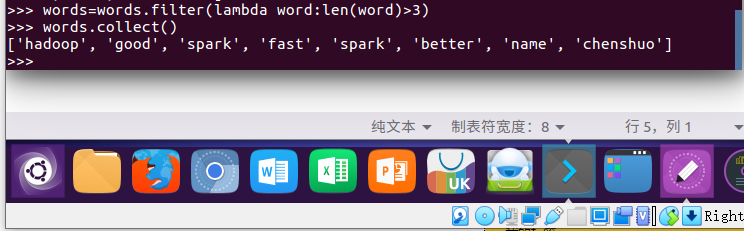

4. Remove words shorter than 3 characters

    words = words.filter(lambda word: len(word) >= 3)  # keep words with at least 3 characters
    words.collect()
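
The choice between `>` and `>=` decides whether 3-letter words survive the filter. The plain-Python sketch below uses a hypothetical word list, with list comprehensions standing in for RDD.filter, to show the boundary.

```python
# Hypothetical words; list comprehensions stand in for RDD.filter.
words = ["spark", "is", "the", "fast", "a"]

keep_ge3 = [w for w in words if len(w) >= 3]  # drops only words shorter than 3 characters
keep_gt3 = [w for w in words if len(w) > 3]   # also drops 3-letter words such as "the"

print(keep_ge3)
print(keep_gt3)
```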

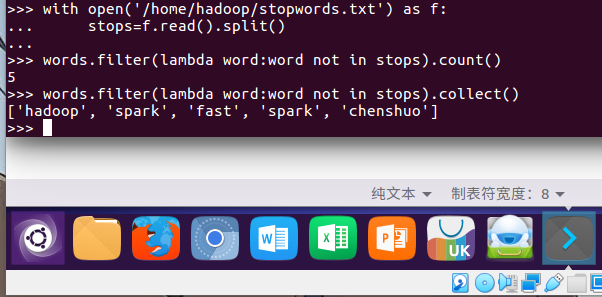

5. Remove stop words

    # load the stop-word list on the driver
    with open('/home/hadoop/stopwords.txt') as f:
        stops = f.read().split()
    words.filter(lambda word: word not in stops).count()
    words.filter(lambda word: word not in stops).collect()
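
Membership tests against a Python list scan the whole list, so converting the stop words to a set is a common tweak. The sketch below is plain Python: io.StringIO stands in for the real stopwords.txt, and its contents are hypothetical. In PySpark the filter's lambda captures `stops` and ships it with each task; for a large stop-word list, `sc.broadcast(stops)` is the usual way to share it, with the lambda then reading the broadcast variable's `.value`.

```python
import io

# io.StringIO stands in for open('/home/hadoop/stopwords.txt'); contents are hypothetical
stopword_file = io.StringIO("is\nthe\na\n")
stops = set(stopword_file.read().split())  # set gives constant-time membership tests

words = ["spark", "is", "the", "fast", "hadoop"]
filtered = [w for w in words if w not in stops]  # same predicate as the RDD filter

print(len(filtered), filtered)
```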

II. groupByKey exercises

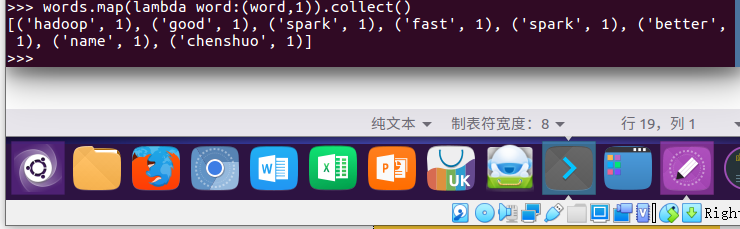

6. Build (word, 1) key-value pairs from the words of Exercise I

    words.map(lambda word: (word, 1)).collect()

7. Group the pairs by word

    words.map(lambda word: (word, 1)).groupByKey().collect()
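
groupByKey gathers every value that shares a key. The following is a plain-Python approximation using collections.defaultdict and a hypothetical word list; the helper name group_by_key is made up for illustration. Unlike Spark, which returns each key's values as an iterable and makes no promise about the order of the groups, this sketch returns lists in first-seen order.

```python
from collections import defaultdict


def group_by_key(pairs):
    """Collect all values for each key, approximating RDD.groupByKey (first-seen key order)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return list(groups.items())


words = ["spark", "hadoop", "spark", "fast", "spark"]  # hypothetical words
pairs = [(w, 1) for w in words]                         # like words.map(lambda word: (word, 1))
grouped = group_by_key(pairs)

print(grouped)
```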

8. Inspect the grouped result

    # print the values of the second (key, values) pair in the collected list
    for i in words.map(lambda word: (word, 1)).groupByKey().collect()[1][1]:
        print(i)
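
collect() returns a Python list of (key, values) pairs, so `collect()[1]` is the second pair and `collect()[1][1]` is its values. Which word ends up second depends on how Spark partitions the data, not on its position in the file. The sketch below uses a hypothetical collected result; it also sums each group's 1s to get word counts, which `reduceByKey` with `operator.add` produces directly without materializing the groups.

```python
# Hypothetical result of groupByKey().collect(): a list of (key, values) pairs
collected = [("spark", [1, 1, 1]), ("hadoop", [1]), ("fast", [1])]

second_key, second_values = collected[1]  # collected[1] is the second (key, values) pair
print(second_key)
for i in second_values:                   # collected[1][1] is that pair's values
    print(i)

# Summing each group's 1s gives word counts, the same totals reduceByKey(add) would yield
counts = {key: sum(values) for key, values in collected}
print(counts)
```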

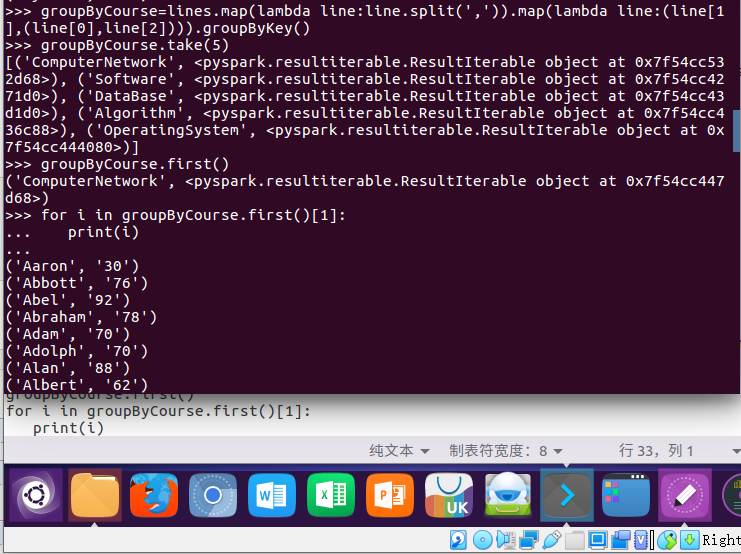

Exercises with the student course-score file:

0. Upload the data file

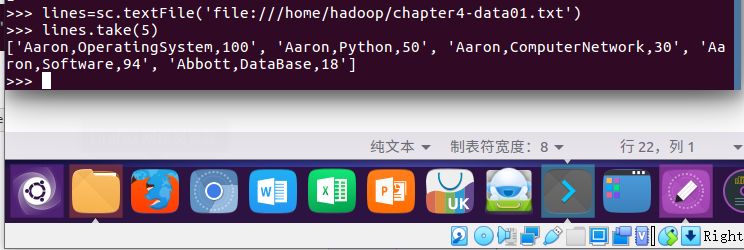

1. Read the university computer science department's score dataset into an RDD

    lines = sc.textFile('file:///home/hadoop/chapter4-data01.txt')
    lines.take(5)
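
Each element of `lines` is one raw text line. Assuming the file holds comma-separated `name,course,score` records (the sample rows below are hypothetical, as is the helper name parse), a plain-Python sketch of parsing them looks like this; converting the score to int is an added step so the score can be used numerically later.

```python
# Hypothetical rows in the assumed "name,course,score" format
sample = ["Aaron,OperatingSystem,100", "Aaron,Python,50", "Abbott,DataBase,80"]


def parse(line):
    """Split one comma-separated record into (name, course, score as int)."""
    name, course, score = line.strip().split(',')
    return name, course, int(score)


records = [parse(line) for line in sample]
print(records[:5])  # analogous to lines.take(5), but after parsing
```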

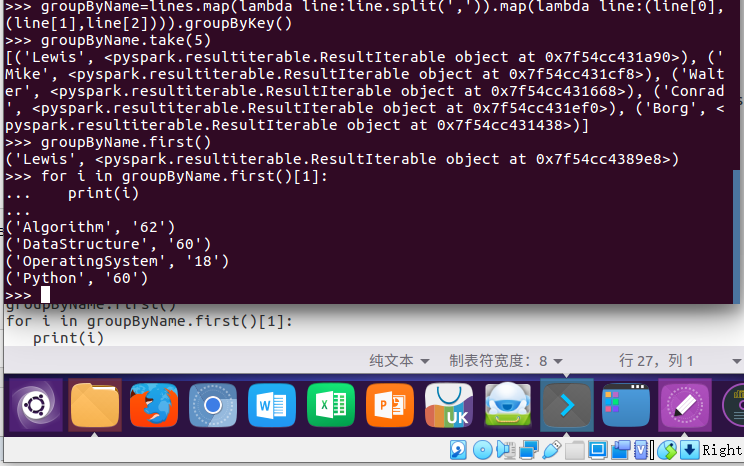

2. Collect all course scores for each student

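    groupByName = (lines.map(lambda line: line.split(','))
                   .map(lambda line: (line[0], (line[1], line[2])))  # key: student name; value: (course, score)
                   .groupByKey())
    groupByName.take(5)
    groupByName.first()
    # print every (course, score) pair of the first student
    for i in groupByName.first()[1]:
        print(i)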
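
The map step keys each record by student name and keeps (course, score) as the value. Here is a plain-Python sketch of the same grouping, using hypothetical rows in the assumed `name,course,score` format and a defaultdict in place of groupByKey.

```python
from collections import defaultdict

# Hypothetical rows in the assumed "name,course,score" format
sample = ["Aaron,OperatingSystem,100", "Aaron,Python,50", "Abbott,DataBase,80"]

by_name = defaultdict(list)
for line in sample:
    fields = line.split(',')
    # same shape as map(lambda line: (line[0], (line[1], line[2])))
    by_name[fields[0]].append((fields[1], fields[2]))

for course_score in by_name["Aaron"]:
    print(course_score)
```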

3. Collect all student scores for each course

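    groupByCourse = (lines.map(lambda line: line.split(','))
                     .map(lambda line: (line[1], (line[0], line[2])))  # key: course; value: (student name, score)
                     .groupByKey())
    groupByCourse.first()
    # print every (student name, score) pair of the first course
    for i in groupByCourse.first()[1]:
        print(i)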
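
Grouping by student and grouping by course differ only in which field becomes the key. One possible generalization, written as a hypothetical plain-Python helper group_records, takes the key's field index and keeps the remaining fields in their original order; the sample rows are again assumed, not taken from chapter4-data01.txt.

```python
from collections import defaultdict


def group_records(lines, key_idx):
    """Group comma-separated records by field key_idx; values keep the other fields in order."""
    groups = defaultdict(list)
    for line in lines:
        fields = line.split(',')
        rest = tuple(f for i, f in enumerate(fields) if i != key_idx)
        groups[fields[key_idx]].append(rest)
    return dict(groups)


# Hypothetical rows in the assumed "name,course,score" format
sample = ["Aaron,OperatingSystem,100", "Aaron,Python,50", "Abbott,Python,80"]

by_course = group_records(sample, 1)  # like (line[1], (line[0], line[2]))
by_name = group_records(sample, 0)    # like (line[0], (line[1], line[2]))

print(by_course["Python"])
```

Keeping the key index as a parameter avoids duplicating the split-and-regroup logic for each grouping.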