Online deployable, install k8s

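Kubeflow has three core components

TFJob Operator and Controller:

An extension to Kubernetes that simplifies deploying distributed TensorFlow workloads. Through the Operator, Kubeflow can automatically configure the master, worker, and parameter servers. Workloads are deployed with a TFJob.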

    $ kubectl describe deploy tf-job-operator-v1alpha2 -n kubeflow
    Name:                   tf-job-operator-v1alpha2
    Namespace:              kubeflow
    CreationTimestamp:      Mon, 03 Dec 2018 12:51:55 +0000
    Labels:                 app.kubernetes.io/deploy-manager=ksonnet
                            ksonnet.io/component=tf-job-operator
    Annotations:            deployment.kubernetes.io/revision: 1
                            ksonnet.io/managed: {"pristine":"H4sIAAAAAAAA/5yRTY8TMQyG7/wMn+djB24jcajY5dalAgkJrarKk3q6oUkcJZ4BtMp/R95+IdFy4ObE9vO+tl8Ao/1KKVsO0AP9FAoa53buBhLsoIK9DVvo4Z6i4...
    Selector:               name=tf-job-operator
    Replicas:               1 desired | 1 updated | 1 total | 0 available | 1 unavailable
    StrategyType:           RollingUpdate
    MinReadySeconds:        0
    RollingUpdateStrategy:  1 max unavailable, 1 max surge
    Pod Template:
      Labels:           name=tf-job-operator
      Service Account:  tf-job-operator
      Containers:
       tf-job-operator:
        Image:      gcr.io/kubeflow-images-public/tf_operator:v0.3.0
        Port:       <none>
        Host Port:  <none>
        Command:
          /opt/kubeflow/tf-operator.v2
          --alsologtostderr
          -v=1
        Environment:
          MY_POD_NAMESPACE:   (v1:metadata.namespace)
          MY_POD_NAME:        (v1:metadata.name)
        Mounts:
          /etc/config from config-volume (rw)
      Volumes:
       config-volume:
        Type:      ConfigMap (a volume populated by a ConfigMap)
        Name:      tf-job-operator-config
        Optional:  false
    Conditions:
      Type           Status  Reason
      ----           ------  ------
      Available      True    MinimumReplicasAvailable
    OldReplicaSets:  <none>
    NewReplicaSet:   tf-job-operator-v1alpha2-644c5f7db7 (1/1 replicas created)
    Events:          <none>

    $ kubectl -n kubeflow describe pod tf-job-operator
    Name:               tf-job-operator-v1alpha2-644c5f7db7-dsttx
    Namespace:          kubeflow
    Priority:           0
    PriorityClassName:  <none>
    Node:               kubeflow-1/10.0.0.43
    Start Time:         Mon, 03 Dec 2018 12:51:58 +0000
    Labels:             name=tf-job-operator
                        pod-template-hash=644c5f7db7
    Annotations:        <none>
    Status:             Pending
    IP:
    Controlled By:      ReplicaSet/tf-job-operator-v1alpha2-644c5f7db7
    Containers:
      tf-job-operator:
        Container ID:
        Image:          gcr.io/kubeflow-images-public/tf_operator:v0.3.0
        Image ID:
        Port:           <none>
        Host Port:      <none>
        Command:
          /opt/kubeflow/tf-operator.v2
          --alsologtostderr
          -v=1
        State:          Waiting
          Reason:       ContainerCreating
        Ready:          False
        Restart Count:  0
        Environment:
          MY_POD_NAMESPACE:  kubeflow (v1:metadata.namespace)
          MY_POD_NAME:       tf-job-operator-v1alpha2-644c5f7db7-dsttx (v1:metadata.name)
        Mounts:
          /etc/config from config-volume (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from tf-job-operator-token-fr42l (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      config-volume:
        Type:      ConfigMap (a volume populated by a ConfigMap)
        Name:      tf-job-operator-config
        Optional:  false
      tf-job-operator-token-fr42l:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  tf-job-operator-token-fr42l
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type     Reason                  Age                      From                 Message
      ----     ------                  ----                     ----                 -------
      Normal   SandboxChanged          2d9h (x1330 over 2d17h)  kubelet, kubeflow-1  Pod sandbox changed, it will be killed and re-created.
      Warning  FailedCreatePodSandBox  2d9h (x1335 over 2d17h)  kubelet, kubeflow-1  (combined from similar events): Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "9c0524a3122c673a81d2652d6755e46c721f57d67990a20f636abf806dab9c0e" network for pod "tf-job-operator-v1alpha2-644c5f7db7-dsttx": NetworkPlugin cni failed to set up pod "tf-job-operator-v1alpha2-644c5f7db7-dsttx_kubeflow" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/
      Warning  FailedMount             24m                      kubelet, kubeflow-1  MountVolume.SetUp failed for volume "config-volume" : couldn't propagate object cache: timed out waiting for the condition
      Warning  FailedCreatePodSandBox  24m                      kubelet, kubeflow-1  Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "76b89d8178a3247f0c906d9eb541b73c847be4f863d5da15efd3a578487cee7c" network for pod "tf-job-operator-v1alpha2-644c5f7db7-dsttx": NetworkPlugin cni failed to set up pod "tf-job-operator-v1alpha2-644c5f7db7-dsttx_kubeflow" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

    $ kubectl -n kubeflow describe deploy tf-job-dashboard
    Name:                   tf-job-dashboard
    Namespace:              kubeflow
    CreationTimestamp:      Mon, 03 Dec 2018 12:51:56 +0000
    Labels:                 app.kubernetes.io/deploy-manager=ksonnet
                            ksonnet.io/component=tf-job-operator
    Annotations:            deployment.kubernetes.io/revision: 1
                            ksonnet.io/managed: {"pristine":"H4sIAAAAAAAA/3yQzY4TMRCE7zxGn+dvxSXyLUD2AiwRCDisoqjH07NrxnZbds8AivzuyCE/HMjeym53les7AAbzjWIy7EEB/RLyRad2uetJ8A4qmIwfQME7CpZ/O...
    Selector:               name=tf-job-dashboard
    Replicas:               1 desired | 1 updated | 1 total | 0 available | 1 unavailable
    StrategyType:           RollingUpdate
    MinReadySeconds:        0
    RollingUpdateStrategy:  1 max unavailable, 1 max surge
    Pod Template:
      Labels:           name=tf-job-dashboard
      Service Account:  tf-job-dashboard
      Containers:
       tf-job-dashboard:
        Image:      gcr.io/kubeflow-images-public/tf_operator:v0.3.0
        Port:       8080/TCP
        Host Port:  0/TCP
        Command:
          /opt/tensorflow_k8s/dashboard/backend
        Environment:
          KUBEFLOW_NAMESPACE:   (v1:metadata.namespace)
        Mounts:                 <none>
      Volumes:                  <none>
    Conditions:
      Type           Status  Reason
      ----           ------  ------
      Available      True    MinimumReplicasAvailable
    OldReplicaSets:  <none>
    NewReplicaSet:   tf-job-dashboard-7499d5cbcf (1/1 replicas created)
    Events:          <none>

    $ kubectl -n kubeflow describe pod tf-job-dashboard
    Name:               tf-job-dashboard-7499d5cbcf-hzhcf
    Namespace:          kubeflow
    Priority:           0
    PriorityClassName:  <none>
    Node:               kubeflow-1/10.0.0.43
    Start Time:         Mon, 03 Dec 2018 12:51:58 +0000
    Labels:             name=tf-job-dashboard
                        pod-template-hash=7499d5cbcf
    Annotations:        <none>
    Status:             Pending
    IP:
    Controlled By:      ReplicaSet/tf-job-dashboard-7499d5cbcf
    Containers:
      tf-job-dashboard:
        Container ID:
        Image:          gcr.io/kubeflow-images-public/tf_operator:v0.3.0
        Image ID:
        Port:           8080/TCP
        Host Port:      0/TCP
        Command:
          /opt/tensorflow_k8s/dashboard/backend
        State:          Waiting
          Reason:       ContainerCreating
        Ready:          False
        Restart Count:  0
        Environment:
          KUBEFLOW_NAMESPACE:  kubeflow (v1:metadata.namespace)
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from tf-job-dashboard-token-452x9 (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      tf-job-dashboard-token-452x9:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  tf-job-dashboard-token-452x9
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type     Reason                  Age                      From                 Message
      ----     ------                  ----                     ----                 -------
      Warning  FailedCreatePodSandBox  32m (x1084 over 3h31m)   kubelet, kubeflow-1  (combined from similar events): Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "b4e475c7cf75d4086f91547e3a88632ca34c494c5e454601711a7588c8a0a22c" network for pod "tf-job-dashboard-7499d5cbcf-hzhcf": NetworkPlugin cni failed to set up pod "tf-job-dashboard-7499d5cbcf-hzhcf_kubeflow" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/
      Normal   SandboxChanged          2m29s (x1255 over 3h32m) kubelet, kubeflow-1  Pod sandbox changed, it will be killed and re-created.

TF Hub:

A running instance of JupyterHub, through which Jupyter notebooks are used.

    $ kubectl -n kubeflow get pod tf-hub-0 -o yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: 2018-12-03T12:51:35Z
      generateName: tf-hub-
      labels:
        app: tf-hub
        controller-revision-hash: tf-hub-755f7566bd
        statefulset.kubernetes.io/pod-name: tf-hub-0
      name: tf-hub-0
      namespace: kubeflow
      ownerReferences:
      - apiVersion: apps/v1
        blockOwnerDeletion: true
        controller: true
        kind: StatefulSet
        name: tf-hub
        uid: 2a91c29b-f6fa-11e8-8183-fa163ed7118a
      resourceVersion: "319408"
      selfLink: /api/v1/namespaces/kubeflow/pods/tf-hub-0
      uid: 2a953b58-f6fa-11e8-8183-fa163ed7118a
    spec:
      containers:
      - command:
        - jupyterhub
        - -f
        - /etc/config/jupyterhub_config.py
        env:
        - name: NOTEBOOK_PVC_MOUNT
          value: /home/jovyan
        - name: CLOUD_NAME
          value: "null"
        - name: REGISTRY
          value: gcr.io
        - name: REPO_NAME
          value: kubeflow-images-public
        - name: KF_AUTHENTICATOR
          value: "null"
        - name: DEFAULT_JUPYTERLAB
          value: "false"
        - name: KF_PVC_LIST
          value: "null"
        image: gcr.io/kubeflow/jupyterhub-k8s:v20180531-3bb991b1
        imagePullPolicy: IfNotPresent
        name: tf-hub
        ports:
        - containerPort: 8000
          protocol: TCP
        - containerPort: 8081
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /etc/config
          name: config-volume
        - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
          name: jupyter-hub-token-hs8h8
          readOnly: true
      dnsPolicy: ClusterFirst
      hostname: tf-hub-0
      nodeName: kubeflow-1
      priority: 0
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      serviceAccount: jupyter-hub
      serviceAccountName: jupyter-hub
      terminationGracePeriodSeconds: 30
      tolerations:
      - effect: NoExecute
        key: node.kubernetes.io/not-ready
        operator: Exists
        tolerationSeconds: 300
      - effect: NoExecute
        key: node.kubernetes.io/unreachable
        operator: Exists
        tolerationSeconds: 300
      volumes:
      - configMap:
          defaultMode: 420
          name: jupyterhub-config
        name: config-volume
      - name: jupyter-hub-token-hs8h8
        secret:
          defaultMode: 420
          secretName: jupyter-hub-token-hs8h8
    status:
      conditions:
      - lastProbeTime: null
        lastTransitionTime: 2018-12-03T12:51:35Z
        status: "True"
        type: Initialized
      - lastProbeTime: null
        lastTransitionTime: 2018-12-03T12:51:35Z
        message: 'containers with unready status: [tf-hub]'
        reason: ContainersNotReady
        status: "False"
        type: Ready
      - lastProbeTime: null
        lastTransitionTime: 2018-12-03T12:51:35Z
        message: 'containers with unready status: [tf-hub]'
        reason: ContainersNotReady
        status: "False"
        type: ContainersReady
      - lastProbeTime: null
        lastTransitionTime: 2018-12-03T12:51:35Z
        status: "True"
        type: PodScheduled
      containerStatuses:
      - image: gcr.io/kubeflow/jupyterhub-k8s:v20180531-3bb991b1
        imageID: ""
        lastState: {}
        name: tf-hub
        ready: false
        restartCount: 0
        state:
          waiting:
            reason: ContainerCreating
      hostIP: 10.0.0.43
      phase: Pending
      qosClass: BestEffort
      startTime: 2018-12-03T12:51:35Z

    $ kubectl -n kubeflow get svc tf-hub-0 -o yaml
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        ksonnet.io/managed: '{"pristine":"H4sIAAAAAAAA/2SOsU4DQQxEez5j6gscHdo/oEFISDSIwtk4ypI7e7X2BqHT/jsyETSUfprxvA1Uyys3KypIuNxjwrnIAQkv3C4lMyas7HQgJ6QNJKJOXlQsztp0ZT9xt9uid5YbVUaCt84YExba8/ITpFqDH3envo8NUxH2KGVdqwqLI+Gj1y/nFpkxQWjlv9JuxpVYpRz43Pd8XPQzklY5x0peujm3x2ckPKmEfNXmhvS2/b67CgRGepjnebxPMF44u7Z/pmOMm28AAAD//wEAAP//d9+FryQBAAA="}'
        prometheus.io/scrape: "true"
      creationTimestamp: 2018-12-03T12:51:34Z
      labels:
        app: tf-hub
        app.kubernetes.io/deploy-manager: ksonnet
        ksonnet.io/component: jupyterhub
      name: tf-hub-0
      namespace: kubeflow
      resourceVersion: "319385"
      selfLink: /api/v1/namespaces/kubeflow/services/tf-hub-0
      uid: 2a5a66bd-f6fa-11e8-8183-fa163ed7118a
    spec:
      clusterIP: None
      ports:
      - name: hub
        port: 8000
        protocol: TCP
        targetPort: 8000
      selector:
        app: tf-hub
      sessionAffinity: None
      type: ClusterIP
    status:
      loadBalancer: {}

    $ kubectl -n kubeflow get svc tf-hub-lb -o yaml
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        getambassador.io/config: |-
          ---
          apiVersion: ambassador/v0
          kind: Mapping
          name: tf-hub-lb-hub-mapping
          prefix: /hub/
          rewrite: /hub/
          timeout_ms: 300000
          service: tf-hub-lb.kubeflow
          use_websocket: true
          ---
          apiVersion: ambassador/v0
          kind: Mapping
          name: tf-hub-lb-user-mapping
          prefix: /user/
          rewrite: /user/
          timeout_ms: 300000
          service: tf-hub-lb.kubeflow
          use_websocket: true
        ksonnet.io/managed: '{"pristine":"H4sIAAAAAAAA/6yRP4/bMAzF934Kg7Mdu+hSaO3UoUCAAl3qQ0A5tKOLTQkilVwQ+LsflL93wW13WgQ+kY8/4R0Bg/tHUZxnMLD7DiVsHa/BwF+KO9cRlDCR4hoVwRwBmb2iOs+Sy4EUJ4siuPZx4Xzdee7dAAaqqmr5bm6Ke1+9a1rOW0xR/MEQHA8tM05kCu2rTbLVaE/XdH0MkXr3Yop6k2zdcqR9dEq3Wt1EPulqElP8aPJpWc74bywX22SpH/2+5SS02pMV321JTaExUcufJU5C8QPkLL9jvghfAQ1zCSNaGk9hYAhg4DaaoxTPTHoOZgqeiRUMPKdwUIqbZLNB/sfDXJYkYJf1K0BulUBd3hR8VAHz/3gdzlblSQbzsylBMQ6ky0vdNPNTCUIjderjI2o21kPINr/GJErx9xLm+dsrAAAA//8BAAD//weR4mmcAgAA"}'
      creationTimestamp: 2018-12-03T12:51:34Z
      labels:
        app: tf-hub-lb
        app.kubernetes.io/deploy-manager: ksonnet
        ksonnet.io/component: jupyterhub
      name: tf-hub-lb
      namespace: kubeflow
      resourceVersion: "319392"
      selfLink: /api/v1/namespaces/kubeflow/services/tf-hub-lb
      uid: 2a712a94-f6fa-11e8-8183-fa163ed7118a
    spec:
      clusterIP: 10.102.20.216
      ports:
      - name: hub
        port: 80
        protocol: TCP
        targetPort: 8000
      selector:
        app: tf-hub
      sessionAffinity: None
      type: ClusterIP
    status:
      loadBalancer: {}

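Model Server:

Deploys trained TensorFlow models so that clients can access them and use them for predictions.

These three components are used to deploy different workloads.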

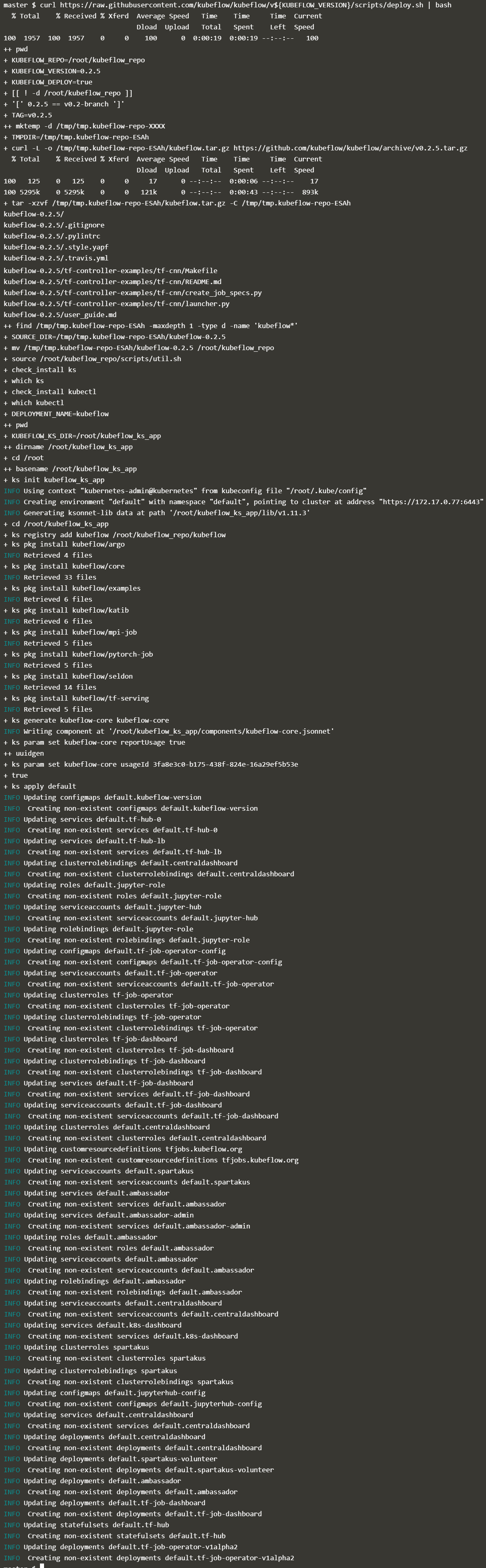

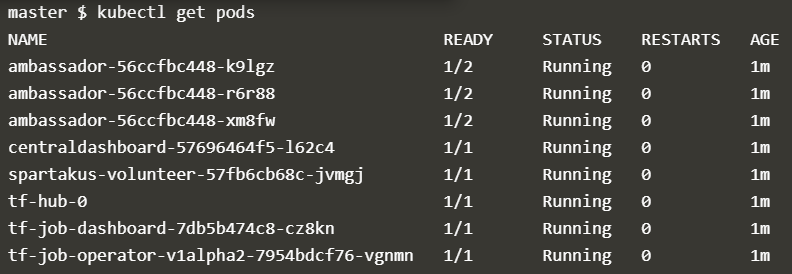

1. Deploy Kubeflow

    export GITHUB_TOKEN=<your-github-token>
    export KUBEFLOW_VERSION=0.2.5
    curl https://raw.githubusercontent.com/kubeflow/kubeflow/v${KUBEFLOW_VERSION}/scripts/deploy.sh | bash

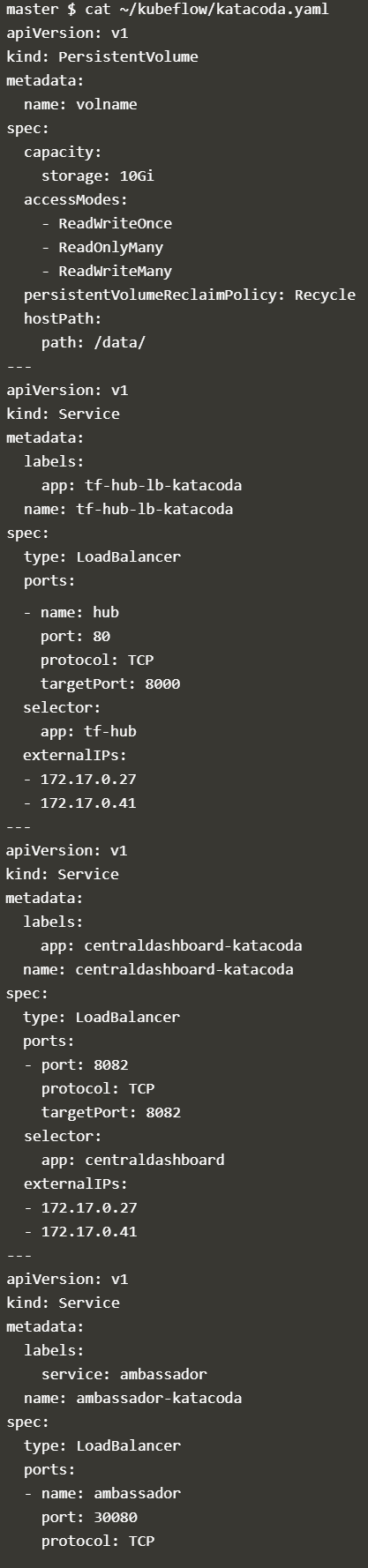

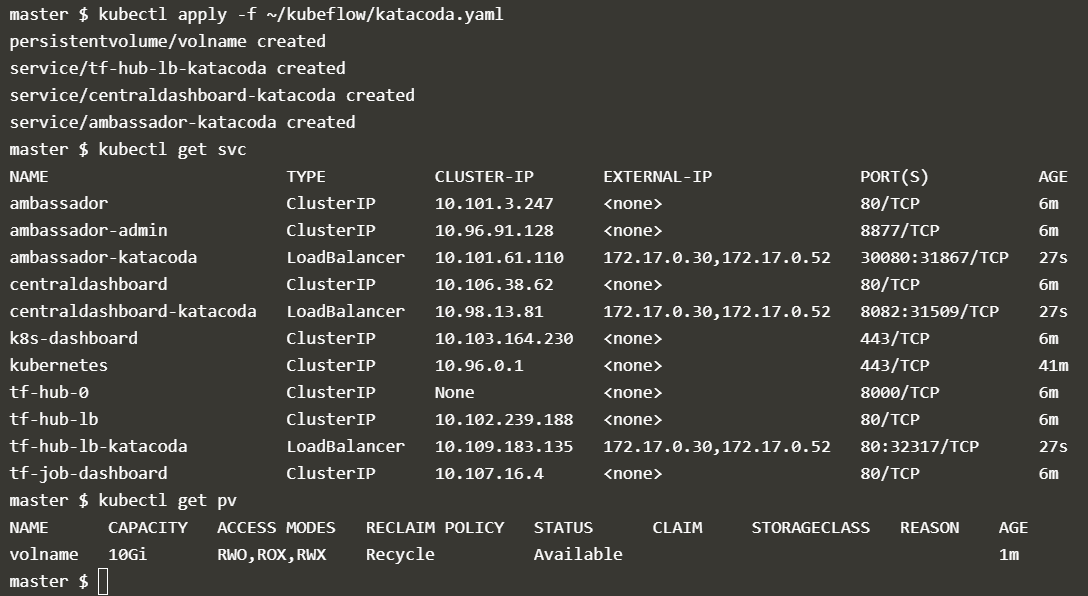

2. Deploy the LoadBalancer and Persistent Volume that Kubeflow needs

kubectl apply -f ~/kubeflow/katacoda.yaml

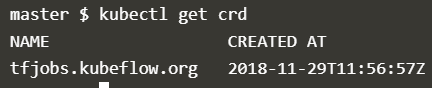

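3. Deploy a TensorFlow job (TFJob)

TFJob is a Kubeflow custom resource that makes it easy to run distributed or non-distributed TensorFlow jobs on Kubernetes. The TFJob controller takes a YAML specification for the master, the parameter servers, and the workers, and uses it to run the distributed computation.

A CRD (Custom Resource Definition) provides the ability to create and manage TFJobs in the same way as Kubernetes built-in resources. Once deployed, the CRD can be used to configure TensorFlow jobs, letting users focus on machine learning rather than infrastructure.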

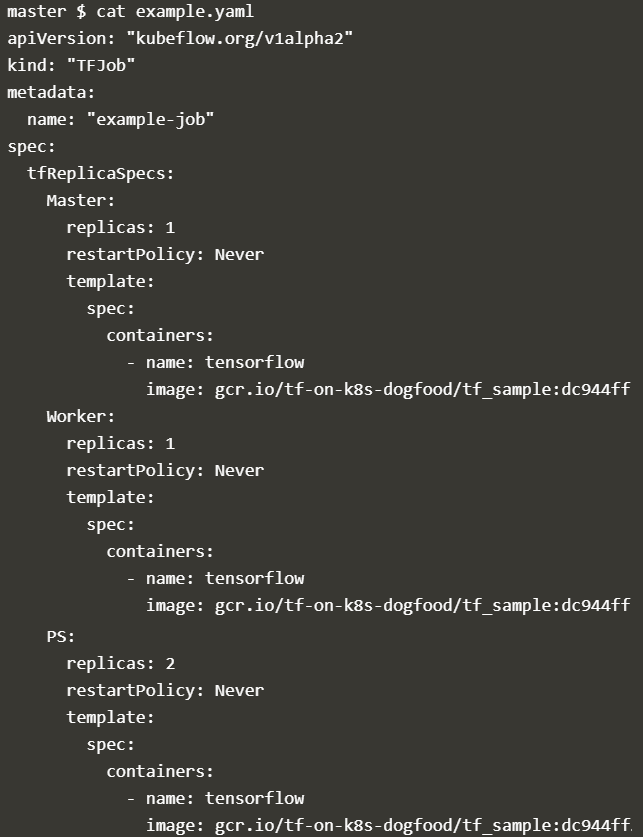

4. Create a TFJob Deployment Definition

To deploy a TensorFlow workload, Kubeflow needs a TFJob definition.

You can view it with:

cat example.yaml

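It defines three components:

master: every job must have exactly one master. The master coordinates the execution of training operations across the workers.

worker: a job can have 0 to N workers. Each worker process runs the same model and supplies the parameters to be processed to the PS (Parameter Server).

PS: a job can have 0 to N parameter servers. Parameter servers allow the model to scale across multiple machines.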

https://www.tensorflow.org/deploy/distributed
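The contents of example.yaml are not reproduced here, so the following is only a rough sketch of what such a definition might contain, built as a Python dictionary and printed as JSON (which kubectl also accepts). The field layout (`replicaSpecs`, `tfReplicaType`) is an assumption based on the older `kubeflow.org/v1alpha1` TFJob schema; newer operator versions use a different layout, so check the CRD installed in your cluster. The job name and image are hypothetical.

```python
import json


def make_tfjob(name, image, workers=1, ps=1):
    """Build a TFJob-style manifest: one master, 0..N workers, 0..N PS."""
    if workers < 0 or ps < 0:
        raise ValueError("replica counts must not be negative")

    def replica(kind, count):
        # Every replica type runs the same container image in this sketch.
        return {
            "replicas": count,
            "tfReplicaType": kind,
            "template": {
                "spec": {
                    "containers": [{"name": "tensorflow", "image": image}],
                    "restartPolicy": "OnFailure",
                }
            },
        }

    specs = [replica("MASTER", 1)]  # exactly one master per job
    if workers > 0:
        specs.append(replica("WORKER", workers))
    if ps > 0:
        specs.append(replica("PS", ps))
    return {
        "apiVersion": "kubeflow.org/v1alpha1",  # assumed API version
        "kind": "TFJob",
        "metadata": {"name": name},
        "spec": {"replicaSpecs": specs},
    }


# Hypothetical job name and image, for illustration only.
manifest = make_tfjob("example-job", "example.com/tf-sample:latest", workers=2, ps=1)
print(json.dumps(manifest, indent=2))
```

Generating the manifest from a function makes the "one master, any number of workers and parameter servers" rule explicit instead of leaving it implicit in a hand-edited YAML file.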

5. Deploy the TFJob

Run:

kubectl apply -f example.yaml

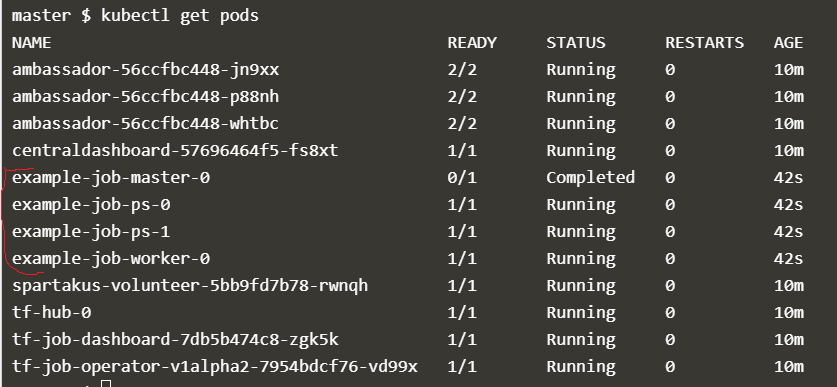

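Once the TFJob is deployed, Kubernetes schedules the workload across the available nodes. As part of the deployment, Kubeflow configures TensorFlow with the requested settings so that the different components can communicate.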
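To make the "configures TensorFlow so the components can communicate" step more concrete: as far as I know, the operator passes the cluster layout to each replica through the `TF_CONFIG` environment variable, a JSON document with a `cluster` map and a `task` entry. The sketch below parses such a value with the standard library only. The host names and ports in the sample are made up for illustration.

```python
import json
import os

# Hypothetical TF_CONFIG value as seen by worker 0; host names are invented.
SAMPLE_TF_CONFIG = json.dumps({
    "cluster": {
        "master": ["example-job-master-0:2222"],
        "worker": ["example-job-worker-0:2222", "example-job-worker-1:2222"],
        "ps": ["example-job-ps-0:2222"],
    },
    "task": {"type": "worker", "index": 0},
})


def read_tf_config(environ=os.environ):
    """Return (cluster_spec, task_type, task_index) parsed from TF_CONFIG."""
    config = json.loads(environ.get("TF_CONFIG", "{}"))
    cluster = config.get("cluster", {})
    task = config.get("task", {})
    return cluster, task.get("type"), task.get("index")


# Parse the sample instead of the real environment so this runs anywhere.
cluster_spec, task_type, task_index = read_tf_config({"TF_CONFIG": SAMPLE_TF_CONFIG})
print("this replica:", task_type, task_index)
for job_name, hosts in sorted(cluster_spec.items()):
    print(job_name, "->", len(hosts), "replica(s)")
```

Inside a real replica you would call `read_tf_config()` with no arguments so that it reads the process environment.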

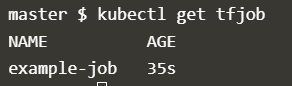

6. View the progress and results of the TFJob (a custom resource)

kubectl get tfjob

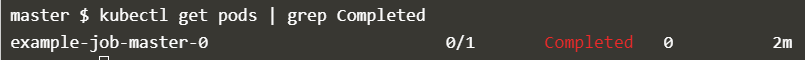

After the TensorFlow job completes, the master is marked as succeeded.

The master is responsible for coordinating execution and aggregating the results.

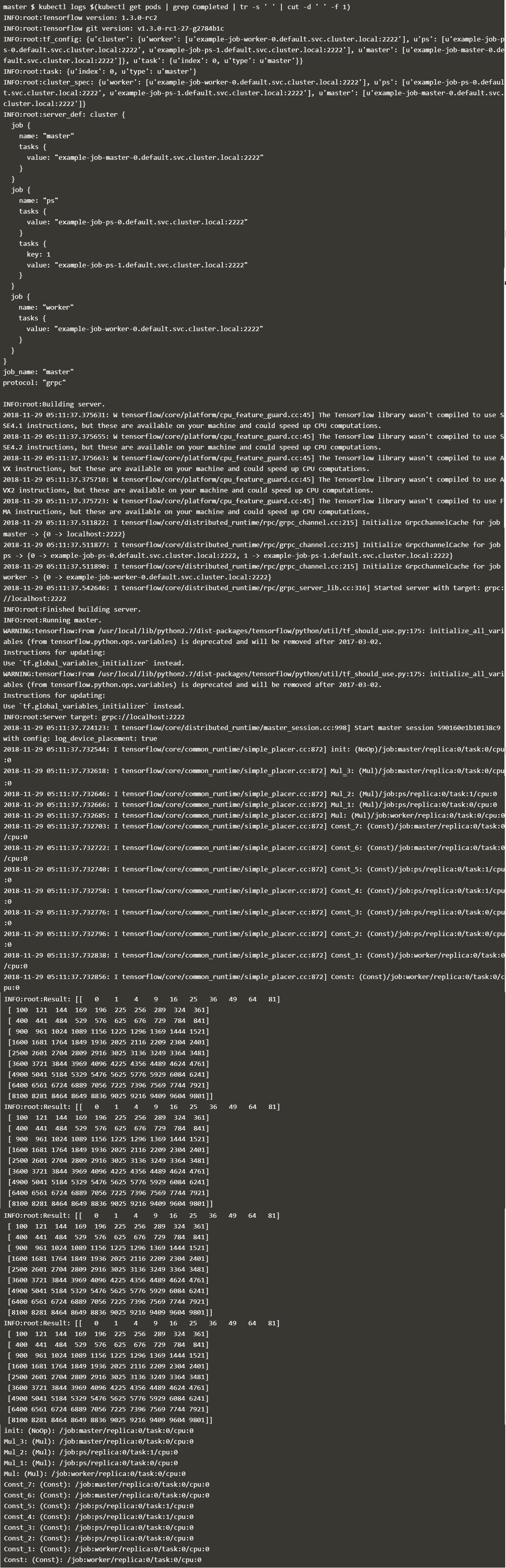

View the completed pods:

kubectl get pods | grep Completed

In this example the results are written to STDOUT. The following command shows the output of the master, worker, and PS workloads:

kubectl logs $(kubectl get pods | grep Completed | tr -s ' ' | cut -d ' ' -f 1)
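The shell pipeline above picks pod names out of the `kubectl get pods` table by matching the text "Completed". The same selection can be sketched in Python, which makes the column handling explicit. The sample table below is invented for illustration; in practice the text would come from running kubectl.

```python
# Invented sample of `kubectl get pods` output; real pod names will differ.
SAMPLE_OUTPUT = """\
NAME                   READY   STATUS      RESTARTS   AGE
example-job-master-0   0/1     Completed   0          5m
example-job-ps-0       1/1     Running     0          5m
example-job-worker-0   0/1     Completed   0          5m
"""


def pods_with_status(table, status="Completed"):
    """Return pod names whose STATUS column equals `status`."""
    names = []
    for line in table.splitlines()[1:]:  # skip the header row
        fields = line.split()  # collapses repeated spaces, like `tr -s ' '`
        if len(fields) >= 3 and fields[2] == status:
            names.append(fields[0])  # first column, like `cut -f 1`
    return names


completed = pods_with_status(SAMPLE_OUTPUT)
for name in completed:
    print("kubectl logs", name)
```

Unlike `grep Completed`, this compares only the STATUS column, so a pod that merely has "Completed" in its name is not selected by accident.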

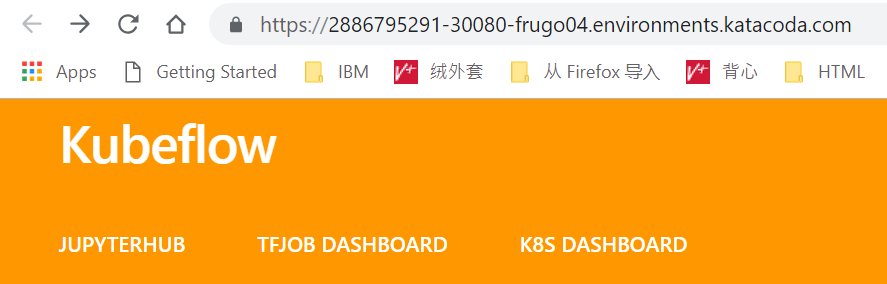

7. Deploy JupyterHub

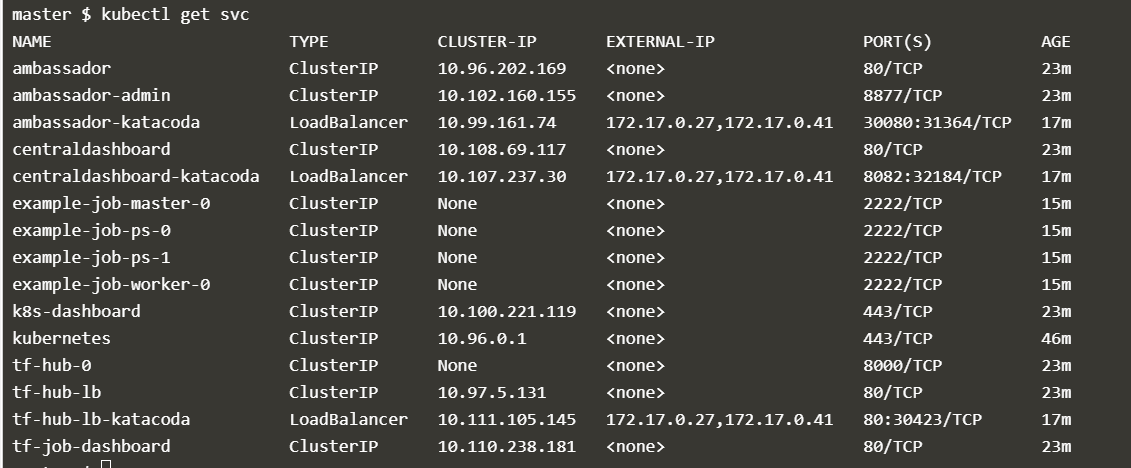

Because Kubeflow was deployed in the earlier steps, JupyterHub is already running on the Kubernetes cluster. Find the load balancer IP address with:

kubectl get svc

Jupyter Notebooks run through JupyterHub. Jupyter Notebook is the classic data science tool for running and documenting scripts and code snippets in the browser.

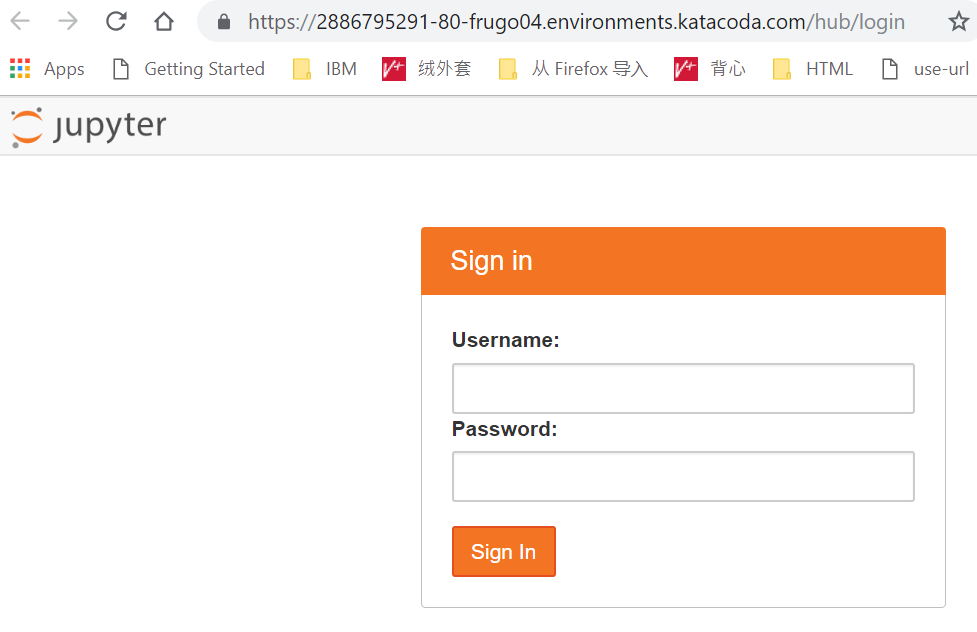

Open JupyterHub:

The username is admin and the password is empty.

After logging in, select a TensorFlow image: gcr.io/kubeflow-images-public/tensorflow-1.8.0-notebook-cpu:v0.2.1

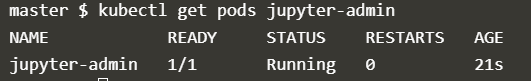

Click "Spawn" to start the server. This creates a Kubernetes pod named jupyter-admin to manage the server.

kubectl get pods jupyter-admin

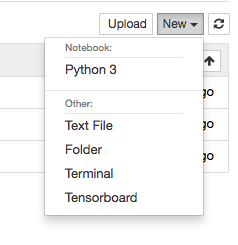

8. Use the Jupyter Notebook

Run the code:

    from __future__ import print_function
    import tensorflow as tf

    # Build a constant op, then evaluate it in a TensorFlow 1.x session.
    hello = tf.constant('Hello TensorFlow!')
    s = tf.Session()
    print(s.run(hello))

Another code snippet:

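    import tensorflow as tf

    # cluster_spec, width, and height are defined elsewhere in the sample.
    results = []
    for job_name in cluster_spec.keys():
        for i in range(len(cluster_spec[job_name])):
            # Pin the ops below to one task of one job, e.g. "/job:worker/task:0".
            d = "/job:{0}/task:{1}".format(job_name, i)
            with tf.device(d):
                a = tf.constant(range(width * height), shape=[height, width])
                b = tf.constant(range(width * height), shape=[height, width])
                c = tf.multiply(a, b)
                results.append(c)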

https://github.com/tensorflow/k8s/tree/master/examples/tf_sample
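To see what the snippet places on each task without needing a cluster or TensorFlow, here is a plain-Python sketch of the two ideas involved: the device string built for every task, and the element-wise product that `tf.multiply(a, b)` computes when `a` and `b` hold the same values. The cluster layout and sizes are hypothetical.

```python
def device_names(cluster_spec):
    """List one device string per task, in the form used by tf.device()."""
    return [
        "/job:{0}/task:{1}".format(job_name, i)
        for job_name in sorted(cluster_spec)
        for i in range(len(cluster_spec[job_name]))
    ]


def elementwise_square(width, height):
    """Mimic tf.multiply(a, b) where a == b == range(width*height) reshaped."""
    flat = [v * v for v in range(width * height)]
    # Reshape the flat list into `height` rows of `width` columns.
    return [flat[r * width:(r + 1) * width] for r in range(height)]


# Hypothetical cluster with one master, two workers, and one PS.
cluster_spec = {
    "master": ["m0:2222"],
    "worker": ["w0:2222", "w1:2222"],
    "ps": ["p0:2222"],
}
for name in device_names(cluster_spec):
    print(name)
print(elementwise_square(3, 2))
```

Every task computes the same small matrix, which is why the sample is useful as a connectivity check rather than as a training workload.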

9. Deploy the trained Model Server

Once training is complete, the model can be used to make predictions on new data. Kubeflow's tf-serving provides a template for serving TensorFlow models.

The template is customized and deployed with Ksonnet, based on parameters that describe the model.

The environment variables are:

    MODEL_COMPONENT=model-server
    MODEL_NAME=inception
    MODEL_PATH=/serving/inception-export

Use Ksonnet to extend the Kubeflow serving component to match the model's requirements.

    cd ~/kubeflow_ks_app
    ks generate tf-serving ${MODEL_COMPONENT} --name=${MODEL_NAME}
    ks param set ${MODEL_COMPONENT} modelPath $MODEL_PATH
    ks param set ${MODEL_COMPONENT} modelServerImage katacoda/tensorflow_serving

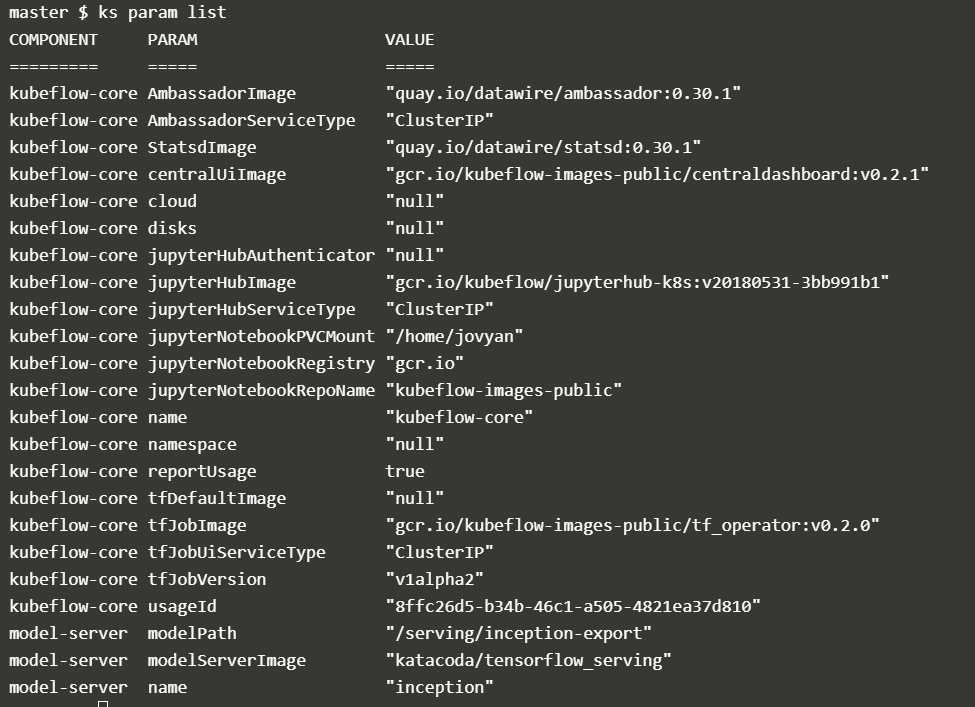

View the defined parameters with:

ks param list
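When the same steps need to be repeated for several models, the ks invocations used in this section can be generated from the three variables instead of being typed by hand. The sketch below only builds and prints the argument lists; it does not run ks, and the helper name `ks_commands` is made up for this example.

```python
import shlex


def ks_commands(component, name, model_path, image):
    """Return the ks invocations for one model as argument lists."""
    return [
        ["ks", "generate", "tf-serving", component, "--name=" + name],
        ["ks", "param", "set", component, "modelPath", model_path],
        ["ks", "param", "set", component, "modelServerImage", image],
        ["ks", "apply", "default", "-c", component],
    ]


commands = ks_commands(
    component="model-server",
    name="inception",
    model_path="/serving/inception-export",
    image="katacoda/tensorflow_serving",
)
for cmd in commands:
    # shlex.quote keeps the printed line safe to paste into a shell.
    print(" ".join(shlex.quote(arg) for arg in cmd))
```

Each list could be passed to `subprocess.run(cmd, check=True, cwd=...)` from inside the ksonnet application directory if you want to execute the commands rather than print them.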

Deploy the model server to the Kubernetes cluster:

ks apply default -c ${MODEL_COMPONENT}

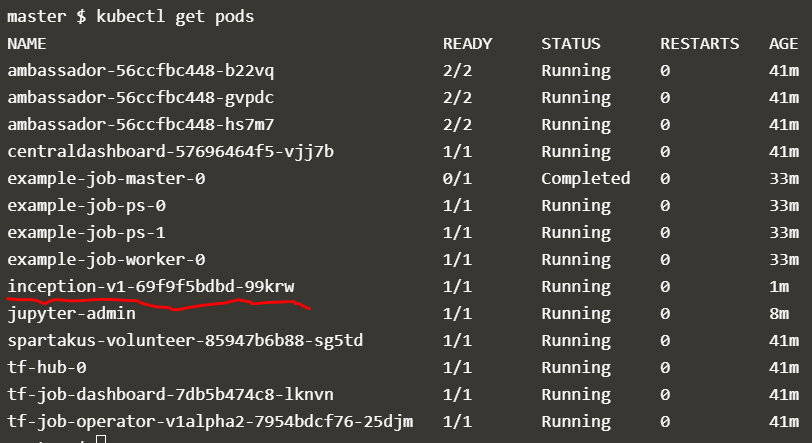

Check the status of the running pods:

kubectl get pods

10. Image classification

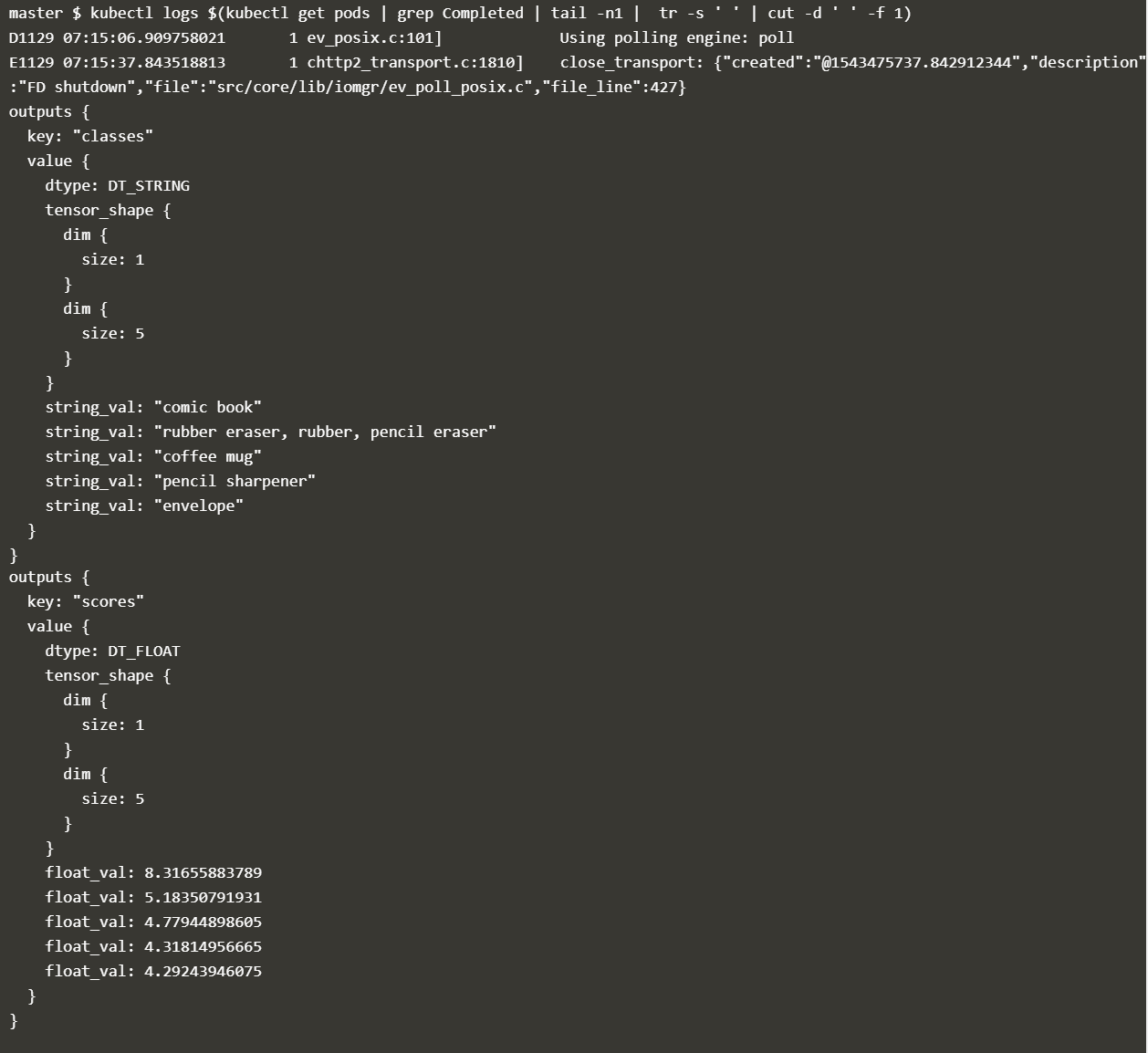

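This example uses a pre-trained Inception V3 model, an architecture trained on the ImageNet dataset. The ML task is image classification, while Kubernetes handles the model server and its clients.

To use the published model, you need to set up a client. This is done in the same way as any other job. The YAML file used to deploy the client: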

cat ~/model-client-job.yaml

Deploy it with the following command:

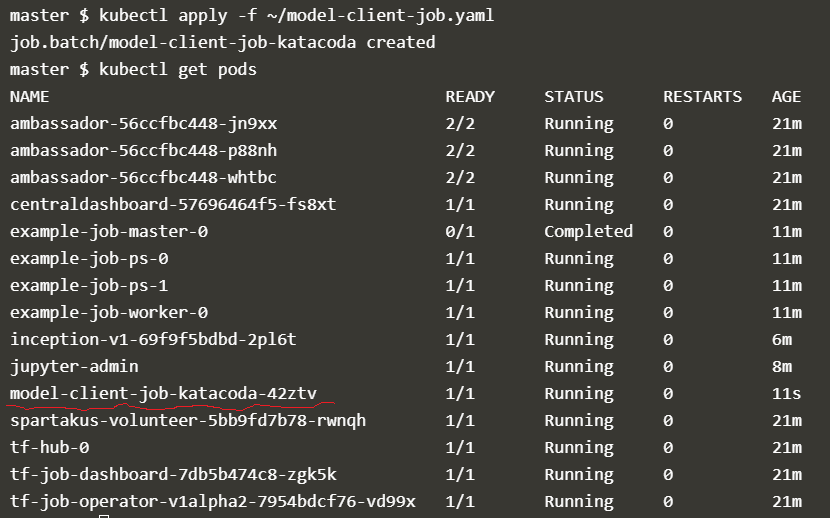

kubectl apply -f ~/model-client-job.yaml

Check the status of model-client-job:

kubectl get pods

View the classification results in the Katacoda logs:

kubectl logs $(kubectl get pods | grep Completed | tail -n1 | tr -s ' ' | cut -d ' ' -f 1)

https://github.com/kubeflow/kubeflow/tree/master/components/k8s-model-server
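For intuition about what a model client sends, here is a sketch that builds a prediction request in the shape of TensorFlow Serving's REST predict API, where binary inputs are passed as base64 under a `b64` key. This is an assumption for illustration only: the client job in this walkthrough is not shown and may well use gRPC instead, and the host name, port 8501, and image bytes below are all hypothetical. Nothing is sent over the network.

```python
import base64
import json


def build_predict_request(host, model_name, image_bytes, port=8501):
    """Return (url, json_body) for a TensorFlow Serving REST predict call."""
    url = "http://{0}:{1}/v1/models/{2}:predict".format(host, port, model_name)
    encoded = base64.b64encode(image_bytes).decode("ascii")
    # One instance per image; binary data is wrapped in a {"b64": ...} object.
    body = {"instances": [{"b64": encoded}]}
    return url, json.dumps(body)


# Placeholder bytes stand in for a real JPEG file read from disk.
url, payload = build_predict_request(
    "model-server.example", "inception", b"\xff\xd8placeholder-jpeg-bytes"
)
print(url)
print(len(payload), "bytes of JSON")
```

Whether a request like this is accepted depends on how the model server image was built and which ports it exposes, so treat it as a starting point to adapt rather than a working client.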

REF:

Installing Calico for policy and networking (recommended)

Controlling ingress and egress traffic with network policy

Installing Calico for policy and flannel for networking

Component overview:

This one reports various kinds of information, including the operating system version, kubelet version, container runtime version, as well as CPU and memory capacity.

Argo is an open source container-native workflow engine for getting work done on Kubernetes. Argo is implemented as a Kubernetes CRD (Custom Resource Definition).

Ambassador is an open source Kubernetes-native API gateway built on the Envoy Proxy.

Hyperparameter tuning on Kubernetes. This project is inspired by Google Vizier. Katib is a scalable and flexible hyperparameter tuning framework and is tightly integrated with Kubernetes. It also does not depend on a specific deep learning framework (e.g. TensorFlow, MXNet, and PyTorch).

ModelDB is used to manage ML models.

ModelDB is an end-to-end system to manage machine learning models. It ingests models and associated metadata as models are being trained, stores model data in a structured format, and surfaces it through a web-frontend for rich querying. ModelDB can be used with any ML environment via the ModelDB Light API. ModelDB native clients can be used for advanced support in spark.ml and scikit-learn.