- Method 1

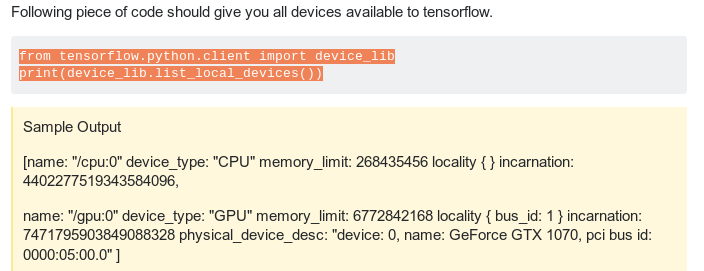

```python
from tensorflow.python.client import device_lib

# List every device TensorFlow can see (CPU, GPU, ...)
print(device_lib.list_local_devices())
```
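The output of Method 1 prints every device with a lot of detail. A minimal sketch that keeps only the GPU entries; `gpu_device_names` is a hypothetical helper, and it assumes each entry exposes `name` and `device_type` fields, as the objects returned by `list_local_devices()` do. The TensorFlow import is guarded so the snippet also runs where TensorFlow is not installed.

```python
def gpu_device_names(devices):
    """Return the names of the entries whose device_type is exactly 'GPU'."""
    return [d.name for d in devices if d.device_type == "GPU"]


try:
    from tensorflow.python.client import device_lib
except ImportError:  # TensorFlow is not installed in this environment
    device_lib = None

if device_lib is not None:
    # An empty list means TensorFlow sees no usable GPU
    print(gpu_device_names(device_lib.list_local_devices()))
```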

-

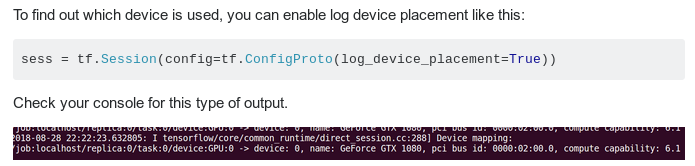

方法2

- Method 3

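```python
import tensorflow as tf  # TF 1.x API (tf.Session)

# Pin the ops explicitly to the first GPU. With the default session config
# (no soft placement), TensorFlow typically raises an error if /gpu:0 is unusable.
with tf.device('/gpu:0'):
    a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3], name='a')
    b = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[3, 2], name='b')
    c = tf.matmul(a, b)

with tf.Session() as sess:
    print(sess.run(c))
```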
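Method 3 relies on the TF 1.x `tf.Session` API. On TensorFlow 2.x (eager mode), a rough equivalent is to turn on device-placement logging and read the `.device` attribute of the result. A minimal sketch assuming TF 2.x; `ran_on_gpu` is a hypothetical helper, and the import is guarded so the snippet still runs without TensorFlow.

```python
def ran_on_gpu(device_string):
    """True if a TensorFlow device string names a GPU,
    e.g. '/job:localhost/replica:0/task:0/device:GPU:0'."""
    return "/device:GPU:" in device_string


try:
    import tensorflow as tf
except ImportError:  # TensorFlow is not installed in this environment
    tf = None

# Only run the check under TF 2.x eager execution
if tf is not None and tf.executing_eagerly():
    tf.debugging.set_log_device_placement(True)  # log the device of every op
    a = tf.constant([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
    b = tf.constant([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
    c = tf.matmul(a, b)
    print(c.device, "-> GPU" if ran_on_gpu(c.device) else "-> not GPU")
```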

I installed tensorflow-gpu, but training still ran on the CPU. Method 1 did detect the GPU, yet the actual computation still ran on the CPU. Method 3 exposed the problem.

```python
import tensorflow as tf

# True if TensorFlow can use a GPU (deprecated in TF 2.x)
print(tf.test.is_gpu_available())
```
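`tf.test.is_gpu_available()` is deprecated in TensorFlow 2.x in favor of `tf.config.list_physical_devices('GPU')` (available from TF 2.1). Combining it with `tf.test.is_built_with_cuda()` separates two common failure cases: a CPU-only build versus a CUDA build that cannot find a GPU or its libraries. A sketch; `diagnose` and its messages are hypothetical.

```python
def diagnose(built_with_cuda, gpu_names):
    """Turn two checks into a short, human-readable diagnosis."""
    if not built_with_cuda:
        return "TensorFlow build has no CUDA support (CPU-only package?)"
    if not gpu_names:
        return "CUDA build, but no GPU visible (check CUDA/cuDNN libraries)"
    return "GPU available: " + ", ".join(gpu_names)


try:
    import tensorflow as tf
except ImportError:  # TensorFlow is not installed in this environment
    tf = None

# tf.config.list_physical_devices exists from TF 2.1 on
if tf is not None and hasattr(tf.config, "list_physical_devices"):
    gpus = [d.name for d in tf.config.list_physical_devices("GPU")]
    print(diagnose(tf.test.is_built_with_cuda(), gpus))
```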

Find where `libcudart.so` is located and add that directory to `LD_LIBRARY_PATH` in `.bashrc`:

```shell
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda/lib64
```
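To confirm that the export took effect, a small stdlib-only sketch can look for `libcudart.so*` in each directory listed in `LD_LIBRARY_PATH`. `find_libcudart` is a hypothetical helper name; run it from a new shell (or after `source ~/.bashrc`) so the updated variable is visible.

```python
import glob
import os


def find_libcudart(ld_library_path=None):
    """Return libcudart.so* files found in the directories of LD_LIBRARY_PATH."""
    if ld_library_path is None:
        ld_library_path = os.environ.get("LD_LIBRARY_PATH", "")
    hits = []
    for directory in ld_library_path.split(os.pathsep):
        if directory:  # skip empty entries, e.g. from a leading ':'
            pattern = os.path.join(directory, "libcudart.so*")
            hits.extend(sorted(glob.glob(pattern)))
    return hits


if __name__ == "__main__":
    print(find_libcudart() or "libcudart.so not found on LD_LIBRARY_PATH")
```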

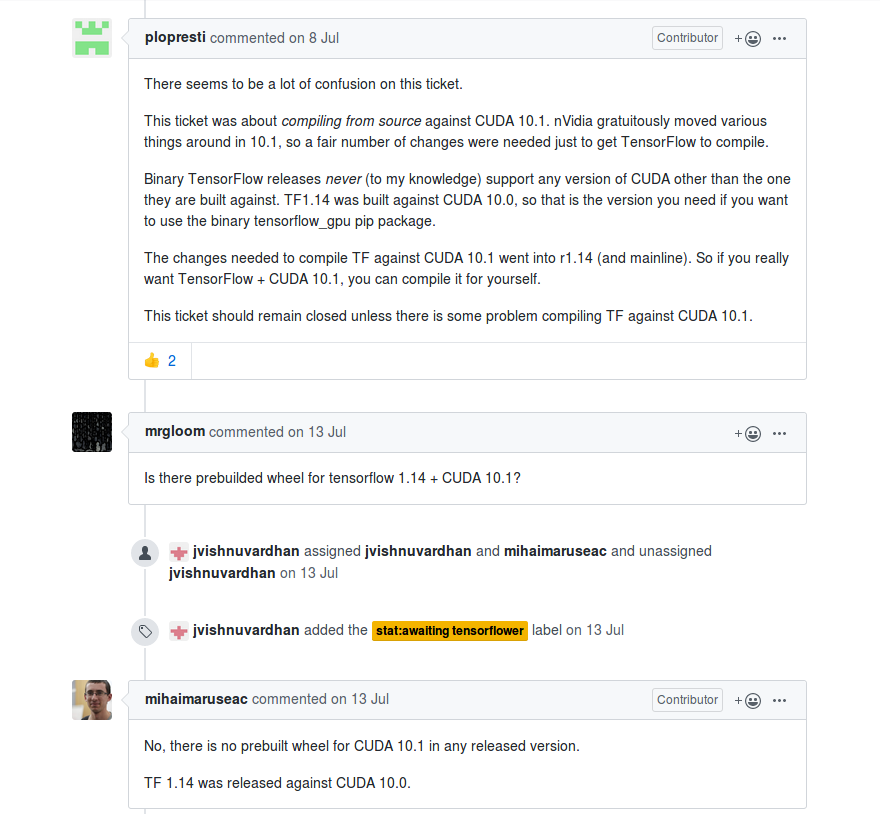

Argh. The CUDA on my machine was 10.1.

https://github.com/tensorflow/tensorflow/issues/26289

The pip-installed TensorFlow does not support CUDA 10.1; it only supports up to CUDA 10.0.
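The root cause was a version mismatch: the pip wheel expected CUDA 10.0 while the machine had 10.1. A minimal sketch that reads the major/minor version from `libcudart.so.X.Y...` file names and compares it with the version the wheel needs. The helper names and `REQUIRED = (10, 0)` are assumptions taken from this post's setup, not values queried from TensorFlow.

```python
import re

# Assumption: the CUDA version the pip wheel was built against, per this post
REQUIRED = (10, 0)


def cudart_version(filename):
    """Extract (major, minor) from a name like 'libcudart.so.10.1.243'; None if absent."""
    m = re.search(r"libcudart\.so\.(\d+)\.(\d+)", filename)
    return (int(m.group(1)), int(m.group(2))) if m else None


def matches_required(filenames, required=REQUIRED):
    """True if any of the given libcudart files has exactly the required version."""
    return any(cudart_version(f) == required for f in filenames)
```

For example, feeding it the `libcudart` files found on `LD_LIBRARY_PATH` shows whether a 10.0 runtime is present at all; a machine with only `libcudart.so.10.1.*` fails the check.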

Reinstalled CUDA 10.0: downloaded the runfile (local) installer from https://developer.nvidia.com/cuda-10.0-download-archive?target_os=Linux&target_arch=x86_64&target_distro=Ubuntu&target_version=1604&target_type=runfilelocal and installed it. Done!

Install cuDNN:

https://developer.nvidia.com/rdp/cudnn-download
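After installing cuDNN, its version can be read from the `#define CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL` lines of its header. A sketch assuming the header was copied to `/usr/local/cuda/include/` (older cuDNN releases keep these defines in `cudnn.h`, newer ones in `cudnn_version.h`); `cudnn_version` is a hypothetical helper.

```python
import os
import re


def cudnn_version(header_text):
    """Read CUDNN_MAJOR/MINOR/PATCHLEVEL #defines; None if any is missing."""
    parts = []
    for key in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        m = re.search(r"#define\s+" + key + r"\s+(\d+)", header_text)
        if m is None:
            return None
        parts.append(int(m.group(1)))
    return tuple(parts)


if __name__ == "__main__":
    # Assumed install locations; adjust to wherever the headers were copied
    for path in ("/usr/local/cuda/include/cudnn_version.h",
                 "/usr/local/cuda/include/cudnn.h"):
        if os.path.exists(path):
            with open(path) as f:
                print(path, cudnn_version(f.read()))
            break
```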

So relieved: it finally runs on the GPU!