When I was responsible for CRM Fiori application, I once meet with a performance issue.

When the users perform the synchronization for the first time on their mobile device, the opportunities belonging to them will be downloaded to mobile which is so called the initial load phase. The downloaded data includes attachment header information.

Since for Attachment read in CRM, there is no multiple-enabled API, so we have to perform the single read API within the LOOP, which means the read is performed sequentially:

We really suffer from this poor performance.

As explained in my blog What should an ABAPer continue to learn as an application developer,

I get inspiration from the concept Parallel computing

which is usually related to functional programming language. A function which has no side-effect, only manipulates with immutable data set is a good candidate to be handled concurrently. When looking back on my performance issue, the requirement to read opportunity attachment header data perfectly fits the criteria: only read access on header data, each read is segregated from others – no side effect. As a result it is worth a try to rewrite the read implementation into a parallelism version.

The idea is simple: split the opportunities to be read into different parts, and spawn new ABAP sessions via keyword STARTING NEW TASK, each session is responsible for a dedicated part.

The screenshot below is an example that totally 100 opportunities are divided into 5 sub groups, which will be handled by 5 ABAP sessions, each session reads 100 / 5 = 20 opportunity attachments.

The parallel read version:

METHOD PARALLEL_READ.

DATA:lv_taskid TYPE c LENGTH 8,

lv_index TYPE c LENGTH 4,

lv_current_index TYPE int4,

lt_task LIKE it_orders,

lt_attachment TYPE crmt_odata_task_attachmentt.

* TODO: validation on iv_process_num and lines( it_orders )

DATA(lv_total) = lines( it_orders ).

DATA(lv_additional) = lv_total MOD iv_block_size.

DATA(lv_task_num) = lv_total DIV iv_block_size.

IF lv_additional <> 0.

lv_task_num = lv_task_num + 1.

ENDIF.

DO lv_task_num TIMES.

CLEAR: lt_task.

lv_current_index = 1 + iv_block_size * ( sy-index - 1 ).

DO iv_block_size TIMES.

READ TABLE it_orders ASSIGNING FIELD-SYMBOL(<task>) INDEX lv_current_index.

IF sy-subrc = 0.

APPEND INITIAL LINE TO lt_task ASSIGNING FIELD-SYMBOL(<cur_task>).

MOVE-CORRESPONDING <task> TO <cur_task>.

lv_current_index = lv_current_index + 1.

ELSE.

EXIT.

ENDIF.

ENDDO.

IF lt_task IS NOT INITIAL.

lv_index = sy-index.

lv_taskid = 'Task' && lv_index.

CALL FUNCTION 'ZJERRYGET_ATTACHMENTS'

STARTING NEW TASK lv_taskid

CALLING read_finished ON END OF TASK

EXPORTING

it_objects = lt_task.

ENDIF.

ENDDO.

WAIT UNTIL mv_finished = lv_task_num.

rt_attachments = mt_attachment_result.

ENDMETHOD.

The method READ_FINISHED:

METHOD READ_FINISHED.

DATA: lt_attachment TYPE crmt_odata_task_attachmentt.

ADD 1 TO mv_finished.

RECEIVE RESULTS FROM FUNCTION 'ZJERRYGET_ATTACHMENTS'

CHANGING

ct_attachments = lt_attachment

EXCEPTIONS

system_failure = 1

communication_failure = 2.

APPEND LINES OF lt_attachment TO mt_attachment_result.

ENDMETHOD.

In function module ZJERRYGET_ATTACHMENTS, I still use the attachment single read API:

FUNCTION ZJERRYGET_ATTACHMENTS.

*"----------------------------------------------------------------------

*"*"Local Interface:

*" IMPORTING

*" VALUE(IT_OBJECTS) TYPE CRMT_OBJECT_KEY_T

*" CHANGING

*" VALUE(CT_ATTACHMENTS) TYPE CRMT_ODATA_TASK_ATTACHMENTT

*"----------------------------------------------------------------------

DATA(lo_tool) = new zcl_crm_attachment_tool( ).

ct_attachments = lo_tool->get_attachments_origin( it_objects ).

ENDFUNCTION.

```ABAP

So in fact I didn’t spend any effort to optimize the single read API. Instead, I call it in parallel. Let’s see if there is any performance improvement.

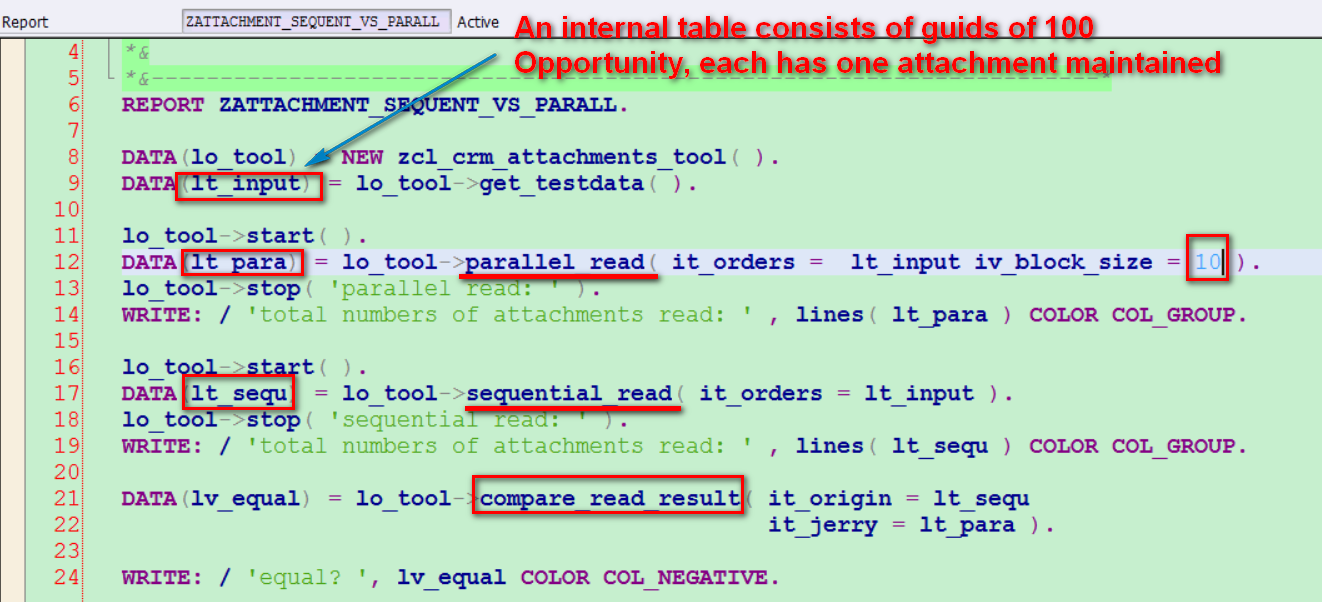

In this test report, first I generate an internal table with 100 entries which are opportunity guids. Then I perform the attachment read twice, one done in parallel and the other done sequentially. Both result are compared in method compare_read_result to ensure there is no function loss in the parallel version.

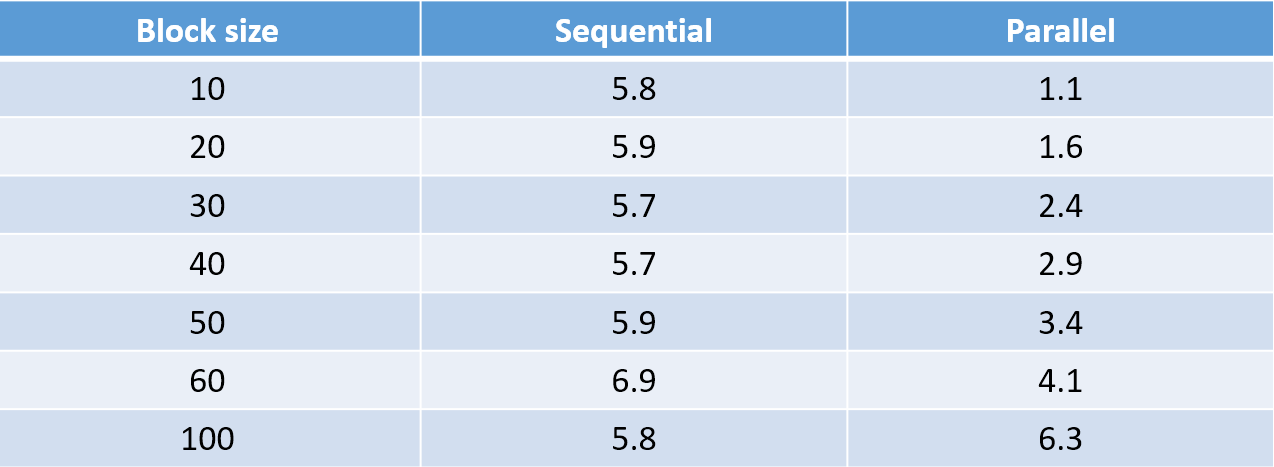

# Testing result ( unit: second )

It clearly shows that the performance increases with the number of running ABAP sessions which handles with the attachment read. When the block size = 100, the parallel solution degrades to the sequential one – even worse due to the overhead of WAIT.

For sure in productive usage the number of block size should not be hard coded.

In fact in my test code why I use the variable name iv_block_size is to express my respect to the block size customizing in tcode R3AC1 in CRM middleware.

要获取更多Jerry的原创文章,请关注公众号"汪子熙":