Official documentation: https://docs.fluentd.org/output/kafka

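td-agent ships with the out_kafka2 plugin bundled, so it can be used directly without a separate installation.

If you are using plain Fluentd, install the plugin first: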

$ fluent-gem install fluent-plugin-kafka

Configuration example

<match pattern>
  @type kafka2

  # list of seed brokers
  brokers <broker1_host>:<broker1_port>,<broker2_host>:<broker2_port>
  use_event_time true

  # buffer settings
  <buffer topic>
    @type file
    path /var/log/td-agent/buffer/td
    flush_interval 3s
  </buffer>

  # data type settings
  <format>
    @type json
  </format>

  # topic settings
  topic_key topic
  default_topic messages

  # producer settings
  required_acks -1
  compression_codec gzip
</match>

Parameters

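- @type: required; must be kafka2

- brokers: Kafka broker addresses; default is localhost:9092

- topic_key: name of the record field that holds the target topic; default is topic. The same field must also be listed as a buffer chunk key, for example: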

topic_key category
<buffer category> # topic_key should be included in buffer chunk key
  # ...
</buffer>

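- default_topic: topic to write to; default nil. Used when the topic_key field is not set

- <format> directive: sets the format of each message. Available types are json, ltsv, and other formatter plugins. Usage: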

<format>
  @type json
</format>

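- use_event_time: whether to use the Fluentd event time as the timestamp of the message sent to Kafka; default false, in which case the current time is used

- required_acks: number of ACKs required per request; default -1 (wait for all in-sync replicas)

- compression_codec: codec the producer uses to compress messages; default nil. Available values are gzip and snappy (to use snappy, install the snappy gem with the td-agent-gem command)

- @log_level: optional; log level. Available values are fatal, error, warn, info, debug, trace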
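The relationship between topic_key and default_topic described in the list above can be modelled in a few lines of Python. This is only a simplified sketch of the routing rule, not the plugin's actual implementation; the function name choose_topic is hypothetical and exists purely for illustration.

```python
def choose_topic(record, topic_key="topic", default_topic=None):
    """Simplified model of how the target topic is picked for one record.

    Hypothetical helper for illustration; not part of fluent-plugin-kafka.
    """
    value = record.get(topic_key)
    # Fall back to default_topic when the record has no topic_key field.
    if value is None:
        return default_topic
    return str(value)


# A record that carries the field is routed by the field's value.
print(choose_topic({"category": "orders", "msg": "hi"},
                   topic_key="category", default_topic="messages"))

# A record without the field falls back to default_topic.
print(choose_topic({"msg": "hi"},
                   topic_key="category", default_topic="messages"))
```

Under this model, a record that lacks the topic_key field always lands in default_topic, which is why default_topic should normally be set.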

Usage example

For Kafka installation, see: https://www.cnblogs.com/sanduzxcvbnm/p/13932933.html

<source>
  @type tail
  @id input_tail
  <parse>
    @type nginx
  </parse>
  path /usr/local/openresty/nginx/logs/host.access.log
  tag td.nginx.access
</source>

<match td.nginx.access>
  @type kafka2
  brokers 192.168.0.253:9092
  use_event_time true
  <buffer app>
    @type memory
  </buffer>
  <format>
    @type json
  </format>
  topic_key app
  default_topic messagesb # note: consume from this topic in Kafka; the nginx records have no app field, so default_topic is used
  required_acks -1
  compression_codec gzip
</match>

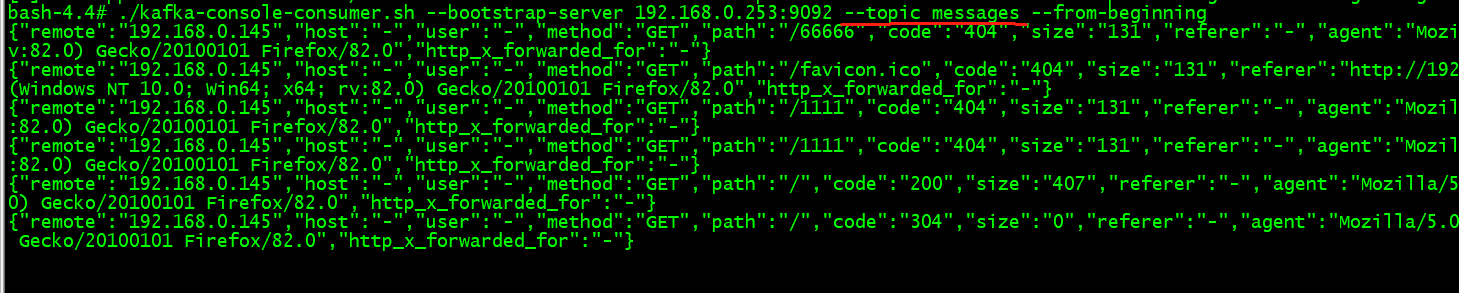

Data consumed from Kafka:

{"remote":"192.168.0.145","host":"-","user":"-","method":"GET","path":"/00000","code":"404","size":"131","referer":"-","agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:82.0) Gecko/20100101 Firefox/82.0","http_x_forwarded_for":"-"}

{"remote":"192.168.0.145","host":"-","user":"-","method":"GET","path":"/99999","code":"404","size":"131","referer":"-","agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:82.0) Gecko/20100101 Firefox/82.0","http_x_forwarded_for":"-"}
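Each consumed message is a single JSON object, so it can be decoded with any JSON library. Below is a minimal Python sketch, assuming the message has already been read from Kafka as a string (the sample is copied from the output above). The helper name parse_access_log is hypothetical. Note that every value arrives as a string, including code and size, so the sketch converts those two to integers.

```python
import json

# One message as shown above, assumed to be already read from Kafka as text.
raw = (
    '{"remote":"192.168.0.145","host":"-","user":"-","method":"GET",'
    '"path":"/00000","code":"404","size":"131","referer":"-",'
    '"agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:82.0) '
    'Gecko/20100101 Firefox/82.0",'
    '"http_x_forwarded_for":"-"}'
)


def parse_access_log(message):
    """Decode one JSON message and convert the numeric fields to int.

    Hypothetical helper for illustration only.
    """
    record = json.loads(message)
    record["code"] = int(record["code"])
    record["size"] = int(record["size"])
    return record


record = parse_access_log(raw)
print(record["method"], record["path"], record["code"], record["size"])
```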