1 hostPort

hostPort is equivalent to `docker run -p 8081:8080`. No Service (svc) needs to be created, so the port only listens on the VM where the container is running.

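However, the port does not show up on the host itself with `netstat -lntup | grep <port>`. The only way to confirm that the mapping works is to run `telnet <IP> <port>` from another machine.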

Drawback: traffic cannot be load-balanced across multiple pods.

```
$ cat pod-hostport.yaml
apiVersion: v1
kind: Pod
metadata:
  name: webapp
  labels:
    app: webapp
spec:
  containers:
  - name: webapp
    image: tomcat
    ports:
    - containerPort: 8080
      hostPort: 8081

$ kubectl get po --all-namespaces -o wide --show-labels
NAMESPACE   NAME     READY   STATUS    RESTARTS   AGE   IP           NODE        LABELS
default     webapp   1/1     Running   0          5s    10.2.100.3   n2.ma.com   app=webapp
```

On node n2, `docker ps` shows the 8081->8080 mapping attached to the pod's pause container:

```
[root@n2 ~]# docker ps -a
CONTAINER ID   IMAGE                                      COMMAND             CREATED         STATUS         PORTS                    NAMES
59e72c92ba55   tomcat                                     "catalina.sh run"   2 minutes ago   Up 2 minutes                            k8s_webapp_webapp_default_932c613e-e2dc-11e7-8313-00505636c956_0
0fe8c2f08e03   gcr.io/google_containers/pause-amd64:3.0   "/pause"            2 minutes ago   Up 2 minutes   0.0.0.0:8081->8080/tcp   k8s_POD_webapp_default_932c613e-e2dc-11e7-8313-00505636c956_0
```

2 hostNetwork

hostNetwork is equivalent to `docker run --net=host`. No Service needs to be created, so the port only listens on the VM where the container is running.

Drawback: traffic cannot be load-balanced across multiple pods.

```
apiVersion: v1
kind: Pod
metadata:
  name: webapp
  labels:
    app: webapp
spec:
  hostNetwork: true
  containers:
  - name: webapp
    image: tomcat
    ports:
    - containerPort: 8080

$ kubectl get po --all-namespaces -o wide --show-labels
NAMESPACE   NAME     READY   STATUS    RESTARTS   AGE   IP            NODE        LABELS
default     webapp   1/1     Running   0          36s   192.168.x.x   n2.ma.com   app=webapp
```

Inspecting the pod's network interfaces shows that they are identical to the host's:

```
$ docker exec -it b8a1e1e35c3e ip ad
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:50:56:33:13:b6 brd ff:ff:ff:ff:ff:ff
    inet 192.168.14.133/24 brd 192.168.14.255 scope global dynamic eth0
       valid_lft 5356021sec preferred_lft 5356021sec
    inet6 fe80::250:56ff:fe33:13b6/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default
    link/ether 02:42:41:e8:f5:22 brd ff:ff:ff:ff:ff:ff
    inet 10.2.100.1/24 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:41ff:fee8:f522/64 scope link
       valid_lft forever preferred_lft forever
4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
    link/ether ae:4a:e1:f9:52:ea brd ff:ff:ff:ff:ff:ff
    inet 10.2.100.0/32 scope global flannel.1
       valid_lft forever preferred_lft forever
    inet6 fe80::ac4a:e1ff:fef9:52ea/64 scope link
       valid_lft forever preferred_lft forever
244: veth007dbe6@if243: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master docker0 state UP group default
    link/ether f2:02:e1:a2:9f:8a brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::f002:e1ff:fea2:9f8a/64 scope link
       valid_lft forever preferred_lft forever
```

3 NodePort

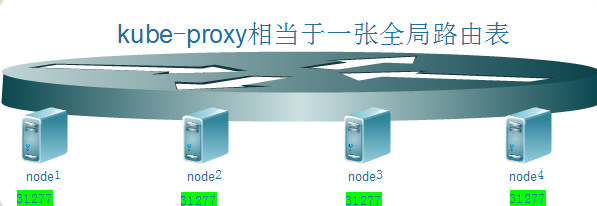

NodePort works at the Service level and is managed by kube-proxy. The rules are the same on every node, so logically a NodePort is global.

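As a result, a Service's nodePort listens on every node. If it is not specified, a random port is assigned from the range set by the apiserver flag `--service-node-port-range` (30000-32767 by default). Even if there is only one pod, accessing the nodePort on any node reaches the service.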

```
$ cat nginx-deployment.yaml
apiVersion: apps/v1beta1 # for versions before 1.8.0 use apps/v1beta1
kind: Deployment
metadata:
  name: mynginx
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80

$ cat nginx-svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: mynginx
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 80

$ kubectl get pod --all-namespaces --show-labels -o wide
NAMESPACE   NAME                     READY   STATUS    RESTARTS   AGE   IP           NODE        LABELS
default     mynginx-31893996-f8bn7   1/1     Running   0          12m   10.2.100.2   n2.ma.com   app=nginx,pod-template-hash=31893996

$ kubectl get svc --all-namespaces --show-labels -o wide
NAMESPACE   NAME      CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE   SELECTOR
default     mynginx   10.254.173.173   <nodes>       8080:31277/TCP   9m
```
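In the output above, the node port 31277 was picked at random because the Service did not set one. If a fixed port is needed, the Service can set the `nodePort` field explicitly. This is a minimal sketch based on the same `mynginx` Service; the value 30080 is only an illustrative assumption and must fall within `--service-node-port-range` (30000-32767 unless changed) and not already be taken by another Service.

```yaml
kind: Service
apiVersion: v1
metadata:
  name: mynginx
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 8080        # port on the cluster IP
    targetPort: 80    # containerPort of the nginx pods
    nodePort: 30080   # illustrative value; must lie inside --service-node-port-range
```

With this in place, `<any-node-IP>:30080` should reach the nginx pod no matter which node it is scheduled on.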

4 externalIPs

externalIPs are configured on a Service, and the port listens only on the specified node.

Use case: you want the Service to do the load balancing, but the port must listen on one specific node rather than on every node as with NodePort.

```
[root@n1 external-ip]# cat nginx-deployment.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80

[root@n1 external-ip]# cat nginx-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: svc-nginx
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
  externalIPs:
  - 192.168.2.12 # the IP of one of my nodes
```

The port is opened by kube-proxy, so only a node running kube-proxy listens on it:

```
[root@n2 ~]# netstat -ntulp
tcp        0      0 192.168.2.12:80         0.0.0.0:*               LISTEN      11465/kube-proxy
```