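I was recently handed a task: scrape content from People's Daily (人民日报). No way around it at a small company (I'm a brick, and I get moved wherever I'm needed).

1 Looking at the page source shows that the site makes almost no AJAX requests; nearly everything is loaded straight into the HTML, which keeps things simple. First, fetch the home page of each category and collect the URL of every article under it, parsing links from several positions on the page, then write those URLs to a separate file for later use. The code is as follows: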

    import time

    import requests
    from lxml import etree


    def parsePageUrl(url):
        user_agent = "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:46.0) Gecko/20100101 Firefox/46.0"
        baseUrl = url
        with requests.get(url, headers={"User-agent": user_agent}) as resp:
            html = etree.HTML(resp.text)
        # Collect links from the different regions of the category page
        contentUrl = html.xpath("//div[@class='on']//a/@href")
        title = html.xpath("//h1/a/@href")
        newBoxs = html.xpath("//div[@class='news_box']//a/@href")
        yueduList = html.xpath("//div[@class='w310']//a/@href")
        for newBox in newBoxs:
            # Skip special-topic links whose path contains "GB"
            if newBox.find("GB") == -1:
                if newBox.find(baseUrl) != -1:
                    with open("recode.txt", "a+") as file:
                        file.write(newBox + "\n")
                else:
                    url = baseUrl + newBox
                    with open("recode.txt", "a+") as file:
                        file.write(url + "\n")
        for yueduUrl in yueduList:
            with open("recode.txt", "a+") as file:
                file.write(yueduUrl + "\n")
        titleUrl = "".join(title)
        with open("recode.txt", "a+") as file:
            file.write(titleUrl + "\n")
        for nurl in contentUrl:
            cUrl = baseUrl + nurl
            with open("recode.txt", "a+") as file:
                file.write(cUrl + "\n")


    if __name__ == '__main__':
        types = ("politics", "world", "finance", "tw", "military", "opinion",
                 "leaders", "renshi", "theory", "legal", "society", "industry",
                 "edu", "house")
        for category in types:
            time.sleep(3)  # pause between category pages
            url = "http://%s.people.com.cn" % category
            parsePageUrl(url)

2 Crawl each collected URL with requests

    import requests
    from lxml import etree


    def readUrl():
        # One URL per line; drop blank lines and surrounding whitespace
        with open("recode.txt", "r") as file:
            return [line.strip() for line in file if line.strip()]


    def parse(url):
        # Only handle lines that contain exactly one URL
        if "http" in url and url.count("http") == 1:
            # e.g. "politics" from http://politics.people.com.cn/...
            basename = url.split("/")[2].split(".")[0]
            ua = "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:46.0) Gecko/20100101 Firefox/46.0"
            with requests.get(url=url, headers={"User-agent": ua}) as req:
                req.encoding = "GBK"
                html = etree.HTML(req.text)
            title = html.xpath("//h1/text()")
            author = html.xpath("//div[@class='box01']/div[@class='fl']//text()")
            content = html.xpath("//div[@id='rwb_zw']/p/text()")
            t = "".join(title).strip()
            a = "".join(author).strip()
            c = "".join(content).strip()
            # One output file per category, articles appended one after another
            with open("%s.txt" % basename, "a+", encoding='utf-8') as file:
                file.write("标题 :" + t + "\n")          # title
                file.write("时间以及来源 :" + a + "\n")  # time and source
                file.write("正文 :" + c + "\n")          # body
                file.write("\n")
                file.write("=" * 110 + "下一篇" + "=" * 110)  # "next article" separator
                file.write("\n")


    if __name__ == '__main__':
        for url in readUrl():
            parse(url)

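The results obtained this way are actually incomplete:

1 The URL handling when writing to the file is flawed, so some URLs are lost.

2 A few categories on the site are structured differently from politics. I used the politics page as the template for parsing, so those categories would need separate handling if required.

3 Special-topic URLs whose path contains GB are not parsed either. I was simply too lazy to write separate parsing code for that special path, since there are not many of them.

4 Images are not handled.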
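For the first limitation, one option (not what the scripts in this post do) is to resolve every `href` against the category URL with the standard library's `urljoin` instead of concatenating strings, and to drop duplicates before writing. The sketch below is only an illustration under that assumption: `normalize_links` is a hypothetical helper name, and the paths in the usage example are made up.

```python
from urllib.parse import urljoin, urlparse


def normalize_links(base_url, hrefs):
    """Resolve hrefs against base_url, keep http(s) links, drop duplicates in order."""
    base = base_url.rstrip("/") + "/"
    seen = set()
    result = []
    for href in hrefs:
        href = href.strip()
        if not href:
            continue
        # urljoin leaves absolute URLs untouched and resolves relative ones
        absolute = urljoin(base, href)
        # Filter out things like "javascript:void(0)"
        if urlparse(absolute).scheme not in ("http", "https"):
            continue
        if absolute not in seen:
            seen.add(absolute)
            result.append(absolute)
    return result


if __name__ == "__main__":
    # Hypothetical hrefs, as they might come back from an XPath query
    hrefs = ["/n1/a.html", "http://world.people.com.cn/n1/b.html", "/n1/a.html"]
    for link in normalize_links("http://politics.people.com.cn", hrefs):
        print(link)
```

With something like this, links pointing at another subdomain stay intact and relative links get the right prefix, so no string surgery on the result is needed.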

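Version 2

Version 2 revises the XPath expressions used for scraping, and the result now comes to about 4,300 entries. I also ran the output through a word cloud. The code is as follows:

1 Collecting URLs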

    import time

    import requests
    from lxml import etree


    def parsePageUrl(url, type):
        user_agent = "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:46.0) Gecko/20100101 Firefox/46.0"
        baseUrl = url
        with requests.get(url, headers={"User-agent": user_agent}) as resp:
            html = etree.HTML(resp.text)
        # contentUrl = html.xpath("//div[@class='on']//a/@href")
        contentUrl = html.xpath("//div[contains(@class,'hdNews') and contains(@class,'clearfix')]//a/@href")
        # contentUrl = html.xpath("//div[contains(@class,'clearfix')]//a/@href")
        title = html.xpath("//h1/a/@href")
        newBoxs = html.xpath("//div[@class='news_box']//a/@href")
        yueduList = html.xpath("//div[@class='w310']//a/@href")
        for newBox in newBoxs:
            with open("result1.txt", "a+") as file:
                # Relative link: prepend the category URL
                if "http" not in newBox:
                    newBox = baseUrl + newBox
                file.write(newBox.strip() + "\n")
        for yueduUrl in yueduList:
            with open("result1.txt", "a+") as file:
                file.write(yueduUrl.strip() + "\n")
        titleUrl = "".join(title)
        if "http" not in titleUrl:
            titleUrl = baseUrl + titleUrl
        with open("result1.txt", "a+") as file:
            file.write(titleUrl.strip() + "\n")
        for nurl in contentUrl:
            cUrl = nurl
            if baseUrl not in nurl:
                cUrl = baseUrl + nurl
                # nurl was already absolute (another subdomain): strip the prefix again
                if cUrl.count("cn") > 1:
                    cUrl = cUrl[cUrl.find("cn") + 2:]
            with open("result1.txt", "a+") as file:
                file.write(cUrl.strip() + "\n")


    if __name__ == '__main__':
        types = ("politics", "world", "finance", "tw", "military", "opinion",
                 "leaders", "renshi", "theory", "legal", "society", "industry",
                 "sports", "house", "travel", "people")
        for type in types:
            url = "http://%s.people.com.cn" % type
            error_time = 0
            # Retry the same category until it succeeds or fails 101 times
            while True:
                time.sleep(3)
                try:
                    parsePageUrl(url, type)
                except Exception:
                    error_time += 1
                    if error_time == 100:
                        print('your network is little bad')
                        time.sleep(60)
                    if error_time == 101:
                        print('your network is broken')
                        break
                    continue
                break

2 Parsing the pages

    import time

    import requests
    from lxml import etree


    def readUrl():
        # One URL per line; drop blank lines and surrounding whitespace
        with open("result1.txt", "r") as file:
            return [line.strip() for line in file if line.strip()]


    def parse(url):
        # Only handle lines that contain exactly one URL
        if "http" in url and url.count("http") == 1:
            ua = "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:46.0) Gecko/20100101 Firefox/46.0"
            with requests.get(url=url, headers={"User-agent": ua}) as req:
                req.encoding = "GBK"
                html = etree.HTML(req.text)
            title = html.xpath("//h1/text()")
            author = html.xpath("//div[@class='box01']/div[@class='fl']//text()")
            content = html.xpath("//div[@id='rwb_zw']/p/text()")
            t = "".join(title).strip()
            a = "".join(author).strip()
            c = "".join(content).strip()
            # All articles now go into a single file for the word cloud step
            with open("content.txt", "a+", encoding='utf-8') as file:
                file.write("标题 :" + t + "\n")          # title
                file.write("时间以及来源 :" + a + "\n")  # time and source
                file.write("正文 :" + c + "\n")          # body
                file.write("\n")
                file.write("=" * 110 + "下一篇" + "=" * 110)  # "next article" separator
                file.write("\n")


    if __name__ == '__main__':
        for url in readUrl():
            error_time = 0
            # Retry the same URL until it succeeds or fails 101 times
            while True:
                time.sleep(3)
                try:
                    parse(url)
                except Exception:
                    error_time += 1
                    if error_time == 100:
                        print('your network is little bad')
                        time.sleep(60)
                    if error_time == 101:
                        print('your network is broken')
                        break
                    continue
                break
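Both version 2 scripts wrap their main call in the same hand-written retry loop. As a rough sketch of how that pattern could be pulled into one reusable function, here is a small helper; `retry`, `max_attempts`, `delay`, and the injectable `sleep` parameter are all names invented for this illustration, and the limits are far lower than the 100 attempts used above.

```python
import time


def retry(func, *args, max_attempts=3, delay=3.0, sleep=time.sleep):
    """Call func(*args); on an exception wait `delay` seconds and try again.

    Re-raises the last exception once max_attempts calls have failed.
    """
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return func(*args)
        except Exception as exc:  # network errors, parse errors, ...
            last_error = exc
            if attempt < max_attempts:
                sleep(delay)
    raise last_error


if __name__ == "__main__":
    # Hypothetical stand-in for parse(url): fails once, then succeeds
    state = {"calls": 0}

    def flaky(url):
        state["calls"] += 1
        if state["calls"] == 1:
            raise IOError("temporary failure")
        return "parsed " + url

    print(retry(flaky, "http://politics.people.com.cn", delay=0.1))
```

Passing `sleep` in as a parameter is only there so the waiting can be swapped out when trying the helper without real delays.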

3 Word cloud analysis

    import os
    from os import path

    import jieba
    import numpy as np
    from PIL import Image
    from matplotlib import pyplot as plt
    from wordcloud import WordCloud, STOPWORDS, ImageColorGenerator


    def renmin():
        # Directory of this script, or the working directory when run interactively
        d = path.dirname(__file__) if "__file__" in globals() else os.getcwd()
        with open(path.join(d, 'content.txt'), encoding='UTF-8-SIG') as f:
            text = f.read()
        # Segment the Chinese text into space-separated words
        text = ' '.join(jieba.cut(text, cut_all=False))
        font_path = r'C:\Windows\Fonts\SourceHanSansCN-Regular.otf'
        background_Image = np.array(Image.open(path.join(d, "2.jpg")))
        img_colors = ImageColorGenerator(background_Image)
        # Words to exclude: mostly the labels and separators written by the parser
        stopwords = set(STOPWORDS)
        stopwords.update(['下一篇', 'Not Found', 'Found', '正文', '以及', '时间',
                          '标题', '来源', '时间以及来源', '人民网', '一篇', '我们'])
        wc = WordCloud(
            font_path=font_path,  # a font path is required for Chinese text
            margin=2,  # page margin
            mask=background_Image,
            scale=2,
            max_words=200,  # maximum number of words
            min_font_size=4,
            # stopwords=stopwords,  # disabled in the original; uncomment to apply the set above
            random_state=42,
            background_color='white',  # background color
            # background_color='#C3481A',
            max_font_size=100,
        )
        wc.generate(text)
        # Get the word frequencies sorted in descending order; adjust stopwords as needed
        process_word = wc.process_text(text)
        sort = sorted(process_word.items(), key=lambda e: e[1], reverse=True)
        print(sort[:200])
        with open("sort.txt", "a+", encoding="GBK") as file:
            for item in sort[200:]:
                a = ','.join(str(s) for s in item if s not in ['NONE', 'NULL'])
                file.write(a + "\n")
        wc.recolor(color_func=img_colors)
        # Save the image
        wc.to_file('人民2.png')
        # Display the image
        plt.imshow(wc, interpolation='bilinear')
        plt.axis('off')
        plt.tight_layout()
        plt.show()


    if __name__ == '__main__':
        renmin()

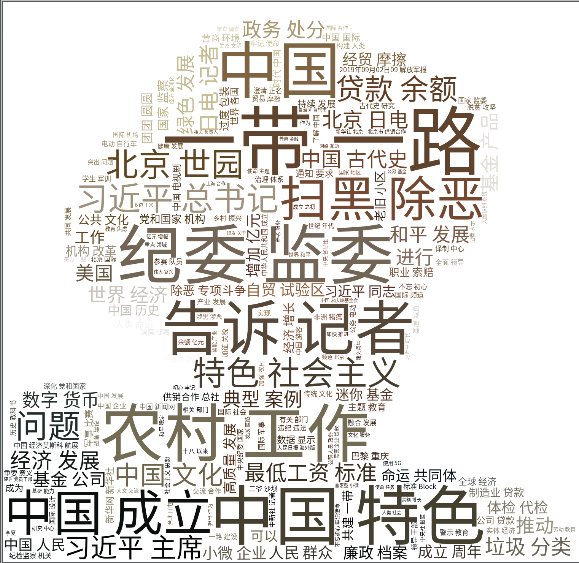

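The generated image looks like this.

The largest words in the image are the ones that occur most often; the smallest are the least frequent among the top 200.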

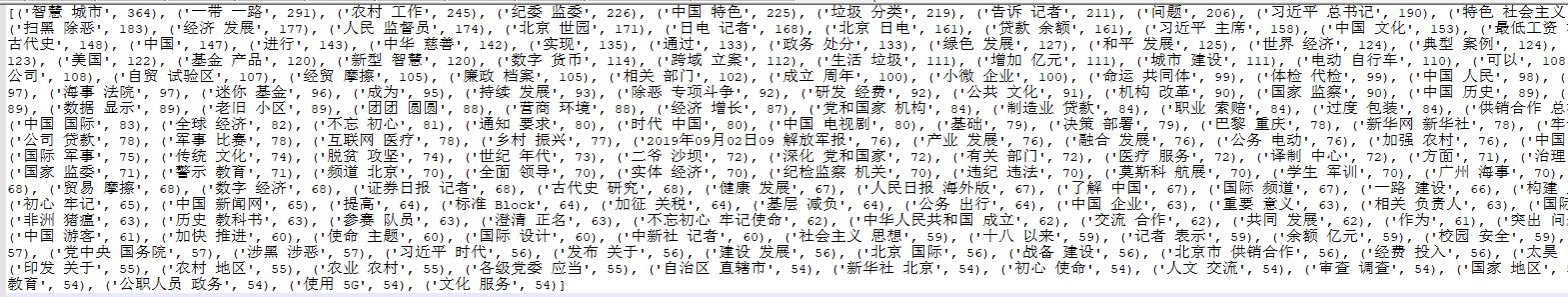

The top 200 entries by frequency look like this.
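The ranking itself is just a word count sorted in descending order. As a minimal standard-library sketch of that idea (not the code used above, which relies on `WordCloud.process_text`), the snippet below assumes the text has already been split into tokens, for example by `jieba.cut`; `top_words` is a hypothetical helper name and the sample tokens are made up.

```python
from collections import Counter


def top_words(tokens, n=200, stopwords=()):
    """Return the n most frequent tokens as (word, count) pairs, most frequent first."""
    stop = set(stopwords)
    # Ignore whitespace-only tokens and anything listed as a stop word
    counts = Counter(t for t in tokens if t.strip() and t not in stop)
    return counts.most_common(n)


if __name__ == "__main__":
    # Made-up tokens standing in for segmented article text
    tokens = ["发展", "经济", "发展", "下一篇", "经济", "发展", " "]
    for word, count in top_words(tokens, n=2, stopwords={"下一篇"}):
        print(word, count)
```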

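Parsing yielded a bit over 4,300 records. We'll see how it goes: if the operations team finds that sufficient, this is done; if not, I'll optimize further. In theory, the code above can be copied and run as-is once the required modules are installed.

All of this is just a crawler written by a junior tester. If any experts come across it, pointers are very welcome; please don't open with insults, since my skills are limited and crawling isn't my specialty.

P.S. I always used CSS selectors before. Only after properly learning XPath recently did I realize that what I had been using wasn't really XPath at all. Used well, it is far more flexible than CSS selectors. I love XPath; I think I may have been an XPath in a previous life.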