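One-command ELK installation in Docker

For tooling like this, my first stop was Docker Hub. Luckily, there are not only separate images for Elasticsearch, Kibana and Logstash, but also a combined ELK image.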

sudo docker run -p 5601:5601 -p 9200:9200 -p 5044:5044 -d --name log-platform --restart always sebp/elk

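This saves a lot of configuration, so I picked the ELK image without hesitation and started it. If nothing goes wrong, it should come up easily. The most likely problem is an insufficient virtual memory limit on the host; fixes are easy to find online, so I won't go into detail here.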
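The virtual memory problem mentioned above is usually the kernel setting `vm.max_map_count` being below the 262144 that Elasticsearch expects. That this is the exact error the reader will hit is an assumption, and the helper name `check_max_map_count` is made up for illustration. A minimal sketch for checking the value on a Linux Docker host:

```shell
#!/bin/sh
# Minimum value Elasticsearch expects for vm.max_map_count.
REQUIRED=262144

# Hypothetical helper: print "ok" or a hint for the given value.
check_max_map_count() {
  if [ "$1" -lt "$REQUIRED" ]; then
    echo "too low: run 'sudo sysctl -w vm.max_map_count=$REQUIRED' on the Docker host"
  else
    echo "ok"
  fi
}

# Read the current value (Linux only); fall back to 0 if it cannot be read.
current=$(cat /proc/sys/vm/max_map_count 2>/dev/null || echo 0)
check_max_map_count "$current"
```

Note that `sysctl -w` only lasts until the next reboot; to keep the setting, the same `vm.max_map_count=262144` line can be added to `/etc/sysctl.conf`.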

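Adding a log4net-to-Elasticsearch appender to the project

I had set up a log center before .NET Core, so I remembered a DLL called Log4net.Elasticsearch for the appender. After checking, though, I found that it had not been updated for a long time and did not support .NET Standard. Before deciding to write my own appender, I searched around on the off chance and, to my surprise, found log4stash, an open-source project that supports .NET Core. Lucky again: no need to reinvent the wheel. log4stash is simple to use, but it has quite a few configuration options and the author's documentation is thin, so let's get it running first and worry about the rest later.

- Add log4stash to the project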

Install-Package log4stash -Version 2.2.1

- Modify log4net.config

Add the appender to log4net.config:

<appender name="ElasticSearchAppender" type="log4stash.ElasticSearchAppender, log4stash">

<Server>localhost</Server>

<Port>9200</Port>

<IndexName>log_test_%{+yyyy-MM-dd}</IndexName>

<IndexType>LogEvent</IndexType>

<Bulksize>2000</Bulksize>

<BulkIdleTimeout>10000</BulkIdleTimeout>

<IndexAsync>True</IndexAsync>

</appender>

For reference, here is the full set of configuration options:

<appender name="ElasticSearchAppender" type="log4stash.ElasticSearchAppender, log4stash">

<Server>localhost</Server>

<Port>9200</Port>

<!-- optional: in case elasticsearch is located behind a reverse proxy the URL is like http://Server:Port/Path, default = empty string -->

<Path>/es5</Path>

<IndexName>log_test_%{+yyyy-MM-dd}</IndexName>

<IndexType>LogEvent</IndexType>

<Bulksize>2000</Bulksize>

<BulkIdleTimeout>10000</BulkIdleTimeout>

<IndexAsync>False</IndexAsync>

<DocumentIdSource>IdSource</DocumentIdSource> <!-- obsolete! use IndexOperationParams -->

<!-- Serialize log object as json (default is true).

-- This in case you log the object this way: `logger.Debug(obj);` and not: `logger.Debug("string");` -->

<SerializeObjects>True</SerializeObjects>

<!-- optional: elasticsearch timeout for the request, default = 10000 -->

<ElasticSearchTimeout>10000</ElasticSearchTimeout>

<!--You can add parameters to the request to control the parameters sent to ElasticSearch.

for example, as you can see here, you can add a routing specification to the appender.

The Key is the key to be added to the request, and the value is the parameter's name in the log event properties.-->

<IndexOperationParams>

<Parameter>

<Key>_routing</Key>

<Value>%{RoutingSource}</Value>

</Parameter>

<Parameter>

<Key>_id</Key>

<Value>%{IdSource}</Value>

</Parameter>

<Parameter>

<Key>key</Key>

<Value>value</Value>

</Parameter>

</IndexOperationParams>

<!-- for more information read about log4net.Core.FixFlags -->

<FixedFields>Partial</FixedFields>

<Template>

<Name>templateName</Name>

<FileName>path2template.json</FileName>

</Template>

<!--Only one credential type can be used at once-->

<!--Here we list all possible types-->

<AuthenticationMethod>

<!--For basic authentication purposes-->

<Basic>

<Username>Username</Username>

<Password>Password</Password>

</Basic>

<!--For AWS ElasticSearch service-->

<Aws>

<Aws4SignerSecretKey>Secret</Aws4SignerSecretKey>

<Aws4SignerAccessKey>AccessKey</Aws4SignerAccessKey>

<Aws4SignerRegion>Region</Aws4SignerRegion>

</Aws>

</AuthenticationMethod>

<!-- all filters go in the ElasticFilters tag -->

<ElasticFilters>

<Add>

<Key>@type</Key>

<Value>Special</Value>

</Add>

<!-- using the @type value from the previous filter -->

<Add>

<Key>SmartValue</Key>

<Value>the type is %{@type}</Value>

</Add>

<Remove>

<Key>@type</Key>

</Remove>

<!-- you can load custom filters like I do here -->

<Filter type="log4stash.Filters.RenameKeyFilter, log4stash">

<Key>SmartValue</Key>

<RenameTo>SmartValue2</RenameTo>

</Filter>

<!-- converts a json object to fields in the document -->

<Json>

<SourceKey>JsonRaw</SourceKey>

<FlattenJson>false</FlattenJson>

<!-- the separator property is only relevant when setting the FlattenJson property to 'true' -->

<Separator>_</Separator>

</Json>

<!-- converts an xml object to fields in the document -->

<Xml>

<SourceKey>XmlRaw</SourceKey>

<FlattenXml>false</FlattenXml>

</Xml>

<!-- kv and grok filters similar to logstash's filters -->

<Kv>

<SourceKey>Message</SourceKey>

<ValueSplit>:=</ValueSplit>

<FieldSplit> ,</FieldSplit>

</Kv>

<Grok>

<SourceKey>Message</SourceKey>

<Pattern>the message is %{WORD:Message} and guid %{UUID:the_guid}</Pattern>

<Overwrite>true</Overwrite>

</Grok>

<!-- Convert string like: "1,2, 45 9" into array of numbers [1,2,45,9] -->

<ConvertToArray>

<SourceKey>someIds</SourceKey>

<!-- The separators (space and comma) -->

<Seperators>, </Seperators>

</ConvertToArray>

<Convert>

<!-- convert given key to string -->

<ToString>shouldBeString</ToString>

<!-- same as ConvertToArray. Just for convenience -->

<ToArray>

<SourceKey>anotherIds</SourceKey>

</ToArray>

</Convert>

</ElasticFilters>

</appender>

Finally, don't forget to reference the appender in root:

<root>

<level value="WARN" />

<appender-ref ref="ElasticSearchAppender" />

</root>

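OK, that is all the configuration the project needs. You can now run it and write some test logs. Note that with the root level set to WARN, only entries logged at WARN or above reach the appender.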

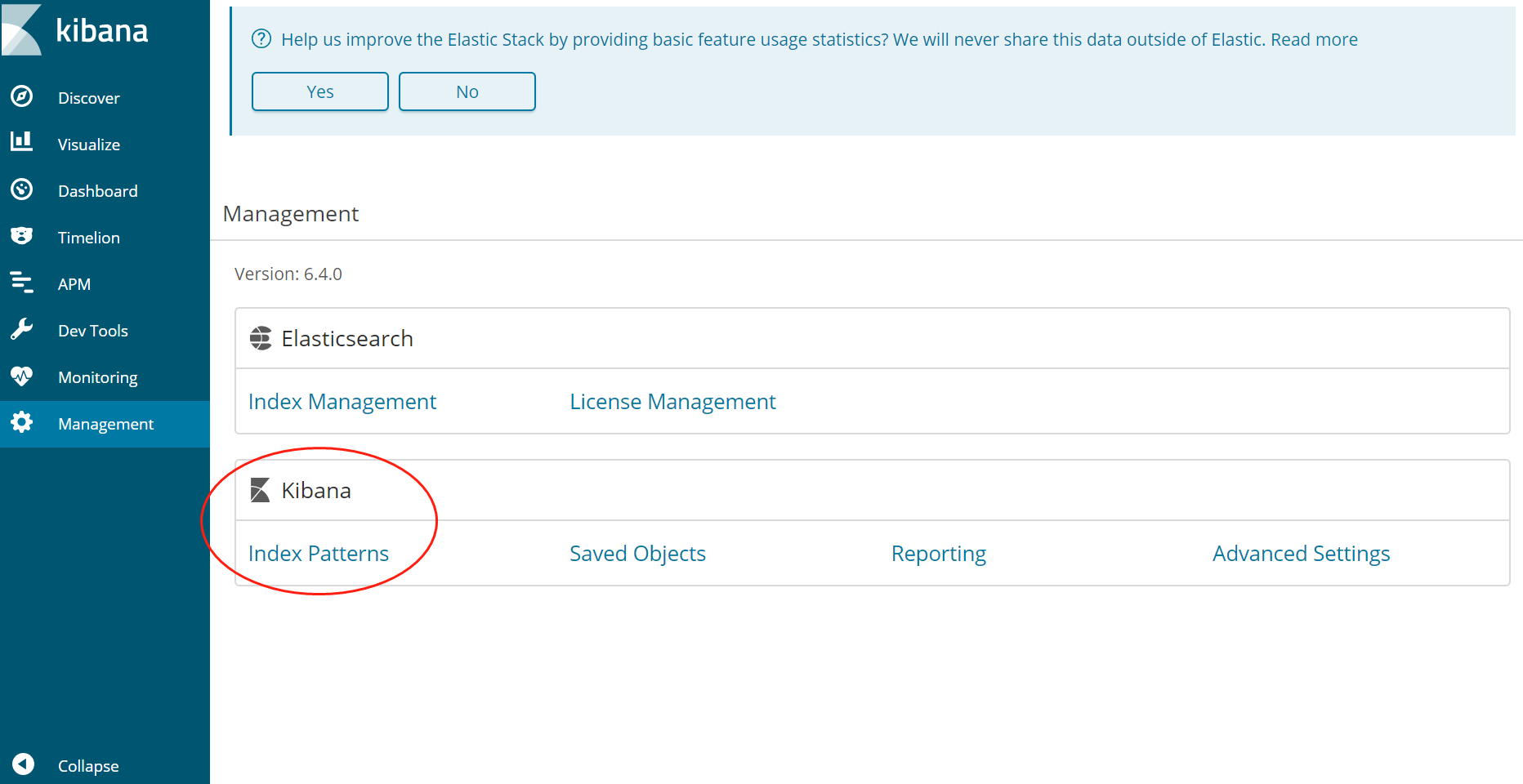

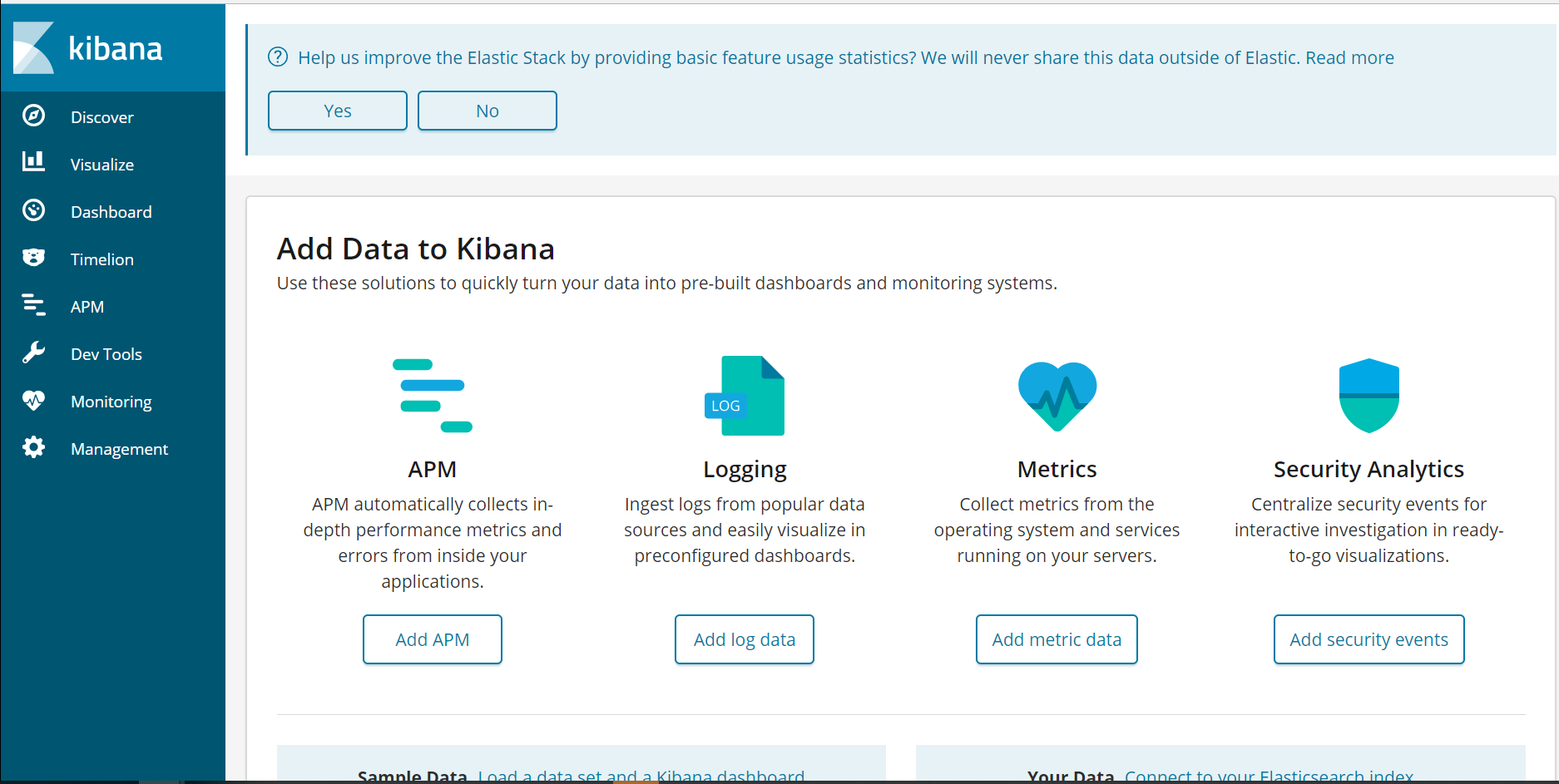
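Before moving on to Kibana, it can help to confirm that the test logs actually reached Elasticsearch. The sketch below lists the indices matching the `IndexName` configured above through the `_cat/indices` API. It assumes Elasticsearch is reachable at `http://localhost:9200` (the port mapped in the `docker run` command); the `ES_URL` variable and the `cat_indices_url` helper are hypothetical names used only for this example.

```shell
#!/bin/sh
# Assumption: Elasticsearch is exposed on the host port mapped by docker run.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: build the _cat/indices URL for an index pattern.
cat_indices_url() {
  printf '%s/_cat/indices/%s?v' "$ES_URL" "$1"
}

# List the daily indices written by the appender (log_test_<date>).
curl -s --max-time 5 "$(cat_indices_url 'log_test_*')" \
  || echo "Elasticsearch not reachable at $ES_URL"
```

If the appender is working, the output should contain one line per day on which logs were written, with a non-zero `docs.count`.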

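Creating an Index Pattern in Kibana

An Elasticsearch index is quite similar to a database in a relational database system. Although the index already exists in Elasticsearch once the project has written its test data, we still need to create an Index Pattern before the data can be queried in the visualization tool.

Go to Management, then Create Index Pattern, and enter a pattern matching the index name from the project's logging configuration, log_test_* (if data exists, it should be suggested automatically). Create the pattern, and you can then browse and query the logs in Kibana.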