Installing TensorFlow and its package dependencies

C:\Users\sas> pip3 install --upgrade tensorflow

Collecting tensorflow

Downloading https://files.pythonhosted.org/packages/35/f6/8af765c7634bc72a902c50d6e7664cd1faac6128e7362510b0234d93c974/tensorflow-1.7.0-cp36-cp36m-win_amd64.whl (33.1MB)

100% |████████████████████████████████| 33.1MB 34kB/s

Collecting numpy>=1.13.3 (from tensorflow)

Downloading https://files.pythonhosted.org/packages/30/70/cd94a1655d082b8f024b21af1eb13dd0f3035ffe78ff43d4ff9bb97baa5f/numpy-1.14.2-cp36-none-win_amd64.whl (13.4MB)

100% |████████████████████████████████| 13.4MB 84kB/s

Collecting absl-py>=0.1.6 (from tensorflow)

Downloading https://files.pythonhosted.org/packages/90/6b/ba04a9fe6aefa56adafa6b9e0557b959e423c49950527139cb8651b0480b/absl-py-0.2.0.tar.gz (82kB)

100% |████████████████████████████████| 92kB 1.1MB/s

Collecting protobuf>=3.4.0 (from tensorflow)

Downloading https://files.pythonhosted.org/packages/32/cf/6945106da76db9b62d11b429aa4e062817523bb587018374c77f4b63200e/protobuf-3.5.2.post1-cp36-cp36m-win_amd64.whl (958kB)

100% |████████████████████████████████| 962kB 438kB/s

Collecting gast>=0.2.0 (from tensorflow)

Downloading https://files.pythonhosted.org/packages/5c/78/ff794fcae2ce8aa6323e789d1f8b3b7765f601e7702726f430e814822b96/gast-0.2.0.tar.gz

Collecting tensorboard<1.8.0,>=1.7.0 (from tensorflow)

Downloading https://files.pythonhosted.org/packages/0b/ec/65d4e8410038ca2a78c09034094403d231228d0ddcae7d470b223456e55d/tensorboard-1.7.0-py3-none-any.whl (3.1MB)

100% |████████████████████████████████| 3.1MB 132kB/s

Collecting termcolor>=1.1.0 (from tensorflow)

Downloading https://files.pythonhosted.org/packages/8a/48/a76be51647d0eb9f10e2a4511bf3ffb8cc1e6b14e9e4fab46173aa79f981/termcolor-1.1.0.tar.gz

Collecting wheel>=0.26 (from tensorflow)

Downloading https://files.pythonhosted.org/packages/1b/d2/22cde5ea9af055f81814f9f2545f5ed8a053eb749c08d186b369959189a8/wheel-0.31.0-py2.py3-none-any.whl (41kB)

100% |████████████████████████████████| 51kB 311kB/s

Collecting astor>=0.6.0 (from tensorflow)

Downloading https://files.pythonhosted.org/packages/b2/91/cc9805f1ff7b49f620136b3a7ca26f6a1be2ed424606804b0fbcf499f712/astor-0.6.2-py2.py3-none-any.whl

Requirement already up-to-date: six>=1.10.0 in c:\users\sas\appdata\roaming\python\python36\site-packages (from tensorflow)

Collecting grpcio>=1.8.6 (from tensorflow)

Downloading https://files.pythonhosted.org/packages/80/7e/d5ee3ef92822b01e3a274230200baf2454faae64e3d7f436b093ff771a17/grpcio-1.11.0-cp36-cp36m-win_amd64.whl (1.4MB)

100% |████████████████████████████████| 1.4MB 120kB/s

Requirement already up-to-date: setuptools in c:\users\public\py36\lib\site-packages (from protobuf>=3.4.0->tensorflow)

Collecting html5lib==0.9999999 (from tensorboard<1.8.0,>=1.7.0->tensorflow)

Downloading https://files.pythonhosted.org/packages/ae/ae/bcb60402c60932b32dfaf19bb53870b29eda2cd17551ba5639219fb5ebf9/html5lib-0.9999999.tar.gz (889kB)

100% |████████████████████████████████| 890kB 114kB/s

Collecting markdown>=2.6.8 (from tensorboard<1.8.0,>=1.7.0->tensorflow)

Downloading https://files.pythonhosted.org/packages/6d/7d/488b90f470b96531a3f5788cf12a93332f543dbab13c423a5e7ce96a0493/Markdown-2.6.11-py2.py3-none-any.whl (78kB)

100% |████████████████████████████████| 81kB 122kB/s

Collecting werkzeug>=0.11.10 (from tensorboard<1.8.0,>=1.7.0->tensorflow)

Downloading https://files.pythonhosted.org/packages/20/c4/12e3e56473e52375aa29c4764e70d1b8f3efa6682bef8d0aae04fe335243/Werkzeug-0.14.1-py2.py3-none-any.whl (322kB)

100% |████████████████████████████████| 327kB 127kB/s

Collecting bleach==1.5.0 (from tensorboard<1.8.0,>=1.7.0->tensorflow)

Downloading https://files.pythonhosted.org/packages/33/70/86c5fec937ea4964184d4d6c4f0b9551564f821e1c3575907639036d9b90/bleach-1.5.0-py2.py3-none-any.whl

Installing collected packages: numpy, absl-py, protobuf, gast, html5lib, wheel, markdown, werkzeug, bleach, tensorboard, termcolor, astor, grpcio, tensorflow

Found existing installation: numpy 1.14.0

Uninstalling numpy-1.14.0:

Successfully uninstalled numpy-1.14.0

Running setup.py install for absl-py ... done

Running setup.py install for gast ... done

Running setup.py install for html5lib ... done

Running setup.py install for termcolor ... done

Successfully installed absl-py-0.2.0 astor-0.6.2 bleach-1.5.0 gast-0.2.0 grpcio-1.11.0 html5lib-0.9999999 markdown-2.6.11 numpy-1.14.2 protobuf-3.5.2.post1 tensorboard-1.7.0 tensorflow-1.7.0 termcolor-1.1.0 werkzeug-0.14.1 wheel-0.31.0

You are using pip version 9.0.3, however version 10.0.1 is available.

You should consider upgrading via the 'python -m pip install --upgrade pip' command.
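After a log like the one above, one way to confirm what actually got installed is to query the package metadata from Python. This is only a sketch: it assumes Python 3.8 or newer (for `importlib.metadata`, which the Python 3.6 environment in the log does not ship), and the helper name `installed_version` is hypothetical.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Packages named in the pip log above.
    for name in ("tensorflow", "numpy", "protobuf"):
        print(name, installed_version(name))
```

A `None` result means pip did not register the package in the interpreter that ran the check, which usually points at a mismatch between `pip3` and the `python` on the path.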

https://www.tensorflow.org/tutorials/layers

Building the CNN MNIST Classifier

【Each of these methods accepts a tensor as input and returns a transformed tensor as output. This makes it easy to connect one layer to another: just take the output from one layer-creation method and supply it as input to another.】

Let's build a model to classify the images in the MNIST dataset using the following CNN architecture:

- Convolutional Layer #1: Applies 32 5x5 filters (extracting 5x5-pixel subregions), with ReLU activation function

- Pooling Layer #1: Performs max pooling with a 2x2 filter and stride of 2 (which specifies that pooled regions do not overlap)

- Convolutional Layer #2: Applies 64 5x5 filters, with ReLU activation function

- Pooling Layer #2: Again, performs max pooling with a 2x2 filter and stride of 2

- Dense Layer #1: 1,024 neurons, with dropout regularization rate of 0.4 (probability of 0.4 that any given element will be dropped during training)

- Dense Layer #2 (Logits Layer): 10 neurons, one for each digit target class (0–9).
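The layer list above can be checked by tracing the tensor shape through the network. The sketch below is plain Python, not the tutorial's code; it assumes 28x28 single-channel MNIST input and 'same' padding in both convolutions, neither of which is stated in the list itself, and the function name `trace_mnist_cnn` is hypothetical.

```python
def trace_mnist_cnn(size=28):
    """Return (layer name, output shape) pairs for the architecture listed above."""
    shapes = [("input", (size, size, 1))]
    # Assumption: 'same' padding with stride 1 keeps height and width unchanged.
    shapes.append(("conv1", (size, size, 32)))
    size //= 2  # 2x2 max pooling with stride 2 halves each spatial dimension
    shapes.append(("pool1", (size, size, 32)))
    shapes.append(("conv2", (size, size, 64)))
    size //= 2
    shapes.append(("pool2", (size, size, 64)))
    # The dense layer sees the pooled volume flattened into one vector.
    shapes.append(("flatten", (size * size * 64,)))
    shapes.append(("dense1", (1024,)))
    shapes.append(("logits", (10,)))
    return shapes


if __name__ == "__main__":
    for name, shape in trace_mnist_cnn():
        print(name, shape)
```

Under these assumptions the first dense layer receives 7 * 7 * 64 = 3136 inputs per image.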

【Computing the output volume: the Conv layer output volume had size [55x55x96]】

http://cs231n.github.io/convolutional-networks/

Real-world example. The Krizhevsky et al. architecture that won the ImageNet challenge in 2012 accepted images of size [227x227x3]. On the first Convolutional Layer, it used neurons with receptive field size F=11, stride S=4 and no zero padding P=0. Since (227 - 11)/4 + 1 = 55, and since the Conv layer had a depth of K=96, the Conv layer output volume had size [55x55x96]. Each of the 55*55*96 neurons in this volume was connected to a region of size [11x11x3] in the input volume. Moreover, all 96 neurons in each depth column are connected to the same [11x11x3] region of the input, but of course with different weights. As a fun aside, if you read the actual paper it claims that the input images were 224x224, which is surely incorrect because (224 - 11)/4 + 1 is quite clearly not an integer. This has confused many people in the history of ConvNets and little is known about what happened. My own best guess is that Alex used zero-padding of 3 extra pixels that he does not mention in the paper.
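The arithmetic in the paragraph above follows the usual output-size formula (W - F + 2P)/S + 1. A minimal sketch, with the hypothetical helper name `conv_output_size` and exact fractions so that a non-integer result stays visible instead of being rounded away:

```python
from fractions import Fraction


def conv_output_size(w, f, s, p=0):
    """Spatial output size (W - F + 2P) / S + 1, kept exact as a Fraction."""
    return Fraction(w - f + 2 * p, s) + 1


if __name__ == "__main__":
    print(conv_output_size(227, 11, 4))  # whole number: the filter fits
    print(conv_output_size(224, 11, 4))  # fractional: the filter does not fit
```

A result whose denominator is not 1 means the filter cannot tile the input with that stride, which is the inconsistency the quoted text points out for 224x224 inputs.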


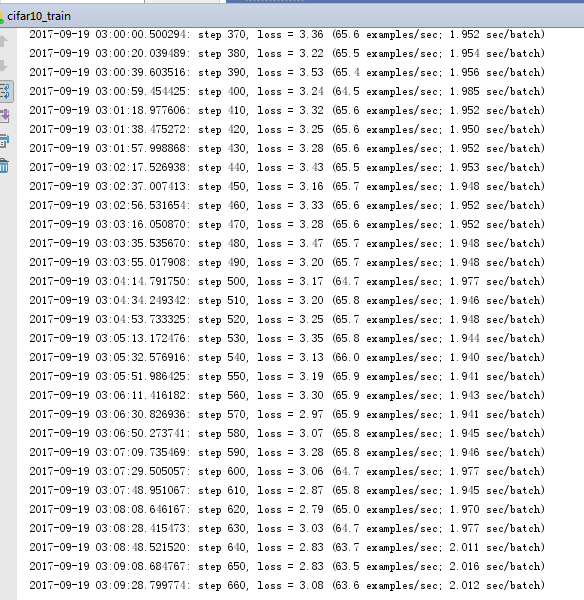

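【Algorithm test】CNN-tensorflow-cifar10_train.py: modify the code or the runtime environment to reduce and control the training time. Parameter tuning: change the conv1 and conv2 kernel shape from [5, 5, ...] to [3, 3, ...] to reduce the size of these ConvNet layers.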
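To get a rough feel for what the kernel change buys, the parameter counts of the two convolution layers can be compared. This is a sketch with a hypothetical helper `conv_params`; the channel counts (3 to 64 for conv1, 64 to 64 for conv2) are taken from the cifar10.py listing later in these notes, and the count says nothing about the actual wall-clock time, which was not measured here.

```python
def conv_params(kernel_shape):
    """Weights plus biases for a kernel [height, width, in_channels, out_channels]."""
    h, w, c_in, c_out = kernel_shape
    return h * w * c_in * c_out + c_out


if __name__ == "__main__":
    for name, c_in in (("conv1", 3), ("conv2", 64)):
        before = conv_params([5, 5, c_in, 64])
        after = conv_params([3, 3, c_in, 64])
        print(name, before, "->", after)
```

The multiply-add work per output position scales with the same product, so a 3x3 kernel needs 9/25 of the convolution arithmetic of a 5x5 kernel.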

http://cs231n.github.io/convolutional-networks/

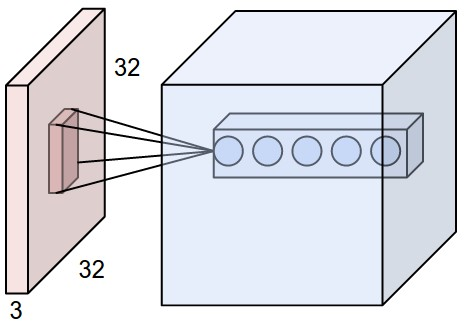

Left: An example input volume in red (e.g. a 32x32x3 CIFAR-10 image), and an example volume of neurons in the first Convolutional layer. Each neuron in the convolutional layer is connected only to a local region in the input volume spatially, but to the full depth (i.e. all color channels). Note, there are multiple neurons (5 in this example) along the depth, all looking at the same region in the input - see discussion of depth columns in text below. Right: The neurons from the Neural Network chapter remain unchanged: They still compute a dot product of their weights with the input followed by a non-linearity, but their connectivity is now restricted to be local spatially.
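The local connectivity described in the caption can be written out for a single neuron. The sketch below uses nested Python lists instead of tensors, assumes a 5x5 receptive field (the caption does not give one) and ReLU as the non-linearity, and the name `neuron_output` is hypothetical.

```python
def neuron_output(volume, weights, bias, row, col):
    """ReLU(dot(weights, patch) + bias) for the patch whose top-left is (row, col).

    volume is indexed [height][width][channel]; weights is [f][f][channel],
    so the neuron sees a small spatial window but the full input depth.
    """
    total = bias
    for i, weight_row in enumerate(weights):
        for j, weight_pixel in enumerate(weight_row):
            for c, w in enumerate(weight_pixel):
                total += w * volume[row + i][col + j][c]
    return max(0.0, total)


if __name__ == "__main__":
    image = [[[1.0] * 3 for _ in range(32)] for _ in range(32)]   # 32x32x3 input
    kernel = [[[1.0] * 3 for _ in range(5)] for _ in range(5)]    # 5x5x3 weights
    print(neuron_output(image, kernel, 0.0, 0, 0))
```

With a 5x5 window over three channels each neuron owns 5 * 5 * 3 = 75 weights plus one bias, regardless of how large the image is.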

# Copyright 2015 The TensorFlow Authors. All Rights Reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# ==============================================================================

"""A binary to train CIFAR-10 using a single GPU.

Accuracy:
cifar10_train.py achieves ~86% accuracy after 100K steps (256 epochs of
data) as judged by cifar10_eval.py.

Speed: With batch_size 128.

System        | Step Time (sec/batch)  |     Accuracy
------------------------------------------------------------------
1 Tesla K20m  | 0.35-0.60              | ~86% at 60K steps  (5 hours)
1 Tesla K40m  | 0.25-0.35              | ~86% at 100K steps (4 hours)

Usage:
Please see the tutorial and website for how to download the CIFAR-10
data set, compile the program and train the model.

http://tensorflow.org/tutorials/deep_cnn/

"""

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

from datetime import datetime
import time

import tensorflow as tf

import cifar10

FLAGS = tf.app.flags.FLAGS

tf.app.flags.DEFINE_string('train_dir', '/tmp/cifar10_train',
                           """Directory where to write event logs """
                           """and checkpoint.""")
tf.app.flags.DEFINE_integer('max_steps', 1000000,
                            """Number of batches to run.""")
tf.app.flags.DEFINE_boolean('log_device_placement', False,
                            """Whether to log device placement.""")
tf.app.flags.DEFINE_integer('log_frequency', 10,
                            """How often to log results to the console.""")

def train():
  """Train CIFAR-10 for a number of steps."""
  with tf.Graph().as_default():
    global_step = tf.contrib.framework.get_or_create_global_step()

    # Get images and labels for CIFAR-10.
    # Force input pipeline to CPU:0 to avoid operations sometimes ending up on
    # GPU and resulting in a slow down.
    with tf.device('/cpu:0'):
      images, labels = cifar10.distorted_inputs()

    # Build a Graph that computes the logits predictions from the
    # inference model.
    logits = cifar10.inference(images)

    # Calculate loss.
    loss = cifar10.loss(logits, labels)

    # Build a Graph that trains the model with one batch of examples and
    # updates the model parameters.
    train_op = cifar10.train(loss, global_step)

    class _LoggerHook(tf.train.SessionRunHook):
      """Logs loss and runtime."""

      def begin(self):
        self._step = -1
        self._start_time = time.time()

      def before_run(self, run_context):
        self._step += 1
        return tf.train.SessionRunArgs(loss)  # Asks for loss value.

      def after_run(self, run_context, run_values):
        if self._step % FLAGS.log_frequency == 0:
          current_time = time.time()
          duration = current_time - self._start_time
          self._start_time = current_time

          loss_value = run_values.results
          examples_per_sec = FLAGS.log_frequency * FLAGS.batch_size / duration
          sec_per_batch = float(duration / FLAGS.log_frequency)

          format_str = ('%s: step %d, loss = %.2f (%.1f examples/sec; %.3f '
                        'sec/batch)')
          print(format_str % (datetime.now(), self._step, loss_value,
                              examples_per_sec, sec_per_batch))

    with tf.train.MonitoredTrainingSession(
        checkpoint_dir=FLAGS.train_dir,
        hooks=[tf.train.StopAtStepHook(last_step=FLAGS.max_steps),
               tf.train.NanTensorHook(loss),
               _LoggerHook()],
        config=tf.ConfigProto(
            log_device_placement=FLAGS.log_device_placement)) as mon_sess:
      while not mon_sess.should_stop():
        mon_sess.run(train_op)

def main(argv=None):  # pylint: disable=unused-argument
  cifar10.maybe_download_and_extract()
  if tf.gfile.Exists(FLAGS.train_dir):
    tf.gfile.DeleteRecursively(FLAGS.train_dir)
  tf.gfile.MakeDirs(FLAGS.train_dir)
  train()


if __name__ == '__main__':
  tf.app.run()
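Two pieces of arithmetic in the training script above are easy to check by hand: the docstring's claim that 100K steps correspond to 256 epochs, and the throughput numbers printed by the logging hook. The sketch below reproduces both in plain Python; the function names are hypothetical, and the defaults (batch size 128, 50000 training images, log frequency 10) are copied from the flags and constants in these listings.

```python
def epochs_for_steps(steps, batch_size=128, examples_per_epoch=50000):
    """Number of passes over the training set after `steps` batches."""
    return steps * batch_size / examples_per_epoch


def throughput(duration, log_frequency=10, batch_size=128):
    """Mirror the logging hook: (examples/sec, sec/batch) over one logging window.

    duration is the wall-clock time in seconds spent on `log_frequency` steps.
    """
    examples_per_sec = log_frequency * batch_size / duration
    sec_per_batch = duration / log_frequency
    return examples_per_sec, sec_per_batch


if __name__ == "__main__":
    print(epochs_for_steps(100000))
    print(throughput(4.0))
```

Because `max_steps` defaults to 1,000,000, lowering that flag is the most direct way to bound the training time; the per-step cost is what the logged sec/batch figure reports.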


"""Builds the CIFAR-10 network.

Summary of available functions:

 # Compute input images and labels for training. If you would like to run
 # evaluations, use inputs() instead.
 inputs, labels = distorted_inputs()

 # Compute inference on the model inputs to make a prediction.
 predictions = inference(inputs)

 # Compute the total loss of the prediction with respect to the labels.
 loss = loss(predictions, labels)

 # Create a graph to run one step of training with respect to the loss.
 train_op = train(loss, global_step)

"""

# pylint: disable=missing-docstring
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import os
import re
import sys
import tarfile

from six.moves import urllib
import tensorflow as tf

import cifar10_input

FLAGS = tf.app.flags.FLAGS

# Basic model parameters.
tf.app.flags.DEFINE_integer('batch_size', 128,
                            """Number of images to process in a batch.""")
tf.app.flags.DEFINE_string('data_dir', '/tmp/cifar10_data',
                           """Path to the CIFAR-10 data directory.""")
tf.app.flags.DEFINE_boolean('use_fp16', False,
                            """Train the model using fp16.""")

# Global constants describing the CIFAR-10 data set.
IMAGE_SIZE = cifar10_input.IMAGE_SIZE
NUM_CLASSES = cifar10_input.NUM_CLASSES
NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN = cifar10_input.NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN
NUM_EXAMPLES_PER_EPOCH_FOR_EVAL = cifar10_input.NUM_EXAMPLES_PER_EPOCH_FOR_EVAL

# Constants describing the training process.
MOVING_AVERAGE_DECAY = 0.9999     # The decay to use for the moving average.
NUM_EPOCHS_PER_DECAY = 350.0      # Epochs after which learning rate decays.
LEARNING_RATE_DECAY_FACTOR = 0.1  # Learning rate decay factor.
INITIAL_LEARNING_RATE = 0.1       # Initial learning rate.

# If a model is trained with multiple GPUs, prefix all Op names with tower_name
# to differentiate the operations. Note that this prefix is removed from the
# names of the summaries when visualizing a model.
TOWER_NAME = 'tower'

DATA_URL = 'http://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz'

def _activation_summary(x):
  """Helper to create summaries for activations.

  Creates a summary that provides a histogram of activations.
  Creates a summary that measures the sparsity of activations.

  Args:
    x: Tensor
  Returns:
    nothing
  """
  # Remove 'tower_[0-9]/' from the name in case this is a multi-GPU training
  # session. This helps the clarity of presentation on tensorboard.
  tensor_name = re.sub('%s_[0-9]*/' % TOWER_NAME, '', x.op.name)
  tf.summary.histogram(tensor_name + '/activations', x)
  tf.summary.scalar(tensor_name + '/sparsity',
                    tf.nn.zero_fraction(x))

def _variable_on_cpu(name, shape, initializer):
  """Helper to create a Variable stored on CPU memory.

  Args:
    name: name of the variable
    shape: list of ints
    initializer: initializer for Variable

  Returns:
    Variable Tensor
  """
  with tf.device('/cpu:0'):
    dtype = tf.float16 if FLAGS.use_fp16 else tf.float32
    var = tf.get_variable(name, shape, initializer=initializer, dtype=dtype)
  return var

def _variable_with_weight_decay(name, shape, stddev, wd):
  """Helper to create an initialized Variable with weight decay.

  Note that the Variable is initialized with a truncated normal distribution.
  A weight decay is added only if one is specified.

  Args:
    name: name of the variable
    shape: list of ints
    stddev: standard deviation of a truncated Gaussian
    wd: add L2Loss weight decay multiplied by this float. If None, weight
        decay is not added for this Variable.

  Returns:
    Variable Tensor
  """
  dtype = tf.float16 if FLAGS.use_fp16 else tf.float32
  var = _variable_on_cpu(
      name,
      shape,
      tf.truncated_normal_initializer(stddev=stddev, dtype=dtype))
  if wd is not None:
    weight_decay = tf.multiply(tf.nn.l2_loss(var), wd, name='weight_loss')
    tf.add_to_collection('losses', weight_decay)
  return var

def distorted_inputs():
  """Construct distorted input for CIFAR training using the Reader ops.

  Returns:
    images: Images. 4D tensor of [batch_size, IMAGE_SIZE, IMAGE_SIZE, 3] size.
    labels: Labels. 1D tensor of [batch_size] size.

  Raises:
    ValueError: If no data_dir
  """
  if not FLAGS.data_dir:
    raise ValueError('Please supply a data_dir')
  data_dir = os.path.join(FLAGS.data_dir, 'cifar-10-batches-bin')
  images, labels = cifar10_input.distorted_inputs(data_dir=data_dir,
                                                  batch_size=FLAGS.batch_size)
  if FLAGS.use_fp16:
    images = tf.cast(images, tf.float16)
    labels = tf.cast(labels, tf.float16)
  return images, labels

def inputs(eval_data):
  """Construct input for CIFAR evaluation using the Reader ops.

  Args:
    eval_data: bool, indicating if one should use the train or eval data set.

  Returns:
    images: Images. 4D tensor of [batch_size, IMAGE_SIZE, IMAGE_SIZE, 3] size.
    labels: Labels. 1D tensor of [batch_size] size.

  Raises:
    ValueError: If no data_dir
  """
  if not FLAGS.data_dir:
    raise ValueError('Please supply a data_dir')
  data_dir = os.path.join(FLAGS.data_dir, 'cifar-10-batches-bin')
  images, labels = cifar10_input.inputs(eval_data=eval_data,
                                        data_dir=data_dir,
                                        batch_size=FLAGS.batch_size)
  if FLAGS.use_fp16:
    images = tf.cast(images, tf.float16)
    labels = tf.cast(labels, tf.float16)
  return images, labels

def inference(images):
  """Build the CIFAR-10 model.

  Args:
    images: Images returned from distorted_inputs() or inputs().

  Returns:
    Logits.
  """
  # We instantiate all variables using tf.get_variable() instead of
  # tf.Variable() in order to share variables across multiple GPU training runs.
  # If we only ran this model on a single GPU, we could simplify this function
  # by replacing all instances of tf.get_variable() with tf.Variable().
  #
  # conv1
  with tf.variable_scope('conv1') as scope:
    kernel = _variable_with_weight_decay('weights',
                                         shape=[5, 5, 3, 64],
                                         stddev=5e-2,
                                         wd=0.0)
    conv = tf.nn.conv2d(images, kernel, [1, 1, 1, 1], padding='SAME')
    biases = _variable_on_cpu('biases', [64], tf.constant_initializer(0.0))
    pre_activation = tf.nn.bias_add(conv, biases)
    conv1 = tf.nn.relu(pre_activation, name=scope.name)
    _activation_summary(conv1)

  # pool1
  pool1 = tf.nn.max_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1],
                         padding='SAME', name='pool1')
  # norm1
  norm1 = tf.nn.lrn(pool1, 4, bias=1.0, alpha=0.001 / 9.0, beta=0.75,
                    name='norm1')

  # conv2
  with tf.variable_scope('conv2') as scope:
    kernel = _variable_with_weight_decay('weights',
                                         shape=[5, 5, 64, 64],
                                         stddev=5e-2,
                                         wd=0.0)
    conv = tf.nn.conv2d(norm1, kernel, [1, 1, 1, 1], padding='SAME')
    biases = _variable_on_cpu('biases', [64], tf.constant_initializer(0.1))
    pre_activation = tf.nn.bias_add(conv, biases)
    conv2 = tf.nn.relu(pre_activation, name=scope.name)
    _activation_summary(conv2)

  # norm2
  norm2 = tf.nn.lrn(conv2, 4, bias=1.0, alpha=0.001 / 9.0, beta=0.75,
                    name='norm2')
  # pool2
  pool2 = tf.nn.max_pool(norm2, ksize=[1, 3, 3, 1],
                         strides=[1, 2, 2, 1], padding='SAME', name='pool2')

  # local3
  with tf.variable_scope('local3') as scope:
    # Move everything into depth so we can perform a single matrix multiply.
    reshape = tf.reshape(pool2, [FLAGS.batch_size, -1])
    dim = reshape.get_shape()[1].value
    weights = _variable_with_weight_decay('weights', shape=[dim, 384],
                                          stddev=0.04, wd=0.004)
    biases = _variable_on_cpu('biases', [384], tf.constant_initializer(0.1))
    local3 = tf.nn.relu(tf.matmul(reshape, weights) + biases, name=scope.name)
    _activation_summary(local3)

  # local4
  with tf.variable_scope('local4') as scope:
    weights = _variable_with_weight_decay('weights', shape=[384, 192],
                                          stddev=0.04, wd=0.004)
    biases = _variable_on_cpu('biases', [192], tf.constant_initializer(0.1))
    local4 = tf.nn.relu(tf.matmul(local3, weights) + biases, name=scope.name)
    _activation_summary(local4)

  # linear layer(WX + b),
  # We don't apply softmax here because
  # tf.nn.sparse_softmax_cross_entropy_with_logits accepts the unscaled logits
  # and performs the softmax internally for efficiency.
  with tf.variable_scope('softmax_linear') as scope:
    weights = _variable_with_weight_decay('weights', [192, NUM_CLASSES],
                                          stddev=1/192.0, wd=0.0)
    biases = _variable_on_cpu('biases', [NUM_CLASSES],
                              tf.constant_initializer(0.0))
    softmax_linear = tf.add(tf.matmul(local4, weights), biases, name=scope.name)
    _activation_summary(softmax_linear)

  return softmax_linear

def loss(logits, labels):
  """Add L2Loss to all the trainable variables.

  Add summary for "Loss" and "Loss/avg".
  Args:
    logits: Logits from inference().
    labels: Labels from distorted_inputs or inputs(). 1-D tensor
            of shape [batch_size]

  Returns:
    Loss tensor of type float.
  """
  # Calculate the average cross entropy loss across the batch.
  labels = tf.cast(labels, tf.int64)
  cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(
      labels=labels, logits=logits, name='cross_entropy_per_example')
  cross_entropy_mean = tf.reduce_mean(cross_entropy, name='cross_entropy')
  tf.add_to_collection('losses', cross_entropy_mean)

  # The total loss is defined as the cross entropy loss plus all of the weight
  # decay terms (L2 loss).
  return tf.add_n(tf.get_collection('losses'), name='total_loss')

def _add_loss_summaries(total_loss):
  """Add summaries for losses in CIFAR-10 model.

  Generates moving average for all losses and associated summaries for
  visualizing the performance of the network.

  Args:
    total_loss: Total loss from loss().
  Returns:
    loss_averages_op: op for generating moving averages of losses.
  """
  # Compute the moving average of all individual losses and the total loss.
  loss_averages = tf.train.ExponentialMovingAverage(0.9, name='avg')
  losses = tf.get_collection('losses')
  loss_averages_op = loss_averages.apply(losses + [total_loss])

  # Attach a scalar summary to all individual losses and the total loss; do the
  # same for the averaged version of the losses.
  for l in losses + [total_loss]:
    # Name each loss as '(raw)' and name the moving average version of the loss
    # as the original loss name.
    tf.summary.scalar(l.op.name + ' (raw)', l)
    tf.summary.scalar(l.op.name, loss_averages.average(l))

  return loss_averages_op

def train(total_loss, global_step):
  """Train CIFAR-10 model.

  Create an optimizer and apply to all trainable variables. Add moving
  average for all trainable variables.

  Args:
    total_loss: Total loss from loss().
    global_step: Integer Variable counting the number of training steps
      processed.
  Returns:
    train_op: op for training.
  """
  # Variables that affect learning rate.
  num_batches_per_epoch = NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN / FLAGS.batch_size
  decay_steps = int(num_batches_per_epoch * NUM_EPOCHS_PER_DECAY)

  # Decay the learning rate exponentially based on the number of steps.
  lr = tf.train.exponential_decay(INITIAL_LEARNING_RATE,
                                  global_step,
                                  decay_steps,
                                  LEARNING_RATE_DECAY_FACTOR,
                                  staircase=True)
  tf.summary.scalar('learning_rate', lr)

  # Generate moving averages of all losses and associated summaries.
  loss_averages_op = _add_loss_summaries(total_loss)

  # Compute gradients.
  with tf.control_dependencies([loss_averages_op]):
    opt = tf.train.GradientDescentOptimizer(lr)
    grads = opt.compute_gradients(total_loss)

  # Apply gradients.
  apply_gradient_op = opt.apply_gradients(grads, global_step=global_step)

  # Add histograms for trainable variables.
  for var in tf.trainable_variables():
    tf.summary.histogram(var.op.name, var)

  # Add histograms for gradients.
  for grad, var in grads:
    if grad is not None:
      tf.summary.histogram(var.op.name + '/gradients', grad)

  # Track the moving averages of all trainable variables.
  variable_averages = tf.train.ExponentialMovingAverage(
      MOVING_AVERAGE_DECAY, global_step)
  variables_averages_op = variable_averages.apply(tf.trainable_variables())

  with tf.control_dependencies([apply_gradient_op, variables_averages_op]):
    train_op = tf.no_op(name='train')

  return train_op

def maybe_download_and_extract():
  """Download and extract the tarball from Alex's website."""
  dest_directory = FLAGS.data_dir
  if not os.path.exists(dest_directory):
    os.makedirs(dest_directory)
  filename = DATA_URL.split('/')[-1]
  filepath = os.path.join(dest_directory, filename)
  if not os.path.exists(filepath):
    def _progress(count, block_size, total_size):
      # '\r' returns to the start of the line so the progress text is
      # overwritten in place instead of scrolling.
      sys.stdout.write('\r>> Downloading %s %.1f%%' % (filename,
          float(count * block_size) / float(total_size) * 100.0))
      sys.stdout.flush()
    filepath, _ = urllib.request.urlretrieve(DATA_URL, filepath, _progress)
    print()
    statinfo = os.stat(filepath)
    print('Successfully downloaded', filename, statinfo.st_size, 'bytes.')
  extracted_dir_path = os.path.join(dest_directory, 'cifar-10-batches-bin')
  if not os.path.exists(extracted_dir_path):
    tarfile.open(filepath, 'r:gz').extractall(dest_directory)
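The learning-rate schedule built in train() above can be worked out without TensorFlow. The sketch below mirrors the staircase form of exponential decay, initial_lr * decay_factor ** floor(global_step / decay_steps), using the constants from this file as defaults; the function name `learning_rate` is hypothetical and the code is an illustration of the schedule, not the library implementation.

```python
def learning_rate(global_step, batch_size=128, examples_per_epoch=50000,
                  epochs_per_decay=350.0, initial_lr=0.1, decay_factor=0.1):
    """Staircase exponential decay with the constants used in cifar10.py."""
    num_batches_per_epoch = examples_per_epoch / batch_size
    decay_steps = int(num_batches_per_epoch * epochs_per_decay)
    # Integer division gives the staircase: the rate only changes at
    # multiples of decay_steps.
    return initial_lr * decay_factor ** (global_step // decay_steps)


if __name__ == "__main__":
    for step in (0, 100000, 136718, 273436):
        print(step, learning_rate(step))
```

With batch size 128 the first drop comes at step 136718, so a run that stops at 100K steps trains at the initial rate of 0.1 throughout.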


"""Routine for decoding the CIFAR-10 binary file format."""

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import os

from six.moves import xrange  # pylint: disable=redefined-builtin
import tensorflow as tf

# Process images of this size. Note that this differs from the original CIFAR
# image size of 32 x 32. If one alters this number, then the entire model
# architecture will change and any model would need to be retrained.
IMAGE_SIZE = 24

# Global constants describing the CIFAR-10 data set.
NUM_CLASSES = 10
NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN = 50000
NUM_EXAMPLES_PER_EPOCH_FOR_EVAL = 10000

def read_cifar10(filename_queue):
  """Reads and parses examples from CIFAR10 data files.

  Recommendation: if you want N-way read parallelism, call this function
  N times. This will give you N independent Readers reading different
  files & positions within those files, which will give better mixing of
  examples.

  Args:
    filename_queue: A queue of strings with the filenames to read from.

  Returns:
    An object representing a single example, with the following fields:
      height: number of rows in the result (32)
      width: number of columns in the result (32)
      depth: number of color channels in the result (3)
      key: a scalar string Tensor describing the filename & record number
        for this example.
      label: an int32 Tensor with the label in the range 0..9.
      uint8image: a [height, width, depth] uint8 Tensor with the image data
  """

  class CIFAR10Record(object):
    pass
  result = CIFAR10Record()

  # Dimensions of the images in the CIFAR-10 dataset.
  # See http://www.cs.toronto.edu/~kriz/cifar.html for a description of the
  # input format.
  label_bytes = 1  # 2 for CIFAR-100
  result.height = 32
  result.width = 32
  result.depth = 3
  image_bytes = result.height * result.width * result.depth
  # Every record consists of a label followed by the image, with a
  # fixed number of bytes for each.
  record_bytes = label_bytes + image_bytes

  # Read a record, getting filenames from the filename_queue. No
  # header or footer in the CIFAR-10 format, so we leave header_bytes
  # and footer_bytes at their default of 0.
  reader = tf.FixedLengthRecordReader(record_bytes=record_bytes)
  result.key, value = reader.read(filename_queue)

  # Convert from a string to a vector of uint8 that is record_bytes long.
  record_bytes = tf.decode_raw(value, tf.uint8)

  # The first bytes represent the label, which we convert from uint8->int32.
  result.label = tf.cast(
      tf.strided_slice(record_bytes, [0], [label_bytes]), tf.int32)

  # The remaining bytes after the label represent the image, which we reshape
  # from [depth * height * width] to [depth, height, width].
  depth_major = tf.reshape(
      tf.strided_slice(record_bytes, [label_bytes],
                       [label_bytes + image_bytes]),
      [result.depth, result.height, result.width])
  # Convert from [depth, height, width] to [height, width, depth].
  result.uint8image = tf.transpose(depth_major, [1, 2, 0])

  return result

def _generate_image_and_label_batch(image, label, min_queue_examples,
                                    batch_size, shuffle):
  """Construct a queued batch of images and labels.

  Args:
    image: 3-D Tensor of [height, width, 3] of type float32.
    label: 1-D Tensor of type int32.
    min_queue_examples: int32, minimum number of samples to retain
      in the queue that provides batches of examples.
    batch_size: Number of images per batch.
    shuffle: boolean indicating whether to use a shuffling queue.

  Returns:
    images: Images. 4D tensor of [batch_size, height, width, 3] size.
    labels: Labels. 1D tensor of [batch_size] size.
  """
  # Create a queue that shuffles the examples, and then
  # read 'batch_size' images + labels from the example queue.
  num_preprocess_threads = 16
  if shuffle:
    images, label_batch = tf.train.shuffle_batch(
        [image, label],
        batch_size=batch_size,
        num_threads=num_preprocess_threads,
        capacity=min_queue_examples + 3 * batch_size,
        min_after_dequeue=min_queue_examples)
  else:
    images, label_batch = tf.train.batch(
        [image, label],
        batch_size=batch_size,
        num_threads=num_preprocess_threads,
        capacity=min_queue_examples + 3 * batch_size)

  # Display the training images in the visualizer.
  tf.summary.image('images', images)

  return images, tf.reshape(label_batch, [batch_size])

def distorted_inputs(data_dir, batch_size):
    """Construct distorted input for CIFAR training using the Reader ops.

    Args:
        data_dir: Path to the CIFAR-10 data directory.
        batch_size: Number of images per batch.

    Returns:
        images: Images. 4D tensor of [batch_size, IMAGE_SIZE, IMAGE_SIZE, 3] size.
        labels: Labels. 1D tensor of [batch_size] size.
    """
    filenames = [os.path.join(data_dir, 'data_batch_%d.bin' % i)
                 for i in range(1, 6)]
    for f in filenames:
        if not tf.gfile.Exists(f):
            raise ValueError('Failed to find file: ' + f)

    # Create a queue that produces the filenames to read.
    filename_queue = tf.train.string_input_producer(filenames)

    # Read examples from files in the filename queue.
    read_input = read_cifar10(filename_queue)
    reshaped_image = tf.cast(read_input.uint8image, tf.float32)

    height = IMAGE_SIZE
    width = IMAGE_SIZE

    # Image processing for training the network. Note the many random
    # distortions applied to the image.

    # Randomly crop a [height, width] section of the image.
    distorted_image = tf.random_crop(reshaped_image, [height, width, 3])

    # Randomly flip the image horizontally.
    distorted_image = tf.image.random_flip_left_right(distorted_image)

    # Because these operations are not commutative, consider randomizing
    # the order of their operation.
    # NOTE: since per_image_standardization zeros the mean and makes
    # the stddev unit, this likely has no effect; see tensorflow#1458.
    distorted_image = tf.image.random_brightness(distorted_image,
                                                 max_delta=63)
    distorted_image = tf.image.random_contrast(distorted_image,
                                               lower=0.2, upper=1.8)

    # Subtract off the mean and divide by the standard deviation of the pixels.
    float_image = tf.image.per_image_standardization(distorted_image)

    # Set the shapes of tensors.
    float_image.set_shape([height, width, 3])
    read_input.label.set_shape([1])

    # Ensure that the random shuffling has good mixing properties.
    min_fraction_of_examples_in_queue = 0.4
    min_queue_examples = int(NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN *
                             min_fraction_of_examples_in_queue)
    print('Filling queue with %d CIFAR images before starting to train. '
          'This will take a few minutes.' % min_queue_examples)

    # Generate a batch of images and labels by building up a queue of examples.
    return _generate_image_and_label_batch(float_image, read_input.label,
                                           min_queue_examples, batch_size,
                                           shuffle=True)
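The NOTE in the training input function observes that the random brightness step likely has no effect once per-image standardization is applied. The following is a minimal pure-Python sketch of why, under two assumptions: that a brightness adjustment amounts to adding one constant to every pixel, and that standardization is `(x - mean) / max(stddev, 1/sqrt(N))`. The helper `standardize` and the pixel values are hypothetical and only illustrate the arithmetic; this is not TensorFlow's implementation.

```python
import math

def standardize(pixels):
    """Scale a flat list of pixel values to zero mean and unit stddev."""
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n
    # Lower bound on the divisor guards against uniform images.
    adjusted_stddev = max(math.sqrt(variance), 1.0 / math.sqrt(n))
    return [(p - mean) / adjusted_stddev for p in pixels]

pixels = [12.0, 40.0, 200.0, 95.0, 7.0, 180.0]  # hypothetical pixel values
brighter = [p + 63.0 for p in pixels]           # constant brightness shift

plain = standardize(pixels)
shifted = standardize(brighter)

# The constant shift moves the mean by the same amount, so it cancels out.
max_diff = max(abs(a - b) for a, b in zip(plain, shifted))
print(max_diff)
```

The printed difference should be at floating-point rounding level, which is consistent with the NOTE: a constant offset is removed when the mean is subtracted.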

def inputs(eval_data, data_dir, batch_size):
    """Construct input for CIFAR evaluation using the Reader ops.

    Args:
        eval_data: bool, indicating if one should use the train or eval data set.
        data_dir: Path to the CIFAR-10 data directory.
        batch_size: Number of images per batch.

    Returns:
        images: Images. 4D tensor of [batch_size, IMAGE_SIZE, IMAGE_SIZE, 3] size.
        labels: Labels. 1D tensor of [batch_size] size.
    """
    if not eval_data:
        filenames = [os.path.join(data_dir, 'data_batch_%d.bin' % i)
                     for i in range(1, 6)]
        num_examples_per_epoch = NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN
    else:
        filenames = [os.path.join(data_dir, 'test_batch.bin')]
        num_examples_per_epoch = NUM_EXAMPLES_PER_EPOCH_FOR_EVAL

    for f in filenames:
        if not tf.gfile.Exists(f):
            raise ValueError('Failed to find file: ' + f)

    # Create a queue that produces the filenames to read.
    filename_queue = tf.train.string_input_producer(filenames)

    # Read examples from files in the filename queue.
    read_input = read_cifar10(filename_queue)
    reshaped_image = tf.cast(read_input.uint8image, tf.float32)

    height = IMAGE_SIZE
    width = IMAGE_SIZE

    # Image processing for evaluation.
    # Crop the central [height, width] of the image.
    resized_image = tf.image.resize_image_with_crop_or_pad(reshaped_image,
                                                           height, width)

    # Subtract off the mean and divide by the standard deviation of the pixels.
    float_image = tf.image.per_image_standardization(resized_image)

    # Set the shapes of tensors.
    float_image.set_shape([height, width, 3])
    read_input.label.set_shape([1])

    # Ensure that the random shuffling has good mixing properties.
    min_fraction_of_examples_in_queue = 0.4
    min_queue_examples = int(num_examples_per_epoch *
                             min_fraction_of_examples_in_queue)

    # Generate a batch of images and labels by building up a queue of examples.
    return _generate_image_and_label_batch(float_image, read_input.label,
                                           min_queue_examples, batch_size,
                                           shuffle=False)
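For evaluation, the function above takes the central [height, width] region of each image instead of a random one. A rough pure-Python sketch of central cropping on a nested-list image follows. `central_crop` is a hypothetical helper that handles only the crop case (no padding), and the way it rounds the offsets is an assumption, not a statement about how `tf.image.resize_image_with_crop_or_pad` works internally.

```python
def central_crop(image, target_height, target_width):
    """Return the centered target_height x target_width window of a 2-D image."""
    height = len(image)
    width = len(image[0])
    if target_height > height or target_width > width:
        raise ValueError('target size must not exceed the image size')
    top = (height - target_height) // 2
    left = (width - target_width) // 2
    return [row[left:left + target_width]
            for row in image[top:top + target_height]]

# A 4x4 image whose pixel value encodes its (row, column) position.
image = [[10 * r + c for c in range(4)] for r in range(4)]
cropped = central_crop(image, 2, 2)
print(cropped)  # [[11, 12], [21, 22]]
```

Because the window is fixed, the same input always yields the same crop, which is what makes it suitable for evaluation.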

# Copyright 2015 The TensorFlow Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ==============================================================================
"""A very simple MNIST classifier.

See extensive documentation at
https://www.tensorflow.org/get_started/mnist/beginners
"""
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import argparse
import pprint
import sys

from tensorflow.examples.tutorials.mnist import input_data

import tensorflow as tf

FLAGS = None

def main(_):
    # Import data
    mnist = input_data.read_data_sets(FLAGS.data_dir, one_hot=True)

    # Create the model
    x = tf.placeholder(tf.float32, [None, 784])
    W = tf.Variable(tf.zeros([784, 10]))
    b = tf.Variable(tf.zeros([10]))
    y = tf.matmul(x, W) + b

    # Define loss and optimizer
    y_ = tf.placeholder(tf.float32, [None, 10])
    # Debug output: prints the placeholder tensor object, not its values.
    print(47, y_)

    # The raw formulation of cross-entropy,
    #
    #   tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(tf.nn.softmax(y)),
    #                                 reduction_indices=[1]))
    #
    # can be numerically unstable.
    #
    # So here we use tf.nn.softmax_cross_entropy_with_logits on the raw
    # outputs of 'y', and then average across the batch.
    cross_entropy = tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits(labels=y_, logits=y))
    train_step = tf.train.GradientDescentOptimizer(0.5).minimize(cross_entropy)

    sess = tf.InteractiveSession()
    tf.global_variables_initializer().run()

    # Train
    for _ in range(1000):
        batch_xs, batch_ys = mnist.train.next_batch(100)
        # Debug output: dump each batch of images and one-hot labels.
        print('batch_xs, batch_ys', batch_xs, batch_ys)
        pprint.pprint(batch_xs)
        pprint.pprint(batch_ys)
        sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})

    # Test trained model
    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
    # Debug output: these print the tensor object; its values require sess.run.
    print('correct_prediction', correct_prediction)
    pprint.pprint(correct_prediction)
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    print(sess.run(accuracy, feed_dict={x: mnist.test.images,
                                        y_: mnist.test.labels}))

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--data_dir', type=str,
                        default='/tmp/tensorflow/mnist/input_data',
                        help='Directory for storing input data')
    FLAGS, unparsed = parser.parse_known_args()
    tf.app.run(main=main, argv=[sys.argv[0]] + unparsed)
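The comment in `main` says the raw cross-entropy formulation can be numerically unstable. The sketch below illustrates the problem for a single example in pure Python. It assumes the log-sum-exp trick as the stable form, which is a common technique and not necessarily how the TensorFlow op is implemented; the function names and logit values are hypothetical.

```python
import math

def naive_cross_entropy(logits, one_hot):
    """Softmax followed by log, as in the raw formulation."""
    exps = [math.exp(z) for z in logits]  # overflows for large logits
    total = sum(exps)
    return -sum(t * math.log(e / total)
                for t, e in zip(one_hot, exps) if t > 0)

def stable_cross_entropy(logits, one_hot):
    """Cross-entropy computed directly from logits via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(t * (z - log_sum_exp) for t, z in zip(one_hot, logits))

one_hot = [1.0, 0.0, 0.0]

# Moderate logits: both formulations agree.
small = [2.0, 1.0, 0.1]
print(naive_cross_entropy(small, one_hot), stable_cross_entropy(small, one_hot))

# Large logits: exp() overflows in the naive version.
large = [1000.0, 0.0, -1000.0]
try:
    naive_cross_entropy(large, one_hot)
    naive_failed = False
except OverflowError:
    naive_failed = True
print(naive_failed, stable_cross_entropy(large, one_hot))
```

Subtracting the maximum logit before exponentiating keeps every exponent at or below zero, so the stable version never overflows.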

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]]
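Each row printed above is a one-hot label: ten entries, with a single 1 at the index of the digit. A short pure-Python sketch for turning such rows back into digits follows; `one_hot_to_digit` is a hypothetical helper, and the rows are copied from the last five rows of the output above.

```python
def one_hot_to_digit(row):
    """Return the index of the single 1.0 entry in a one-hot row."""
    if sorted(row) != [0.0] * (len(row) - 1) + [1.0]:
        raise ValueError('row is not one-hot: %r' % (row,))
    return row.index(1.0)

# The last five label rows printed above.
rows = [
    [0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],
    [0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],
    [0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],
    [0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],
    [0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],
]
digits = [one_hot_to_digit(row) for row in rows]
print(digits)  # [4, 8, 3, 1, 6]
```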

array([[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

...,

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.]], dtype=float32)

array([[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.]])
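One-hot label arrays like the one above are what the script's test step compares against: it takes the argmax of the model output and of the label for each row, then averages the matches. A pure-Python sketch of that calculation follows, with hypothetical three-class logits and helper names chosen only for illustration.

```python
def argmax(values):
    """Index of the largest value (the first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])

def accuracy(logits_batch, labels_batch):
    """Fraction of rows where the predicted class matches the label."""
    correct = [argmax(logits) == argmax(label)
               for logits, label in zip(logits_batch, labels_batch)]
    return sum(correct) / len(correct)

# Hypothetical logits; labels are one-hot, as in the printed arrays.
logits_batch = [
    [2.0, 0.5, -1.0],
    [0.1, 0.2, 3.0],
    [1.5, 1.6, 0.0],
    [0.0, -2.0, -3.0],
]
labels_batch = [
    [1., 0., 0.],
    [0., 0., 1.],
    [1., 0., 0.],
    [1., 0., 0.],
]
result = accuracy(logits_batch, labels_batch)
print(result)  # 0.75
```

Only the third row is misclassified here (predicted class 1, label 0), so three of four rows match.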

batch_xs, batch_ys [[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

...,

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]] [[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]]

array([[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

...,

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.]], dtype=float32)

array([[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.]])

batch_xs, batch_ys [[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

...,

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]] [[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]]

array([[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

...,

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.]], dtype=float32)

array([[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.]])

batch_xs, batch_ys [[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

...,

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]] [[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]]

array([[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

...,

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.]], dtype=float32)

array([[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 1., 0., 0., 0., 0., 0., 0., 0., 0.]])
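
Each row of the label array above is a one-hot vector of length 10: the position of the single 1 is the class index for that sample. The following is a minimal sketch, assuming NumPy is installed, of how such rows could be converted to integer labels with `np.argmax` and back again. The three sample rows are copied from the first three rows of the array above; the names `labels` and `one_hot` are illustrative and do not appear in the original session.

```python
import numpy as np

# Three one-hot rows copied from the start of the label array printed above.
batch_ys = np.array([
    [0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],
    [0., 0., 0., 0., 0., 0., 0., 0., 0., 1.],
    [0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],
], dtype=np.float32)

# argmax along axis 1 returns the column index of the single 1 in each row.
labels = np.argmax(batch_ys, axis=1)
print(labels.tolist())  # [7, 9, 5]

# Going the other way: rebuild one-hot rows by indexing an identity matrix
# (hypothetical helper step, shown only to illustrate the encoding).
one_hot = np.eye(10, dtype=np.float32)[labels]
print(np.array_equal(one_hot, batch_ys))  # True
```

Reading the labels as integers this way makes a long one-hot dump much easier to scan than the raw rows.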

batch_xs, batch_ys [[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

...,

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]

[ 0. 0. 0. ..., 0. 0. 0.]] [[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

[ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]