COMPUTER ORGANIZATION AND ARCHITECTURE DESIGNING FOR PERFORMANCE NINTH EDITION

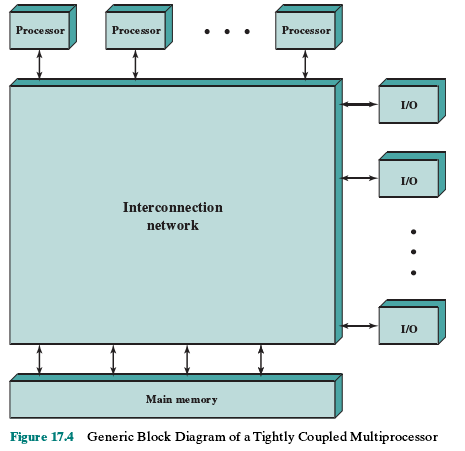

Figure 17.4 depicts in general terms the organization of a multiprocessor system. There are two or more processors. Each processor is self-contained, including a control unit, ALU, registers, and, typically, one or more levels of cache. Each processor has access to a shared main memory and the I/O devices through some form of interconnection mechanism. The processors can communicate with each other through memory (messages and status information left in common data areas). It may also be possible for processors to exchange signals directly. The memory is often organized so that multiple simultaneous accesses to separate blocks of memory are possible. In some configurations, each processor may also have its own private main memory and I/O channels in addition to the shared resources.
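Communication through a common data area can be illustrated with a minimal sketch. Here two Python threads stand in for two processors, a dictionary named `mailbox` stands in for the shared data area, and a `threading.Event` named `ready` stands in for a status word; all of these names, and the message text, are assumptions made for illustration and do not model any particular hardware.

```python
import threading

def exchange():
    """Pass one message between two 'processors' via a shared data area."""
    mailbox = {"message": None}   # common data area visible to both threads
    ready = threading.Event()     # status flag: set once the message is in place
    received = []

    def producer():
        mailbox["message"] = "block 7 loaded"  # leave the message in shared memory
        ready.set()                            # then publish the status change

    def consumer():
        ready.wait()                           # wait until the status flag is set
        received.append(mailbox["message"])    # read the message from shared memory

    workers = [threading.Thread(target=consumer), threading.Thread(target=producer)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return received

print(exchange())  # ['block 7 loaded']
```

The consumer never reads the mailbox until the flag is set, which is the ordering a status word in a common data area is meant to guarantee.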

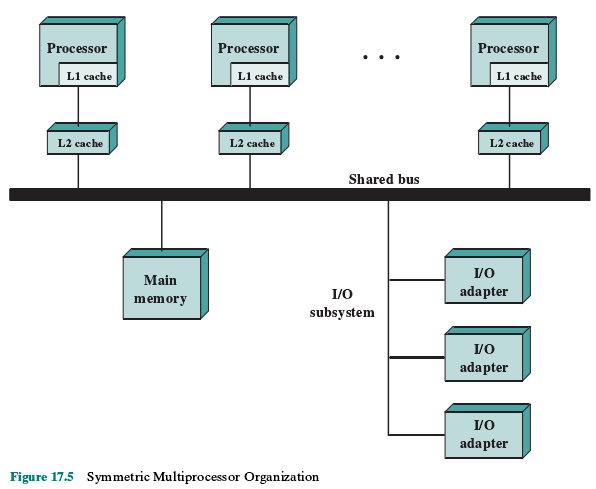

The most common organization for personal computers, workstations, and servers is the time-shared bus. The time-shared bus is the simplest mechanism for constructing a multiprocessor system (Figure 17.5). The structure and interfaces are basically the same as for a single-processor system that uses a bus interconnection. The bus consists of control, address, and data lines. To facilitate DMA transfers from I/O subsystems to processors, the following features are provided:

• Addressing: It must be possible to distinguish modules on the bus to determine the source and destination of data.
• Arbitration: Any I/O module can temporarily function as “master.” A mechanism is provided to arbitrate competing requests for bus control, using some sort of priority scheme.
• Time-sharing: When one module is controlling the bus, other modules are locked out and must, if necessary, suspend operation until bus access is achieved.
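The three features above can be sketched in a few lines. The following is a minimal illustration, not a description of any real bus protocol: the names `BusRequest`, `arbitrate`, and `run_bus` are hypothetical, and the rule that a lower `priority` number wins (with ties broken by module address) is an assumption of this sketch, since the text only calls for "some sort of priority scheme."

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BusRequest:
    module_id: int  # addressing: identifies the module on the bus
    priority: int   # assumed convention: lower number = higher priority

def arbitrate(requests):
    """Arbitration: choose one bus master; return (winner, still_waiting)."""
    if not requests:
        return None, []
    winner = min(requests, key=lambda r: (r.priority, r.module_id))
    waiting = [r for r in requests if r is not winner]
    return winner, waiting

def run_bus(requests):
    """Time-sharing: grant the bus to one module at a time; the rest wait."""
    grant_order = []
    pending = list(requests)
    while pending:
        winner, pending = arbitrate(pending)
        grant_order.append(winner.module_id)
    return grant_order

print(run_bus([BusRequest(3, 2), BusRequest(1, 0), BusRequest(2, 2)]))  # [1, 2, 3]
```

Module 1 wins first because of its priority; modules 2 and 3 share a priority level, so the lower address is served first while the other remains locked out for one more cycle.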

These uniprocessor features are directly usable in an SMP organization. In this latter case, there are now multiple processors as well as multiple I/O processors all attempting to gain access to one or more memory modules via the bus.

The bus organization has several attractive features:

• Simplicity: This is the simplest approach to multiprocessor organization. The physical interface and the addressing, arbitration, and time-sharing logic of each processor remain the same as in a single-processor system.
• Flexibility: It is generally easy to expand the system by attaching more processors to the bus.
• Reliability: The bus is essentially a passive medium, and the failure of any attached device should not cause failure of the whole system.

The main drawback to the bus organization is performance. All memory references pass through the common bus. Thus, the bus cycle time limits the speed of the system. To improve performance, it is desirable to equip each processor with a cache memory. This should reduce the number of bus accesses dramatically. Typically, workstation and PC SMPs have two levels of cache, with the L1 cache internal (same chip as the processor) and the L2 cache either internal or external. Some processors now employ an L3 cache as well.
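A rough back-of-the-envelope calculation shows why caches matter on a shared bus. The sketch below assumes a deliberately simplified model in which every cache miss costs exactly one bus cycle, and it ignores write traffic and multi-word line fills; the function name `bus_utilization` and all of the numbers are hypothetical, chosen only to illustrate the effect.

```python
def bus_utilization(processors, refs_per_sec, miss_rate, bus_cycle_sec):
    """Fraction of bus time demanded, assuming one bus cycle per cache miss."""
    bus_accesses_per_sec = processors * refs_per_sec * miss_rate
    return bus_accesses_per_sec * bus_cycle_sec

# Hypothetical system: 4 processors, 100 million references/s each, 10 ns bus cycle.
no_cache = bus_utilization(4, 100e6, 1.0, 10e-9)     # every reference uses the bus
with_cache = bus_utilization(4, 100e6, 0.05, 10e-9)  # assumed 5% miss rate

print(f"{no_cache:.2f}")    # 4.00 (demand is four times what the bus can carry)
print(f"{with_cache:.2f}")  # 0.20 (the bus is busy about a fifth of the time)
```

Under these assumptions, a value above 1.0 means the processors would spend most of their time waiting for the bus, while the cached configuration leaves room to attach more processors.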

The use of caches introduces some new design considerations. Because each local cache contains an image of a portion of memory, if a word is altered in one cache, it could conceivably invalidate a word in another cache. To prevent this, the other processors must be alerted that an update has taken place. This problem is known as the cache coherence problem and is typically addressed in hardware rather than by the operating system. We address this issue in Section 17.4.
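The problem can be made concrete with a toy model. The sketch below uses a hypothetical `Cache` class with a write-through policy, and it "alerts" other caches by invalidating their copies; this is one simple way to keep copies consistent, chosen for illustration, and is not necessarily the scheme developed in Section 17.4.

```python
class Cache:
    """Toy private cache in front of a shared memory (a plain dict)."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}  # address -> locally cached value

    def read(self, addr):
        if addr not in self.lines:                # miss: fetch from shared memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]                   # hit: may be stale

    def write(self, addr, value, peers=()):
        self.lines[addr] = value
        self.memory[addr] = value                 # write-through (assumed policy)
        for peer in peers:
            peer.lines.pop(addr, None)            # alert peers: drop their copy

memory = {0x10: 1}
cache_a, cache_b = Cache(memory), Cache(memory)

cache_b.read(0x10)                        # B now holds a copy of the word
cache_a.write(0x10, 2)                    # A updates it without alerting B
stale = cache_b.read(0x10)                # B still sees the old value

cache_a.write(0x10, 3, peers=[cache_b])   # A updates again and alerts B
fresh = cache_b.read(0x10)                # B misses and refetches from memory

print(stale, fresh)  # 1 3
```

The first read returns the outdated value even though main memory is already correct, which is exactly the inconsistency the text describes; once the other cache is notified, its next read picks up the new value.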