Preparation:

- If vim is not installed yet, install it.

- If you get the error `Could not get lock /var/lib/dpkg/lock`, see:

https://jingyan.baidu.com/article/636f38bb861422d6b8461024.html

- Install openssh-server

- Check that SSH is running

If `sshd` is listed, the SSH server has started successfully.

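- Find the host's IP address

```shell
ifconfig -a
```

The address after `inet` is your IP. (On newer Ubuntu releases `ifconfig` comes from the net-tools package, which may need to be installed first.)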

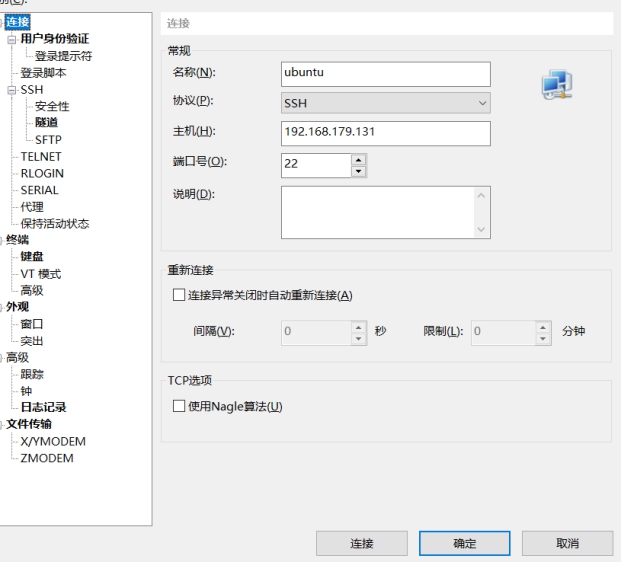
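To pull just the IPv4 addresses out of the `ifconfig -a` output, a short awk filter is enough. This is a sketch, assuming the net-tools format where the address directly follows `inet` (older versions print `inet addr:...`, which it does not handle); `list_ipv4` is a hypothetical helper name:

```shell
#!/bin/sh
# list_ipv4: read `ifconfig -a` output on stdin and print every IPv4 address
# except loopback. Assumes lines like "inet 192.168.179.138  netmask ...".
list_ipv4() {
  # awk's default field splitting drops the leading indentation, so $1 is "inet"
  # (lines starting with "inet6" do not match).
  awk '$1 == "inet" && $2 != "127.0.0.1" { print $2 }'
}

# Typical use: ifconfig -a | list_ipv4
```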

- Connect with Xshell

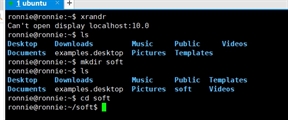

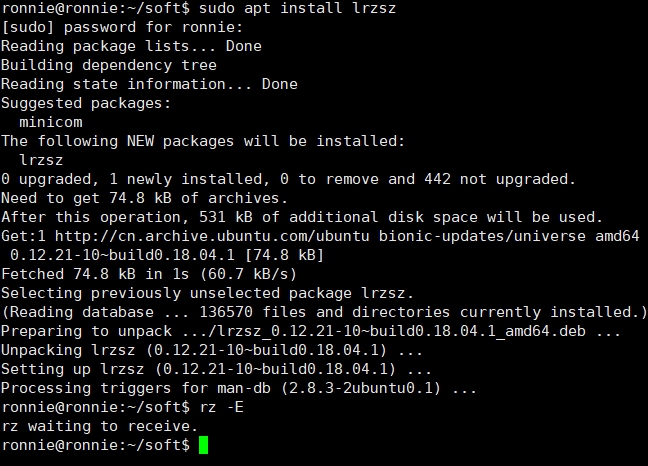

- Create a `soft` directory

- Install lrzsz. After that you can upload files by dragging them into the Xshell window.
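The package and directory steps above can be collected into one small script. This is a minimal sketch, assuming Ubuntu with apt and that the `soft` directory lives in your home directory (the original does not say where). `run` and `prepare` are hypothetical helper names. `pgrep -l sshd` is used here as one way to check for the SSH daemon, and `DRY_RUN=1` only prints the commands:

```shell
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

prepare() {
  run sudo apt-get update
  # vim for editing, openssh-server for Xshell access, lrzsz for drag-and-drop upload
  run sudo apt-get install -y vim openssh-server lrzsz
  # Lists the sshd process if the SSH server is running
  run pgrep -l sshd
  # Assumed location for the soft directory
  run mkdir -p "$HOME/soft"
}
```

After sourcing the script, `DRY_RUN=1 prepare` shows what would be executed before you run `prepare` for real.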

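- JDK setup

(1). Upload the JDK tarball to the `soft` directory, extract it with `tar -zxvf <file name>`, and move the extracted directory into place with `sudo mv <extracted directory> /usr/lib/jdk1.8`.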

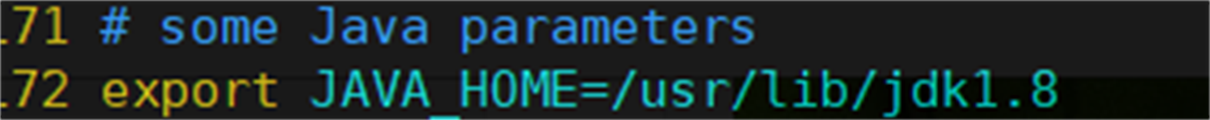

(2). Open the file with `sudo vim ~/.bashrc` and append the JDK paths at the end.
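The original showed the exact lines in a screenshot that is not reproduced here. Below is a typical JDK snippet, assuming the JDK sits in /usr/lib/jdk1.8 as in step (1). `JRE_HOME` and `CLASSPATH` are common conventions for JDK 8 rather than strict requirements:

```shell
# JDK environment (append to the end of ~/.bashrc)
export JAVA_HOME=/usr/lib/jdk1.8
export JRE_HOME=${JAVA_HOME}/jre
export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib
# Put the JDK's bin directory first so its java/javac win over any other install
export PATH=${JAVA_HOME}/bin:$PATH
```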

(3). Once configured, make the changes take effect:

```shell
source ~/.bashrc
```

Then run the following commands in order. In each one, the first path (under /usr/bin) is the link to be created, the second path is the corresponding JDK binary stored under /usr/lib/jdk1.8, and 300 is the priority:

```shell
sudo update-alternatives --install /usr/bin/java java /usr/lib/jdk1.8/bin/java 300
sudo update-alternatives --install /usr/bin/javac javac /usr/lib/jdk1.8/bin/javac 300
sudo update-alternatives --install /usr/bin/jar jar /usr/lib/jdk1.8/bin/jar 300
sudo update-alternatives --install /usr/bin/javah javah /usr/lib/jdk1.8/bin/javah 300
sudo update-alternatives --install /usr/bin/javap javap /usr/lib/jdk1.8/bin/javap 300
```
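The five commands above differ only in the tool name, so they can also be written as a loop. This sketch mirrors them exactly: the link goes under /usr/bin, the JDK binary is under /usr/lib/jdk1.8, and the priority is 300. `run` and `register_jdk_tools` are hypothetical names, and `DRY_RUN=1` only prints the commands:

```shell
#!/bin/sh
JDK_DIR=${JDK_DIR:-/usr/lib/jdk1.8}   # where step (1) put the JDK
PRIORITY=${PRIORITY:-300}             # same priority as the manual commands

# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

register_jdk_tools() {
  for tool in java javac jar javah javap; do
    # link under /usr/bin, alternative name, real binary in the JDK, priority
    run sudo update-alternatives --install "/usr/bin/$tool" "$tool" \
      "$JDK_DIR/bin/$tool" "$PRIORITY"
  done
}
```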

(4). Check that the configuration works

Run `java -version`. If it prints the version, the installation succeeded.
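To check the version in a script rather than by eye, note that `java -version` writes to stderr. This sketch uses a hypothetical `java_version` helper to extract the quoted version string, which is 1.8.0_191 on the machine in this guide:

```shell
#!/bin/sh
# java_version: print the quoted version from `java -version` output on stdin.
# Matches both `java version "..."` (Oracle) and `openjdk version "..."`.
java_version() {
  awk -F'"' '/ version "/ { print $2; exit }'
}

# Usage: java -version 2>&1 | java_version
```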

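- Hadoop standalone installation

(1). Download the Hadoop tar.gz package (already compiled) from the Apache website. If you download the src.tar.gz package instead, you have to compile it yourself. Upload the tarball, extract it with `tar -zxvf`, move the extracted directory to /usr/local, and rename it with `mv hadoop-xxxx hadoop`.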
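Step (1) can also be run as a short set of commands. This sketch assumes version 3.1.2 (the version used in this guide) and the Apache archive URL below; any Apache mirror serving the same file would work. It also assumes /usr/local/hadoop does not exist yet, so that `mv` renames instead of nesting. `install_hadoop` is a hypothetical name, and `DRY_RUN=1` only prints the commands:

```shell
#!/bin/sh
HADOOP_VERSION=${HADOOP_VERSION:-3.1.2}
# Assumed download location (Apache archive); a regular mirror works too.
URL="https://archive.apache.org/dist/hadoop/common/hadoop-$HADOOP_VERSION/hadoop-$HADOOP_VERSION.tar.gz"

# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

install_hadoop() {
  run wget "$URL"
  run tar -zxvf "hadoop-$HADOOP_VERSION.tar.gz"
  # Move and rename in one step, giving /usr/local/hadoop
  run sudo mv "hadoop-$HADOOP_VERSION" /usr/local/hadoop
}
```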

(2). Open the configuration file with `vi ~/.bashrc` and append the following at the end. `HADOOP_HOME` must point to the directory created in step (1), /usr/local/hadoop:

```shell
#HADOOP VARIABLES
export HADOOP_HOME=/usr/local/hadoop
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"
```

Run `source ~/.bashrc` to make the environment variables take effect.

(3). Check the Hadoop path configuration

```
root@ronnie:/home/ronnie# hadoop version
Hadoop 3.1.2
Source code repository https://github.com/apache/hadoop.git -r 1019dde65bcf12e05ef48ac71e84550d589e5d9a
Compiled by sunilg on 2019-01-29T01:39Z
Compiled with protoc 2.5.0
From source with checksum 64b8bdd4ca6e77cce75a93eb09ab2a9
This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-3.1.2.jar
```

(4). Modify the Hadoop configuration

```shell
vim /usr/local/hadoop/etc/hadoop/hadoop-env.sh
```

Uncomment the `export JAVA_HOME=` line and set it to the absolute path of the JDK:

```shell
export JAVA_HOME=/usr/lib/jdk1.8
```
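The same edit can be made with sed instead of by hand. This sketch assumes GNU sed (for `-i`) and a file that contains a line beginning with `export JAVA_HOME=` or `# export JAVA_HOME=`, as the stock hadoop-env.sh does. `set_java_home` is a hypothetical helper:

```shell
#!/bin/sh
# set_java_home FILE JDK_DIR: uncomment (or overwrite) the `export JAVA_HOME=`
# line in a Hadoop env script and point it at JDK_DIR.
set_java_home() {
  # "^#* *" also matches the commented-out form "# export JAVA_HOME="
  sed -i "s|^#* *export JAVA_HOME=.*|export JAVA_HOME=$2|" "$1"
}

# Usage: set_java_home /usr/local/hadoop/etc/hadoop/hadoop-env.sh /usr/lib/jdk1.8
```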

(5). Run a WordCount test

```
root@ronnie:~# cd /usr/local/hadoop/
root@ronnie:/usr/local/hadoop# cp README.txt input
root@ronnie:/usr/local/hadoop# ./bin/hadoop jar share/hadoop/mapreduce/sources/hadoop-mapreduce-examples-3.1.2-sources.jar org.apache.hadoop.examples.WordCount input output
```

Output:

```
File System Counters
    FILE: Number of bytes read=639494
    FILE: Number of bytes written=1632872
    FILE: Number of read operations=0
    FILE: Number of large read operations=0
    FILE: Number of write operations=0
Map-Reduce Framework
    Map input records=31
    Map output records=179
    Map output bytes=2055
    Map output materialized bytes=1836
    Input split bytes=93
    Combine input records=179
    Combine output records=131
    Reduce input groups=131
    Reduce shuffle bytes=1836
    Reduce input records=131
    Reduce output records=131
    Spilled Records=262
    Shuffled Maps =1
    Failed Shuffles=0
    Merged Map outputs=1
    GC time elapsed (ms)=5
    Total committed heap usage (bytes)=434634752
Shuffle Errors
    BAD_ID=0
    CONNECTION=0
    IO_ERROR=0
    WRONG_LENGTH=0
    WRONG_MAP=0
    WRONG_REDUCE=0
File Input Format Counters
    Bytes Read=1366
File Output Format Counters
    Bytes Written=1326
```

View the result with `cat output/*`:

```
(BIS), 1
(ECCN) 1
(TSU) 1
(see 1
5D002.C.1, 1
740.13) 1
<http://www.wassenaar.org/> 1
Administration 1
Apache 1
BEFORE 1
BIS 1
Bureau 1
Commerce, 1
Commodity 1
Control 1
Core 1
Department 1
ENC 1
Exception 1
Export 2
For 1
Foundation 1
Government 1
Hadoop 1
Hadoop, 1
Industry 1
Jetty 1
License 1
Number 1
Regulations, 1
SSL 1
Section 1
Security 1
See 1
Software 2
Technology 1
The 4
This 1
U.S. 1
Unrestricted 1
about 1
algorithms. 1
and 6
and/or 1
another 1
any 1
as 1
asymmetric 1
at: 2
both 1
by 1
check 1
classified 1
code 1
code. 1
concerning 1
country 1
country's 1
country, 1
cryptographic 3
currently 1
details 1
distribution 2
eligible 1
encryption 3
exception 1
export 1
following 1
for 3
form 1
from 1
functions 1
has 1
have 1
http://hadoop.apache.org/core/ 1
http://wiki.apache.org/hadoop/ 1
if 1
import, 2
in 1
included 1
includes 2
information 2
information. 1
is 1
it 1
latest 1
laws, 1
libraries 1
makes 1
manner 1
may 1
more 2
mortbay.org. 1
object 1
of 5
on 2
or 2
our 2
performing 1
permitted. 1
please 2
policies 1
possession, 2
project 1
provides 1
re-export 2
regulations 1
reside 1
restrictions 1
security 1
see 1
software 2
software, 2
software. 2
software: 1
source 1
the 8
this 3
to 2
under 1
use, 2
uses 1
using 2
visit 1
website 1
which 2
wiki, 1
with 1
written 1
you 1
your 1
```
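Two things help when repeating this test. First, MapReduce refuses to write into an output directory that already exists, so `output` must be removed before a rerun. Second, the compiled examples jar can be invoked with the program name `wordcount` instead of the `-sources` jar plus a class name. The result can also be cross-checked without Hadoop. WordCount splits lines on whitespace and keeps case and punctuation, so an awk tally sorted in byte order should closely match `output/part-r-00000`. This is a sketch. The function names are hypothetical, the paths assume /usr/local/hadoop in standalone (local file system) mode, and awk splits only on spaces and tabs, which slightly simplifies WordCount's tokenizer:

```shell
#!/bin/sh
HADOOP_DIR=${HADOOP_DIR:-/usr/local/hadoop}

# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Remove the previous output directory, then run the example by program name.
rerun_wordcount() {
  run rm -rf "$HADOOP_DIR/output"
  run "$HADOOP_DIR/bin/hadoop" jar \
    "$HADOOP_DIR/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.2.jar" \
    wordcount "$HADOOP_DIR/input" "$HADOOP_DIR/output"
}

# Count whitespace-separated tokens (case and punctuation kept, like WordCount)
# and print "word<TAB>count" sorted in byte order, the order Hadoop uses for Text keys.
local_wordcount() {
  awk '{ for (i = 1; i <= NF; i++) n[$i]++ }
       END { for (w in n) printf "%s\t%d\n", w, n[w] }' "$1" | LC_ALL=C sort
}
```

For example, if `local_wordcount /usr/local/hadoop/README.txt | diff - /usr/local/hadoop/output/part-r-00000` prints nothing, the two counts agree.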


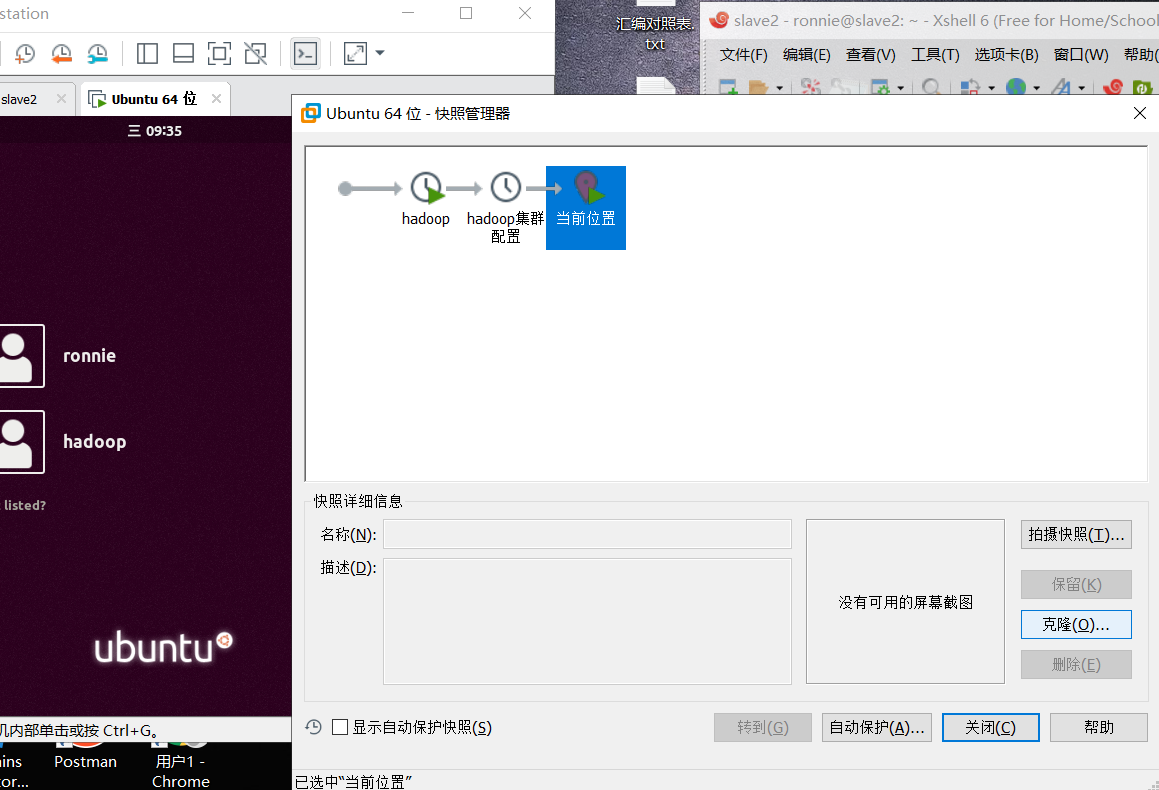

Hadoop distributed installation and deployment (master, slave1, slave2)

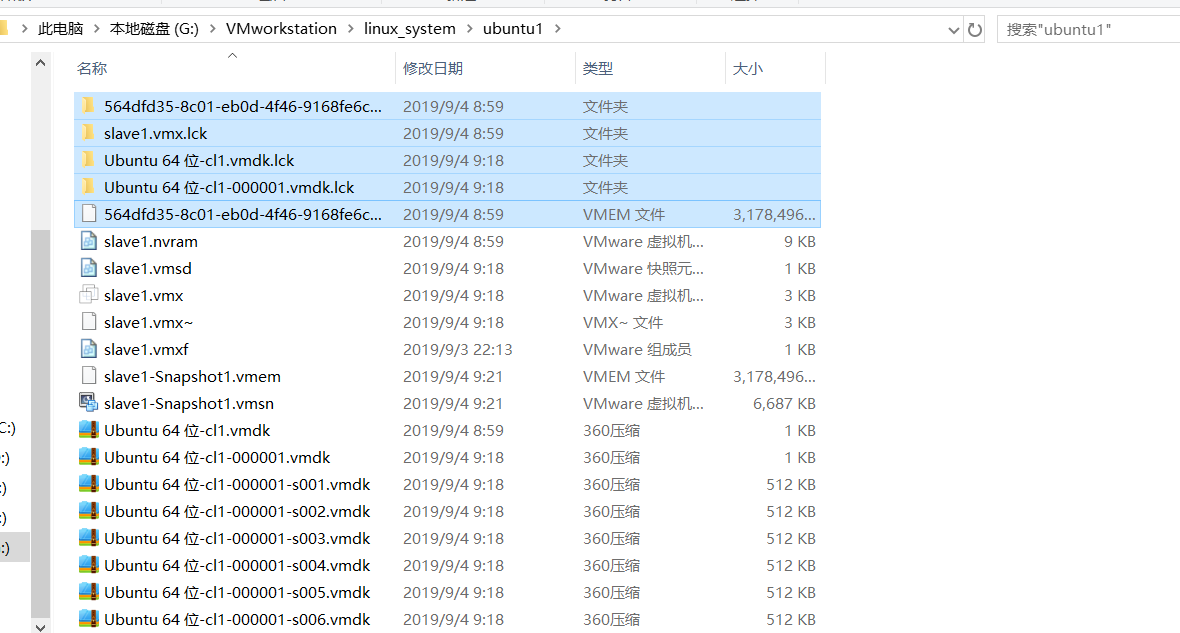

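Clone the original virtual machine twice, giving each clone its own directory. Remember to delete the .lck files and the environment files in the cloned directories.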

Network configuration of the master node (edit /etc/netplan/01-network-manager-all.yaml with vim; root privileges are required):

```yaml
# Let NetworkManager manage all devices on this system
network:
  version: 2
  renderer: networkd
  ethernets:
    ens33:
      dhcp4: no
      addresses: [192.168.179.138/24]
      gateway4: 192.168.179.2
      nameservers:
        addresses: [192.168.179.2, 114.114.114.114]
```

slave1 network configuration:

```yaml
# Let NetworkManager manage all devices on this system
network:
  version: 2
  renderer: networkd
  ethernets:
    ens33:
      dhcp4: no
      addresses: [192.168.179.139/24]
      gateway4: 192.168.179.2
      nameservers:
        addresses: [192.168.179.2, 114.114.114.114]
```

slave2 network configuration:

```yaml
# Let NetworkManager manage all devices on this system
network:
  version: 2
  renderer: networkd
  ethernets:
    ens33:
      dhcp4: no
      addresses: [192.168.179.140/24]
      gateway4: 192.168.179.2
      nameservers:
        addresses: [192.168.179.2, 114.114.114.114]
```
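The three netplan files differ only in the host address, so one helper can print any of them. This sketch reuses the interface name (ens33), gateway and DNS servers from the configs above. `netplan_config` is a hypothetical name. After writing the file, `sudo netplan apply` activates it:

```shell
#!/bin/sh
# netplan_config IP: print the netplan file for one node with address IP/24.
# Everything except the address is copied from the configs above.
netplan_config() {
  cat <<EOF
# Let NetworkManager manage all devices on this system
network:
  version: 2
  renderer: networkd
  ethernets:
    ens33:
      dhcp4: no
      addresses: [$1/24]
      gateway4: 192.168.179.2
      nameservers:
        addresses: [192.168.179.2, 114.114.114.114]
EOF
}

# Usage (on slave1):
#   netplan_config 192.168.179.139 | sudo tee /etc/netplan/01-network-manager-all.yaml
#   sudo netplan apply
```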

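Edit the /etc/sudoers file

```shell
sudo vim /etc/sudoers
```

Below the line `root ALL=(ALL:ALL) ALL`, add:

```
hadoop ALL=(ALL:ALL) ALL
```

(`sudo visudo` edits the same file but checks the syntax before saving, so a typo cannot lock you out of sudo.)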

Edit the /etc/hosts file

```shell
sudo vim /etc/hosts
```

```
127.0.0.1 localhost.localdomain localhost
192.168.179.138 master
192.168.179.139 slave1
192.168.179.140 slave2
```
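The same entries can be written with a small idempotent helper. This is a sketch, and `set_cluster_hosts` is a hypothetical name. It first drops any existing line that already names master, slave1 or slave2. That also removes the `127.0.1.1 <hostname>` line Ubuntu commonly adds for the machine's own name. A cluster hostname that resolves to a loopback address is a commonly reported reason Hadoop daemons cannot be reached from other nodes:

```shell
#!/bin/sh
# set_cluster_hosts FILE: make FILE map master/slave1/slave2 to the addresses above.
# Existing non-comment lines naming one of these hosts are removed first,
# so running it twice still leaves exactly one entry per host.
set_cluster_hosts() {
  hosts_file=$1
  tmp=$(mktemp) || return 1
  awk '$1 !~ /^#/ {
         for (i = 2; i <= NF; i++)
           if ($i == "master" || $i == "slave1" || $i == "slave2") next
       }
       { print }' "$hosts_file" > "$tmp"
  cat >> "$tmp" <<EOF
192.168.179.138 master
192.168.179.139 slave1
192.168.179.140 slave2
EOF
  # Copy back with cat so the original file keeps its owner and permissions.
  cat "$tmp" > "$hosts_file" && rm -f "$tmp"
}

# Usage (as root, after sourcing the script): set_cluster_hosts /etc/hosts
```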

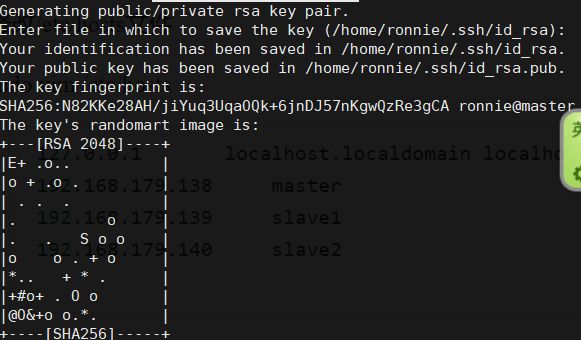

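Passwordless login

On master, slave1 and slave2, run `ssh-keygen -t rsa -P ""` to generate an RSA key pair (press Enter at the prompt to accept the default location).

In the home directory (~), open `.ssh/authorized_keys` with vim and put the public keys of all three virtual machines into it: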

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDleINRMFosnU6bMTHdNpm/OA4kER1AG3ZdqxmTkrQmikAKtEYp/4jvKTWIhziYiKJMbTw1crpUoHRFuThInvCjyMPQ9/BP9Sajd1+1XjOrviUofVQ9D33OjiAB3iOg61UQUJP2lsKPc58T0Igkf+vVKMHoJlfKDhJhHE4la+bcDRVMHY1hiEoNaeczazYvYXTzjsVDs21SCyqzYhe4h0aMg5071AJ/UDqt7YL+U0BGOWQyW1ww2QMsc6JG/pjrPbhlnE2zyggCvN9mk475gLi9fC2vHMcCeJVH5N+KUuiMPEGKiXi/EGcnv83Kq/NabH0zLIzIHbUQiZZhE4mpmpQf ronnie@master

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDvAvPUPIs9tiEIGW2u5JMpSjLRLbCoa1B8XegiX1HaA+MCml1k+d8IJLFWYAPSTQT0vA/MzF5ujRmBcMwgjVj3yd8hQwPMngyg2QQWW9OCw7jzUqjX5C8hA+3k0UTdKAtOBdn5vQxvYNBrzOd2JrYHCIGSgpTjoV1ZWbYi3ceCwxAlUTfKjwaJyrJbBA58fNeMwFRZ9Xzu5f+Yy/3rzTi2pdXnWTodgW4MhVJF+/JYyy8OyK8otNxrhgk5J0M11Om1RJO5NB83x0OnV/ignImv10h8KN/UHQ6rWnqOvLQsAEpvM2dXW0Xii0c/G7huFxxzfeN+vhmk6VABg/Acz+3T ronnie@slave1

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCmQ5odgiHTLO7szEG5KfJvbfrolY3Fm/tmX32dM0DIHgW3P+OYiNd7efPgfZKue+NbwzyhuNkk2IHPR3h5fE5p7MGiO0tP0G/iCtLeOW3vT4OHtFkskY0sHzbUT73jo4owYC+c4aCh5cRZFR3V3WSP50bcUI3lvcKkTLS07VYjFRJzPmC8nHjE3Ll5xQfdH1TM6uV1UwLqfOiBr9oxEs+G3a+KmsBjFdx3xerKcNolTJWfyv2q172BcAQ8PoTt4iL1KCL554QI6KZadNAGr8QIOkxULsLd3TxQ0TPMMWv5nAcqwiCOxBiBBnXMKxy5nU3BWWH9qJHbR+qrsaZECc69 ronnie@slave2
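Collecting the keys can also be scripted. `ssh-copy-id user@host` is the standard tool for pushing one key to one host. The sketch below instead merges several `.pub` files into a single authorized_keys file, skips duplicates, and sets tight permissions. By default, sshd's StrictModes check rejects an authorized_keys file or ~/.ssh directory that is writable by group or others. `merge_keys` is a hypothetical helper, and it assumes the slaves' public keys have already been copied to master (for example with scp):

```shell
#!/bin/sh
# merge_keys DEST PUBKEY...: append each public key to DEST unless it is
# already present, then set 700 on the directory and 600 on the file.
merge_keys() {
  dest=$1
  shift
  dir=$(dirname "$dest")
  mkdir -p "$dir" && chmod 700 "$dir"
  touch "$dest"
  for pub in "$@"; do
    # "|| [ -n "$key" ]" keeps a last line that has no trailing newline
    while IFS= read -r key || [ -n "$key" ]; do
      [ -n "$key" ] || continue
      grep -qxF -e "$key" "$dest" || printf '%s\n' "$key" >> "$dest"
    done < "$pub"
  done
  chmod 600 "$dest"
}

# Usage on master: merge_keys ~/.ssh/authorized_keys ~/.ssh/id_rsa.pub slave1.pub slave2.pub
```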

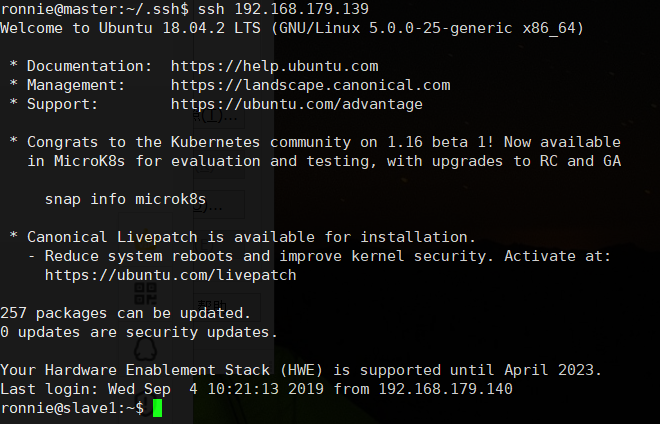

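Test whether each of the three VMs can log in to the others without a password, one pair at a time. A successful case is shown in the screenshot.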
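You can run that check on each VM with `-o BatchMode=yes`. With this option, ssh fails instead of asking for a password, so any host that still needs one shows up as a failure. This is a sketch, and `check_passwordless` is a hypothetical helper. The very first connection to a host may still fail in batch mode until its host key has been accepted once interactively:

```shell
#!/bin/sh
# check_passwordless HOST...: try a non-interactive login to each host and
# report OK/FAIL. Returns non-zero if any host failed. SSH can be overridden
# (for example in tests); it defaults to the real ssh client.
check_passwordless() {
  status=0
  for h in "$@"; do
    if ${SSH:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "$h" true; then
      echo "OK   $h"
    else
      echo "FAIL $h"
      status=1
    fi
  done
  return $status
}

# Usage (on each of the three VMs): check_passwordless master slave1 slave2
```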

Create the hadoop user

Create a hadoop user on master and on each slave:

```shell
sudo useradd -m hadoop -s /bin/bash
sudo passwd hadoop
sudo adduser hadoop sudo
```

Change the permissions of the hadoop directory

```shell
cd /usr/local
sudo chmod -R 777 hadoop/
```

Change the owner of the hadoop directory

```shell
sudo chown -R hadoop:hadoop hadoop/
```

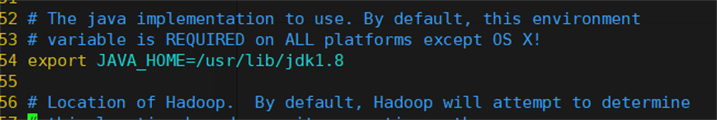

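Check the hadoop-env.sh file

```shell
vim /usr/local/hadoop/etc/hadoop/hadoop-env.sh
```

If the path after `lib/` is `jdk8`, change it to `jdk1.8` (that is, `JAVA_HOME=/usr/lib/jdk1.8`).

Make the same change in mapred-env.sh and yarn-env.sh:

```shell
vim /usr/local/hadoop/etc/hadoop/mapred-env.sh
vim /usr/local/hadoop/etc/hadoop/yarn-env.sh
```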
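Here is the same fix applied to all three env scripts at once. This sketch assumes GNU sed and the /usr/local/hadoop/etc/hadoop layout, and `fix_jdk_path` is a hypothetical helper. Because `/usr/lib/jdk1.8` does not contain the string `/usr/lib/jdk8`, running it twice is harmless:

```shell
#!/bin/sh
# fix_jdk_path CONF_DIR: in hadoop-env.sh, mapred-env.sh and yarn-env.sh under
# CONF_DIR, replace /usr/lib/jdk8 with /usr/lib/jdk1.8. Missing files are skipped.
fix_jdk_path() {
  for f in hadoop-env.sh mapred-env.sh yarn-env.sh; do
    [ -f "$1/$f" ] || continue
    sed -i 's|/usr/lib/jdk8|/usr/lib/jdk1.8|g' "$1/$f"
  done
}

# Usage: fix_jdk_path /usr/local/hadoop/etc/hadoop
```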

Edit the core-site.xml file

```shell
vim /usr/local/hadoop/etc/hadoop/core-site.xml
```

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop/tmp</value>
    <description>temp-dir</description>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <!--1.x name>fs.default.name</name-->
    <value>hdfs://master:9000</value>
    <description>hdfs namenode address</description>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>102400</value>
    <description>size-of-document</description>
  </property>
</configuration>
```
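To check a value without scrolling through the file, for example when comparing nodes, you can pull it out with a text filter. This is a rough sketch with a hypothetical `site_prop` helper. It assumes `<name>` and `<value>` sit on separate lines, as in the files here. It does not understand XML comments, so a commented-out property with the same name could be picked up. With Hadoop on the PATH, `hdfs getconf -confKey fs.defaultFS` is the authoritative check:

```shell
#!/bin/sh
# site_prop FILE NAME: print the first <value> that follows <name>NAME</name>
# in a Hadoop *-site.xml file. Text-based, not a real XML parser.
site_prop() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1; next }
    found && /<value>/ {
      sub(/.*<value>/, ""); sub(/<\/value>.*/, ""); print; exit
    }' "$1"
}

# Usage: site_prop /usr/local/hadoop/etc/hadoop/core-site.xml fs.defaultFS
```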

Edit hdfs-site.xml

```shell
vim /usr/local/hadoop/etc/hadoop/hdfs-site.xml
```

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <!--property>
    <name>dfs.http.address</name>
    <value>slave1:50070</value>
  </property -->
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>slave1:50080</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
    <description>replication-of-data-file-system</description>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>/usr/local/hadoop/hdfs/name</value>
    <description>namenode-direction</description>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>/usr/local/hadoop/hdfs/data</value>
    <description>datanode-direction</description>
  </property>
  <!--property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property -->
</configuration>
```

Edit mapred-site.xml

```shell
vim /usr/local/hadoop/etc/hadoop/mapred-site.xml
```

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- property>
    <name>mapred.map.tasks</name>
    <value>20</value>
  </property>
  <property>
    <name>mapred.reduce.tasks</name>
    <value>4</value>
  </property>
  <property>
    <name>mapred.job.tracker</name>
    <value>master:9000</value>
    <description>job-tracker-address</description>
  </property -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>master:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>master:19888</value>
  </property>
</configuration>
```

Edit yarn-site.xml

```shell
vim /usr/local/hadoop/etc/hadoop/yarn-site.xml
```

```xml
<?xml version="1.0"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>master</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>master:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>master:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>master:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>master:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>master:8088</value>
  </property>
</configuration>
```

Format HDFS

```shell
cd /usr/local/hadoop/
./bin/hdfs namenode -format
```
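The format log is long, so it is quicker to save it and grep for the key lines. This sketch uses hypothetical helpers. `cluster_id` extracts the ID from the `Formatting using clusterid:` line that appears in the log. `format_ok` looks for the phrase `has been successfully formatted`, which Hadoop 3.x prints for each storage directory; this wording is an assumption and may differ between versions. The cluster ID matters because re-running the format creates a new one, and DataNodes that still hold data from the old ID will not register:

```shell
#!/bin/sh
# format_ok LOGFILE: succeed if a saved format log reports a formatted directory.
format_ok() {
  grep -q 'has been successfully formatted' "$1"
}

# cluster_id LOGFILE: print the cluster ID chosen by `hdfs namenode -format`.
cluster_id() {
  sed -n 's/^Formatting using clusterid: //p' "$1"
}

# Usage: ./bin/hdfs namenode -format 2>&1 | tee format.log
#        format_ok format.log && cluster_id format.log
```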

If there are no errors, output like the following is displayed:

WARNING: /usr/local/hadoop/logs does not exist. Creating.

2019-09-04 11:37:04,099 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master/192.168.179.138

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.1.2

STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/jetty-http-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/common/lib/kerb-core-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/kerby-pkix-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/gson-2.2.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/zookeeper-3.4.13.jar:/usr/local/hadoop/share/hadoop/common/lib/audience-annotations-0.5.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jsr305-3.0.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jcip-annotations-1.0-1.jar:/usr/local/hadoop/share/hadoop/common/lib/httpclient-4.5.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-util-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/common/lib/kerb-util-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-xml-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-compress-1.18.jar:/usr/local/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-recipes-2.13.0.jar:/usr/local/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-api-1.7.25.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-databind-2.7.8.jar:/usr/local/hadoop/share/hadoop/common/lib/snappy-java-1.0.5.jar:/usr/local/hadoop/share/hadoop/common/lib/jsr311-api-1.1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-codec-1.11.jar:/usr/local/hadoop/share/hadoop/common/lib/woodstox-core-5.0.3.jar:/usr/local/hadoop/share/hadoop/common/lib/httpcore-4.4.4.jar:/usr/local/hadoop/share/hadoop/common/lib/kerb-client-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-server-1.19.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-lang3-3.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-io-2.5.jar:/usr/loca
l/hadoop/share/hadoop/common/lib/commons-configuration2-2.1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-client-2.13.0.jar:/usr/local/hadoop/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-server-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/common/lib/stax2-api-3.1.4.jar:/usr/local/hadoop/share/hadoop/common/lib/kerb-simplekdc-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/javax.servlet-api-3.1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/htrace-core4-4.1.0-incubating.jar:/usr/local/hadoop/share/hadoop/common/lib/avro-1.7.7.jar:/usr/local/hadoop/share/hadoop/common/lib/accessors-smart-1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-core-2.7.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-json-1.19.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/jul-to-slf4j-1.7.25.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-core-1.19.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-math3-3.1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-security-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-io-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-net-3.6.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-annotations-2.7.8.jar:/usr/local/hadoop/share/hadoop/common/lib/kerb-admin-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-1.9.3.jar:/usr/local/hadoop/share/hadoop/common/lib/kerb-crypto-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/kerby-util-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/re2j-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/kerb-common-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jsch-0.1.54.jar:/usr/local/hadoop/share/hadoop/common/lib/token-provider-1.0.1.jar:/usr/local/hadoop/share/hadoop/c
ommon/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-servlet-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/common/lib/asm-5.0.4.jar:/usr/local/hadoop/share/hadoop/common/lib/kerby-xdr-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-servlet-1.19.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-annotations-3.1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/kerb-server-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-webapp-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-auth-3.1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/kerby-asn1-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/metrics-core-3.2.4.jar:/usr/local/hadoop/share/hadoop/common/lib/json-smart-2.3.jar:/usr/local/hadoop/share/hadoop/common/lib/kerb-identity-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/netty-3.10.5.Final.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-collections-3.2.2.jar:/usr/local/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-api-2.2.11.jar:/usr/local/hadoop/share/hadoop/common/lib/nimbus-jose-jwt-4.41.1.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-framework-2.13.0.jar:/usr/local/hadoop/share/hadoop/common/lib/kerby-config-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-lang-2.6.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-3.1.2-tests.jar:/usr/local/hadoop/share/hadoop/common/hadoop-nfs-3.1.2.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-3.1.2.jar:/usr/local/hadoop/share/hadoop/common/hadoop-kms-3.1.2.jar:/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-http-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerb-core-1.0.1.jar:/usr/local/hado
op/share/hadoop/hdfs/lib/kerby-pkix-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/gson-2.2.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/zookeeper-3.4.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/audience-annotations-0.5.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsr305-3.0.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jcip-annotations-1.0-1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/httpclient-4.5.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-util-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerb-util-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-xml-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-compress-1.18.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/curator-recipes-2.13.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-databind-2.7.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/snappy-java-1.0.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsr311-api-1.1.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-codec-1.11.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-all-4.0.52.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/woodstox-core-5.0.3.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/httpcore-4.4.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerb-client-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-server-1.19.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-lang3-3.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-io-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-configuration2-2.1.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/curator-client-2.13.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/guava-11.0.2.
jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-server-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/okhttp-2.7.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/stax2-api-3.1.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/json-simple-1.1.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerb-simplekdc-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/javax.servlet-api-3.1.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/okio-1.6.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/htrace-core4-4.1.0-incubating.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/avro-1.7.7.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/accessors-smart-1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-core-2.7.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-json-1.19.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-core-1.19.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-math3-3.1.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-security-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-io-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-net-3.6.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-annotations-2.7.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerb-admin-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-beanutils-1.9.3.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-util-ajax-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerb-crypto-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerby-util-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/re2j-1.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerb-common-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsch-0.1.54.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/token-provider-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/s
hare/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-servlet-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/asm-5.0.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerby-xdr-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-servlet-1.19.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/hadoop-annotations-3.1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerb-server-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-webapp-9.3.24.v20180605.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/hadoop-auth-3.1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerby-asn1-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/json-smart-2.3.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerb-identity-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-3.10.5.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-collections-3.2.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jaxb-api-2.2.11.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/nimbus-jose-jwt-4.41.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/curator-framework-2.13.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/kerby-config-1.0.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-httpfs-3.1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-client-3.1.2-tests.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-rbf-3.1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-3.1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-rbf-3.1.2-tests.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-native-client-3.1.2-tests.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-3.1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-native-client-3.1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-3.1.2-tests.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-client-3.1.2.j
ar:/usr/local/hadoop/share/hadoop/mapreduce/lib/junit-4.11.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-3.1.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.1.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-3.1.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.1.2-tests.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-3.1.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-nativetask-3.1.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-3.1.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-3.1.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-uploader-3.1.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-3.1.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-jaxrs-base-2.7.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/HikariCP-java7-2.4.12.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-guice-1.19.jar:/usr/local/hadoop/share/hadoop/yarn/lib/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/swagger-annotations-1.5.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/json-io-2.5.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/fst-2.50.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-4.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-module-jaxb-annotations-2.7.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/java-util-1.9.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/snakeyaml-1.16.jar:/usr/local/hadoop/share/hadoop/yarn/lib/mssql-jdbc-6.2.1.jre7.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-jaxrs-json-provider-2.7.8.jar:/usr/l
ocal/hadoop/share/hadoop/yarn/lib/jersey-client-1.19.jar:/usr/local/hadoop/share/hadoop/yarn/lib/metrics-core-3.2.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-servlet-4.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/ehcache-3.3.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/objenesis-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/dnsjava-2.1.7.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-timeline-pluginstorage-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-services-core-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-api-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-common-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-services-api-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-client-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-registry-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-router-3.1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-3.1.2.jar

STARTUP_MSG: build = https://github.com/apache/hadoop.git -r 1019dde65bcf12e05ef48ac71e84550d589e5d9a; compiled by 'sunilg' on 2019-01-29T01:39Z

STARTUP_MSG: java = 1.8.0_191

************************************************************/

2019-09-04 11:37:04,113 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2019-09-04 11:37:04,244 INFO namenode.NameNode: createNameNode [-format]

2019-09-04 11:37:04,917 INFO common.Util: Assuming 'file' scheme for path /usr/local/hadoop/hdfs/name in configuration.

2019-09-04 11:37:04,917 INFO common.Util: Assuming 'file' scheme for path /usr/local/hadoop/hdfs/name in configuration.

Formatting using clusterid: CID-590dbb9e-6102-4d5a-bdb4-63a8175c44ef

2019-09-04 11:37:04,996 INFO namenode.FSEditLog: Edit logging is async:true

2019-09-04 11:37:05,021 INFO namenode.FSNamesystem: KeyProvider: null

2019-09-04 11:37:05,022 INFO namenode.FSNamesystem: fsLock is fair: true

2019-09-04 11:37:05,022 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

2019-09-04 11:37:05,034 INFO namenode.FSNamesystem: fsOwner = ronnie (auth:SIMPLE)

2019-09-04 11:37:05,034 INFO namenode.FSNamesystem: supergroup = supergroup

2019-09-04 11:37:05,034 INFO namenode.FSNamesystem: isPermissionEnabled = true

2019-09-04 11:37:05,034 INFO namenode.FSNamesystem: HA Enabled: false

2019-09-04 11:37:05,095 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

2019-09-04 11:37:05,114 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

2019-09-04 11:37:05,114 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

2019-09-04 11:37:05,118 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

2019-09-04 11:37:05,133 INFO blockmanagement.BlockManager: The block deletion will start around 2019 Sep 04 11:37:05

2019-09-04 11:37:05,135 INFO util.GSet: Computing capacity for map BlocksMap

2019-09-04 11:37:05,135 INFO util.GSet: VM type = 64-bit

2019-09-04 11:37:05,137 INFO util.GSet: 2.0% max memory 654.5 MB = 13.1 MB

2019-09-04 11:37:05,137 INFO util.GSet: capacity = 2^21 = 2097152 entries

2019-09-04 11:37:05,146 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false

2019-09-04 11:37:05,153 INFO Configuration.deprecation: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS

2019-09-04 11:37:05,153 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

2019-09-04 11:37:05,153 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

2019-09-04 11:37:05,153 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

2019-09-04 11:37:05,153 INFO blockmanagement.BlockManager: defaultReplication = 1

2019-09-04 11:37:05,153 INFO blockmanagement.BlockManager: maxReplication = 512

2019-09-04 11:37:05,153 INFO blockmanagement.BlockManager: minReplication = 1

2019-09-04 11:37:05,153 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

2019-09-04 11:37:05,153 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

2019-09-04 11:37:05,153 INFO blockmanagement.BlockManager: encryptDataTransfer = false

2019-09-04 11:37:05,153 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

2019-09-04 11:37:05,243 INFO namenode.FSDirectory: GLOBAL serial map: bits=24 maxEntries=16777215

2019-09-04 11:37:05,274 INFO util.GSet: Computing capacity for map INodeMap

2019-09-04 11:37:05,274 INFO util.GSet: VM type = 64-bit

2019-09-04 11:37:05,274 INFO util.GSet: 1.0% max memory 654.5 MB = 6.5 MB

2019-09-04 11:37:05,274 INFO util.GSet: capacity = 2^20 = 1048576 entries

2019-09-04 11:37:05,295 INFO namenode.FSDirectory: ACLs enabled? false

2019-09-04 11:37:05,295 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

2019-09-04 11:37:05,295 INFO namenode.FSDirectory: XAttrs enabled? true

2019-09-04 11:37:05,295 INFO namenode.NameNode: Caching file names occurring more than 10 times

2019-09-04 11:37:05,306 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

2019-09-04 11:37:05,309 INFO snapshot.SnapshotManager: SkipList is disabled

2019-09-04 11:37:05,318 INFO util.GSet: Computing capacity for map cachedBlocks

2019-09-04 11:37:05,318 INFO util.GSet: VM type = 64-bit

2019-09-04 11:37:05,318 INFO util.GSet: 0.25% max memory 654.5 MB = 1.6 MB

2019-09-04 11:37:05,318 INFO util.GSet: capacity = 2^18 = 262144 entries

2019-09-04 11:37:05,344 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2019-09-04 11:37:05,344 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2019-09-04 11:37:05,344 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2019-09-04 11:37:05,355 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

2019-09-04 11:37:05,355 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2019-09-04 11:37:05,360 INFO util.GSet: Computing capacity for map NameNodeRetryCache

2019-09-04 11:37:05,360 INFO util.GSet: VM type = 64-bit

2019-09-04 11:37:05,361 INFO util.GSet: 0.029999999329447746% max memory 654.5 MB = 201.1 KB

2019-09-04 11:37:05,361 INFO util.GSet: capacity = 2^15 = 32768 entries

2019-09-04 11:37:05,408 INFO namenode.FSImage: Allocated new BlockPoolId: BP-267790080-192.168.179.138-1567568225396

2019-09-04 11:37:05,428 INFO common.Storage: Storage directory /usr/local/hadoop/hdfs/name has been successfully formatted.

2019-09-04 11:37:05,669 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/hadoop/hdfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

2019-09-04 11:37:05,751 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/hadoop/hdfs/name/current/fsimage.ckpt_0000000000000000000 of size 393 bytes saved in 0 seconds .

2019-09-04 11:37:05,768 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2019-09-04 11:37:05,778 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/192.168.179.138

************************************************************/

Running ll at this point shows that an hdfs directory has been created under /usr/local/hadoop.
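Besides eyeballing the directory with ll, you can check the storage directory the format step wrote. Below is a minimal sketch. It assumes the NameNode directory is /usr/local/hadoop/hdfs/name, which is the path in the log above, and that the format step left a current/VERSION key=value file there. The function name namenode_cluster_id is hypothetical.

```shell
# Hypothetical helper: print the clusterID stored by the NameNode format step.
# Default path is taken from the format log above; pass another dir if yours differs.
namenode_cluster_id() {
  local name_dir="${1:-/usr/local/hadoop/hdfs/name}"
  local version_file="$name_dir/current/VERSION"
  if [ ! -f "$version_file" ]; then
    echo "not formatted: $version_file is missing" >&2
    return 1
  fi
  # VERSION holds key=value lines; print only the value of clusterID
  sed -n 's/^clusterID=//p' "$version_file"
}
```

On the master, running namenode_cluster_id should print the same ID as the "Formatting using clusterid: ..." line in the log.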

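PS: If something goes wrong, the error message reports the line and column in the configuration file where the error is. After fixing it, you need to restart for the change to take effect.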
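Malformed XML in the *-site.xml files is a common cause of these line/column errors. One way to catch it before formatting or starting anything is to run every site file through an XML parser. This is a sketch that assumes xmllint is installed (Ubuntu package libxml2-utils). The function name check_site_xml is hypothetical.

```shell
# Hypothetical helper: check each *-site.xml in a Hadoop config dir for well-formedness.
# Returns 0 if all files parse, 1 if any file is malformed, 2 if xmllint is unavailable.
check_site_xml() {
  local conf_dir="$1" f status=0
  command -v xmllint >/dev/null 2>&1 || { echo "xmllint not found" >&2; return 2; }
  for f in "$conf_dir"/*-site.xml; do
    [ -e "$f" ] || continue   # glob matched no files
    # On a parse error, xmllint prints "file:line: parser error : ..." to stderr
    if ! xmllint --noout "$f"; then
      status=1
    fi
  done
  return "$status"
}
```

Usage: check_site_xml /usr/local/hadoop/etc/hadoop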

Remember to configure the workers file:

vim /usr/local/hadoop/etc/hadoop/workers

localhost

master

slave1

slave2
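Hadoop 3 renamed the old slaves file to workers. It lists one hostname per line, and the start scripts launch worker daemons on each host listed there. If you prefer not to edit it in vim, here is a minimal sketch that writes it non-interactively. The function name write_workers is hypothetical, and it overwrites any existing workers file.

```shell
# Hypothetical helper: write one hostname per line into <conf_dir>/workers.
# Overwrites the existing file.
write_workers() {
  local conf_dir="$1"; shift
  printf '%s\n' "$@" > "$conf_dir/workers"
}
```

Usage, with the hosts from above: write_workers /usr/local/hadoop/etc/hadoop localhost master slave1 slave2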

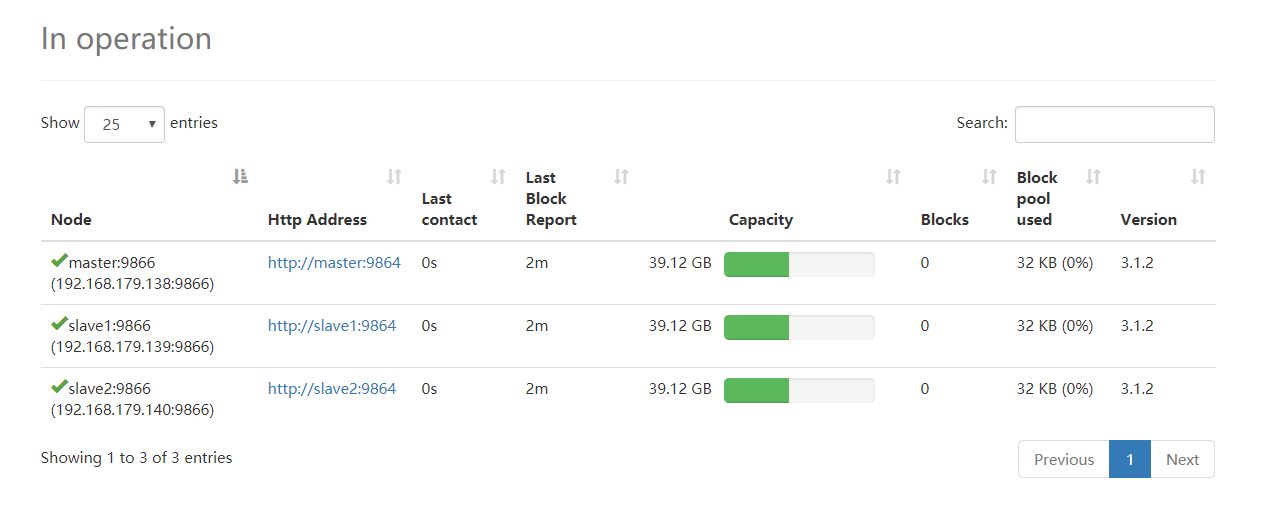

Start the cluster

cd /usr/local/hadoop/

./sbin/start-all.sh
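After start-all.sh finishes, the JDK's jps tool lists running Java processes as "<pid> <MainClass>". The sketch below reports which daemons are missing. It assumes this node acts as both master and worker, because the workers file above lists localhost and master, so NameNode, SecondaryNameNode, DataNode, ResourceManager and NodeManager are all expected here. The function name missing_daemons is hypothetical.

```shell
# Hypothetical helper: read jps output on stdin, print each expected daemon not found.
# The expected list assumes a node that is both master and worker.
missing_daemons() {
  local out name
  out="$(cat)"
  for name in NameNode SecondaryNameNode DataNode ResourceManager NodeManager; do
    # Match the main-class column exactly, so "NameNode" does not match "SecondaryNameNode"
    if ! printf '%s\n' "$out" | awk -v n="$name" '$2 == n {found = 1} END {exit !found}'; then
      echo "$name"
    fi
  done
}
```

Usage: jps | missing_daemons prints nothing when all five daemons are up.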

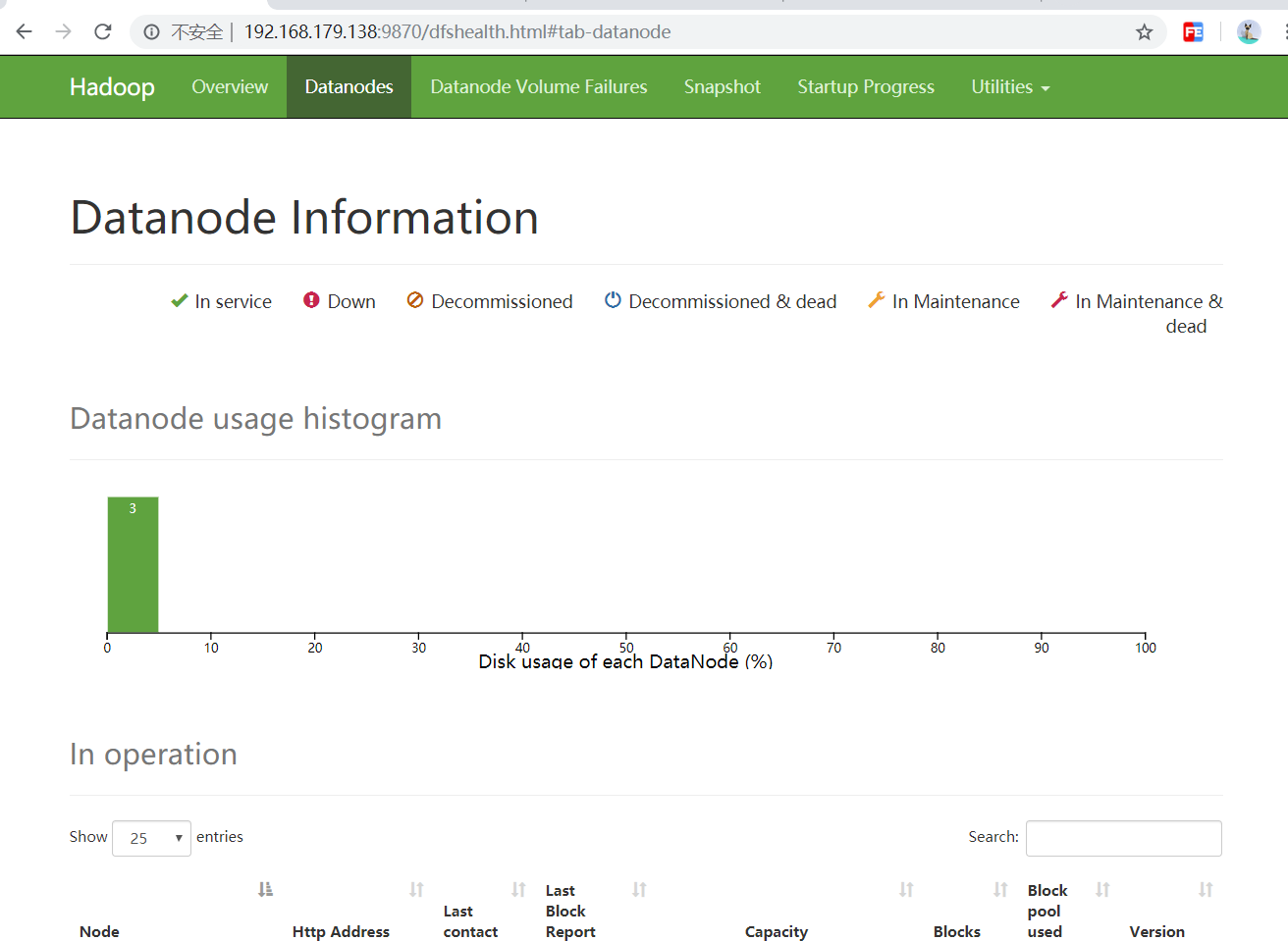

One gotcha: in newer Hadoop versions (3.x), the NameNode web UI listens on port 9870, not 50070.
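To avoid guessing, you can derive the default port from the installed version. This sketch reads the first line of hadoop version output (for example "Hadoop 3.1.2", as shown earlier). It maps 2.x to 50070 and 3.x to 9870. This covers only the defaults. If dfs.namenode.http-address is set in hdfs-site.xml, that value wins. The function name namenode_ui_port is hypothetical.

```shell
# Hypothetical helper: print the default NameNode web UI port for the Hadoop version on stdin.
# Only the default is known here; an explicit dfs.namenode.http-address overrides it.
namenode_ui_port() {
  local major
  # First line looks like "Hadoop 3.1.2"; keep the number before the first dot
  major="$(awk 'NR == 1 { split($2, v, "."); print v[1] }')"
  case "$major" in
    2) echo 50070 ;;
    3) echo 9870 ;;
    *) echo "unrecognised Hadoop version: ${major:-none}" >&2; return 1 ;;
  esac
}
```

For the 3.1.2 install above, hadoop version | namenode_ui_port prints 9870. Open http://<master IP>:9870 in a browser to reach the UI.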

The cluster started successfully. That's all for today.