Kubernetes Advanced Tutorial

I. Service Probes

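For production services, keeping them healthy and stable is the top priority. Handling failed instances quickly enough to avoid business impact, and recovering from failures fast, has always been hard for development and operations teams. Kubernetes provides health checks for this: an instance detected as faulty is promptly and automatically taken out of service, and it can recover on its own by being restarted.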

1. Liveness probe (LivenessProbe)

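The liveness probe determines whether a container is still alive, that is, whether it is still running properly. If the LivenessProbe finds the container unhealthy, the kubelet kills the container and then decides how to restart it according to the Pod's restart policy (restartPolicy). If a container defines no LivenessProbe, the kubelet treats its liveness result as always successful. The liveness probe supports three handlers: ExecAction, TCPSocketAction and HTTPGetAction.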

Note: the liveness check verifies whether the container can keep running normally.

1.1 Exec

```yaml
# Liveness probe: Exec handler
# Resource type
kind: Deployment
# API version
apiVersion: apps/v1
# Metadata
metadata:
  namespace: default
  name: test-deployment
  labels:
    app: test-deployment
spec:
  # Selector
  selector:
    # Exact (equality-based) match
    matchLabels:
      app: nginx
      dev: pro
  # Pod template
  template:
    metadata:
      # Must contain every matchLabels entry; extra labels are allowed
      labels:
        app: nginx
        dev: pro
        name: randysun
    spec:
      containers:
        - name: nginx # container name
          image: nginx:latest
          imagePullPolicy: IfNotPresent # image pull policy
          # Liveness probe
          livenessProbe:
            exec:
              command:
                - cat
                - /usr/share/nginx/html/index.html
            initialDelaySeconds: 8 # start probing 8 seconds after the container starts
            timeoutSeconds: 3 # timeout of each probe (default: 1 second)
            failureThreshold: 2 # 2 consecutive failures mark the container as failed
            periodSeconds: 3 # probe every 3 seconds
            successThreshold: 1 # must be 1 for liveness probes; other values are rejected
  replicas: 2
---
kind: Service
apiVersion: v1
metadata:
  name: test-deployment-svc
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
  selector: # pods must carry all of these labels (extra labels are fine)
    app: nginx
    dev: pro
```
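The manifest's comments on the selectors (a pod may carry more labels than the selector lists, but not fewer) describe equality-based label selection: a pod is selected when it has every key/value pair of the selector, and additional labels such as name: randysun or the generated pod-template-hash are ignored. A minimal Python sketch of that rule; selector_matches is a hypothetical helper for illustration, not a Kubernetes API, and it ignores set-based matchExpressions.

```python
from __future__ import annotations


def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Return True if every key/value pair in `selector` also appears in `labels`.

    Extra labels on the pod are ignored, so a pod may carry more labels than
    the selector lists, but never fewer.
    """
    return all(labels.get(key) == value for key, value in selector.items())


service_selector = {"app": "nginx", "dev": "pro"}
pod_labels = {
    "app": "nginx",
    "dev": "pro",
    "name": "randysun",
    "pod-template-hash": "6fb857b989",
}

print(selector_matches(service_selector, pod_labels))        # True
print(selector_matches(service_selector, {"app": "nginx"}))  # False: "dev" is missing
```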

执行

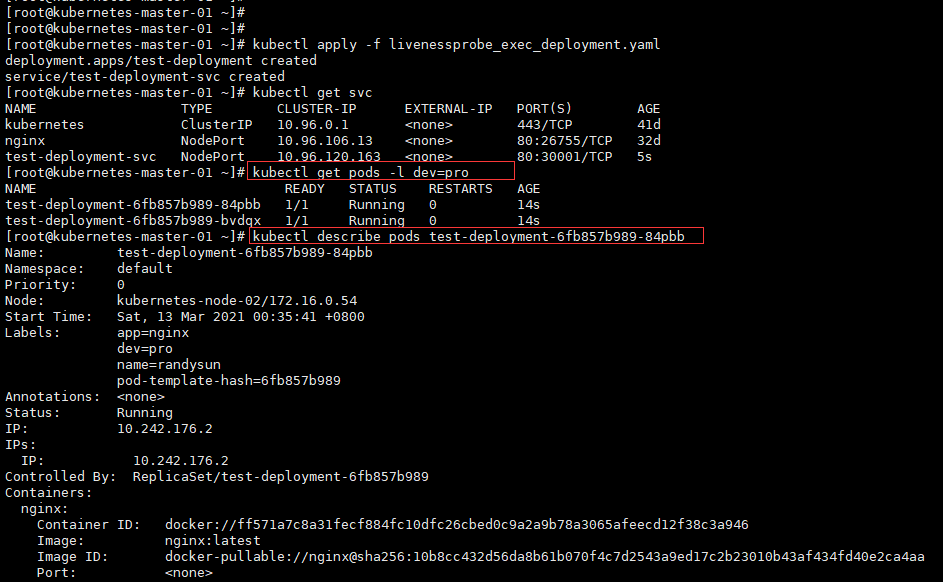

[root@kubernetes-master-01 ~]# kubectl apply -f livenessprobe_exec_deployment.yaml

deployment.apps/test-deployment created

service/test-deployment-svc created

[root@kubernetes-master-01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 41d

nginx NodePort 10.96.106.13 <none> 80:26755/TCP 32d

test-deployment-svc NodePort 10.96.120.163 <none> 80:30001/TCP 5s

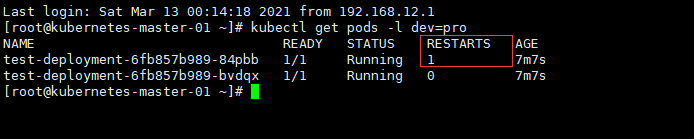

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-6fb857b989-84pbb 1/1 Running 0 14s

test-deployment-6fb857b989-bvdqx 1/1 Running 0 14s

[root@kubernetes-master-01 ~]# kubectl describe pods test-deployment-6fb857b989-84pbb

Name: test-deployment-6fb857b989-84pbb

Namespace: default

Priority: 0

Node: kubernetes-node-02/172.16.0.54

Start Time: Sat, 13 Mar 2021 00:35:41 +0800

Labels: app=nginx

dev=pro

name=randysun

pod-template-hash=6fb857b989

Annotations: <none>

Status: Running

IP: 10.242.176.2

IPs:

IP: 10.242.176.2

Controlled By: ReplicaSet/test-deployment-6fb857b989

Containers:

nginx:

Container ID: docker://ff571a7c8a31fecf884fc10dfc26cbed0c9a2a9b78a3065afeecd12f38c3a946

Image: nginx:latest

Image ID: docker-pullable://nginx@sha256:10b8cc432d56da8b61b070f4c7d2543a9ed17c2b23010b43af434fd40e2ca4aa

Port: <none>

Host Port: <none>

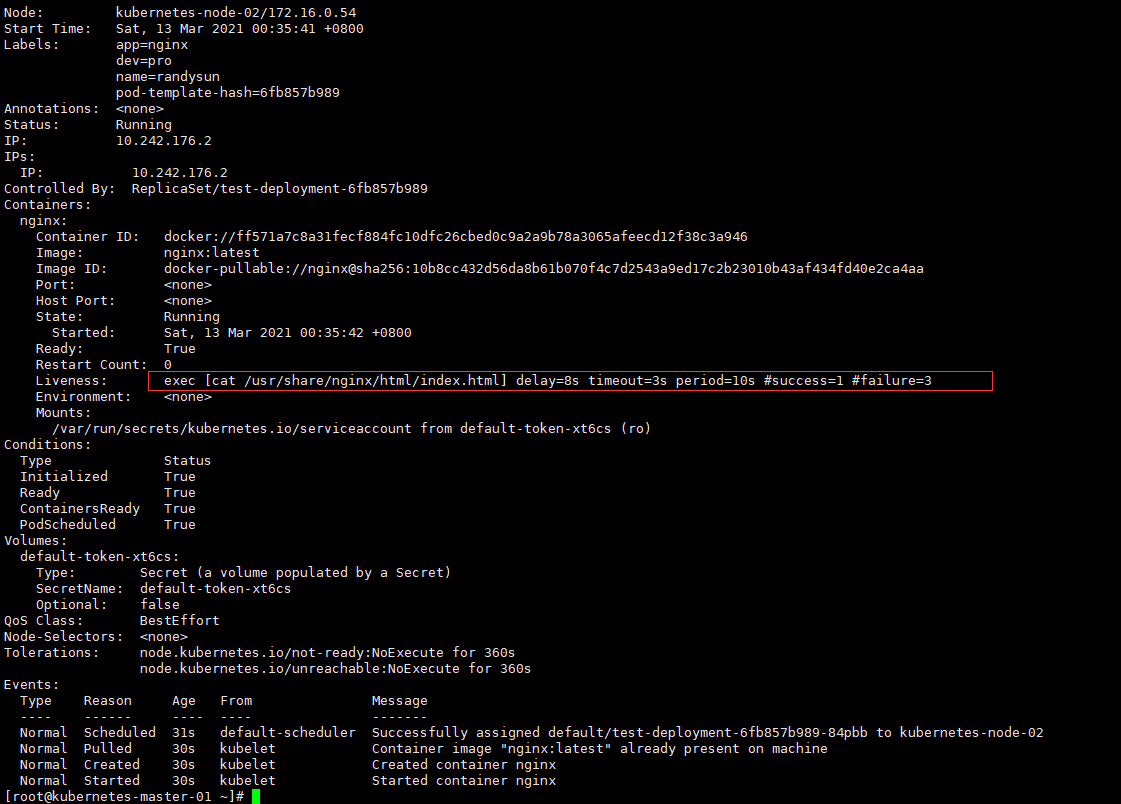

State: Running

Started: Sat, 13 Mar 2021 00:35:42 +0800

Ready: True

Restart Count: 0

Liveness: exec [cat /usr/share/nginx/html/index.html] delay=8s timeout=3s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-xt6cs (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-xt6cs:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-xt6cs

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 360s

node.kubernetes.io/unreachable:NoExecute for 360s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 31s default-scheduler Successfully assigned default/test-deployment-6fb857b989-84pbb to kubernetes-node-02

Normal Pulled 30s kubelet Container image "nginx:latest" already present on machine

Normal Created 30s kubelet Created container nginx

Normal Started 30s kubelet Started container nginx

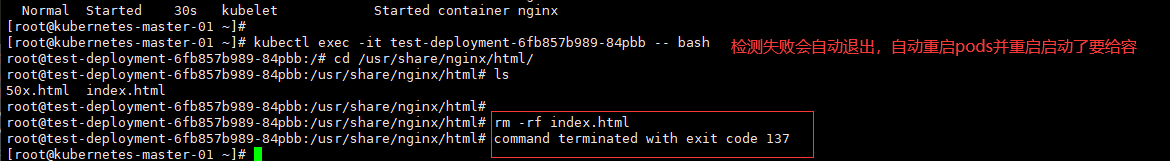

# 进入pods查看内容

[root@kubernetes-master-01 ~]# kubectl exec -it test-deployment-6fb857b989-84pbb -- bash

root@test-deployment-6fb857b989-84pbb:/# cd /usr/share/nginx/html/

root@test-deployment-6fb857b989-84pbb:/usr/share/nginx/html# ls

50x.html index.html

root@test-deployment-6fb857b989-84pbb:/usr/share/nginx/html#

## 删除pods nginx目录下面的index.html文件

[root@kubernetes-master-01 ~]# kubectl exec -it test-deployment-6fb857b989-84pbb -- bash

root@test-deployment-6fb857b989-84pbb:/# cd /usr/share/nginx/html/

root@test-deployment-6fb857b989-84pbb:/usr/share/nginx/html# ls

50x.html index.html

root@test-deployment-6fb857b989-84pbb:/usr/share/nginx/html#

root@test-deployment-6fb857b989-84pbb:/usr/share/nginx/html# rm -rf index.html

root@test-deployment-6fb857b989-84pbb:/usr/share/nginx/html# command terminated with exit code 137

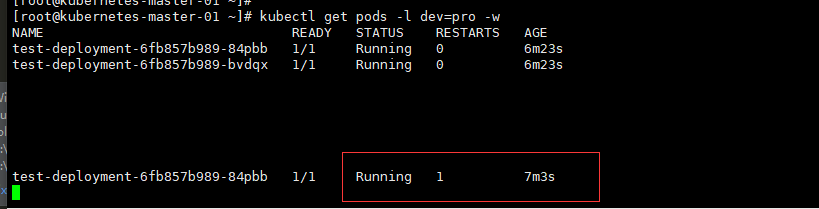

# 再次查看 (pods被重启了,容器被重新启动了一个)

[root@kubernetes-master-01 ~]# kubectl exec -it test-deployment-6fb857b989-84pbb -- bash

root@test-deployment-6fb857b989-84pbb:/# cd /usr/share/nginx/html/

root@test-deployment-6fb857b989-84pbb:/usr/share/nginx/html# ll

bash: ll: command not found

root@test-deployment-6fb857b989-84pbb:/usr/share/nginx/html# ls

50x.html index.html

root@test-deployment-6fb857b989-84pbb:/usr/share/nginx/html#
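The exec session above ends with exit code 137, most likely because the shell was killed together with the container when the kubelet restarted it after the liveness probe failed. By the common Unix convention, an exit status above 128 means the process was terminated by signal number (status - 128), and 137 - 128 = 9 is SIGKILL. A small Python sketch of that decoding; describe_exit_code is a hypothetical helper, and since signal numbers are platform specific it assumes Linux.

```python
import signal


def describe_exit_code(code: int) -> str:
    """Interpret an exit status using the 128 + signal-number convention."""
    if code > 128:
        try:
            sig = signal.Signals(code - 128)
        except ValueError:  # not a known signal number on this platform
            pass
        else:
            return f"killed by {sig.name} (signal {sig.value})"
    return f"exited with status {code}"


print(describe_exit_code(137))  # killed by SIGKILL (signal 9)
print(describe_exit_code(0))    # exited with status 0
```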

1.2 TCPSocket

```yaml
# Liveness probe: TCPSocket handler
# Resource type
kind: Deployment
# API version
apiVersion: apps/v1
# Metadata
metadata:
  namespace: default
  name: test-deployment
  labels:
    app: test-deployment
spec:
  # Selector
  selector:
    # Exact (equality-based) match
    matchLabels:
      app: nginx
      dev: pro
  # Pod template
  template:
    metadata:
      # Must contain every matchLabels entry; extra labels are allowed
      labels:
        app: nginx
        dev: pro
        name: randysun
    spec:
      containers:
        - name: nginx # container name
          image: nginx:latest
          imagePullPolicy: IfNotPresent # image pull policy
          # Liveness probe
          livenessProbe:
            tcpSocket:
              port: 80
            initialDelaySeconds: 8
            timeoutSeconds: 3
  replicas: 2
---
kind: Service
apiVersion: v1
metadata:
  name: test-deployment-svc
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
  selector: # pods must carry all of these labels (extra labels are fine)
    app: nginx
    dev: pro
```

执行

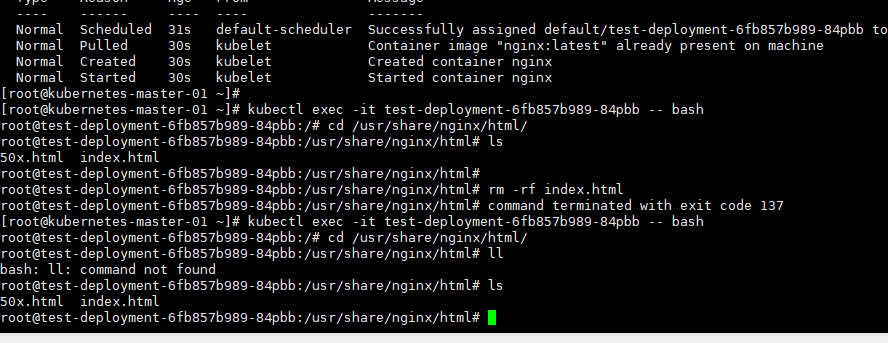

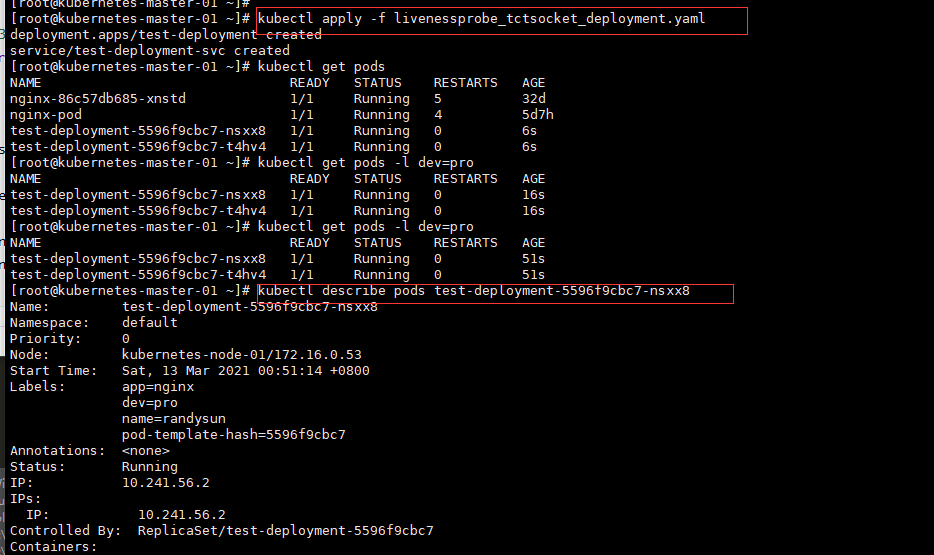

[root@kubernetes-master-01 ~]# kubectl apply -f livenessprobe_tctsocket_deployment.yaml

deployment.apps/test-deployment created

service/test-deployment-svc created

[root@kubernetes-master-01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-86c57db685-xnstd 1/1 Running 5 32d

nginx-pod 1/1 Running 4 5d7h

test-deployment-5596f9cbc7-nsxx8 1/1 Running 0 6s

test-deployment-5596f9cbc7-t4hv4 1/1 Running 0 6s

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-5596f9cbc7-nsxx8 1/1 Running 0 16s

test-deployment-5596f9cbc7-t4hv4 1/1 Running 0 16s

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-5596f9cbc7-nsxx8 1/1 Running 0 51s

test-deployment-5596f9cbc7-t4hv4 1/1 Running 0 51s

[root@kubernetes-master-01 ~]# kubectl describe pods test-deployment-5596f9cbc7-nsxx8

Name: test-deployment-5596f9cbc7-nsxx8

Namespace: default

Priority: 0

Node: kubernetes-node-01/172.16.0.53

Start Time: Sat, 13 Mar 2021 00:51:14 +0800

Labels: app=nginx

dev=pro

name=randysun

pod-template-hash=5596f9cbc7

Annotations: <none>

Status: Running

IP: 10.241.56.2

IPs:

IP: 10.241.56.2

Controlled By: ReplicaSet/test-deployment-5596f9cbc7

Containers:

nginx:

Container ID: docker://b238423c7b7e9148fc513ff553bdb2ff8599ae8b71966d923ffef9e49ccdc539

Image: nginx:latest

Image ID: docker-pullable://nginx@sha256:10b8cc432d56da8b61b070f4c7d2543a9ed17c2b23010b43af434fd40e2ca4aa

Port: <none>

Host Port: <none>

State: Running

Started: Sat, 13 Mar 2021 00:51:15 +0800

Ready: True

Restart Count: 0

Liveness: tcp-socket :80 delay=8s timeout=3s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-xt6cs (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-xt6cs:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-xt6cs

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 360s

node.kubernetes.io/unreachable:NoExecute for 360s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 66s default-scheduler Successfully assigned default/test-deployment-5596f9cbc7-nsxx8 to kubernetes-node-01

Normal Pulled 66s kubelet Container image "nginx:latest" already present on machine

Normal Created 66s kubelet Created container nginx

Normal Started 66s kubelet Started container nginx

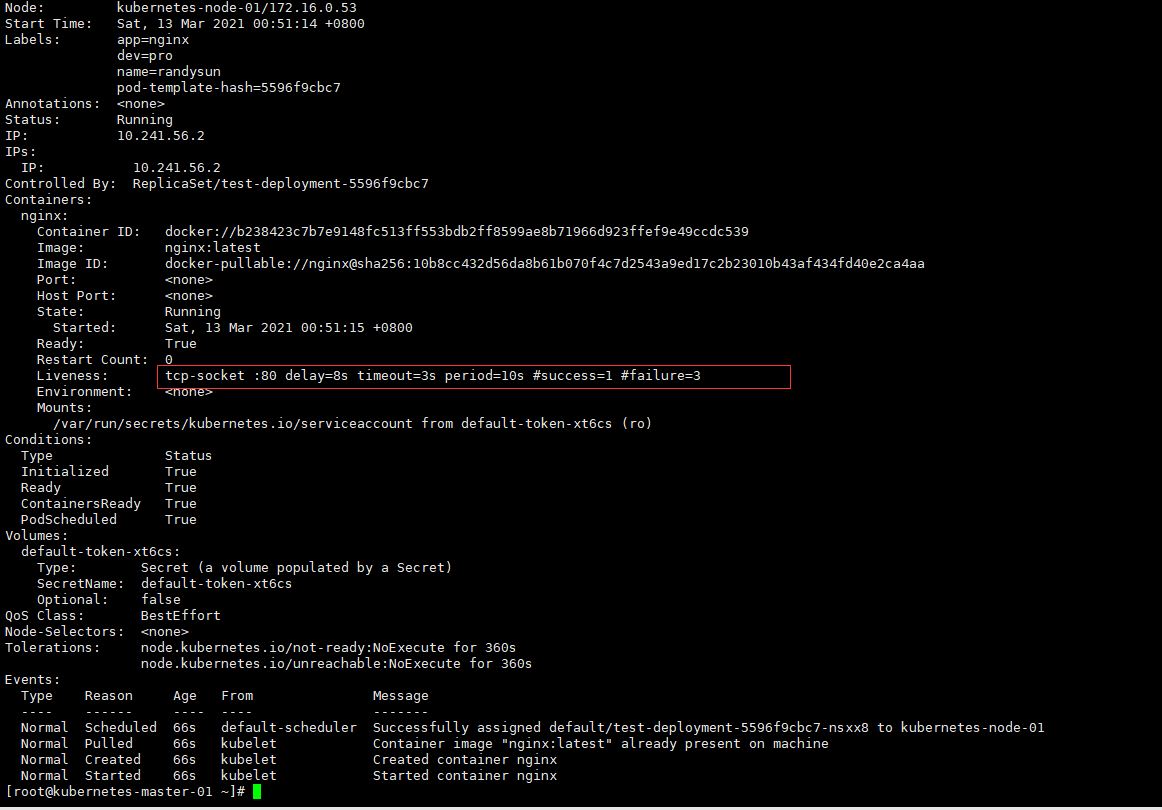

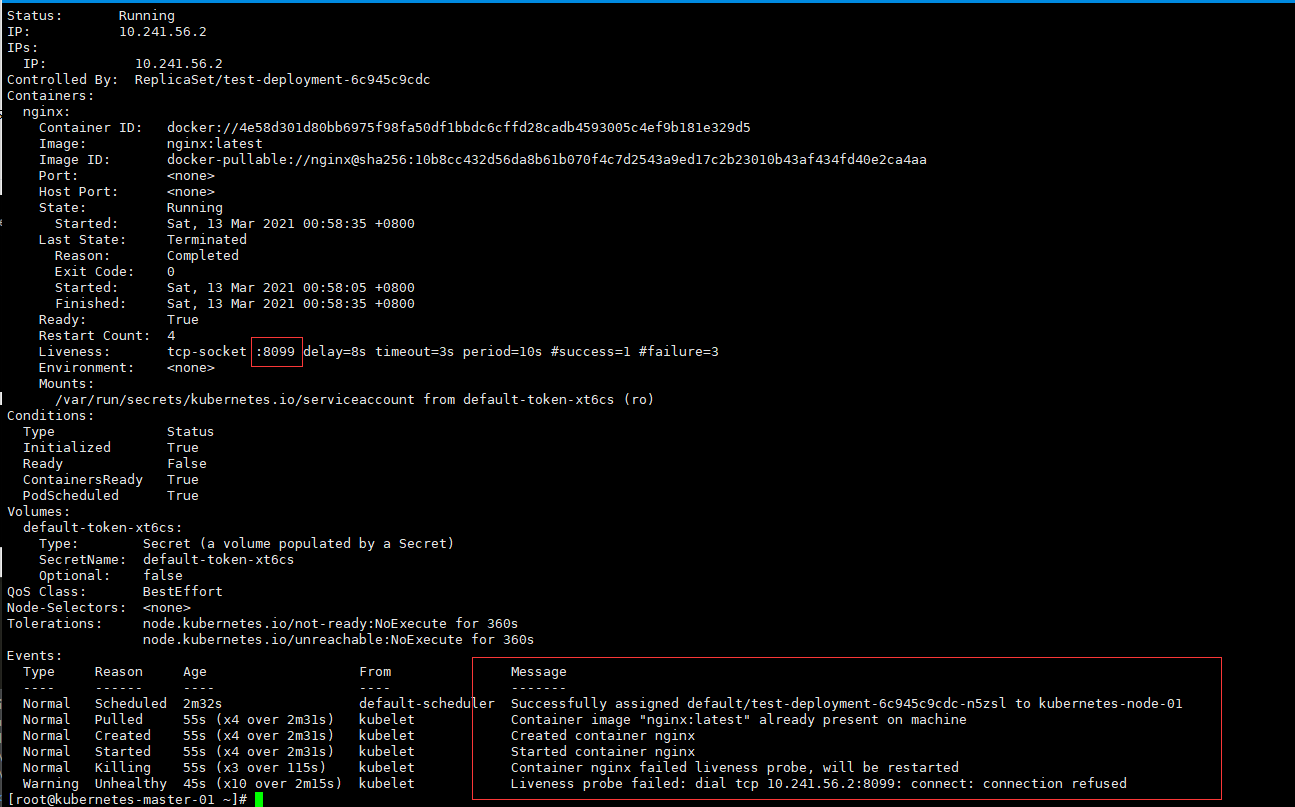

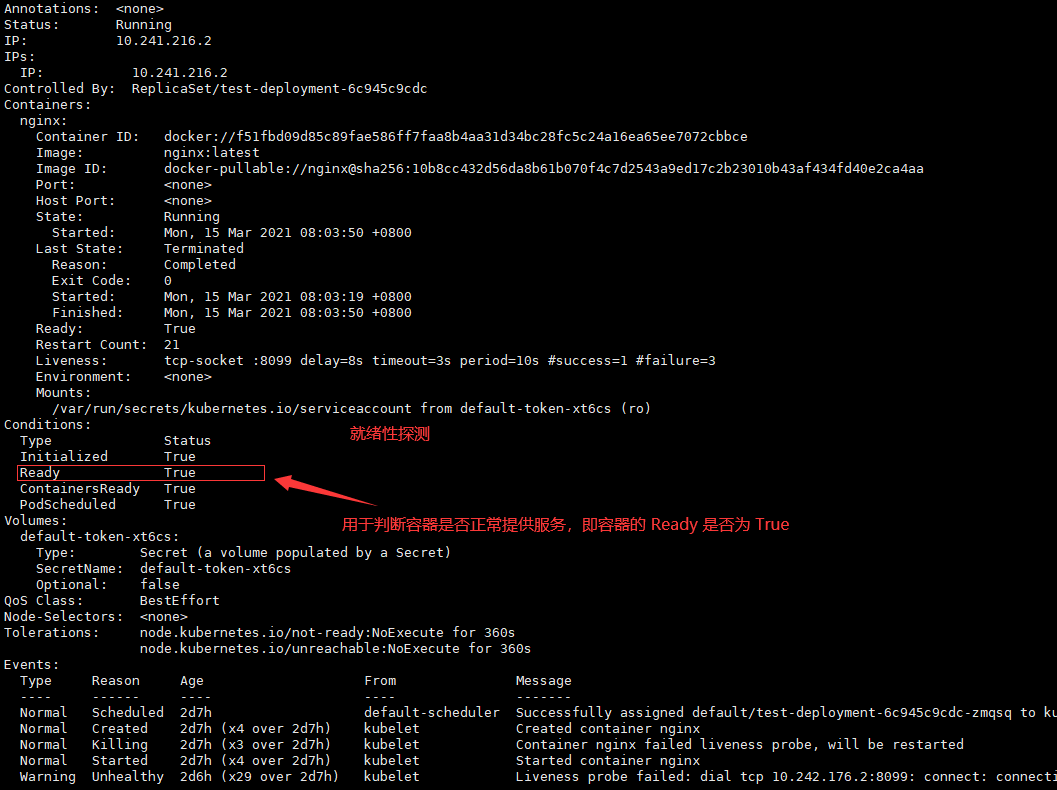

Now change the probe to a port that nginx does not listen on:

```yaml
# Liveness probe: TCPSocket handler
# Resource type
kind: Deployment
# API version
apiVersion: apps/v1
# Metadata
metadata:
  namespace: default
  name: test-deployment
  labels:
    app: test-deployment
spec:
  # Selector
  selector:
    # Exact (equality-based) match
    matchLabels:
      app: nginx
      dev: pro
  # Pod template
  template:
    metadata:
      # Must contain every matchLabels entry; extra labels are allowed
      labels:
        app: nginx
        dev: pro
        name: randysun
    spec:
      containers:
        - name: nginx # container name
          image: nginx:latest
          imagePullPolicy: IfNotPresent # image pull policy
          # Liveness probe
          livenessProbe:
            tcpSocket:
              port: 8099 # wrong port on purpose: nothing listens here
            initialDelaySeconds: 8
            timeoutSeconds: 3
  replicas: 2
---
kind: Service
apiVersion: v1
metadata:
  name: test-deployment-svc
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
  selector: # pods must carry all of these labels (extra labels are fine)
    app: nginx
    dev: pro
```

执行

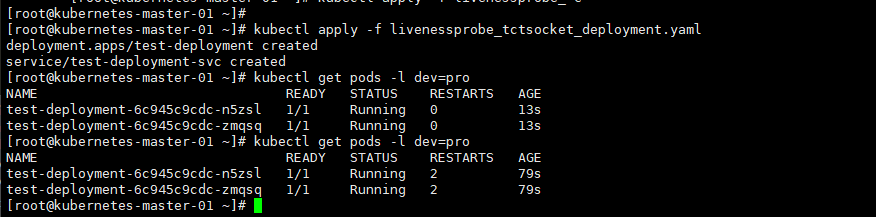

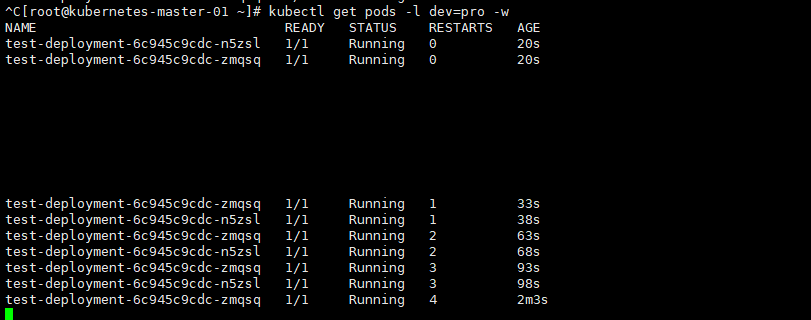

[root@kubernetes-master-01 ~]# kubectl apply -f livenessprobe_tctsocket_deployment.yaml

deployment.apps/test-deployment created

service/test-deployment-svc created

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-6c945c9cdc-n5zsl 1/1 Running 0 13s

test-deployment-6c945c9cdc-zmqsq 1/1 Running 0 13s

# 重启了两次

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-6c945c9cdc-n5zsl 1/1 Running 2 79s

test-deployment-6c945c9cdc-zmqsq 1/1 Running 2 79s

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-6c945c9cdc-n5zsl 1/1 Running 5 3m26s

test-deployment-6c945c9cdc-zmqsq 1/1 Running 5 3m26s

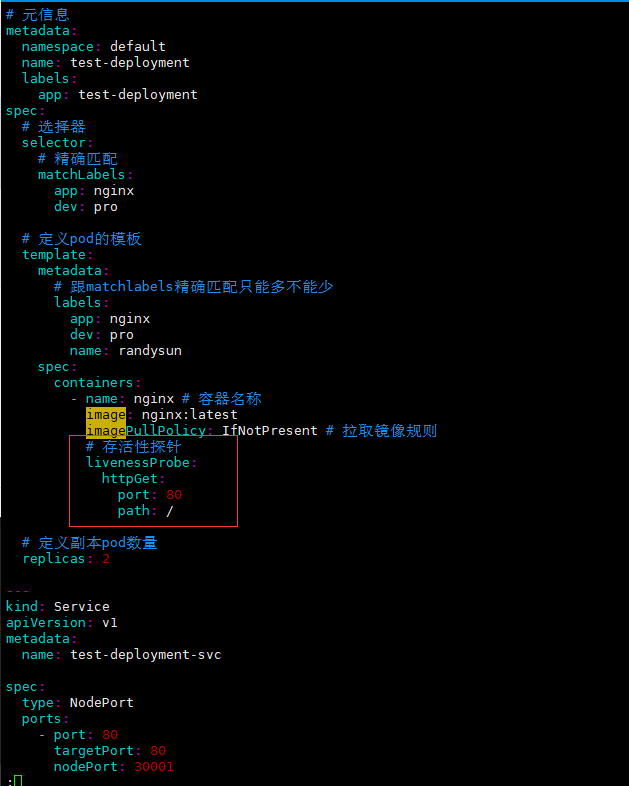

1.3 HTTPGet

```yaml
# Liveness probe: HTTPGet handler
# Resource type
kind: Deployment
# API version
apiVersion: apps/v1
# Metadata
metadata:
  namespace: default
  name: test-deployment
  labels:
    app: test-deployment
spec:
  # Selector
  selector:
    # Exact (equality-based) match
    matchLabels:
      app: nginx
      dev: pro
  # Pod template
  template:
    metadata:
      # Must contain every matchLabels entry; extra labels are allowed
      labels:
        app: nginx
        dev: pro
        name: randysun
    spec:
      containers:
        - name: nginx # container name
          image: nginx:latest
          imagePullPolicy: IfNotPresent # image pull policy
          # Liveness probe
          livenessProbe:
            httpGet:
              port: 80
              path: /
              # host: 127.0.0.1  # leave unset: the kubelet would probe the node's loopback instead of the pod IP
              scheme: HTTP
  # Number of pod replicas
  replicas: 2
---
kind: Service
apiVersion: v1
metadata:
  name: test-deployment-svc
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
  selector: # pods must carry all of these labels (extra labels are fine)
    app: nginx
    dev: pro
```

[root@kubernetes-master-01 ~]# kubectl delete -f deployment.yaml

deployment.apps "test-deployment" deleted

service "test-deployment-svc" deleted

[root@kubernetes-master-01 ~]# kubectl apply -f deployment.yaml

deployment.apps/test-deployment created

service/test-deployment-svc created

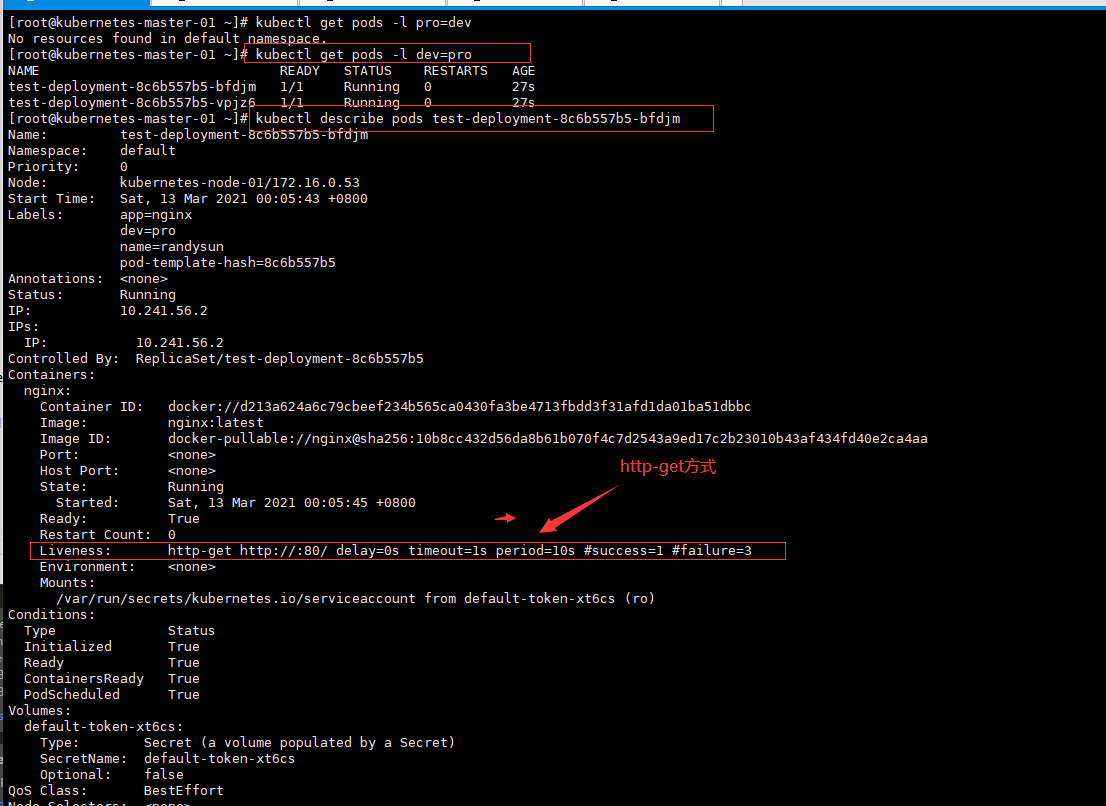

[root@kubernetes-master-01 ~]# kubectl get pods -l pro=dev

No resources found in default namespace.

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-8c6b557b5-bfdjm 1/1 Running 0 27s

test-deployment-8c6b557b5-vpjz6 1/1 Running 0 27s

[root@kubernetes-master-01 ~]# kubectl describe pods test-deployment-8c6b557b5-bfdjm

Name: test-deployment-8c6b557b5-bfdjm

Namespace: default

Priority: 0

Node: kubernetes-node-01/172.16.0.53

Start Time: Sat, 13 Mar 2021 00:05:43 +0800

Labels: app=nginx

dev=pro

name=randysun

pod-template-hash=8c6b557b5

Annotations: <none>

Status: Running

IP: 10.241.56.2

IPs:

IP: 10.241.56.2

Controlled By: ReplicaSet/test-deployment-8c6b557b5

Containers:

nginx:

Container ID: docker://d213a624a6c79cbeef234b565ca0430fa3be4713fbdd3f31afd1da01ba51dbbc

Image: nginx:latest

Image ID: docker-pullable://nginx@sha256:10b8cc432d56da8b61b070f4c7d2543a9ed17c2b23010b43af434fd40e2ca4aa

Port: <none>

Host Port: <none>

State: Running

Started: Sat, 13 Mar 2021 00:05:45 +0800

Ready: True

Restart Count: 0

Liveness: http-get http://:80/ delay=0s timeout=1s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-xt6cs (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-xt6cs:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-xt6cs

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 360s

node.kubernetes.io/unreachable:NoExecute for 360s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m11s default-scheduler Successfully assigned default/test-deployment-8c6b557b5-bfdjm to kubernetes-node-01

Normal Pulled 2m11s kubelet Container image "nginx:latest" already present on machine

Normal Created 2m10s kubelet Created container nginx

Normal Started 2m10s kubelet Started container nginx

Now change the probe to a port that nothing listens on:

```yaml
# Resource type
kind: Deployment
# API version
apiVersion: apps/v1
# Metadata
metadata:
  namespace: default
  name: test-deployment
  labels:
    app: test-deployment
spec:
  # Selector
  selector:
    # Exact (equality-based) match
    matchLabels:
      app: nginx
      dev: pro
  # Pod template
  template:
    metadata:
      # Must contain every matchLabels entry; extra labels are allowed
      labels:
        app: nginx
        dev: pro
        name: randysun
    spec:
      containers:
        - name: nginx # container name
          image: nginx:latest
          imagePullPolicy: IfNotPresent # image pull policy
          # Liveness probe
          livenessProbe:
            httpGet:
              port: 8080 # probe a port that does not exist on purpose
              path: /
  # Number of pod replicas
  replicas: 2
---
kind: Service
apiVersion: v1
metadata:
  name: test-deployment-svc
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
  selector: # pods must carry all of these labels (extra labels are fine)
    app: nginx
    dev: pro
```

执行

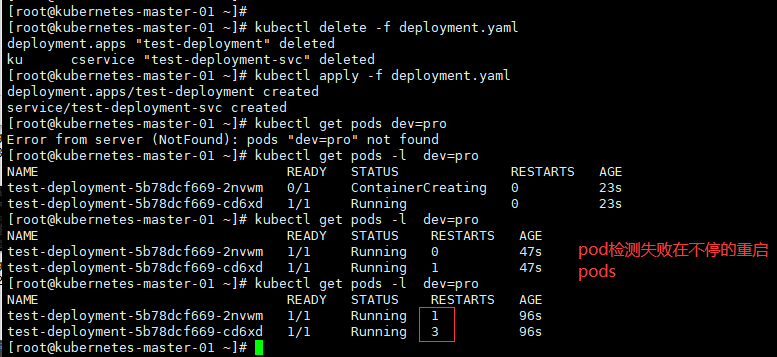

[root@kubernetes-master-01 ~]# kubectl delete -f deployment.yaml

deployment.apps "test-deployment" deleted

ku cservice "test-deployment-svc" deleted

[root@kubernetes-master-01 ~]# kubectl apply -f deployment.yaml

deployment.apps/test-deployment created

service/test-deployment-svc created

[root@kubernetes-master-01 ~]# kubectl get pods dev=pro

Error from server (NotFound): pods "dev=pro" not found

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-5b78dcf669-2nvwm 0/1 ContainerCreating 0 23s

test-deployment-5b78dcf669-cd6xd 1/1 Running 0 23s

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-5b78dcf669-2nvwm 1/1 Running 0 47s

test-deployment-5b78dcf669-cd6xd 1/1 Running 1 47s

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-5b78dcf669-2nvwm 1/1 Running 1 96s

test-deployment-5b78dcf669-cd6xd 1/1 Running 3 96s

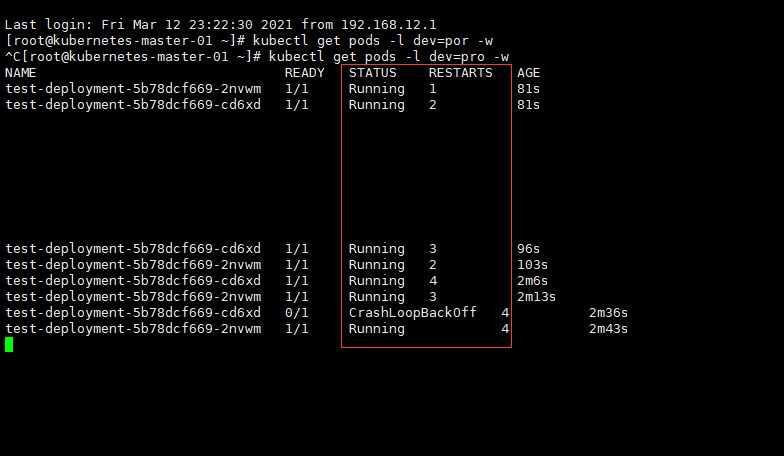

# 查看pods的状态,不断的重启pods

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-5b78dcf669-2nvwm 0/1 CrashLoopBackOff 6 7m40s

test-deployment-5b78dcf669-cd6xd 0/1 CrashLoopBackOff 6 7m40s

# 查看执行的结果

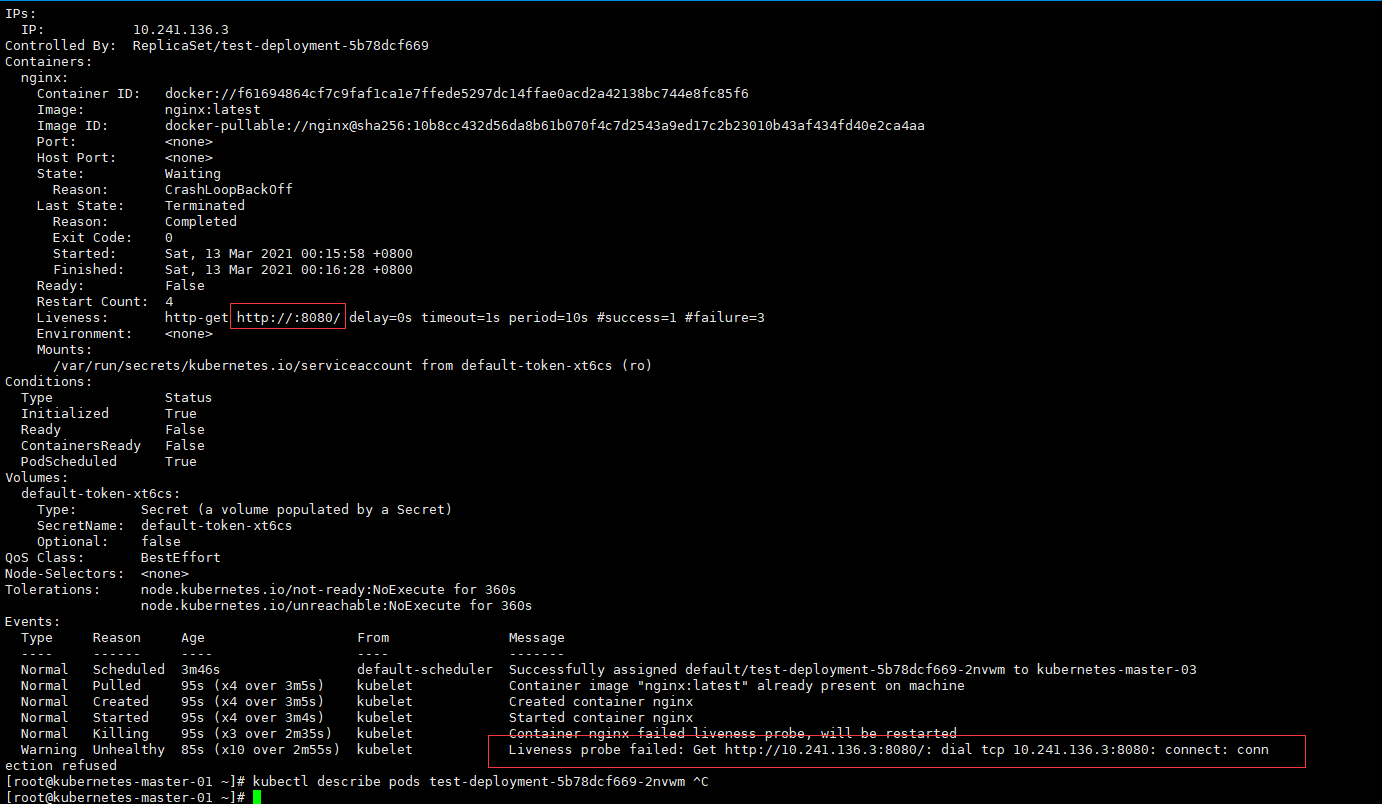

[root@kubernetes-master-01 ~]# kubectl describe pods test-deployment-5b78dcf669-2nvwm

Name: test-deployment-5b78dcf669-2nvwm

Namespace: default

Priority: 0

Node: kubernetes-master-03/172.16.0.52

Start Time: Sat, 13 Mar 2021 00:13:17 +0800

Labels: app=nginx

dev=pro

name=randysun

pod-template-hash=5b78dcf669

Annotations: <none>

Status: Running

IP: 10.241.136.3

IPs:

IP: 10.241.136.3

Controlled By: ReplicaSet/test-deployment-5b78dcf669

Containers:

nginx:

Container ID: docker://f61694864cf7c9faf1ca1e7ffede5297dc14ffae0acd2a42138bc744e8fc85f6

Image: nginx:latest

Image ID: docker-pullable://nginx@sha256:10b8cc432d56da8b61b070f4c7d2543a9ed17c2b23010b43af434fd40e2ca4aa

Port: <none>

Host Port: <none>

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: Completed

Exit Code: 0

Started: Sat, 13 Mar 2021 00:15:58 +0800

Finished: Sat, 13 Mar 2021 00:16:28 +0800

Ready: False

Restart Count: 4

Liveness: http-get http://:8080/ delay=0s timeout=1s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-xt6cs (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

default-token-xt6cs:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-xt6cs

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 360s

node.kubernetes.io/unreachable:NoExecute for 360s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m46s default-scheduler Successfully assigned default/test-deployment-5b78dcf669-2nvwm to kubernetes-master-03

Normal Pulled 95s (x4 over 3m5s) kubelet Container image "nginx:latest" already present on machine

Normal Created 95s (x4 over 3m5s) kubelet Created container nginx

Normal Started 95s (x4 over 3m4s) kubelet Started container nginx

Normal Killing 95s (x3 over 2m35s) kubelet Container nginx failed liveness probe, will be restarted

Warning Unhealthy 85s (x10 over 2m55s) kubelet Liveness probe failed: Get http://10.241.136.3:8080/: dial tcp 10.241.136.3:8080: connect: connection refused
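The CrashLoopBackOff status above means the kubelet is waiting before the next restart. According to the Kubernetes documentation, restarts use an exponential back-off delay (10s, 20s, 40s, ...) capped at five minutes, and the timer is reset once a container has run for 10 minutes without problems. A minimal sketch of that schedule, assuming those documented defaults; restart_backoff is a hypothetical helper, not kubelet code.

```python
def restart_backoff(restarts_so_far: int, base: int = 10, cap: int = 300) -> int:
    """Seconds the kubelet waits before the next restart (simplified model).

    The delay doubles with every restart, starting at `base` seconds and
    never exceeding `cap` seconds (five minutes).
    """
    return min(base * 2 ** restarts_so_far, cap)


print([restart_backoff(n) for n in range(7)])  # [10, 20, 40, 80, 160, 300, 300]
```

This is why the RESTARTS column grows quickly at first and then more and more slowly.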

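1.4 Parameter reference

- failureThreshold: the number of consecutive failed probes after which the probe is considered failed. Default 3, minimum 1.

- initialDelaySeconds: the number of seconds to wait after the container starts before the first probe. If omitted, probing starts immediately (default 0).

- periodSeconds: how often, in seconds, to run the probe. Default 10, minimum 1.

- successThreshold: after a failure, the number of consecutive successful probes required before the probe is considered successful again. Default 1, minimum 1; for liveness probes it must be 1.

- timeoutSeconds: the number of seconds after which a single probe attempt times out. Default 1, minimum 1.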
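To see how these parameters interact, here is a simplified Python model, not the kubelet's actual implementation: the status flips to failed only after failureThreshold consecutive failures, and back to success only after successThreshold consecutive successes. ProbeTracker and verdict_time are hypothetical names, the starting status is an assumption of the sketch, and the timing estimate ignores probe timeouts and the kubelet's scheduling jitter.

```python
from dataclasses import dataclass


@dataclass
class ProbeTracker:
    """Simplified model of how consecutive probe results change a probe's status."""

    failure_threshold: int = 3  # failureThreshold (default 3)
    success_threshold: int = 1  # successThreshold (default 1; must be 1 for liveness)
    healthy: bool = True        # assumed starting status for this sketch
    consecutive_failures: int = 0
    consecutive_successes: int = 0

    def observe(self, ok: bool) -> bool:
        """Record one probe result and return the resulting status."""
        if ok:
            self.consecutive_successes += 1
            self.consecutive_failures = 0
            if self.consecutive_successes >= self.success_threshold:
                self.healthy = True
        else:
            self.consecutive_failures += 1
            self.consecutive_successes = 0
            if self.consecutive_failures >= self.failure_threshold:
                self.healthy = False
        return self.healthy


def verdict_time(initial_delay: int, period: int, failure_threshold: int) -> int:
    """Approximate seconds after container start until a never-passing probe is
    declared failed: first probe at initial_delay, then one probe per period."""
    return initial_delay + (failure_threshold - 1) * period


tracker = ProbeTracker()
print([tracker.observe(ok) for ok in [True, False, False, False, True]])
# [True, True, True, False, True]
print(verdict_time(initial_delay=0, period=10, failure_threshold=3))  # 20
```

With the defaults, a container that never passes is declared failed roughly 20 seconds after it starts; with the values in the Exec manifest (initialDelaySeconds 8, periodSeconds 3, failureThreshold 2) the same estimate gives about 11 seconds.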

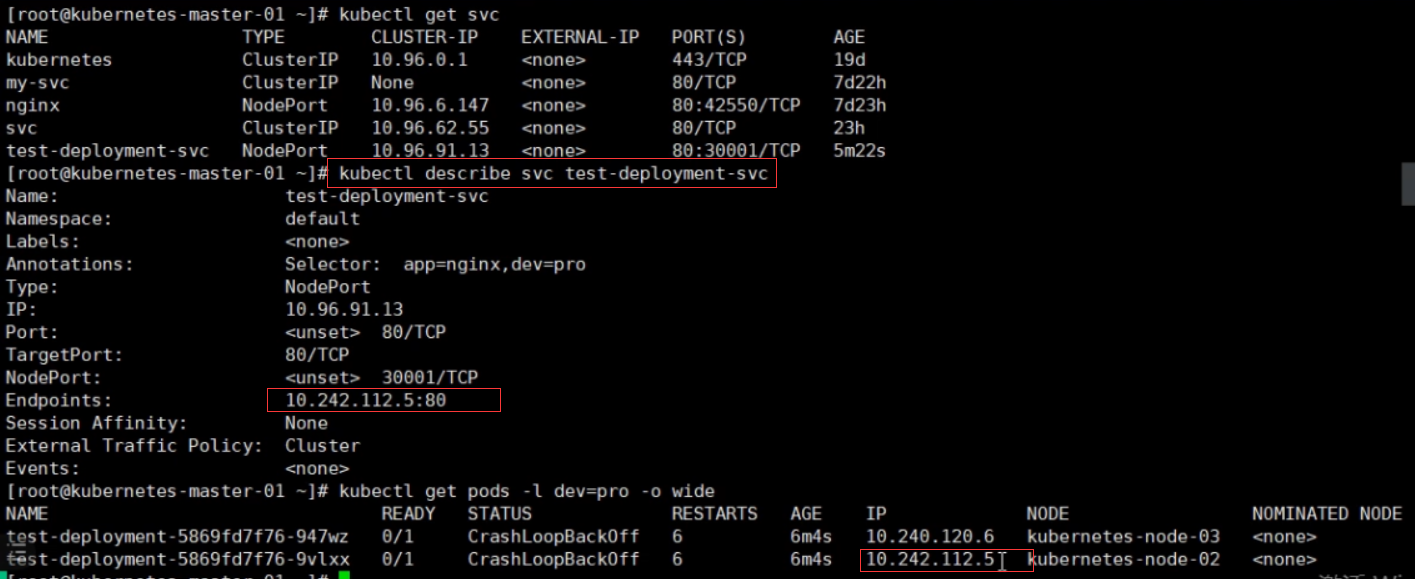

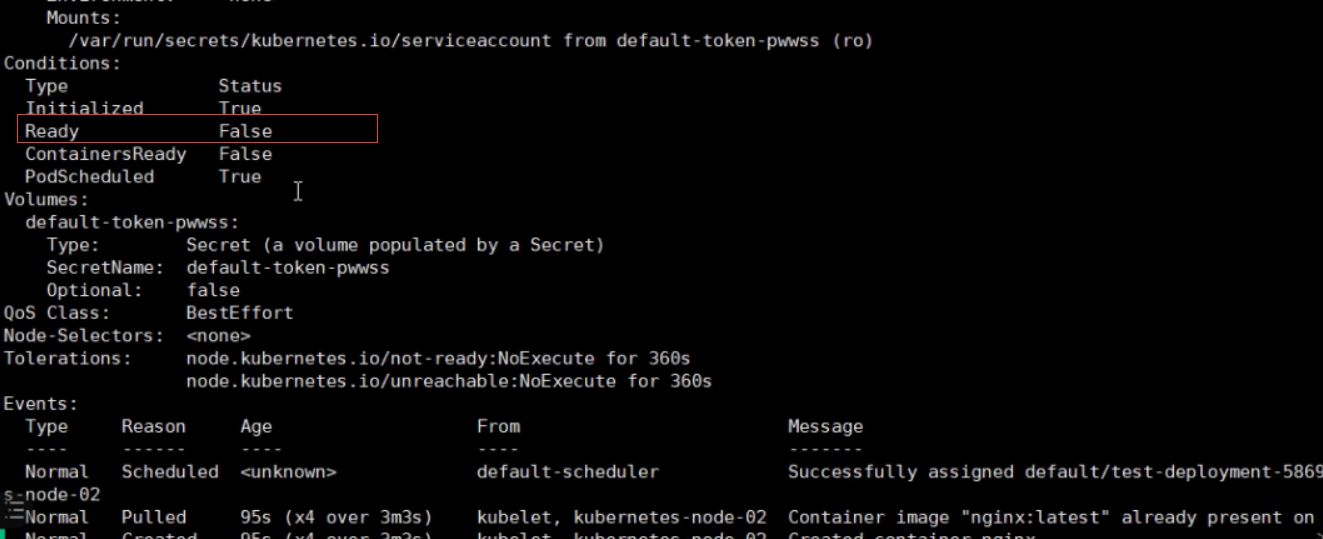

2. Readiness probe (ReadinessProbe)

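The readiness probe determines whether a container is ready to serve, that is, whether its Ready status is True and it can accept requests. If the ReadinessProbe fails, the container's Ready status is set to False and the endpoints controller removes this Pod's address from the Endpoints list of the corresponding Service, so no more requests are routed to the Pod until a later probe succeeds. (The Pod is taken out of rotation and receives no traffic; unlike a liveness failure, it is not restarted.)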

Note: the readiness check verifies whether the service is currently able to serve traffic.
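The effect of readiness on a Service can be modelled as a filter: only Pods whose readiness probe currently passes contribute an address to the Service's Endpoints. A minimal Python sketch; PodInfo and service_endpoints are hypothetical names, and the pod names and IP addresses are sample values.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PodInfo:
    name: str
    ip: str
    ready: bool  # True while the readiness probe passes


def service_endpoints(pods: list[PodInfo], target_port: int) -> list[str]:
    """Return the "ip:port" addresses a Service would route to: ready pods only."""
    return [f"{pod.ip}:{target_port}" for pod in pods if pod.ready]


pods = [
    PodInfo("pod-a", "10.241.216.3", ready=True),
    PodInfo("pod-b", "10.241.32.3", ready=False),  # failing readiness probe
]
print(",".join(service_endpoints(pods, 80)))  # 10.241.216.3:80
```

When no Pod is ready the list is empty, which corresponds to an empty Endpoints line in kubectl describe svc.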

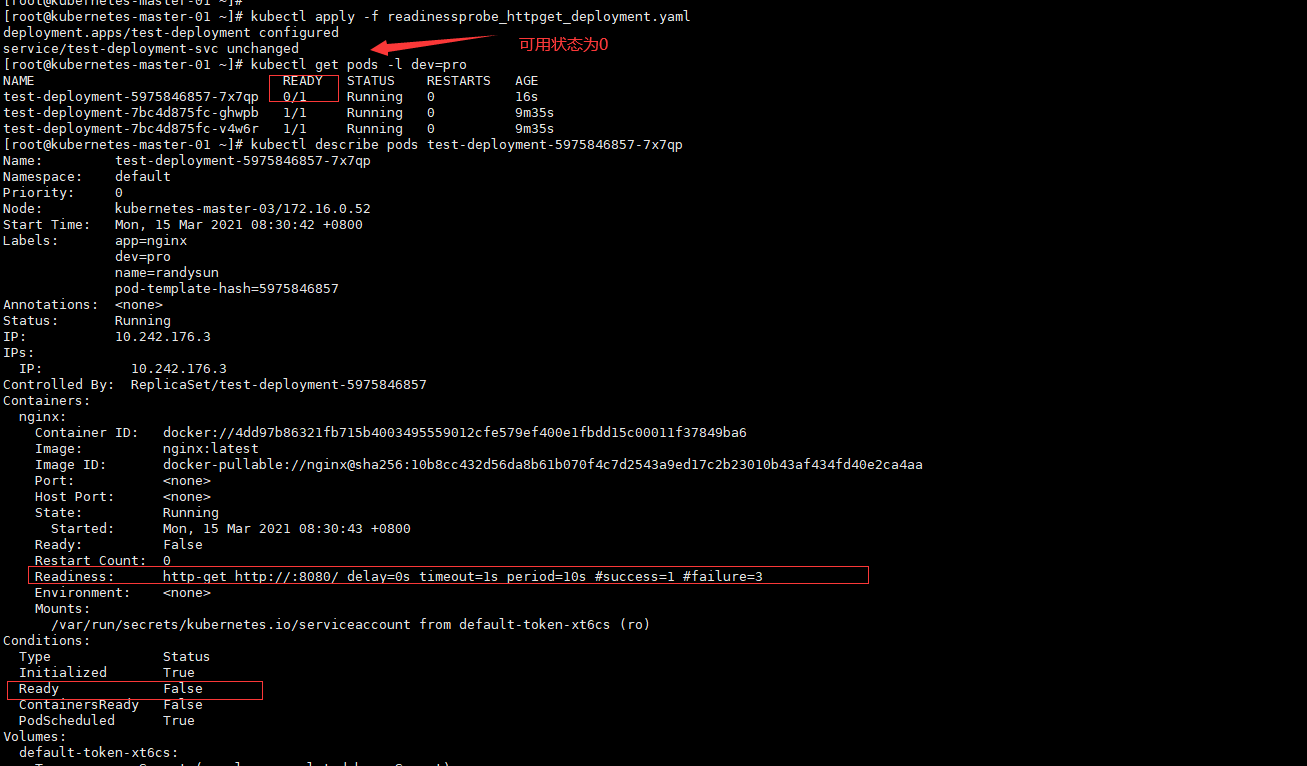

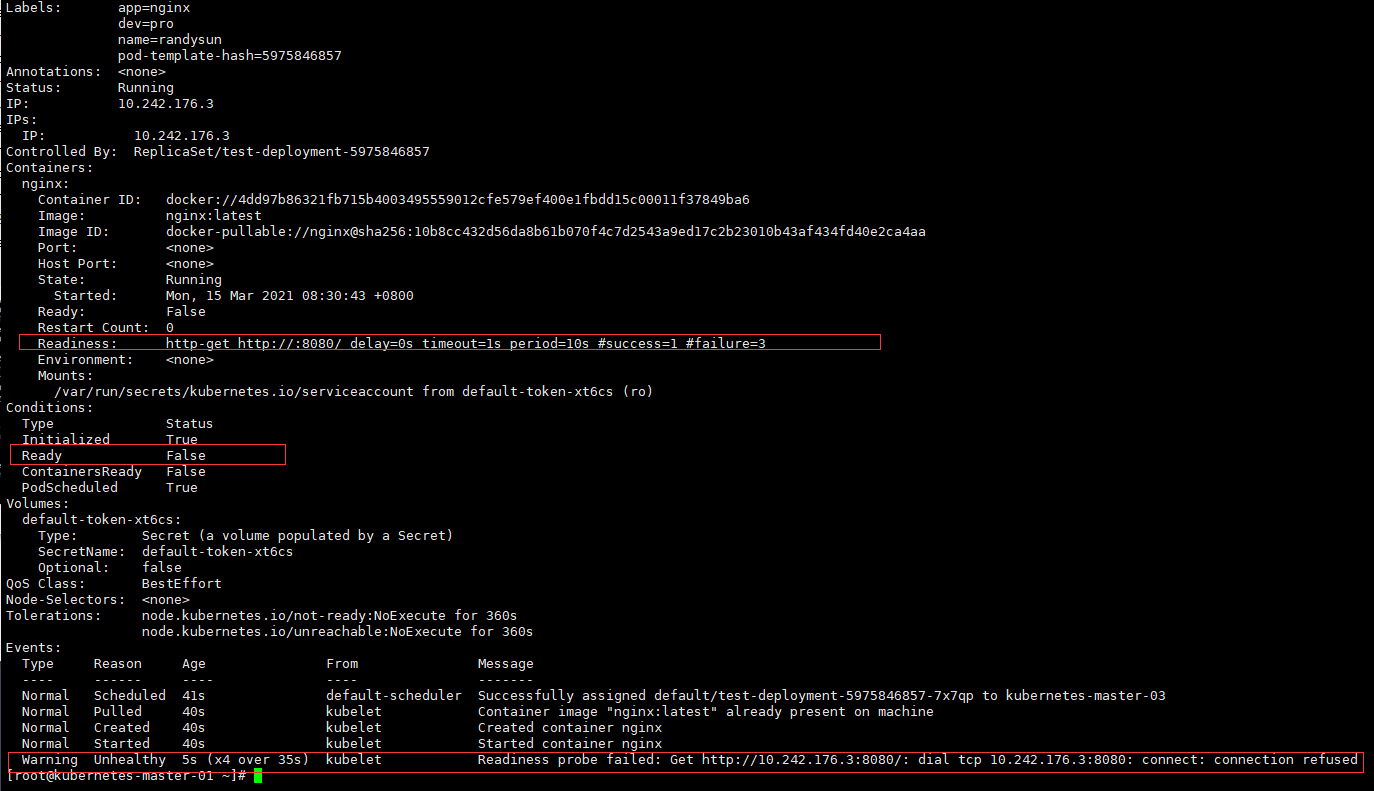

When the probe fails:

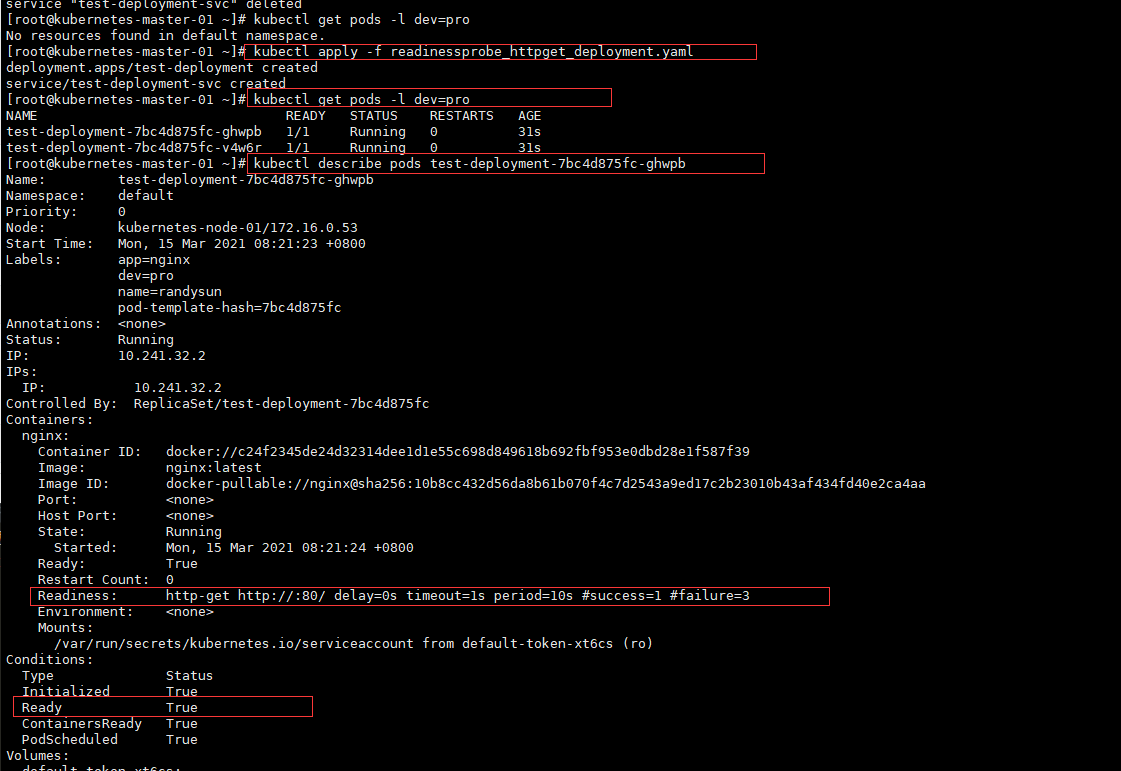

2.1、 HTTPGet

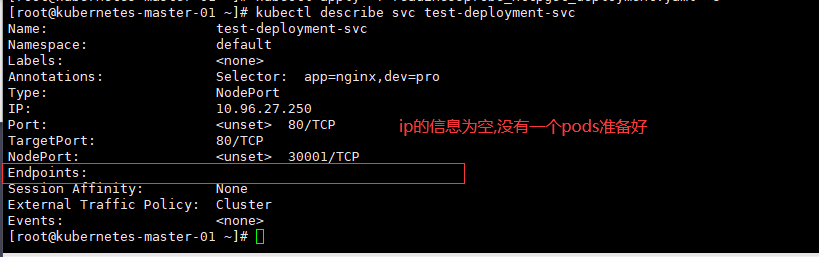

通过访问某个 URL 的方式探测当前 POD 是否可以正常对外提供服务。
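Two details of the HTTPGet handler are worth making concrete: by default the kubelet sends the request to the Pod's IP (setting host: 127.0.0.1 makes it connect to the node's own loopback interface instead, which is why that setting fails), and any status code from 200 up to, but not including, 400 counts as success. A small Python sketch of both rules; probe_url and http_probe_passed are hypothetical helpers, not part of any Kubernetes client library.

```python
from __future__ import annotations


def probe_url(pod_ip: str, port: int, path: str = "/", scheme: str = "HTTP",
              host: str | None = None) -> str:
    """Build the URL an httpGet probe requests; the host defaults to the pod IP."""
    target = host if host else pod_ip
    return f"{scheme.lower()}://{target}:{port}{path}"


def http_probe_passed(status_code: int) -> bool:
    """An HTTP probe succeeds for any status code in the range 200-399."""
    return 200 <= status_code < 400


print(probe_url("10.241.136.3", 8080))  # http://10.241.136.3:8080/
print(http_probe_passed(200), http_probe_passed(302), http_probe_passed(404))  # True True False
```

The generated URL has the same form as the ones reported in the kubelet's "probe failed" events.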

```yaml
# Readiness probe: HTTPGet handler
# Resource type
kind: Deployment
# API version
apiVersion: apps/v1
# Metadata
metadata:
  namespace: default
  name: test-deployment
  labels:
    app: test-deployment
spec:
  # Selector
  selector:
    # Exact (equality-based) match
    matchLabels:
      app: nginx
      dev: pro
  # Pod template
  template:
    metadata:
      # Must contain every matchLabels entry; extra labels are allowed
      labels:
        app: nginx
        dev: pro
        name: randysun
    spec:
      containers:
        - name: nginx # container name
          image: nginx:latest
          imagePullPolicy: IfNotPresent # image pull policy
          # Readiness probe
          readinessProbe:
            httpGet:
              port: 80
              path: /
              # host: 127.0.0.1  # setting this makes the probe fail; leave it unset so the pod IP is probed
  replicas: 2
---
kind: Service
apiVersion: v1
metadata:
  name: test-deployment-svc
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
  selector: # pods must carry all of these labels (extra labels are fine)
    app: nginx
    dev: pro
```

Run:

```console
# Create the pods
[root@kubernetes-master-01 ~]# kubectl apply -f readinessprobe_httpget_deployment.yaml
deployment.apps/test-deployment created
service/test-deployment-svc created
# List the pods
[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro
NAME                               READY   STATUS    RESTARTS   AGE
test-deployment-7bc4d875fc-ghwpb   1/1     Running   0          31s
test-deployment-7bc4d875fc-v4w6r   1/1     Running   0          31s
# Show the pod details
[root@kubernetes-master-01 ~]# kubectl describe pods test-deployment-7bc4d875fc-ghwpb
Name:         test-deployment-7bc4d875fc-ghwpb
Namespace:    default
Priority:     0
Node:         kubernetes-node-01/172.16.0.53
Start Time:   Mon, 15 Mar 2021 08:21:23 +0800
Labels:       app=nginx
              dev=pro
              name=randysun
              pod-template-hash=7bc4d875fc
Annotations:  <none>
Status:       Running
IP:           10.241.32.2
IPs:
  IP:           10.241.32.2
Controlled By:  ReplicaSet/test-deployment-7bc4d875fc
Containers:
  nginx:
    Container ID:   docker://c24f2345de24d32314dee1d1e55c698d849618b692fbf953e0dbd28e1f587f39
    Image:          nginx:latest
    Image ID:       docker-pullable://nginx@sha256:10b8cc432d56da8b61b070f4c7d2543a9ed17c2b23010b43af434fd40e2ca4aa
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Mon, 15 Mar 2021 08:21:24 +0800
    Ready:          True
    Restart Count:  0
    Readiness:      http-get http://:80/ delay=0s timeout=1s period=10s #success=1 #failure=3   # readiness probe, HTTPGet handler
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-xt6cs (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True   # probe succeeded
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-xt6cs:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-xt6cs
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 360s
                 node.kubernetes.io/unreachable:NoExecute for 360s
Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  2m    default-scheduler  Successfully assigned default/test-deployment-7bc4d875fc-ghwpb to kubernetes-node-01
  Normal  Pulled     119s  kubelet            Container image "nginx:latest" already present on machine
  Normal  Created    119s  kubelet            Created container nginx
  Normal  Started    119s  kubelet            Started container nginx
# Inspect the Service
[root@kubernetes-master-01 ~]# kubectl describe svc test-deployment-svc
Name:                     test-deployment-svc
Namespace:                default
Labels:                   <none>
Annotations:              Selector:  app=nginx,dev=pro
Type:                     NodePort
IP:                       10.96.27.250
Port:                     <unset>  80/TCP
TargetPort:               80/TCP
NodePort:                 <unset>  30001/TCP
Endpoints:                10.241.216.3:80,10.241.32.3:80
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
```

Now point the readiness probe at a port that nginx does not listen on:

```yaml
# Readiness probe: HTTPGet handler
# Resource type
kind: Deployment
# API version
apiVersion: apps/v1
# Metadata
metadata:
  namespace: default
  name: test-deployment
  labels:
    app: test-deployment
spec:
  # Selector
  selector:
    # Exact (equality-based) match
    matchLabels:
      app: nginx
      dev: pro
  # Pod template
  template:
    metadata:
      # Must contain every matchLabels entry; extra labels are allowed
      labels:
        app: nginx
        dev: pro
        name: randysun
    spec:
      containers:
        - name: nginx # container name
          image: nginx:latest
          imagePullPolicy: IfNotPresent # image pull policy
          # Readiness probe
          readinessProbe:
            httpGet:
              port: 8080 # wrong port on purpose
              path: /
  replicas: 2
---
kind: Service
apiVersion: v1
metadata:
  name: test-deployment-svc
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
  selector: # pods must carry all of these labels (extra labels are fine)
    app: nginx
    dev: pro
```

Run:

```console
[root@kubernetes-master-01 ~]# kubectl apply -f readinessprobe_httpget_deployment.yaml
deployment.apps/test-deployment configured
service/test-deployment-svc unchanged
[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro
NAME                               READY   STATUS    RESTARTS   AGE
test-deployment-5975846857-4f6xq   0/1     Running   0          10s
test-deployment-5975846857-h6fp9   0/1     Running   0          11s
[root@kubernetes-master-01 ~]# kubectl describe pods test-deployment-5975846857-7x7qp
Name:         test-deployment-5975846857-7x7qp
Namespace:    default
Priority:     0
Node:         kubernetes-master-03/172.16.0.52
Start Time:   Mon, 15 Mar 2021 08:30:42 +0800
Labels:       app=nginx
              dev=pro
              name=randysun
              pod-template-hash=5975846857
Annotations:  <none>
Status:       Running
IP:           10.242.176.3
IPs:
  IP:           10.242.176.3
Controlled By:  ReplicaSet/test-deployment-5975846857
Containers:
  nginx:
    Container ID:   docker://4dd97b86321fb715b4003495559012cfe579ef400e1fbdd15c00011f37849ba6
    Image:          nginx:latest
    Image ID:       docker-pullable://nginx@sha256:10b8cc432d56da8b61b070f4c7d2543a9ed17c2b23010b43af434fd40e2ca4aa
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Mon, 15 Mar 2021 08:30:43 +0800
    Ready:          False
    Restart Count:  0
    Readiness:      http-get http://:8080/ delay=0s timeout=1s period=10s #success=1 #failure=3
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-xt6cs (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  default-token-xt6cs:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-xt6cs
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 360s
                 node.kubernetes.io/unreachable:NoExecute for 360s
Events:
  Type     Reason     Age              From               Message
  ----     ------     ----             ----               -------
  Normal   Scheduled  41s              default-scheduler  Successfully assigned default/test-deployment-5975846857-7x7qp to kubernetes-master-03
  Normal   Pulled     40s              kubelet            Container image "nginx:latest" already present on machine
  Normal   Created    40s              kubelet            Created container nginx
  Normal   Started    40s              kubelet            Started container nginx
  Warning  Unhealthy  5s (x4 over 35s)  kubelet           Readiness probe failed: Get http://10.242.176.3:8080/: dial tcp 10.242.176.3:8080: connect: connection refused
[root@kubernetes-master-01 ~]# kubectl describe svc test-deployment-svc
Name:                     test-deployment-svc
Namespace:                default
Labels:                   <none>
Annotations:              Selector:  app=nginx,dev=pro
Type:                     NodePort
IP:                       10.96.27.250
Port:                     <unset>  80/TCP
TargetPort:               80/TCP
NodePort:                 <unset>  30001/TCP
Endpoints:
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
```

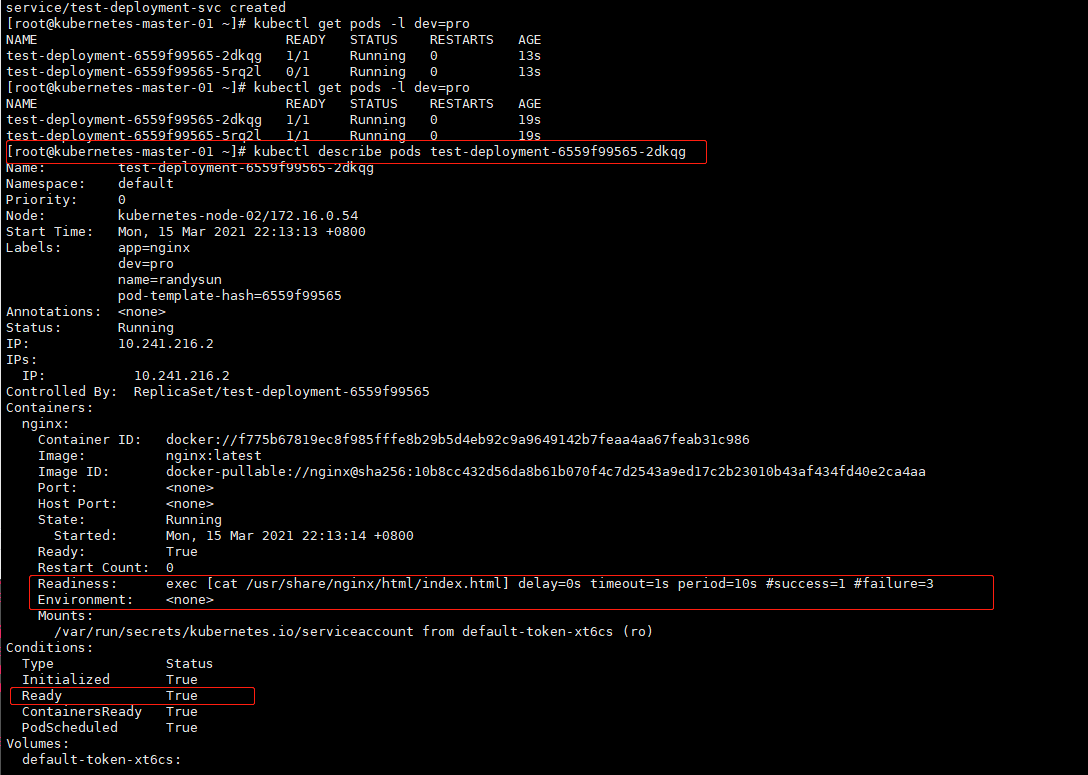

2.2 Exec

Checks whether the service can serve traffic by running a command inside the container; an exit status of 0 means success.
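An exec probe passes when the command exits with status 0 and fails on any non-zero status or on timeout. The Python sketch below mimics that rule for a command run locally; the real kubelet runs the command inside the container through the container runtime, and exec_probe is a hypothetical helper.

```python
from __future__ import annotations

import subprocess


def exec_probe(command: list[str], timeout: float = 1.0) -> bool:
    """Run `command`; exit status 0 means success, anything else is a failure."""
    try:
        result = subprocess.run(
            command,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout,
        )
    except (OSError, subprocess.TimeoutExpired):
        # Command not found / not executable, or it ran longer than the timeout.
        return False
    return result.returncode == 0


# Same check as the manifest: succeeds only while index.html exists locally.
print(exec_probe(["cat", "/usr/share/nginx/html/index.html"]))
```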

```yaml
# Readiness probe: Exec handler
# Resource type
kind: Deployment
# API version
apiVersion: apps/v1
# Metadata
metadata:
  namespace: default
  name: test-deployment
  labels:
    app: test-deployment
spec:
  # Selector
  selector:
    # Exact (equality-based) match
    matchLabels:
      app: nginx
      dev: pro
  # Pod template
  template:
    metadata:
      # Must contain every matchLabels entry; extra labels are allowed
      labels:
        app: nginx
        dev: pro
        name: randysun
    spec:
      containers:
        - name: nginx # container name
          image: nginx:latest
          imagePullPolicy: IfNotPresent # image pull policy
          # Readiness probe
          readinessProbe:
            exec:
              command:
                - cat
                - /usr/share/nginx/html/index.html
  replicas: 2
---
kind: Service
apiVersion: v1
metadata:
  name: test-deployment-svc
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
  selector: # pods must carry all of these labels (extra labels are fine)
    app: nginx
    dev: pro
```

Run:

```console
[root@kubernetes-master-01 ~]# kubectl apply -f readinessprobe_exec_deployment.yaml
deployment.apps/test-deployment created
service/test-deployment-svc created
[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro
NAME                               READY   STATUS    RESTARTS   AGE
test-deployment-6559f99565-2dkqg   1/1     Running   0          13s
test-deployment-6559f99565-5rq2l   0/1     Running   0          13s
[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro
NAME                               READY   STATUS    RESTARTS   AGE
test-deployment-6559f99565-2dkqg   1/1     Running   0          19s
test-deployment-6559f99565-5rq2l   1/1     Running   0          19s
[root@kubernetes-master-01 ~]# kubectl describe pods test-deployment-6559f99565-2dkqg
Name:         test-deployment-6559f99565-2dkqg
Namespace:    default
Priority:     0
Node:         kubernetes-node-02/172.16.0.54
Start Time:   Mon, 15 Mar 2021 22:13:13 +0800
Labels:       app=nginx
              dev=pro
              name=randysun
              pod-template-hash=6559f99565
Annotations:  <none>
Status:       Running
IP:           10.241.216.2
IPs:
  IP:           10.241.216.2
Controlled By:  ReplicaSet/test-deployment-6559f99565
Containers:
  nginx:
    Container ID:   docker://f775b67819ec8f985fffe8b29b5d4eb92c9a9649142b7feaa4aa67feab31c986
    Image:          nginx:latest
    Image ID:       docker-pullable://nginx@sha256:10b8cc432d56da8b61b070f4c7d2543a9ed17c2b23010b43af434fd40e2ca4aa
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Mon, 15 Mar 2021 22:13:14 +0800
    Ready:          True
    Restart Count:  0
    Readiness:      exec [cat /usr/share/nginx/html/index.html] delay=0s timeout=1s period=10s #success=1 #failure=3
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-xt6cs (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-xt6cs:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-xt6cs
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 360s
                 node.kubernetes.io/unreachable:NoExecute for 360s
Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  30s   default-scheduler  Successfully assigned default/test-deployment-6559f99565-2dkqg to kubernetes-node-02
  Normal  Pulled     30s   kubelet            Container image "nginx:latest" already present on machine
  Normal  Created    30s   kubelet            Created container nginx
  Normal  Started    30s   kubelet            Started container nginx
```

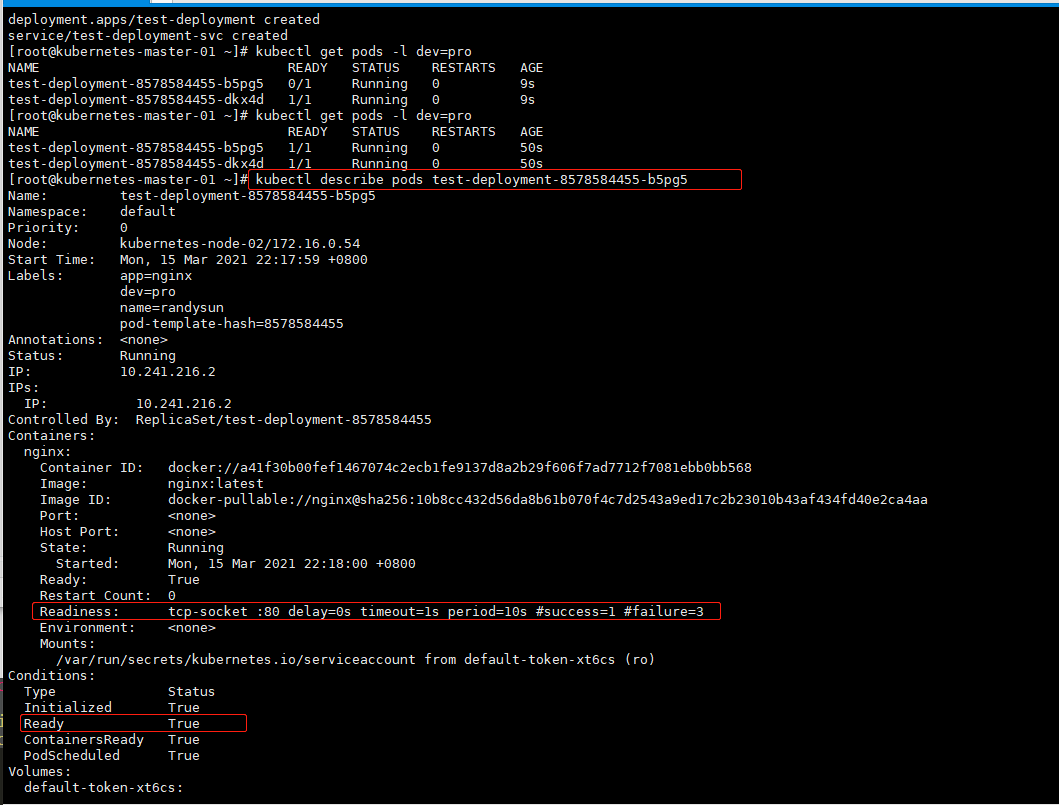

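2.3 TCPSocket

Checks whether the service can serve traffic by trying to open a TCP connection to a port (a TCP connect, not an ICMP ping); the probe succeeds if the connection can be established.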
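The TCPSocket handler only checks that a TCP connection can be opened; it sends no data and does not look at any application response. A Python sketch of the same check using socket.create_connection; tcp_probe is a hypothetical helper, and in the cluster the kubelet connects to the Pod IP by default.

```python
import socket


def tcp_probe(host: str, port: int, timeout: float = 1.0) -> bool:
    """Succeed if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, unreachable host, or timeout (socket.timeout is an OSError).
        return False


print(tcp_probe("127.0.0.1", 80))  # True only if something listens on local port 80
```

This is why a port that nothing listens on, such as 8099 in the liveness example, fails immediately with "connection refused" instead of waiting for the timeout.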

```yaml
# Readiness probe: TCPSocket handler
# Resource type
kind: Deployment
# API version
apiVersion: apps/v1
# Metadata
metadata:
  namespace: default
  name: test-deployment
  labels:
    app: test-deployment
spec:
  # Selector
  selector:
    # Exact (equality-based) match
    matchLabels:
      app: nginx
      dev: pro
  # Pod template
  template:
    metadata:
      # Must contain every matchLabels entry; extra labels are allowed
      labels:
        app: nginx
        dev: pro
        name: randysun
    spec:
      containers:
        - name: nginx # container name
          image: nginx:latest
          imagePullPolicy: IfNotPresent # image pull policy
          # Readiness probe
          readinessProbe:
            tcpSocket:
              port: 80
  replicas: 2
---
kind: Service
apiVersion: v1
metadata:
  name: test-deployment-svc
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
  selector: # pods must carry all of these labels (extra labels are fine)
    app: nginx
    dev: pro
```

Run:

```console
[root@kubernetes-master-01 ~]# kubectl apply -f readinessprobe_tcpsocket_deployment.yaml
deployment.apps/test-deployment created
service/test-deployment-svc created
[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro
NAME                               READY   STATUS    RESTARTS   AGE
test-deployment-8578584455-b5pg5   0/1     Running   0          9s
test-deployment-8578584455-dkx4d   1/1     Running   0          9s
[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro
NAME                               READY   STATUS    RESTARTS   AGE
test-deployment-8578584455-b5pg5   1/1     Running   0          50s
test-deployment-8578584455-dkx4d   1/1     Running   0          50s
[root@kubernetes-master-01 ~]# kubectl describe pods test-deployment-8578584455-b5pg5
Name:         test-deployment-8578584455-b5pg5
Namespace:    default
Priority:     0
Node:         kubernetes-node-02/172.16.0.54
Start Time:   Mon, 15 Mar 2021 22:17:59 +0800
Labels:       app=nginx
              dev=pro
              name=randysun
              pod-template-hash=8578584455
Annotations:  <none>
Status:       Running
IP:           10.241.216.2
IPs:
  IP:           10.241.216.2
Controlled By:  ReplicaSet/test-deployment-8578584455
Containers:
  nginx:
    Container ID:   docker://a41f30b00fef1467074c2ecb1fe9137d8a2b29f606f7ad7712f7081ebb0bb568
    Image:          nginx:latest
    Image ID:       docker-pullable://nginx@sha256:10b8cc432d56da8b61b070f4c7d2543a9ed17c2b23010b43af434fd40e2ca4aa
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Mon, 15 Mar 2021 22:18:00 +0800
    Ready:          True
    Restart Count:  0
    Readiness:      tcp-socket :80 delay=0s timeout=1s period=10s #success=1 #failure=3
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-xt6cs (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-xt6cs:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-xt6cs
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 360s
                 node.kubernetes.io/unreachable:NoExecute for 360s
Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  63s   default-scheduler  Successfully assigned default/test-deployment-8578584455-b5pg5 to kubernetes-node-02
  Normal  Pulled     63s   kubelet            Container image "nginx:latest" already present on machine
  Normal  Created    63s   kubelet            Created container nginx
  Normal  Started    62s   kubelet            Started container nginx
```

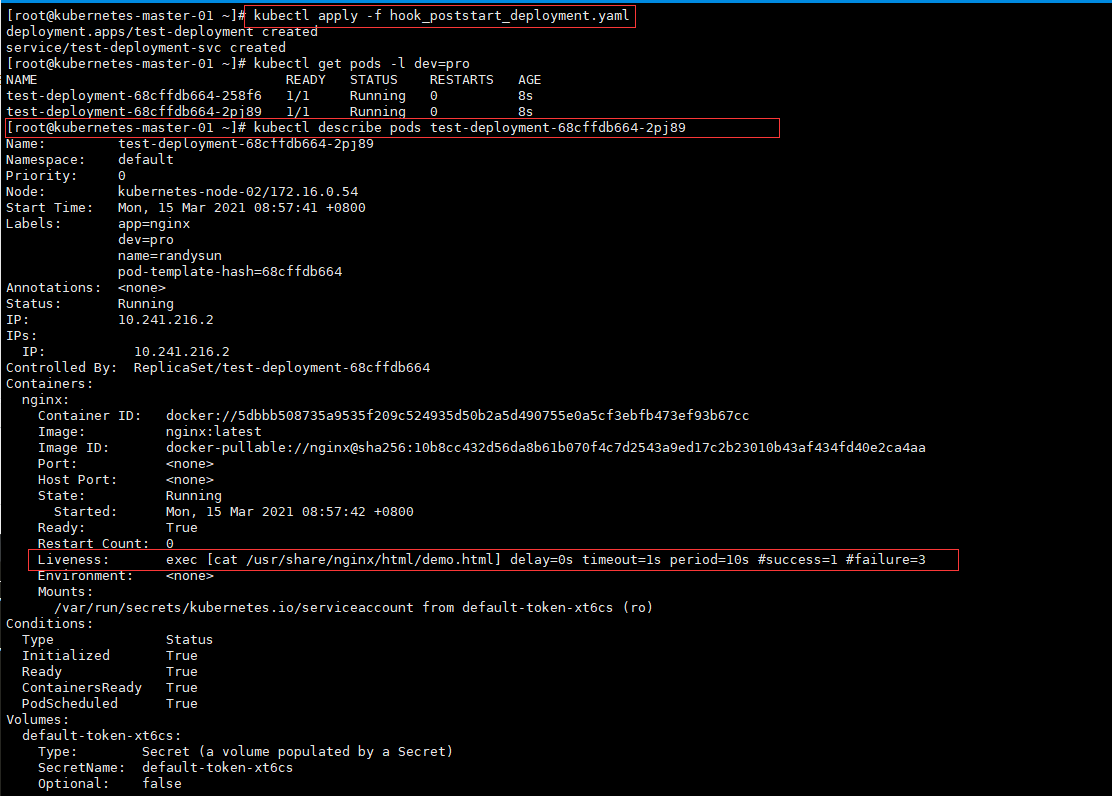

3. Lifecycle hooks (HOOK)

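Kubernetes also provides lifecycle hooks for containers, known as Pod hooks. Pod hooks are triggered by the kubelet right after a container is created or right before it is terminated, and they are part of the container's lifecycle. Hooks can be configured for every container in a Pod. Kubernetes provides two hooks: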

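- PostStart (fires the moment the container has been created): this hook runs immediately after the container is created. However, there is no guarantee that it runs before the container's ENTRYPOINT, and no parameters are passed to the handler. It is mainly used for deployment tasks and environment preparation. Note that if the hook takes too long or hangs, the container cannot reach the Running state. (It can be used, for example, to download keys at startup.)

- PreStop: this hook is called immediately before the container is terminated. It is blocking, that is, synchronous, so it must complete before the call to delete the container is sent. It is mainly used to shut the application down gracefully, notify other systems, and so on. The Pod's termination grace period (terminationGracePeriodSeconds) covers both the PreStop hook and the normal container stop, so if the hook hangs, the Pod stays in the Terminating state until the grace period expires and the container is killed. (It can be used, for example, to delete keys when the container stops, which keeps confidential data relatively safe.)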
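In Kubernetes the termination grace period starts counting before the PreStop hook runs, so time spent in the hook is subtracted from what the main process gets to shut down after SIGTERM. A simplified Python model, assuming the default terminationGracePeriodSeconds of 30 and ignoring any small extension the kubelet may grant; sigterm_budget is a hypothetical helper.

```python
def sigterm_budget(grace_period: float, prestop_seconds: float) -> float:
    """Seconds left for the process to shut down after PreStop finishes.

    The grace period (terminationGracePeriodSeconds) starts before PreStop
    runs, so the hook's duration is deducted from it. A result of 0 means the
    hook used up the whole grace period.
    """
    return max(grace_period - prestop_seconds, 0.0)


print(sigterm_budget(30, 5))   # 25
print(sigterm_budget(30, 45))  # 0.0
```

If a PreStop hook needs more time than this leaves, terminationGracePeriodSeconds has to be raised accordingly.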

如果 PostStart 或者 PreStop 钩子失败, 它会杀死容器。所以我们应该让钩子函数尽可能的轻量。当然有些 情况下,长时间运行命令是合理的, 比如在停止容器之前预先保存状态

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hook-demo1
spec:
  containers:
  - name: hook-dem
    image: nginx
    lifecycle:
      postStart:
        exec:
          command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]
```
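The example above only exercises postStart. Below is a minimal sketch that adds a preStop handler to the same kind of Pod; the Pod name hook-demo2, the `nginx -s quit` command and the 5-second sleep are assumptions chosen for illustration, not part of the original tutorial.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hook-demo2              # hypothetical name for this sketch
spec:
  # The preStop hook and the container's shutdown must both finish within
  # this grace period; after it expires the container is killed anyway.
  terminationGracePeriodSeconds: 30
  containers:
  - name: nginx
    image: nginx
    lifecycle:
      postStart:
        exec:
          command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]
      preStop:
        exec:
          # Ask nginx to stop gracefully (QUIT), then wait briefly so
          # in-flight requests can drain before the hook returns.
          command: ["/bin/sh", "-c", "nginx -s quit; sleep 5"]
```

Because preStop is synchronous, the kubelet waits for this command to return before sending SIGTERM to the container, and the whole sequence is bounded by terminationGracePeriodSeconds.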

Example

```yaml
# Lifecycle hook: PostStart
# Resource type
kind: Deployment
# API version
apiVersion: apps/v1
# Metadata
metadata:
  namespace: default
  name: test-deployment
  labels:
    app: test-deployment
spec:
  # Selector
  selector:
    # Exact label match
    matchLabels:
      app: nginx
      dev: pro
  # Pod template
  template:
    metadata:
      # Must contain every matchLabels entry: extra labels are allowed, missing ones are not
      labels:
        app: nginx
        dev: pro
        name: randysun
    spec:
      containers:
      - name: nginx # container name
        image: nginx:latest
        imagePullPolicy: IfNotPresent # image pull policy
        # Liveness probe
        livenessProbe:
          exec:
            command:
            - cat
            - /usr/share/nginx/html/demo.html
        # Lifecycle hook
        lifecycle:
          postStart: # runs immediately after the container is created
            exec:
              command:
              - touch # the command must exist inside the container image
              - /usr/share/nginx/html/demo.html
  replicas: 2
---
kind: Service
apiVersion: v1
metadata:
  name: test-deployment-svc
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30001
  selector: # like matchLabels: Pods must carry all of these labels, extra labels are fine
    app: nginx
    dev: pro
```

执行

# 创建Pods

[root@kubernetes-master-01 ~]# kubectl apply -f hook_poststart_deployment.yaml

deployment.apps/test-deployment created

service/test-deployment-svc created

# 查看pods

[root@kubernetes-master-01 ~]# kubectl get pods -l dev=pro

NAME READY STATUS RESTARTS AGE

test-deployment-68cffdb664-258f6 1/1 Running 0 8s

test-deployment-68cffdb664-2pj89 1/1 Running 0 8s

# 查看详细信息

[root@kubernetes-master-01 ~]# kubectl describe pods test-deployment-68cffdb664-2pj89

Name: test-deployment-68cffdb664-2pj89

Namespace: default

Priority: 0

Node: kubernetes-node-02/172.16.0.54

Start Time: Mon, 15 Mar 2021 08:57:41 +0800

Labels: app=nginx

dev=pro

name=randysun

pod-template-hash=68cffdb664

Annotations: <none>

Status: Running

IP: 10.241.216.2

IPs:

IP: 10.241.216.2

Controlled By: ReplicaSet/test-deployment-68cffdb664

Containers:

nginx:

Container ID: docker://5dbbb508735a9535f209c524935d50b2a5d490755e0a5cf3ebfb473ef93b67cc

Image: nginx:latest

Image ID: docker-pullable://nginx@sha256:10b8cc432d56da8b61b070f4c7d2543a9ed17c2b23010b43af434fd40e2ca4aa

Port: <none>

Host Port: <none>

State: Running

Started: Mon, 15 Mar 2021 08:57:42 +0800

Ready: True

Restart Count: 0

Liveness: exec [cat /usr/share/nginx/html/demo.html] delay=0s timeout=1s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-xt6cs (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-xt6cs:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-xt6cs

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 360s

node.kubernetes.io/unreachable:NoExecute for 360s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 78s default-scheduler Successfully assigned default/test-deployment-68cffdb664-2pj89 to kubernetes-node-02

Normal Pulled 78s kubelet Container image "nginx:latest" already present on machine

Normal Created 78s kubelet Created container nginx

Normal Started 78s kubelet Started container nginx

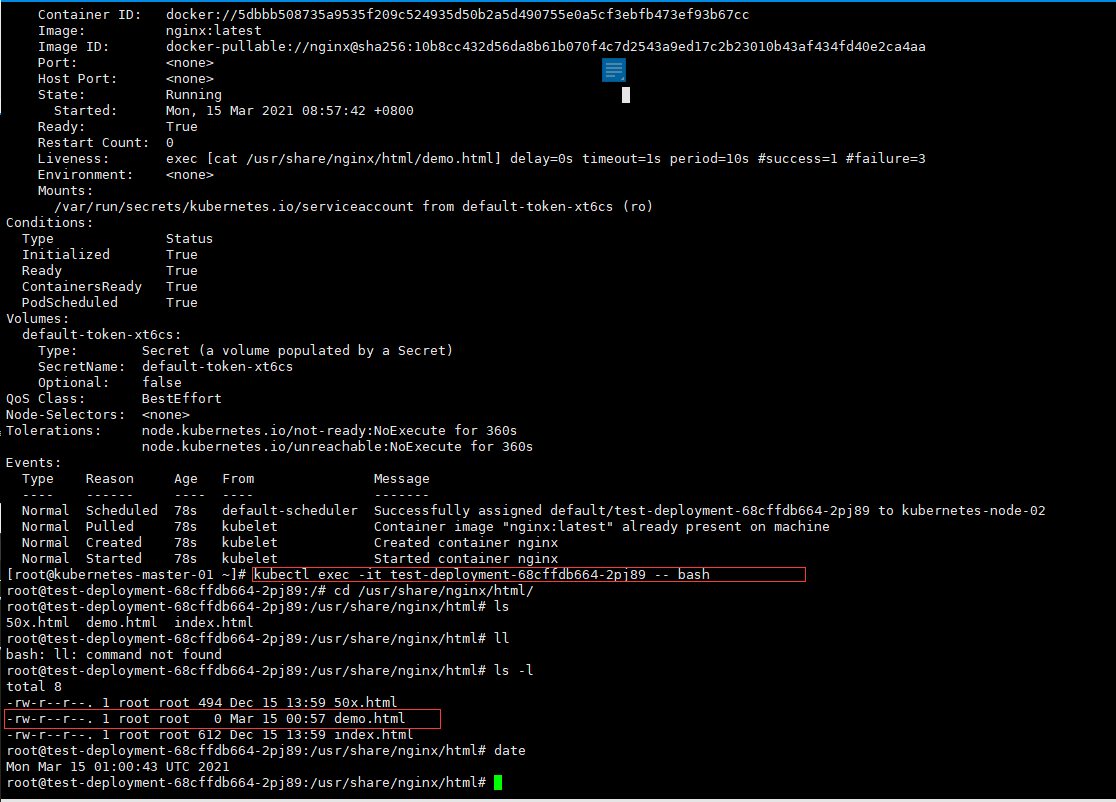

# 进入容器中查看创建的demo.html文件

[root@kubernetes-master-01 ~]# kubectl exec -it test-deployment-68cffdb664-2pj89 -- bash

root@test-deployment-68cffdb664-2pj89:/# cd /usr/share/nginx/html/

root@test-deployment-68cffdb664-2pj89:/usr/share/nginx/html# ls

50x.html demo.html index.html

root@test-deployment-68cffdb664-2pj89:/usr/share/nginx/html# ls -l

total 8

-rw-r--r--. 1 root root 494 Dec 15 13:59 50x.html

-rw-r--r--. 1 root root 0 Mar 15 00:57 demo.html

-rw-r--r--. 1 root root 612 Dec 15 13:59 index.html

root@test-deployment-68cffdb664-2pj89:/usr/share/nginx/html# date

Mon Mar 15 01:00:43 UTC 2021

root@test-deployment-68cffdb664-2pj89:/usr/share/nginx/html#

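II. K8S Monitoring Component: metrics-server

1. Create a User

metrics-server needs to read data from Kubernetes, so it needs a user with the required permissions. The command below binds the cluster-admin ClusterRole to the anonymous user system:anonymous. Be aware that this grants full cluster access to every unauthenticated request, so it is only acceptable on a test cluster.

```
[root@kubernetes-master-01 ~]# kubectl create clusterrolebinding system:anonymous --clusterrole=cluster-admin --user=system:anonymous
```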

2. Create the Configuration File

```yaml
# K8S monitoring component: metrics-server
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:aggregated-metrics-reader
  labels:
    rbac.authorization.k8s.io/aggregate-to-view: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
rules:
- apiGroups: ["metrics.k8s.io"]
  resources: ["pods", "nodes"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  groupPriorityMinimum: 100
  service:
    name: metrics-server
    namespace: kube-system
  versionPriority: 100
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      - name: ca-ssl
        hostPath:
          path: /etc/kubernetes/ssl
      containers:
      - name: metrics-server
        image: registry.cn-hangzhou.aliyuncs.com/k8sos/metrics-server:v0.4.1
        imagePullPolicy: IfNotPresent
        args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --metric-resolution=30s
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --requestheader-username-headers=X-Remote-User
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
        ports:
        - name: main-port
          containerPort: 4443
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
        - name: ca-ssl
          mountPath: /etc/kubernetes/ssl
      nodeSelector:
        kubernetes.io/os: linux
---
apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    kubernetes.io/name: "Metrics-server"
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: main-port
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
```

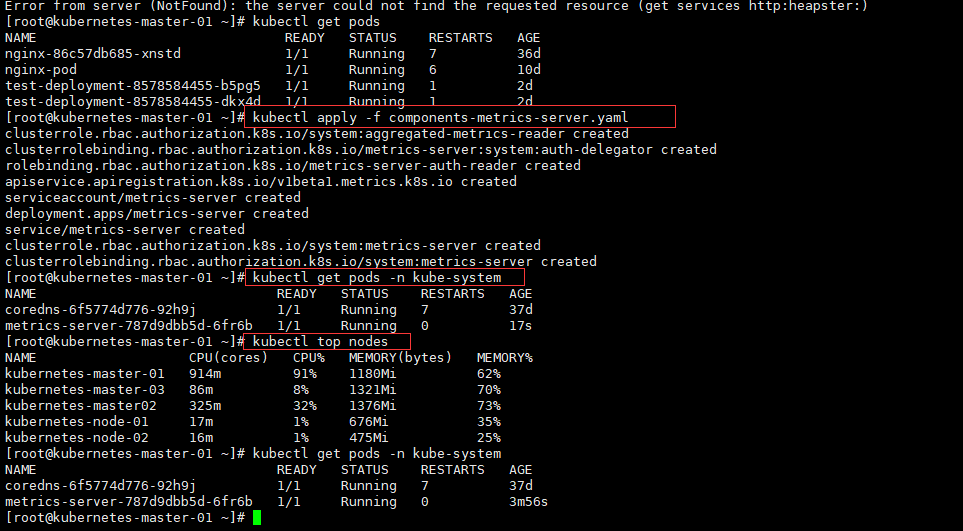

2.1. Save the configuration above as components-metrics-server.yaml and create the corresponding resources:

```bash
kubectl apply -f components-metrics-server.yaml
kubectl get pods -n kube-system
```

```
[root@kubernetes-master-01 ~]# kubectl apply -f components-metrics-server.yaml
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
serviceaccount/metrics-server created
deployment.apps/metrics-server created
service/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
[root@kubernetes-master-01 ~]# kubectl get pods -n kube-system
NAME READY STATUS RESTARTS AGE
coredns-6f5774d776-92h9j 1/1 Running 7 37d
metrics-server-787d9dbb5d-6fr6b 1/1 Running 0 17s
[root@kubernetes-master-01 ~]# kubectl top nodes
NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
kubernetes-master-01 914m 91% 1180Mi 62%
kubernetes-master-03 86m 8% 1321Mi 70%
kubernetes-master02 325m 32% 1376Mi 73%
kubernetes-node-01 17m 1% 676Mi 35%
kubernetes-node-02 16m 1% 475Mi 25%
```

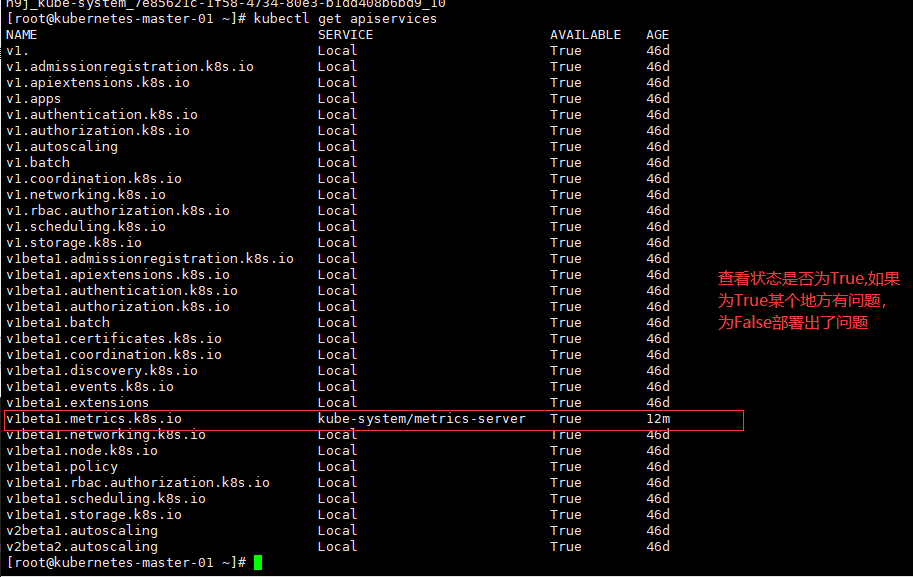

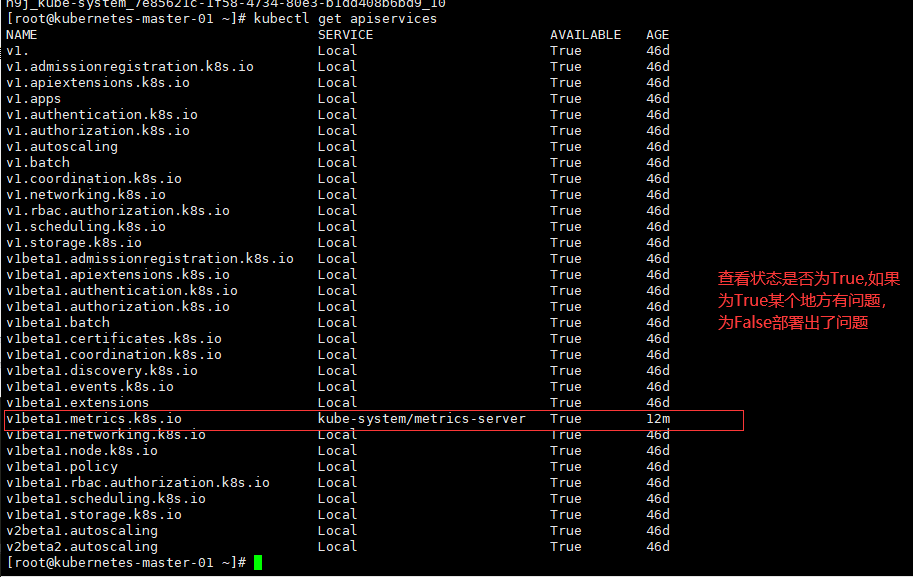

If something goes wrong (for example, `kubectl top` returns an error), check that the v1beta1.metrics.k8s.io APIService reports AVAILABLE as True:

```bash
kubectl get apiservices
```

```
[root@kubernetes-master-01 ~]# kubectl get apiservices
NAME SERVICE AVAILABLE AGE
v1. Local True 46d
v1.admissionregistration.k8s.io Local True 46d
v1.apiextensions.k8s.io Local True 46d
v1.apps Local True 46d
v1.authentication.k8s.io Local True 46d
v1.authorization.k8s.io Local True 46d
v1.autoscaling Local True 46d
v1.batch Local True 46d
v1.coordination.k8s.io Local True 46d
v1.networking.k8s.io Local True 46d
v1.rbac.authorization.k8s.io Local True 46d
v1.scheduling.k8s.io Local True 46d
v1.storage.k8s.io Local True 46d
v1beta1.admissionregistration.k8s.io Local True 46d
v1beta1.apiextensions.k8s.io Local True 46d
v1beta1.authentication.k8s.io Local True 46d
v1beta1.authorization.k8s.io Local True 46d
v1beta1.batch Local True 46d
v1beta1.certificates.k8s.io Local True 46d
v1beta1.coordination.k8s.io Local True 46d
v1beta1.discovery.k8s.io Local True 46d
v1beta1.events.k8s.io Local True 46d
v1beta1.extensions Local True 46d
v1beta1.metrics.k8s.io kube-system/metrics-server True 12m
v1beta1.networking.k8s.io Local True 46d
v1beta1.node.k8s.io Local True 46d
v1beta1.policy Local True 46d
v1beta1.rbac.authorization.k8s.io Local True 46d
v1beta1.scheduling.k8s.io Local True 46d
v1beta1.storage.k8s.io Local True 46d
v2beta1.autoscaling Local True 46d
v2beta2.autoscaling Local True 46d
```

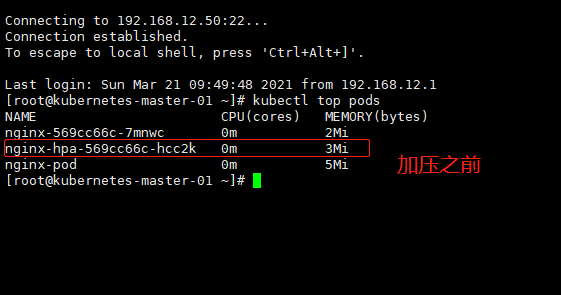

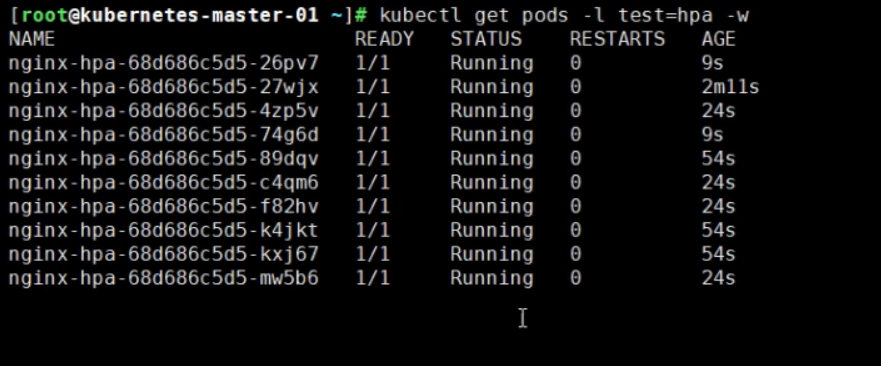

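III. HPA Autoscaling

In production, unexpected things always happen. For example, traffic to the company website may suddenly spike until the existing Pods can no longer handle all requests, and operators cannot watch the services around the clock. In this situation you can configure an HPA (HorizontalPodAutoscaler), which automatically increases the number of Pod replicas under high load to spread the concurrent traffic, and automatically reduces the number of Pods once traffic returns to normal. HPA scales Pods based on CPU and memory utilization, and utilization is measured against each container's requests, so requests must be defined to use HPA.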
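The replica calculation behind this behaviour, following the algorithm in the Kubernetes HPA documentation, can be written as below. This is a worked sketch: the three Pods using 500m, 300m and 600m CPU against requests of 1000m each are illustrative numbers.

$$
u = \frac{\sum_{i=1}^{n} \mathrm{usage}_i}{\sum_{i=1}^{n} \mathrm{request}_i},
\qquad
\mathrm{desiredReplicas} = \left\lceil \mathrm{currentReplicas} \times \frac{u}{u_{\mathrm{target}}} \right\rceil
$$

$$
u = \frac{500 + 300 + 600}{3 \times 1000} = \frac{1400}{3000} \approx 46.7\%
$$

$$
u_{\mathrm{target}} = 50\%:\ \left\lceil 3 \times \frac{1400/3000}{0.50} \right\rceil = \lceil 2.8 \rceil = 3,
\qquad
u_{\mathrm{target}} = 5\%:\ \left\lceil 3 \times \frac{1400/3000}{0.05} \right\rceil = \lceil 28 \rceil = 28 \rightarrow \min(28, \mathrm{maxReplicas})
$$

When all requests are equal, u is simply the mean of the per-Pod utilizations. In the 50% case the ratio 46.7/50 ≈ 0.93 falls within the controller's default tolerance of 0.1, so no scaling happens at all; in the 5% case the result is capped at maxReplicas. If a container in the Pod has no CPU request, utilization is undefined and the HPA takes no action for that metric.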

1.1. Create the HPA

```yaml
# HPA autoscaling demo
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nginx-hpa
  namespace: default
  labels:
    app: nginx
spec:
  selector:
    matchLabels:
      app: nginx
      test: hpa
  template:
    metadata:
      labels:
        app: nginx
        test: hpa
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 10m
            memory: 50Mi
          requests:
            cpu: 10m
            memory: 50Mi
---
kind: Service
apiVersion: v1
metadata:
  namespace: default
  name: hpa-svc
spec:
  selector:
    app: nginx
    test: hpa
  ports:
  - port: 80
    targetPort: 80
---
kind: HorizontalPodAutoscaler
apiVersion: autoscaling/v2beta1
metadata:
  name: docs
  namespace: default
spec:
  # Maximum and minimum number of Pods the HPA may run
  maxReplicas: 10
  minReplicas: 1
  # The object being scaled; the HPA adjusts its replica count dynamically
  scaleTargetRef:
    kind: Deployment
    name: nginx-hpa
    apiVersion: apps/v1
  # List of metrics to watch; several metric types can be used together
  metrics:
  - type: Resource
    # Resource (core) metrics: cpu or memory, measured against the
    # requests of the containers in the scaled Pods
    resource:
      name: cpu
      # CPU threshold, in percent
      # Formula: the average utilization (percentage) of the metric over all target Pods,
      # where utilization = actual usage / requests.
      # e.g. requests.cpu=1000m with 500m actually used gives utilization=50%
      # e.g. replicas=3, requests.cpu=1000m, usage pod1=500m, pod2=300m, pod3=600m:
      #   averageUtilization = (500/1000 + 300/1000 + 600/1000)/3 = (500 + 300 + 600)/(3*1000) ≈ 46.7%
      # A very low 5% target, which makes scale-out easy to trigger
      targetAverageUtilization: 5
```
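autoscaling/v2beta1 is no longer served from Kubernetes 1.25 on, while autoscaling/v2 has been stable since 1.23. A sketch of the same HPA for such clusters (assuming 1.23 or later); only the API version and the shape of the metric target change:

```yaml
kind: HorizontalPodAutoscaler
apiVersion: autoscaling/v2
metadata:
  name: docs
  namespace: default
spec:
  maxReplicas: 10
  minReplicas: 1
  scaleTargetRef:
    kind: Deployment
    name: nginx-hpa
    apiVersion: apps/v1
  metrics:
  - type: Resource
    resource:
      name: cpu
      # In v2 the threshold moves into a typed "target" object
      target:
        type: Utilization
        averageUtilization: 5
```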

Run it:

```
[root@kubernetes-master-01 ~]# vi hpa-metrics-server.yaml
[root@kubernetes-master-01 ~]# kubectl apply -f hpa-metrics-server.yaml
deployment.apps/nginx-hpa created
service/hpa-svc created
horizontalpodautoscaler.autoscaling/docs created
[root@kubernetes-master-01 ~]# kubectl get pods -l test=hpa
NAME READY STATUS RESTARTS AGE
nginx-hpa-569cc66c-hcc2k 1/1 Running 0 47s
[root@kubernetes-master-01 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
hpa-svc ClusterIP 10.96.208.122 <none> 80/TCP 2m15s
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 49d
nginx NodePort 10.96.106.13 <none> 80:26755/TCP 40d
```

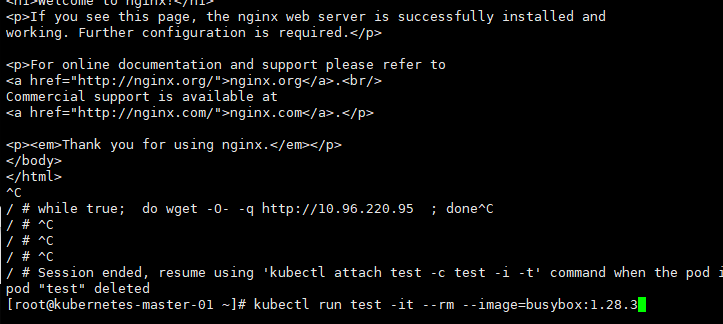

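Put load on hpa-svc. First check that the Service answers on its ClusterIP, then start a temporary busybox Pod and request the Service in an endless loop from its shell:

```bash
wget -O- -q http://10.96.208.122
kubectl run test -it --rm --image=busybox:1.28.3
while true; do wget -O- -q http://10.96.208.122 ; done
```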
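Instead of an interactive shell, the load can also come from a throwaway Pod that runs the same loop. A minimal sketch, assuming the default cluster DNS so that hpa-svc resolves by name from the default namespace; the Pod name load-generator is hypothetical:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: load-generator   # hypothetical name for this sketch
  namespace: default
spec:
  restartPolicy: Never
  containers:
  - name: busybox
    image: busybox:1.28.3
    # Request hpa-svc in an endless loop; responses are discarded
    command: ["/bin/sh", "-c", "while true; do wget -q -O- http://hpa-svc > /dev/null; done"]
```

While it runs, `kubectl get hpa docs -w` shows the utilization and replica count changing; deleting the Pod removes the load so the HPA can scale back in.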

1.2. Test

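IV. Nginx Ingress

Ingress provides an entry point to the Services in a Kubernetes cluster and can offer load balancing, SSL termination, and name-based virtual hosting. Ingress controllers commonly used in production include Traefik, Nginx, HAProxy, and Istio. Ingress, added in Kubernetes v1.1, provides HTTP and HTTPS routing from outside the cluster to Services inside it: traffic flows from the Internet to the Ingress, then to Services, and finally to Pods. The Ingress controller is usually deployed on all Node hosts. An Ingress can be configured to give Services externally reachable URLs, load-balance traffic, terminate SSL, and offer name-based virtual hosting. However, an Ingress does not expose arbitrary ports or protocols.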
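The URL-based routing mentioned above can be sketched as a "fanout" Ingress that sends two path prefixes of one host to different Services. This assumes the networking.k8s.io/v1 API (Kubernetes 1.19+); the Ingress name fanout-demo and the Services app-a-svc and app-b-svc are hypothetical:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: fanout-demo            # hypothetical name
  namespace: default
spec:
  rules:
  - host: www.test.com
    http:
      paths:
      # Requests under /a go to one Service ...
      - path: /a
        pathType: Prefix
        backend:
          service:
            name: app-a-svc    # hypothetical Service
            port:
              number: 80
      # ... and requests under /b go to another
      - path: /b
        pathType: Prefix
        backend:
          service:
            name: app-b-svc    # hypothetical Service
            port:
              number: 80
```

ingress-nginx forwards the original path (for example /a/index.html) to the backend unchanged unless a rewrite annotation is configured, so the backends must serve content under those prefixes.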

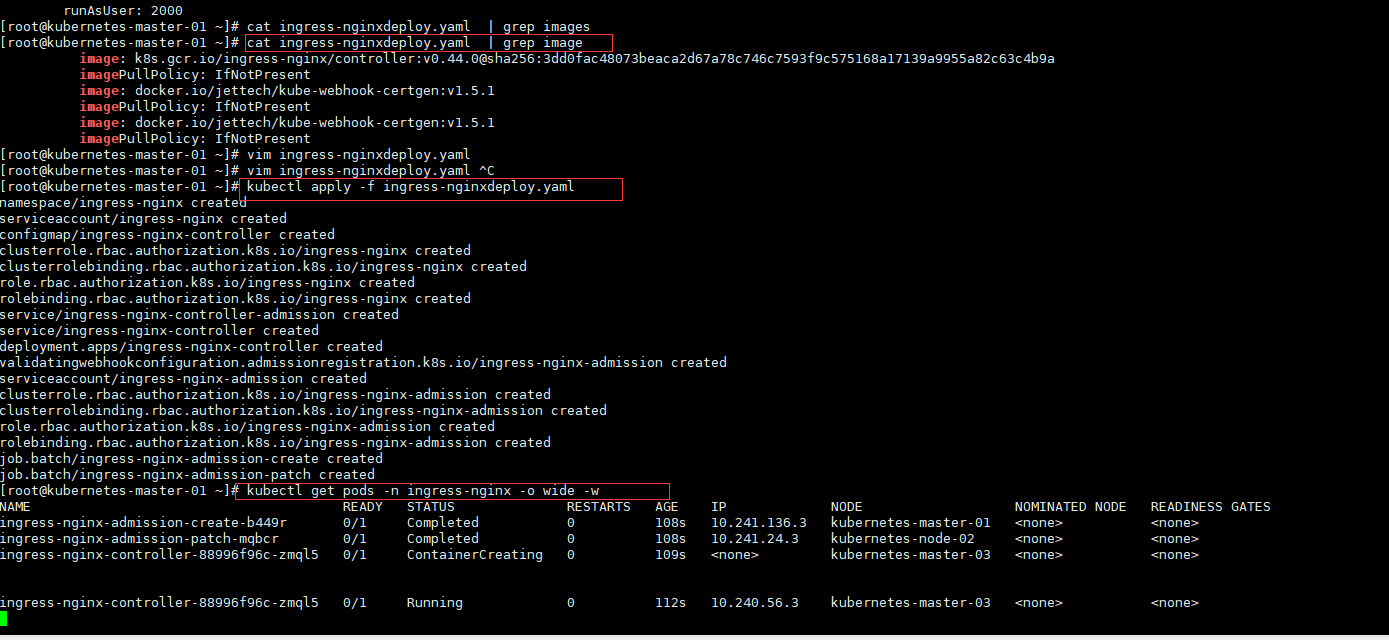

1.1. Install nginx ingress

Official sites:

https://github.com/kubernetes/ingress-nginx

https://kubernetes.github.io/ingress-nginx/

Deployment manifest (controller v0.44.0, bare-metal provider):

https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.44.0/deploy/static/provider/baremetal/deploy.yaml

```yaml
# ingress-nginxdeploy.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
---
# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader-nginx
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          image: k8s.gcr.io/ingress-nginx/controller:v0.44.0@sha256:3dd0fac48073beaca2d67a78c746c7593f9c575168a17139a9955a82c63c4b9a
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 5
          readinessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 3
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
---
# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1beta1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
      - v1beta1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1beta1/ingresses
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-3.23.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.44.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: docker.io/jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.23.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.44.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-3.23.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.44.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: docker.io/jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
```

```
# List the images the manifest depends on
[root@kubernetes-master-01 ~]# cat ingress-nginxdeploy.yaml | grep image
image: k8s.gcr.io/ingress-nginx/controller:v0.44.0@sha256:3dd0fac48073beaca2d67a78c746c7593f9c575168a17139a9955a82c63c4b9a
imagePullPolicy: IfNotPresent
image: docker.io/jettech/kube-webhook-certgen:v1.5.1
imagePullPolicy: IfNotPresent
image: docker.io/jettech/kube-webhook-certgen:v1.5.1
imagePullPolicy: IfNotPresent
```

Mirror image build (Dockerfile and Alibaba Cloud Container Registry build page):

https://code.aliyun.com/RandySun121/k8s/blob/master/ingress-controller/Dockerfile

https://cr.console.aliyun.com/repository/cn-hangzhou/k8s121/ingress-nginx/build

```
# Create the resources
[root@kubernetes-master-01 ~]# kubectl apply -f ingress-nginxdeploy.yaml
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
configmap/ingress-nginx-controller created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
service/ingress-nginx-controller-admission created
service/ingress-nginx-controller created
deployment.apps/ingress-nginx-controller created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created
serviceaccount/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
# Check whether the installation succeeded
[root@kubernetes-master-01 ~]# kubectl get pods -n ingress-nginx
NAME READY STATUS RESTARTS AGE
ingress-nginx-admission-create-b449r 0/1 Completed 0 3m34s
ingress-nginx-admission-patch-mqbcr 0/1 Completed 0 3m34s
ingress-nginx-controller-88996f96c-zmql5 1/1 Running 0 3m35s
```

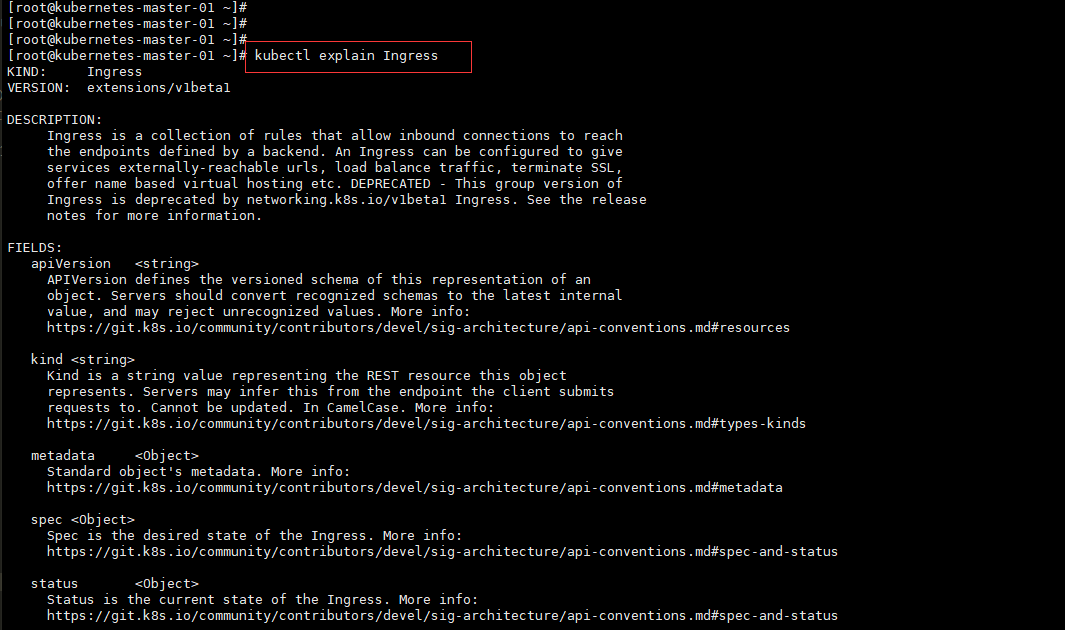

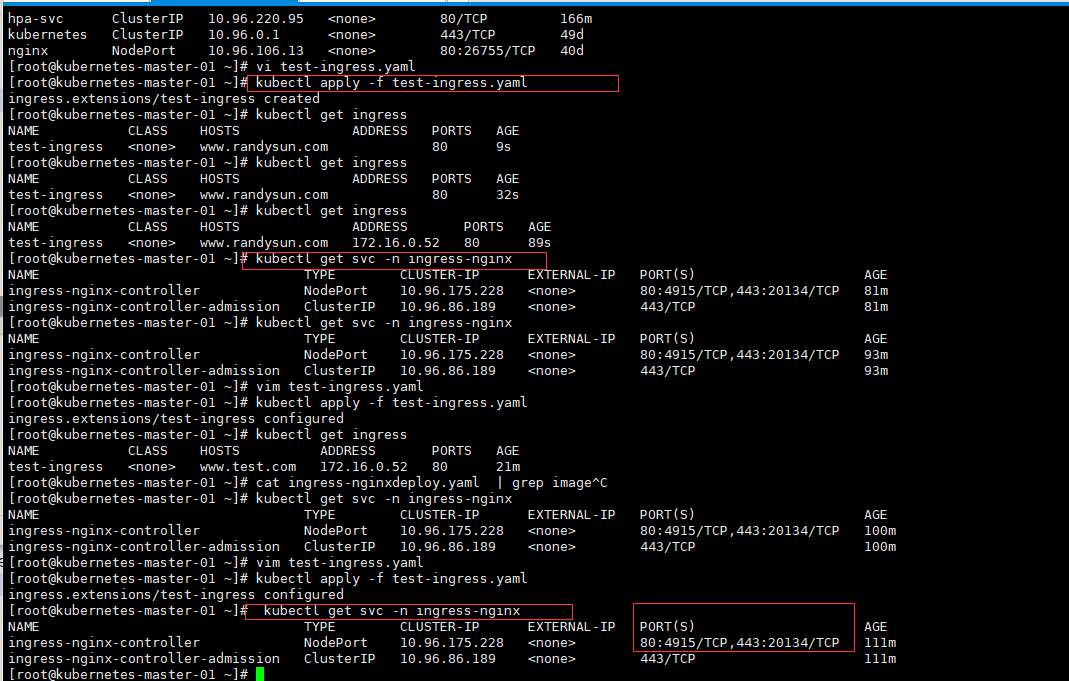

Test

Check which API version the cluster serves for Ingress:

```
[root@kubernetes-master-01 ~]# kubectl explain Ingress
KIND: Ingress
VERSION: extensions/v1beta1
```

```yaml
# test-ingress.yaml
kind: Ingress
# apiVersion as reported by kubectl explain Ingress
apiVersion: extensions/v1beta1
metadata:
  namespace: default
  name: test-ingress
spec:
  rules:
  - host: www.test.com
    http:
      paths:
      - backend:
          serviceName: hpa-svc
          servicePort: 80
        path: /
```

[root@kubernetes-master-01 ~]# vi test-ingress.yaml

# 部署

[root@kubernetes-master-01 ~]# kubectl apply -f test-ingress.yaml

ingress.extensions/test-ingress created

# 查看部署结果

[root@kubernetes-master-01 ~]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

test-ingress <none> www.randysun.com 80 9s

[root@kubernetes-master-01 ~]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

test-ingress <none> www.randysun.com 80 32s

[root@kubernetes-master-01 ~]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

test-ingress <none> www.randysun.com 172.16.0.52 80 89s

# Check the access address (the ingress controller Service and its NodePorts)

[root@kubernetes-master-01 ~]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.96.175.228 <none> 80:4915/TCP,443:20134/TCP 81m

ingress-nginx-controller-admission ClusterIP 10.96.86.189 <none> 443/TCP 81m

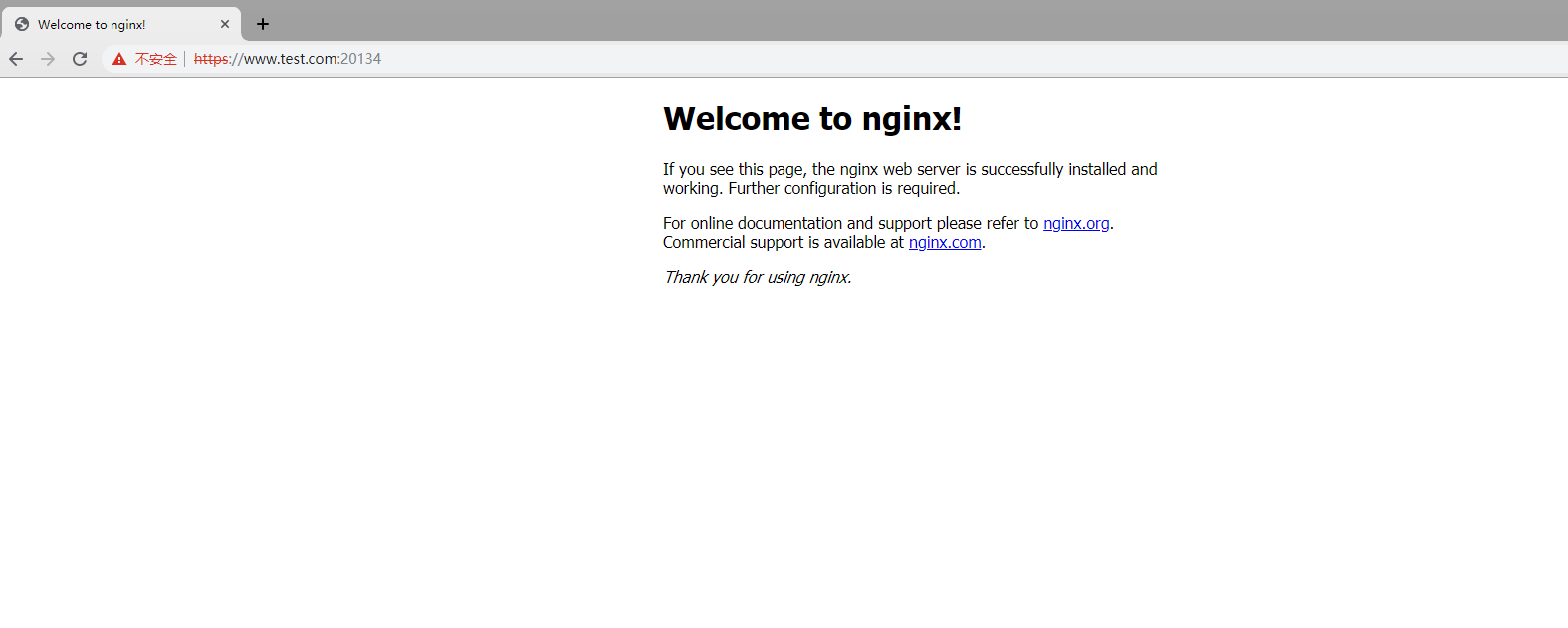

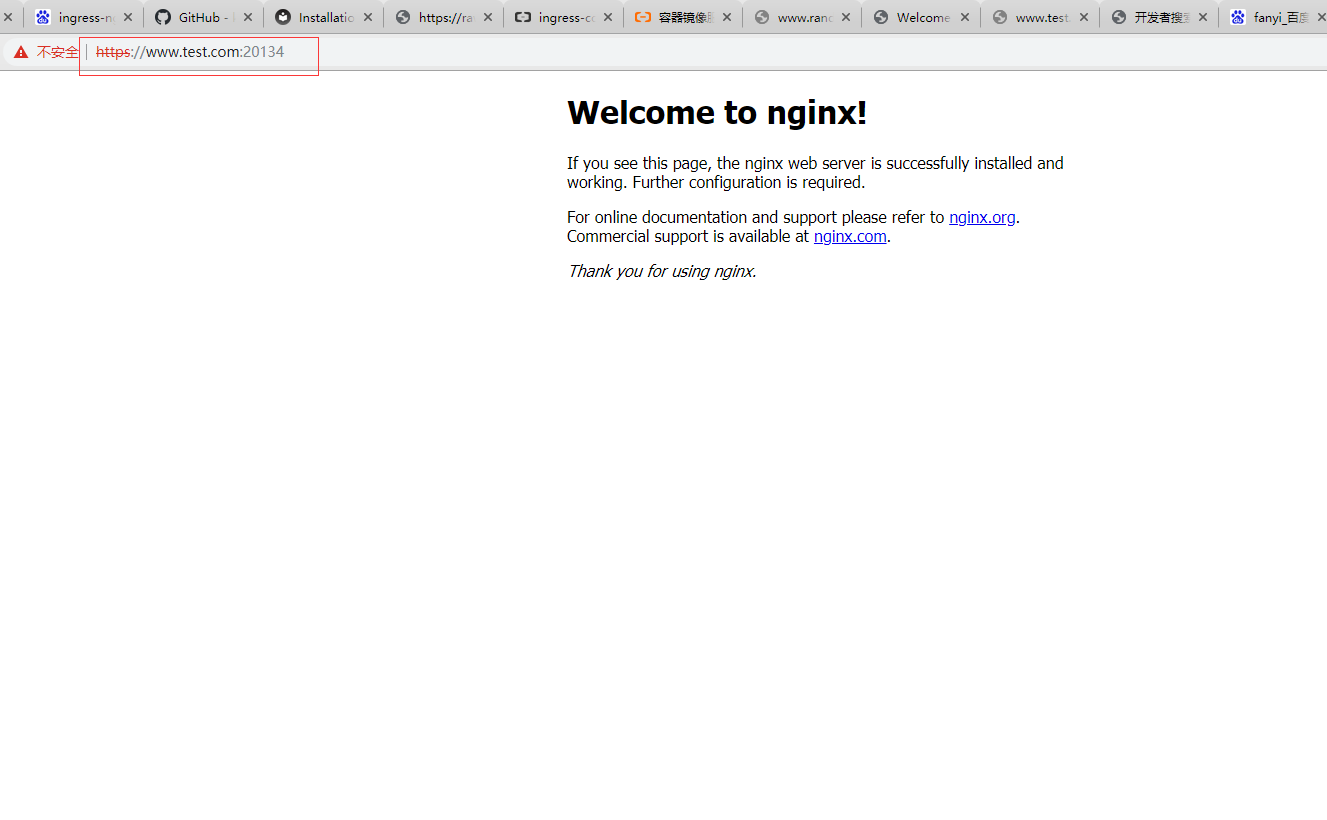
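The manifest above uses `extensions/v1beta1`, because that is what `kubectl explain Ingress` reported on this cluster. That API group was removed in Kubernetes 1.22. On newer clusters the same rule has to be written against `networking.k8s.io/v1`, which has been available since 1.19. The manifest below is a sketch of the equivalent and has not been tested against this cluster. It assumes the ingress-nginx installation created an IngressClass named `nginx`; you can check with `kubectl get ingressclass`.

```yaml
# Sketch: test-ingress rewritten for networking.k8s.io/v1 (Kubernetes 1.19+).
# Assumption: an IngressClass named "nginx" exists (kubectl get ingressclass).
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: default
  name: test-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: www.test.com
    http:
      paths:
      - path: /
        pathType: Prefix          # mandatory in networking.k8s.io/v1
        backend:
          service:
            name: hpa-svc         # was serviceName
            port:
              number: 80          # was servicePort
```

In `v1`, `pathType` is required. The backend also moves from `serviceName`/`servicePort` to `service.name`/`service.port.number`.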

1.2、 TLS-based Ingress

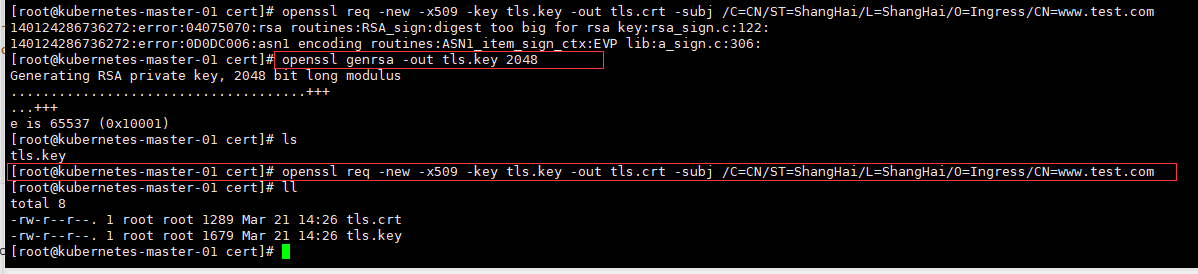

1.2.1、 Create the certificate

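Create the certificate. A self-signed certificate is enough for testing; in production, use the certificate your company has purchased.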

openssl genrsa -out tls.key 2048

openssl req -new -x509 -key tls.key -out tls.crt -subj /C=CN/ST=ShangHai/L=ShangHai/O=Ingress/CN=www.test.com

[root@kubernetes-master-01 ~]# mkdir cert

[root@kubernetes-master-01 ~]# cd cert/

[root@kubernetes-master-01 cert]# openssl genrsa -out tls.key 2048

Generating RSA private key, 2048 bit long modulus

.....................................+++

...+++

e is 65537 (0x10001)

[root@kubernetes-master-01 cert]# ls

tls.key

[root@kubernetes-master-01 cert]# openssl req -new -x509 -key tls.key -out tls.crt -subj /C=CN/ST=ShangHai/L=ShangHai/O=Ingress/CN=www.test.com

[root@kubernetes-master-01 cert]# ll

total 8

-rw-r--r--. 1 root root 1289 Mar 21 14:26 tls.crt

-rw-r--r--. 1 root root 1679 Mar 21 14:26 tls.key

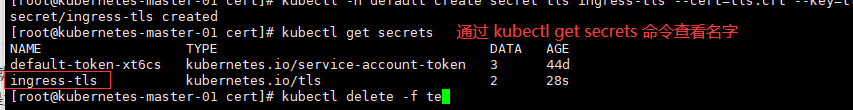

[root@kubernetes-master-01 cert]# kubectl -n default create secret tls ingress-tls --cert=tls.crt --key=tls.key

secret/ingress-tls created

[root@kubernetes-master-01 cert]# kubectl get secrets

NAME TYPE DATA AGE

default-token-xt6cs kubernetes.io/service-account-token 3 44d

ingress-tls kubernetes.io/tls 2 28s
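`kubectl create secret tls` stores the two files in a Secret of type `kubernetes.io/tls` under the keys `tls.crt` and `tls.key`, which is why the `DATA` column shows 2. Below is a declarative sketch of roughly the same object. The `<...>` values are placeholders for the base64-encoded file contents. You can compare the sketch with the real object using `kubectl get secret ingress-tls -o yaml`.

```yaml
# Sketch of approximately what `kubectl create secret tls ingress-tls
# --cert=tls.crt --key=tls.key` creates. <...> are placeholders, not real data.
apiVersion: v1
kind: Secret
metadata:
  name: ingress-tls
  namespace: default
type: kubernetes.io/tls
data:
  tls.crt: <base64 of tls.crt>
  tls.key: <base64 of tls.key>
```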

1.2.2、 Define the Ingress

---
kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: ingress-ingress
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  tls:
  - secretName: ingress-tls # check the name with: kubectl get secrets
  rules:
  - host: www.test.com
    http:
      paths:
      - backend:
          serviceName: hpa-svc
          servicePort: 80

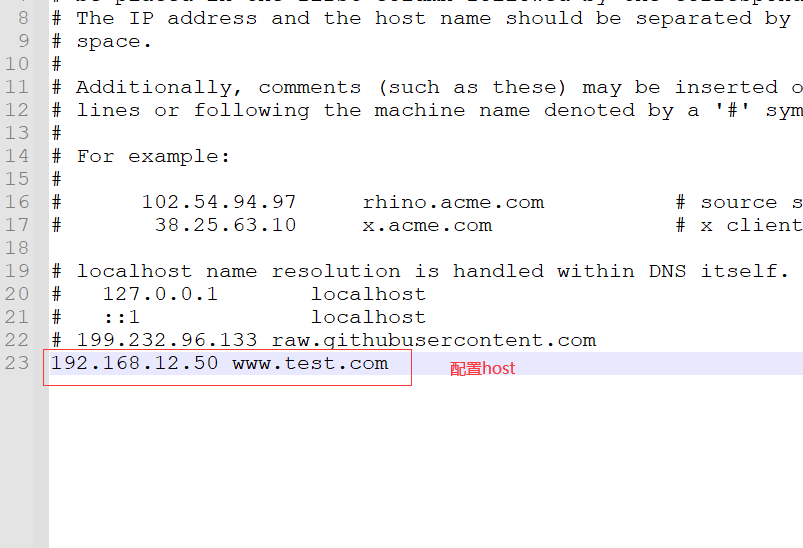

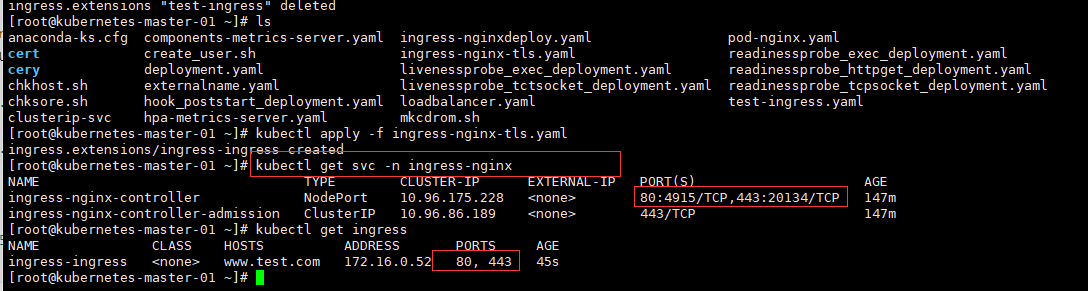
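On clusters that no longer serve `extensions/v1beta1` (Kubernetes 1.22 and later), the TLS Ingress can be sketched with `networking.k8s.io/v1` as follows. The sketch makes two assumptions that go beyond the original. First, an IngressClass named `nginx` exists. Second, `tls.hosts` lists `www.test.com` explicitly, which ties the certificate to the same host as the rule; the original manifest omits `hosts`.

```yaml
# Sketch only: TLS Ingress for networking.k8s.io/v1.
# Assumptions: IngressClass "nginx" exists; tls.hosts added explicitly.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-ingress
  namespace: default
spec:
  ingressClassName: nginx       # replaces the kubernetes.io/ingress.class annotation
  tls:
  - hosts:
    - www.test.com              # should match the host in rules and the cert's CN
    secretName: ingress-tls     # the Secret created in 1.2.1
  rules:
  - host: www.test.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: hpa-svc
            port:
              number: 80
```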

1.2.2.1、 Deploy

[root@kubernetes-master-01 ~]# kubectl apply -f ingress-nginx-tls.yaml

ingress.extensions/ingress-ingress created

[root@kubernetes-master-01 ~]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.96.175.228 <none> 80:4915/TCP,443:20134/TCP 147m

ingress-nginx-controller-admission ClusterIP 10.96.86.189 <none> 443/TCP 147m

[root@kubernetes-master-01 ~]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-ingress <none> www.test.com 172.16.0.52 80, 443 45s

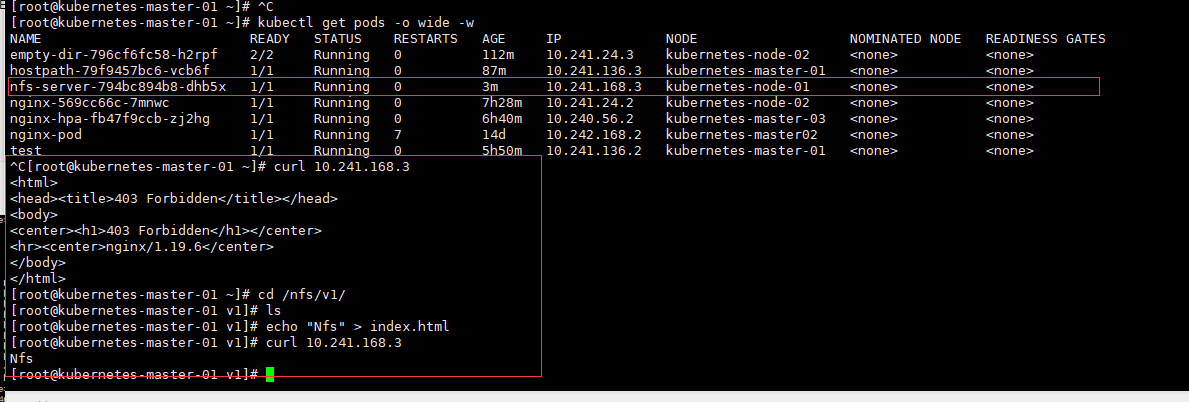

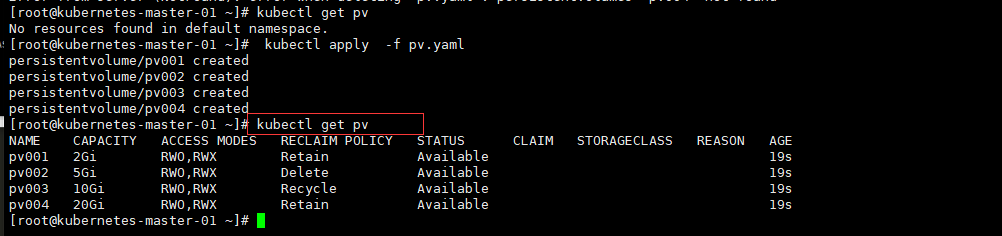

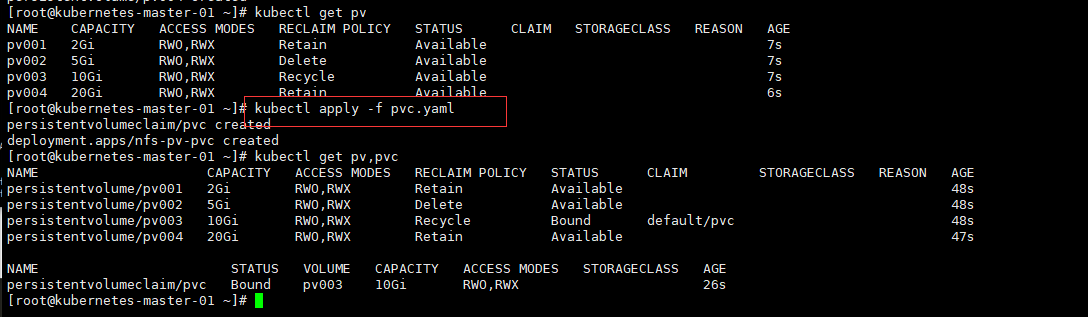

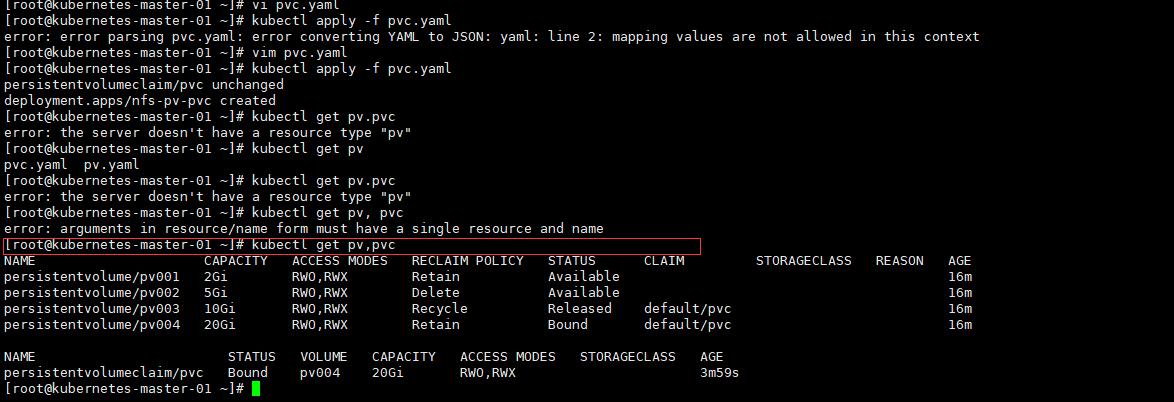

五、 Data Persistence

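As we know, a Pod is made up of containers, and once a container crashes or stops, the data inside it is lost. This means storage has to be planned for whenever we run a Kubernetes cluster, and volumes exist precisely so that Pods can keep their data. There are many volume types. The four we commonly use are emptyDir, hostPath, NFS, and cloud or distributed storage (Ceph, GlusterFS, ...).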

1、 emptyDir

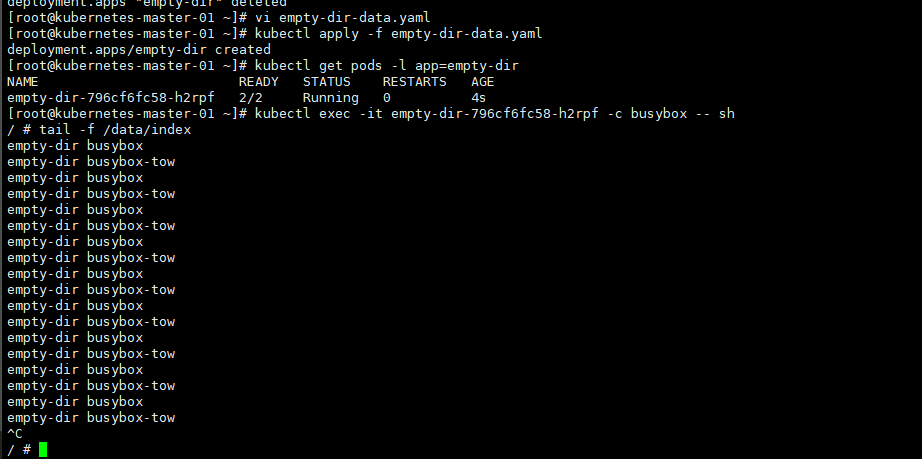

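An emptyDir volume is created when the Pod is assigned to a node. Kubernetes automatically allocates a directory for it on that node, so there is no need to specify a corresponding directory on the host. The directory starts out empty. When the Pod is removed from the node, the data in the emptyDir is permanently deleted. A container crash alone does not remove the Pod, so the data does survive container restarts. emptyDir volumes are mainly used for scratch directories whose contents an application does not need to keep permanently.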
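The Deployment below uses the default emptyDir, which lives on the node's disk. emptyDir also accepts `medium: Memory`, which mounts a RAM-backed tmpfs directory, and `sizeLimit`, which caps its size. Here is a minimal Pod sketch. The names `emptydir-memory-demo` and `cache` are hypothetical and not part of this tutorial.

```yaml
# Hypothetical example (names are illustrative): a memory-backed emptyDir.
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-memory-demo
spec:
  containers:
  - name: busybox
    image: busybox:1.28.3
    command: ['/bin/sh', '-c', 'sleep 3600']
    volumeMounts:
    - mountPath: /cache
      name: cache
  volumes:
  - name: cache
    emptyDir:
      medium: Memory   # tmpfs; files written here count against the container's memory limit
      sizeLimit: 64Mi  # upper bound on the volume's size
```

The data is still discarded when the Pod leaves the node. The only difference is that it is held in RAM instead of on the node's disk.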

kind: Deployment
apiVersion: apps/v1
metadata:
  namespace: default
  name: empty-dir
spec:
  selector:
    matchLabels:
      app: empty-dir
  template:
    metadata:
      labels:
        app: empty-dir
    spec:
      containers:
      # first container
      - name: busybox
        image: busybox:1.28.3
        imagePullPolicy: IfNotPresent
        command: ['/bin/sh', '-c', "while true; do echo 'empty-dir busybox' >> /data/index; sleep 1; done"]
        # mount the volume
        volumeMounts:
        - mountPath: /data # path inside the container where the volume is mounted
          name: empty-dir-data # name of the volume
      # second container