Background Information

It is reported that starting from weeks ago, a background job ZABC which is used for IDoc batch processing could not finish successfully.

This background job is designed to process the uploaded IDocs from Chanel partners and post them for processing. After it finished, the order information from these Chanel partners will be available in CRM system for further follow-up processing. 7 other background jobs follow this job to fetch information from different perspectives. If this job could not finish successfully, the business impact on the production environment is huge.

Analysis

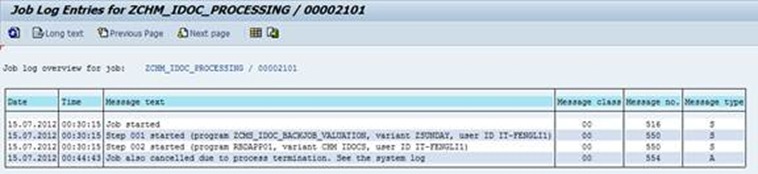

Checking in SM37, batch job log could be easily checked, and the following information guided us to the system log for further analysis.

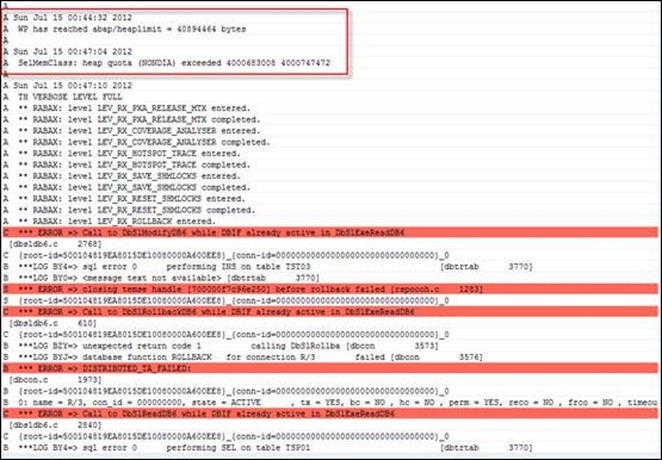

And the system log further indicates that the background work process was forced to stopped and restarted during the job failing time phase.

We could see that database error occurred when updating/selecting on table TST01 and TST03. This indicates the inconsistencies on Spool logs or background job logs. We have communicated with the basis team about the steps to eliminate the inconsistencies on these logs. However, this could not solve the issue completely. Check recommendations for more details.

As indicated in the system log, we realized more information might exist in the work process’s trace file. We found that before each time when DB errors occurred, there is always an indication “WP has reached abap/heaplimit = 40894464 bytes”.

The heap limit to each work process (abap/heaplimit) is 4 GB. The limit was reached because of either insufficient SQL selections or improper ABAP code like too many recursive calls or endless loops. To identify the root cause, we need further checks on the runtime status when job failed. And this needs to be checked from logs that contain the memory snapshots and the work process information (/SDF/MON data, see details below).

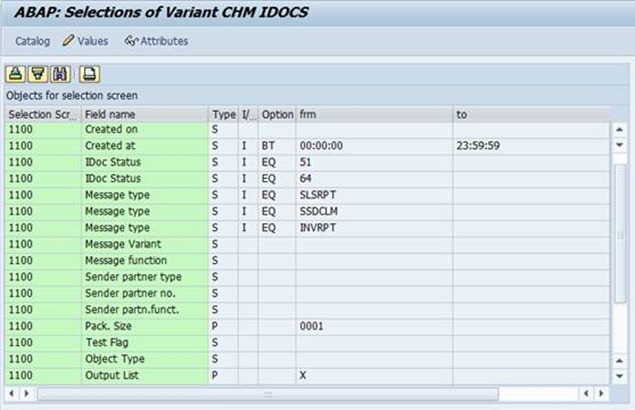

At the same time, we tried to schedule the job with different variants which narrow down the time interval of the IDocs. All the previous failed jobs used the following variant.

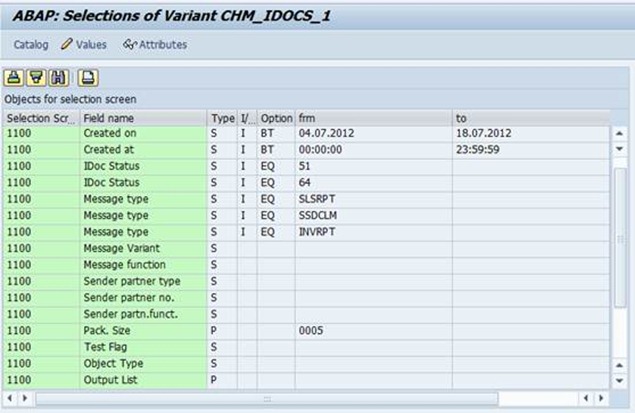

But the new variant added the “Created on” information which dated back two weeks since the current date information.

After the variant changed, all the jobs could successfully finish.

To determine the root cause of the high heap memory utilization, we further checked the /SDF/MON during the whole day time on July 15. And interestingly we found that heap memory peak time could be identified. (00:30:00 July 15 – 00:47:00 July 15)

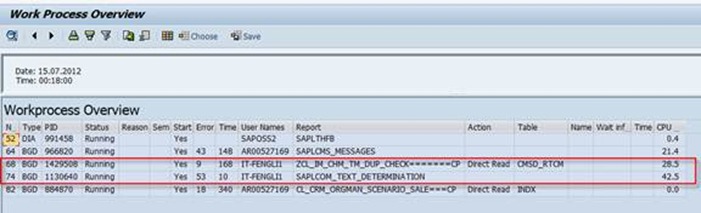

And if we check the work process snapshot at the same time, the background work process is always running on a data selection of table CMSD_RTCM within report ZCL_IM_CHM_TM_DUP_CHECK.

The source code shows that a “Select … For all entries…” was performed based on the content of internal table lt_to_com.

IF lt_to_com IS NOT INITIAL.

SELECT * FROM cmsd_rtcm

INTO CORRESPONDING FIELDS OF TABLE gt_cmsd_rtcm

FOR ALL ENTRIES IN lt_to_com

WHERE xmno_docno_i = lt_to_com-xmno_docno_i

AND tk_partner_i = lt_to_com-tk_partner_i

AND idoc_docno_i NE lt_to_com-idoc_docno_i.

ELSE.

ENDIF.

CMSD_RTCM is the index table of RTCM which stores basically the information about the Sale-in/Sale-through/Inventory of the resale items.

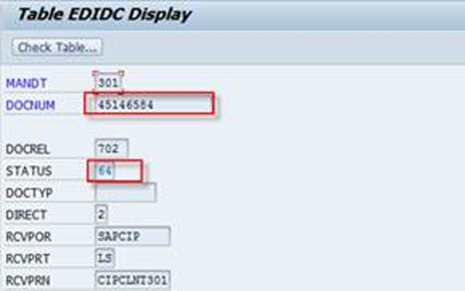

With the question about how the selection ate up all the heap memory, we selected out the IDocs two weeks older than current date from table EDIDC, and there are in total 213 old IDocs dating back to two or three years ago.

For 212 of the 213 entries, the status of the IDocs is always 51.

According to SAP Note 89471, all the IDocs with status ‘51’ are not supposed to be re-processed by program RBDAPP01 but by program RBDMANIN. Therefore all the other 212 old IDocs were actually not processed in previous cancelled jobs.

For the last record on 01.06.2012 with IDoc number 45146584, it has the status 64. Therefore we tried to debug into the work process during that IDoc was processed.

After when the background started, we managed to debug via SM66 and got the following information before the erroneous SQL statement was executed.

We could see that an internal table with 412 entries will be used as conditions to select data from table CMSD_RTCM. According to the /SDF/MON data around every job cancellation previously, we know that it is within this SQL statement, that the Heap Memory of the whole work process was occupied and finally exhausted. And by checking the passing table containing the processed IDoc information, we could confirm that this happened during the processing of IDoc 45146584.

Via checking the statement, we found it was trying to fetch much more data than really needed from the table CMSD_RTCM. The internal table gt_cmsd_rtcm which was supposed to be filled via this statement actually contains only 7 columns defined within this method.

TYPES: BEGIN OF itab,

itmid_i TYPE cmsd_rtcm-itmid_i, " record no

xn_type TYPE cmsd_rtcm-xn_type, " Transaction Type

tk_partner_i TYPE cmsd_rtcm-tk_partner_i, "channal partner

xmno_docno_i TYPE cmsd_rtcm-xmno_docno_i, "trasmission no

idoc_docno_i TYPE cmsd_rtcm-idoc_docno_i, "idoc number

docnum TYPE edidc-docnum, "idoc number

crdt_i TYPE cmsd_rtcm-crdt_i, "create date

END OF itab.

DATA: gt_cmsd_rtcm TYPE TABLE OF itab..

FIELD-SYMBOLS: <gv_cmsd_rtcm> TYPE itab.

DATA: gv_cmsd_rtcm TYPE itab..

However, the SQL statement was doing “SELECT * FROM cmsd_rtcm…” without any filtering on the chosen columns. In fact, the table CMSD_RTCM is a big table containing more than 130 different columns.

Therefore selecting everything from table CMSD_RTCM and filtering them into 7 columns would be a huge waste on resource and memory because the filtering would only be done within the local memory (not in the database).

And we could confirm that the root cause of the Job cancellation was finally found to be the improper SQL statement and the bad data (records on 01.06.2012) at the same time.

We performed multiple tests in the system with different variants and to better support our conclusion, please check following different variants and the corresponding job running status.

| Job Running Version | Date Selection | Pack. size | Output | Result |

| Original (2 months ago) | No restriction | 01 | X | Passed |

| Original | No restriction | 01 | X | Failed |

| Test #1 | Latest 14 days | 05 | Space | Passed |

| Test #2 | Latest 60 days | 01 | Space | Failed |

| Test #3 | Latest 14 days | 01 | Space | Passed |

The bad data IDoc was created at 01.06.2012, which was around 2 months ago. And since then the job kept failing. If we compare the test #1, #2 and test #3, we could confirm that the data from latest 60 days but not the latest 14 days is the only factor that influences the result. And the only data in such a time interval is the IDoc created on 01.06.2012.

I believe now we have already get the recommendations.

(The End)