介绍

介绍、算法和改进部分转自康行天下的博文

非极大值抑制(Non-Maximum Suppression,NMS),顾名思义就是抑制不是极大值的元素,可以理解为局部最大搜索。这个局部代表的是一个邻域,邻域有两个参数可变,一是邻域的维数,二是邻域的大小。这里不讨论通用的NMS算法(参考论文《Efficient Non-Maximum Suppression》对1维和2维数据的NMS实现),而是用于目标检测中提取分数最高的窗口的。例如在行人检测中,滑动窗口经提取特征,经分类器分类识别后,每个窗口都会得到一个分数。但是滑动窗口会导致很多窗口与其他窗口存在包含或者大部分交叉的情况。这时就需要用到NMS来选取那些邻域里分数最高(是行人的概率最大),并且抑制那些分数低的窗口。

NMS在计算机视觉领域有着非常重要的应用,如视频目标跟踪、数据挖掘、3D重建、目标识别以及纹理分析等。

算法

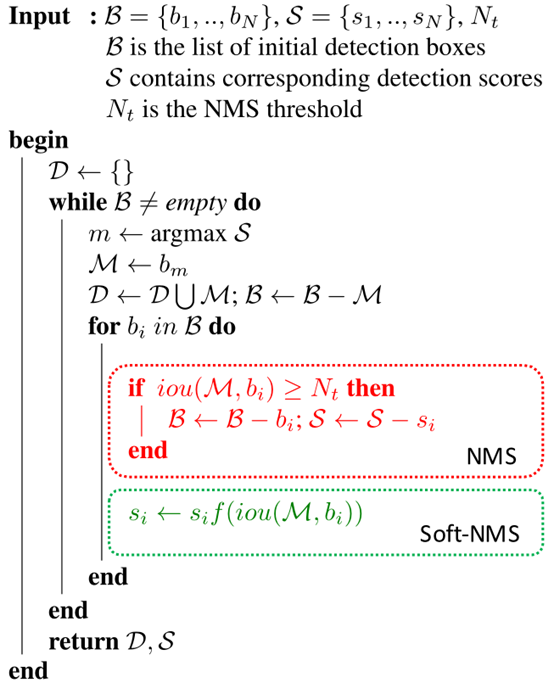

对于Bounding Box的列表B及其对应的置信度S,采用下面的计算方式.选择具有最大score的检测框M,将其从B集合中移除并加入到最终的检测结果D中.通常将B中剩余检测框中与M的IoU大于阈值Nt的框从B中移除.重复这个过程,直到B为空.

常用的重叠率(重叠区域面积比例IOU)阈值是

0.3 ~ 0.5.

其中用到排序,可以按照右下角的坐标排序或者面积排序,也可以是通过SVM等分类器得到的得分或概率,R-CNN中就是按得分进行的排序.非极大值抑制的方法是:先假设有6个矩形框,根据分类器的类别分类概率做排序,假设从小到大属于车辆的概率 分别为A、B、C、D、E、F。

(1)从最大概率矩形框F开始,分别判断A~E与F的重叠度IOU是否大于某个设定的阈值;

(2)假设B、D与F的重叠度超过阈值,那么就扔掉B、D;并标记第一个矩形框F,是我们保留下来的。

(3)从剩下的矩形框A、C、E中,选择概率最大的E,然后判断E与A、C的重叠度,重叠度大于一定的阈值,那么就扔掉;并标记E是我们保留下来的第二个矩形框。

就这样一直重复,找到所有被保留下来的矩形框。

实现

实现部分有一篇很好的教程——《Non-Maximum Suppression for Object Detection in Python》,介绍了Felzenszwalb的NMS代码和Malisiewicz的快速版代码。

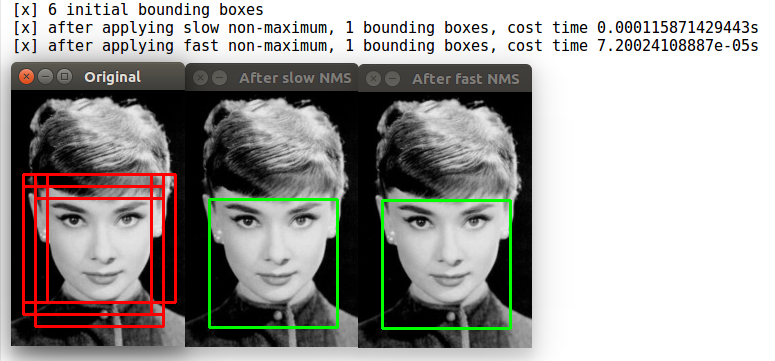

少量修改后,运行结果如下。

import numpy as np import cv2 from time import time # Felzenszwalb et al. def non_max_suppression_slow(boxes, overlapThresh): # if there are no boxes, return an empty list if len(boxes) == 0: return [] # initialize the list of picked indexes pick = [] # grab the coordinates of the bounding boxes x1 = boxes[:,0] y1 = boxes[:,1] x2 = boxes[:,2] y2 = boxes[:,3] # compute the area of the bounding boxes and sort the bounding # boxes by the bottom-right y-coordinate of the bounding box area = (x2 - x1 + 1) * (y2 - y1 + 1) idxs = np.argsort(y2) # keep looping while some indexes still remain in the indexes # list while len(idxs) > 0: # grab the last index in the indexes list, add the index # value to the list of picked indexes, then initialize # the suppression list (i.e. indexes that will be deleted) # using the last index last = len(idxs) - 1 i = idxs[last] pick.append(i) suppress = [last] # loop over all indexes in the indexes list for pos in xrange(0, last): # grab the current index j = idxs[pos] # find the largest (x, y) coordinates for the start of # the bounding box and the smallest (x, y) coordinates # for the end of the bounding box xx1 = max(x1[i], x1[j]) yy1 = max(y1[i], y1[j]) xx2 = min(x2[i], x2[j]) yy2 = min(y2[i], y2[j]) # compute the width and height of the bounding box w = max(0, xx2 - xx1 + 1) h = max(0, yy2 - yy1 + 1) # compute the ratio of overlap between the computed # bounding box and the bounding box in the area list overlap = float(w * h) / area[j] # if there is sufficient overlap, suppress the # current bounding box if overlap > overlapThresh: suppress.append(pos) # delete all indexes from the index list that are in the # suppression list idxs = np.delete(idxs, suppress) # return only the bounding boxes that were picked return boxes[pick] # Malisiewicz et al. def non_max_suppression_fast(boxes, overlapThresh): # if there are no boxes, return an empty list if len(boxes) == 0: return [] # if the bounding boxes integers, convert them to floats -- # this is important since we'll be doing a bunch of divisions if boxes.dtype.kind == "i": boxes = boxes.astype("float") # initialize the list of picked indexes pick = [] # grab the coordinates of the bounding boxes x1 = boxes[:, 0] y1 = boxes[:, 1] x2 = boxes[:, 2] y2 = boxes[:, 3] # compute the area of the bounding boxes and sort the bounding # boxes by the bottom-right y-coordinate of the bounding box area = (x2 - x1 + 1) * (y2 - y1 + 1) idxs = np.argsort(y2) # keep looping while some indexes still remain in the indexes # list while len(idxs) > 0: # grab the last index in the indexes list and add the # index value to the list of picked indexes last = len(idxs) - 1 i = idxs[last] pick.append(i) # find the largest (x, y) coordinates for the start of # the bounding box and the smallest (x, y) coordinates # for the end of the bounding box xx1 = np.maximum(x1[i], x1[idxs[:last]]) yy1 = np.maximum(y1[i], y1[idxs[:last]]) xx2 = np.minimum(x2[i], x2[idxs[:last]]) yy2 = np.minimum(y2[i], y2[idxs[:last]]) # compute the width and height of the bounding box w = np.maximum(0, xx2 - xx1 + 1) h = np.maximum(0, yy2 - yy1 + 1) # compute the ratio of overlap overlap = (w * h) / area[idxs[:last]] # delete all indexes from the index list that have idxs = np.delete(idxs, np.concatenate(([last], np.where(overlap > overlapThresh)[0]))) # return only the bounding boxes that were picked using the # integer data type return boxes[pick].astype("int") # construct a list containing the images that will be examined # along with their respective bounding boxes images = [ ("images/audrey.jpg", np.array([ (12, 84, 140, 212), (24, 84, 152, 212), (36, 84, 164, 212), (12, 96, 140, 224), (24, 96, 152, 224), (24, 108, 152, 236)])), ("images/bksomels.jpg", np.array([ (114, 60, 178, 124), (120, 60, 184, 124), (114, 66, 178, 130)])), ("images/gpripe.jpg", np.array([ (12, 30, 76, 94), (12, 36, 76, 100), (72, 36, 200, 164), (84, 48, 212, 176)]))] # loop over the images for (imagePath, boundingBoxes) in images: # load the image and clone it print "[x] %d initial bounding boxes" % (len(boundingBoxes)) image = cv2.imread(imagePath) orig = image.copy() orig1=image.copy() # loop over the bounding boxes for each image and draw them for (startX, startY, endX, endY) in boundingBoxes: cv2.rectangle(orig, (startX, startY), (endX, endY), (0, 0, 255), 2) # perform non-maximum suppression on the bounding boxes start_time=time() pick = non_max_suppression_slow(boundingBoxes, 0.3) print "[x] after applying slow non-maximum, %d bounding boxes, cost time %ss" % (len(pick),time()-start_time) # loop over the picked bounding boxes and draw them for (startX, startY, endX, endY) in pick: cv2.rectangle(image, (startX, startY), (endX, endY), (0, 255, 0), 2) # perform non-maximum suppression on the bounding boxes start_time = time() pick = non_max_suppression_fast(boundingBoxes, 0.3) print "[x] after applying fast non-maximum, %d bounding boxes, cost time %ss" % (len(pick),time()-start_time) # loop over the picked bounding boxes and draw them for (startX, startY, endX, endY) in pick: cv2.rectangle(orig1, (startX, startY), (endX, endY), (0, 255, 0), 2) # display the images cv2.imshow("Original", orig) cv2.imshow("After slow NMS", image) cv2.imshow("After fast NMS", orig1) cv2.waitKey(0)

改进

NMS loss

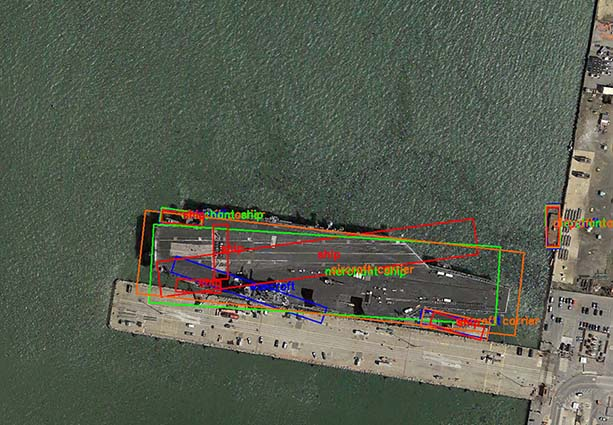

值的注意的是对多类别检测任务,如果对每类分别进行NMS,那么当检测结果中包含两个被分到不同类别的目标且其IoU较大时,会得到不可接受的结果。如下图所示:

一种改进方式便是在损失函数中加入一部分NMS损失。NMS损失可以定义为与分类损失相同:Lnms=Lcls(p,u)=−logpuLnms=Lcls(p,u)=−logpu,即真实列别u对应的log损失,p是C个类别的预测概率。实际相当于增加分类误差。

参考论文《Rotated Region Based CNN for Ship Detection》(IEEE2017会议论文)的Multi-task for NMS部分。

Soft-NMS

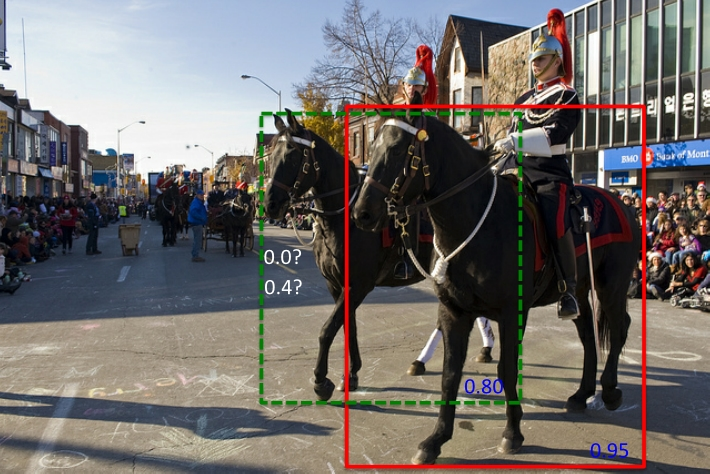

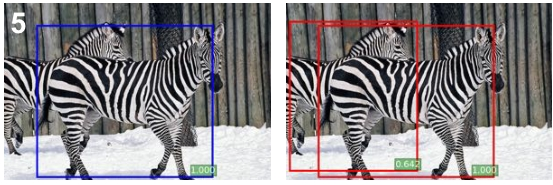

上述NMS算法的一个主要问题是当两个ground truth的目标的确重叠度很高时,NMS会将具有较低置信度的框去掉(置信度改成0),参见下图所示.

论文:《Improving Object Detection With One Line of Code》

改进之处:

改进方法在于将置信度改为IoU的函数:f(IoU),具有较低的值而不至于从排序列表中删去.

-

线性函数

si={si,si(1−iou(�,bi)),iou(�,bi)<Ntiou(�,bi)≥Ntsi={si,iou(M,bi)<Ntsi(1−iou(M,bi)),iou(M,bi)≥Nt

函数值不连续,在某一点的值发生跳跃. -

高斯函数

si=sie−iou(�,bi)2σ,∀bi∉�si=sie−iou(M,bi)2σ,∀bi∉D

时间复杂度同传统的greedy-NMS,为�(N2)O(N2).

ua = float((tx2 - tx1 + 1) * (ty2 - ty1 + 1) + area - iw * ih) ov = iw * ih / ua #iou between max box and detection box if method == 1: # linear if ov > Nt: weight = 1 - ov else: weight = 1 elif method == 2: # gaussian weight = np.exp(-(ov * ov)/sigma) else: # original NMS if ov > Nt: weight = 0 else: weight = 1 # re-scoring 修改置信度 boxes[pos, 4] = weight*boxes[pos, 4]

Caffe C++ 版实现: makefile/frcnn

效果

| training data | testing data | mAP | mAP@0.5 | mAP@0.75 | mAP@S | mAP@M | mAP@L | Recall | |

|---|---|---|---|---|---|---|---|---|---|

| Baseline D-R-FCN | coco trainval | coco test-dev | 35.7 | 56.8 | 38.3 | 15.2 | 38.8 | 51.5 | |

| D-R-FCN, ResNet-v1-101, NMS | coco trainval | coco test-dev | 37.4 | 59.6 | 40.2 | 17.8 | 40.6 | 51.4 | 48.3 |

| D-R-FCN, ResNet-v1-101, SNMS | coco trainval | coco test-dev | 38.4 | 60.1 | 41.6 | 18.5 | 41.6 | 52.5 | 53.8 |

| D-R-FCN, ResNet-v1-101, MST, NMS | coco trainval | coco test-dev | 39.8 | 62.4 | 43.3 | 22.6 | 42.3 | 52.2 | 52.9 |

| D-R-FCN, ResNet-v1-101, MST, SNMS | coco trainval | coco test-dev | 40.9 | 62.8 | 45.0 | 23.3 | 43.6 | 53.3 | 60.4 |

在基于proposal方法的模型结果上应用比较好,检测效果提升:

在R-FCN以及Faster-RCNN模型中的测试阶段运用Soft-NMS,在MS-COCO数据集上mAP@[0.5:0.95]能够获得大约1%的提升(详见这里). 如果应用到训练阶段的proposal选取过程理论上也能获得提升. 在自己的实验中发现确实对易重叠的目标类型有提高(目标不一定真的有像素上的重叠,切斜的目标的矩形边框会有较大的重叠).

而在SSD,YOLO等非proposal方法中没有提升.