11. Deploy Docker

1. Download and distribute the Docker binaries

1. Download the package
[root@k8s-master01 ~]# cd /opt/k8s/work/
[root@k8s-master01 work]# wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz
[root@k8s-master01 work]# tar -xzvf docker-19.03.9.tgz

2. Distribute the package
Copy the binaries to every worker node:
[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp docker/* root@${node_ip}:/opt/k8s/bin/
    ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"
  done

2. Create and distribute the systemd unit file

1. Create the systemd unit file
[root@k8s-master01 work]# cat > docker.service <<"EOF"
[Unit]
Description=Docker Application Container Engine
Documentation=http://docs.docker.io

[Service]
WorkingDirectory=##DOCKER_DIR##
Environment="PATH=/opt/k8s/bin:/bin:/sbin:/usr/bin:/usr/sbin"
ExecStart=/opt/k8s/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
Restart=on-failure
RestartSec=5
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
Delegate=yes
KillMode=process

[Install]
WantedBy=multi-user.target
EOF

Change the iptables firewall policy. Since Docker 1.13, dockerd sets the default policy of the iptables FORWARD chain to DROP, which can block forwarded traffic, so set it to ACCEPT on every worker and append the command to /etc/rc.local so it is reapplied at boot:
[root@k8s-master01 work]# for i in ${WORK_IPS[@]}; do echo ">>> $i"; ssh root@$i "iptables -P FORWARD ACCEPT"; done
[root@k8s-master01 work]# for i in ${WORK_IPS[@]}; do echo ">>> $i"; ssh root@$i "echo '/sbin/iptables -P FORWARD ACCEPT' >> /etc/rc.local"; done

2. Distribute the systemd unit file to all worker machines
[root@k8s-master01 work]# sed -i -e "s|##DOCKER_DIR##|${DOCKER_DIR}|" docker.service
[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp docker.service root@${node_ip}:/etc/systemd/system/
  done
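The `sed` call above uses `|` rather than `/` as its delimiter because `${DOCKER_DIR}` is a path full of slashes. Below is a minimal bash sketch of the same substitution wrapped in a helper that also refuses an empty directory and fails if any `##...##` placeholder is left over; the function name `render_docker_unit` is hypothetical, not part of the original procedure.

```shell
# Hypothetical helper: render docker.service from a template that contains
# ##DOCKER_DIR##, writing the result to a separate output file.
render_docker_unit() {
  local docker_dir=$1 template=$2 output=$3
  # An empty value would leave "WorkingDirectory=" blank.
  if [ -z "$docker_dir" ]; then
    echo "DOCKER_DIR is empty" >&2
    return 1
  fi
  # "|" is the sed delimiter because the replacement is a path containing "/".
  sed -e "s|##DOCKER_DIR##|${docker_dir}|g" "$template" > "$output" || return 1
  # Fail if any ##PLACEHOLDER## survived the substitution.
  if grep -q '##[A-Z_]*##' "$output"; then
    echo "unrendered placeholder left in $output" >&2
    return 1
  fi
}
```

For example, run `render_docker_unit "${DOCKER_DIR}" docker.service docker.service.rendered` and then copy it with `scp docker.service.rendered root@${node_ip}:/etc/systemd/system/docker.service`, which leaves the template untouched. A `DOCKER_DIR` containing `|` or `&` would still need escaping.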

3. Configure and distribute the Docker configuration file

Use a registry mirror inside China to speed up image pulls, and raise the number of concurrent downloads (dockerd must be restarted for this to take effect).

1. Configure the Docker registry mirror
Because of network conditions, pulling from the official Docker Hub by default often fails, so the Aliyun Docker accelerator is used instead. Log in to your own Aliyun account to get your personal accelerator (mirror) address and put it into the file:
[root@k8s-master01 work]# cat > docker-daemon.json <<EOF
{
    "registry-mirrors": ["https://9l7fbd59.mirror.aliyuncs.com"],
    "insecure-registries": ["docker02:35000"],
    "max-concurrent-downloads": 20,
    "live-restore": true,
    "max-concurrent-uploads": 10,
    "debug": true,
    "data-root": "${DOCKER_DIR}/data",
    "exec-root": "${DOCKER_DIR}/exec",
    "log-opts": {
      "max-size": "100m",
      "max-file": "5"
    }
}
EOF

2. Distribute it to the worker nodes
[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "mkdir -p /etc/docker/ ${DOCKER_DIR}/{data,exec}"
    scp docker-daemon.json root@${node_ip}:/etc/docker/daemon.json
  done
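Because the heredoc above uses an unquoted `EOF`, `${DOCKER_DIR}` is expanded when the file is written, so an unset variable silently turns `data-root` into `/data`. A quick check before distributing the file is to print the resolved paths. The helper below is a hypothetical, deliberately naive `sed` extractor that only handles `"key": "string"` pairs on a single line; it is not a JSON parser. If `jq` is installed, `jq -r '."data-root"' docker-daemon.json` is the more robust choice.

```shell
# Hypothetical, naive extractor: print the value of a one-line
# "key": "string" pair from a JSON file. Non-string values print nothing.
json_string_field() {
  local key=$1 file=$2
  sed -n "s/.*\"${key}\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p" "$file"
}
```

For example, `json_string_field data-root docker-daemon.json` should print `${DOCKER_DIR}/data` with the variable already resolved; an output of `/data` means `DOCKER_DIR` was empty when the file was generated.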

4. Start the Docker service

[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "systemctl daemon-reload && systemctl enable docker && systemctl restart docker"
  done

5. Check the service status

[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "systemctl status docker|grep Active"
  done

6. Check the docker0 bridge

[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "/usr/sbin/ip addr show docker0"
  done

12. Deploy the kubelet component

1. Create the kubelet bootstrap kubeconfig files

1. Create the files
[root@k8s-master01 ~]# cd /opt/k8s/work
[root@k8s-master01 work]# for node_name in ${WORK_NAMES[@]}
  do
    echo ">>> ${node_name}"
    # Create a token
    export BOOTSTRAP_TOKEN=$(kubeadm token create \
      --description kubelet-bootstrap-token \
      --groups system:bootstrappers:${node_name} \
      --kubeconfig ~/.kube/config)
    # Set the cluster parameters
    kubectl config set-cluster kubernetes \
      --certificate-authority=/etc/kubernetes/cert/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig
    # Set the client authentication parameters
    kubectl config set-credentials kubelet-bootstrap \
      --token=${BOOTSTRAP_TOKEN} \
      --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig
    # Set the context parameters
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kubelet-bootstrap \
      --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig
    # Use the default context
    kubectl config use-context default --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig
  done

Explanation: what is written into each kubeconfig is a token. After bootstrapping finishes, kube-controller-manager creates the client and server certificates for kubelet. Tokens created by kubeadm are valid for 24 hours by default (see the TTL column below), so kubelet should be started before they expire.

2. View the tokens kubeadm created for each node
[root@k8s-master01 work]# kubeadm token list --kubeconfig ~/.kube/config

TOKEN                     TTL   EXPIRES                     USAGES                   DESCRIPTION               EXTRA GROUPS
8p1fcg.u69ugzieq9edhgio   23h   2021-03-11T19:03:26+08:00   authentication,signing   kubelet-bootstrap-token   system:bootstrappers:k8s-node02
gidgud.wk9ok4ib3wgbjvc9   23h   2021-03-11T19:03:27+08:00   authentication,signing   kubelet-bootstrap-token   system:bootstrappers:k8s-node03
yky6w8.if1n6tlghxi5rk57   23h   2021-03-11T19:03:26+08:00   authentication,signing   kubelet-bootstrap-token   system:bootstrappers:k8s-node01

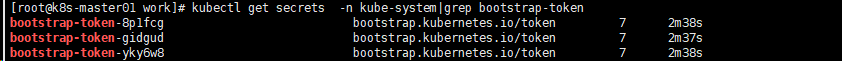

3. View the Secret associated with each token
[root@k8s-master01 work]# kubectl get secrets -n kube-system|grep bootstrap-token
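Each bootstrap token has the form `<token-id>.<token-secret>`, six plus sixteen characters from `[a-z0-9]`, and is stored in `kube-system` as a Secret named `bootstrap-token-<token-id>`, which is what the `grep bootstrap-token` above picks out. A small bash sketch (hypothetical function names) that checks a token's format and derives the name of its Secret:

```shell
# Hypothetical helpers for kubeadm bootstrap tokens of the form
# "<token-id>.<token-secret>" (6 + 16 characters from [a-z0-9]).
is_bootstrap_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Print the name of the kube-system Secret that stores the token.
bootstrap_secret_name() {
  is_bootstrap_token "$1" || return 1
  echo "bootstrap-token-${1%%.*}"   # keep only the part before the "."
}
```

For example, `kubectl -n kube-system get secret "$(bootstrap_secret_name "$BOOTSTRAP_TOKEN")"` shows the Secret behind one node's token.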

2. Distribute the bootstrap kubeconfig files to all worker nodes

[root@k8s-master01 work]# for node_name in ${WORK_NAMES[@]}
  do
    echo ">>> ${node_name}"
    scp kubelet-bootstrap-${node_name}.kubeconfig root@${node_name}:/etc/kubernetes/kubelet-bootstrap.kubeconfig
  done

3. Create and distribute the kubelet parameter configuration file

1. Create the kubelet parameter configuration template
**Note:** this must be run as root.
[root@k8s-master01 work]# cat <<EOF | tee kubelet-config.yaml.template
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/etc/kubernetes/cert/ca.pem"
authorization:
  mode: Webhook
clusterDomain: "${CLUSTER_DNS_DOMAIN}"
clusterDNS:
  - "${CLUSTER_DNS_SVC_IP}"
podCIDR: "${CLUSTER_CIDR}"
maxPods: 220
serializeImagePulls: false
hairpinMode: promiscuous-bridge
cgroupDriver: cgroupfs
runtimeRequestTimeout: "15m"
rotateCertificates: true
serverTLSBootstrap: true
readOnlyPort: 0
port: 10250
address: "##NODE_IP##"
EOF

2. Create and distribute the kubelet configuration file for each node
[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    sed -e "s/##NODE_IP##/${node_ip}/" kubelet-config.yaml.template > kubelet-config-${node_ip}.yaml.template
    scp kubelet-config-${node_ip}.yaml.template root@${node_ip}:/etc/kubernetes/kubelet-config.yaml
  done

4. Create and distribute the kubelet systemd unit file

1. Create the kubelet systemd unit file template
[root@k8s-master01 work]# cat > kubelet.service.template <<EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=${K8S_DIR}/kubelet
ExecStart=/opt/k8s/bin/kubelet \
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \
  --cert-dir=/etc/kubernetes/cert \
  --network-plugin=cni \
  --cni-conf-dir=/etc/cni/net.d \
  --root-dir=${K8S_DIR}/kubelet \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --config=/etc/kubernetes/kubelet-config.yaml \
  --hostname-override=##NODE_NAME## \
  --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause \
  --image-pull-progress-deadline=15m \
  --volume-plugin-dir=${K8S_DIR}/kubelet/kubelet-plugins/volume/exec/ \
  --logtostderr=true \
  --v=2
Restart=always
RestartSec=5
StartLimitInterval=0

[Install]
WantedBy=multi-user.target
EOF

2. Create and distribute the kubelet systemd unit file for each node
[root@k8s-master01 work]# for node_name in ${WORK_NAMES[@]}
  do
    echo ">>> ${node_name}"
    sed -e "s/##NODE_NAME##/${node_name}/" kubelet.service.template > kubelet-${node_name}.service
    scp kubelet-${node_name}.service root@${node_name}:/etc/systemd/system/kubelet.service
  done

5. Grant kube-apiserver permission to access the kubelet API

[root@k8s-master01 work]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes-master

6. Bootstrap token authentication and granting permissions

[root@k8s-master01 work]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

7. Automatically approve CSRs to generate the kubelet client certificates

[root@k8s-master01 ~]# cd /opt/k8s/work
[root@k8s-master01 work]# cat > csr-crb.yaml <<EOF
# Approve all CSRs for the group "system:bootstrappers"
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io
---
# To let a node of the group "system:nodes" renew its own credentials
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-client-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io
---
# A ClusterRole which instructs the CSR approver to approve a node requesting a
# serving cert matching its client cert.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: approve-node-server-renewal-csr
rules:
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests/selfnodeserver"]
  verbs: ["create"]
---
# To let a node of the group "system:nodes" renew its own server credentials
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-server-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: approve-node-server-renewal-csr
  apiGroup: rbac.authorization.k8s.io
EOF
[root@k8s-master01 work]# kubectl apply -f csr-crb.yaml

8. Start the kubelet service

[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kubelet"
    ssh root@${node_ip} "/usr/sbin/swapoff -a"
    ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kubelet && systemctl restart kubelet"
  done

9. Check kubelet status

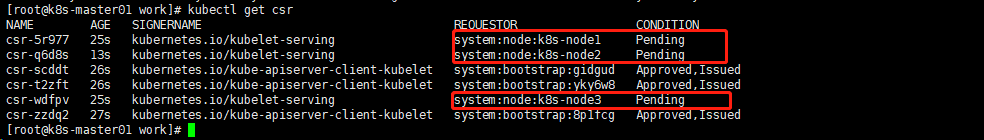

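- After a short while, the client CSRs of all three nodes are approved automatically; the CSRs still in Pending state are the ones for the kubelet server certificates: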

[root@k8s-master01 work]# kubectl get csr

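All nodes have registered. The expected final state is Ready; NotReady at this point is normal because no network plugin has been deployed yet, and the nodes become Ready once it is installed.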

[root@k8s-master01 work]# kubectl get node
NAME         STATUS     ROLES    AGE   VERSION
k8s-node01   NotReady   <none>   18h   v1.18.15
k8s-node02   NotReady   <none>   18h   v1.18.15
k8s-node03   NotReady   <none>   18h   v1.18.15

- kube-controller-manager has generated a kubeconfig file and a key pair for each node

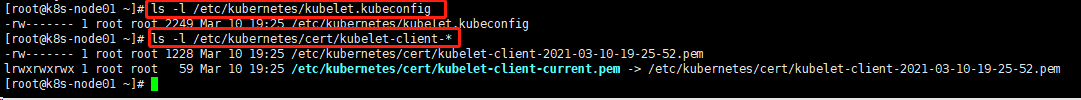

- Note: run the following commands on a worker node to check:

ls -l /etc/kubernetes/kubelet.kubeconfig

ls -l /etc/kubernetes/cert/kubelet-client-*

As shown, the kubelet server certificate files have not been generated automatically.

10. Manually approve the server certificate CSRs

For security reasons, the CSR approving controllers do not automatically approve kubelet server certificate signing requests; they must be approved manually.

[root@k8s-master01 ~]# kubectl get csr

Approve them manually:

[root@k8s-master01 ~]# kubectl get csr | grep Pending | awk '{print $1}' | xargs kubectl certificate approve
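The pipeline above approves every line that contains the word `Pending` anywhere. A slightly stricter bash sketch (the function name `pending_csrs` is hypothetical) skips the header row and only matches when the last column, CONDITION, is exactly `Pending`:

```shell
# Hypothetical filter: read `kubectl get csr` output on stdin and print the
# names (first column) of CSRs whose last column (CONDITION) is "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Usage: `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve`; GNU `xargs -r` avoids running `kubectl certificate approve` with no arguments when nothing is pending. Approving everything blindly defeats the point of manual approval, so it is worth checking the REQUESTOR column first.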

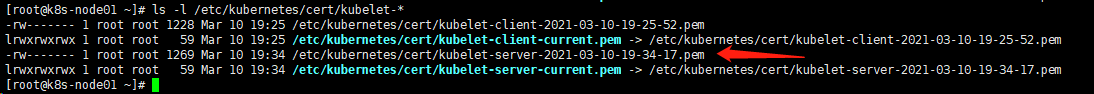

Check on a worker node: the server certificate has now been generated.

ls -l /etc/kubernetes/cert/kubelet-*

11. kubelet API authentication and authorization

Requests without credentials, or with an invalid bearer token, are rejected as Unauthorized:
[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem https://172.31.46.90:10250/metrics
Unauthorized
[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem https://172.31.46.103:10250/metrics
Unauthorized
[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem https://172.31.46.101:10250/metrics
Unauthorized
[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer 123456" https://172.31.46.90:10250/metrics
Unauthorized

12. Certificate authentication and authorization

# A certificate with insufficient permissions
[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem --cert /etc/kubernetes/cert/kube-controller-manager.pem --key /etc/kubernetes/cert/kube-controller-manager-key.pem https://172.31.46.90:10250/metrics
Forbidden (user=system:kube-controller-manager, verb=get, resource=nodes, subresource=metrics)

Use the admin certificate, which has the highest privileges and was created when deploying the kubectl command-line tool:
[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://172.31.46.90:10250/metrics|head

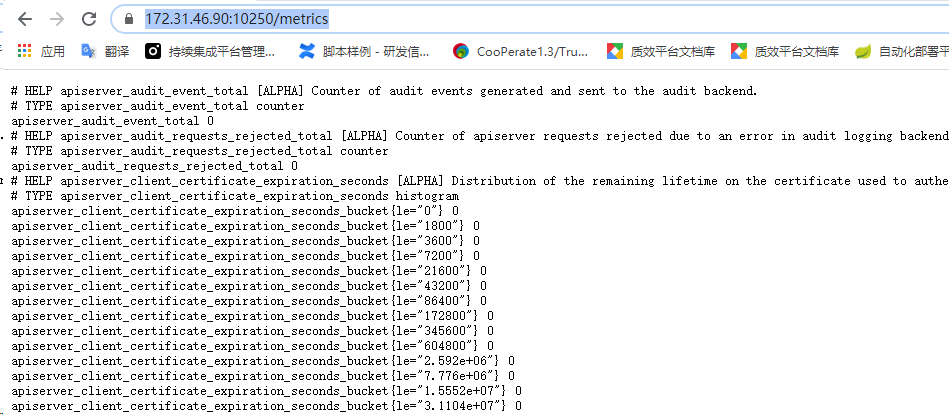

13. Bearer token authentication and authorization

# Create a ServiceAccount and bind it to the ClusterRole system:kubelet-api-admin so that it can call the kubelet API
[root@k8s-master01 ~]# kubectl create sa kubelet-api-test
serviceaccount/kubelet-api-test created
[root@k8s-master01 ~]# kubectl create clusterrolebinding kubelet-api-test --clusterrole=system:kubelet-api-admin --serviceaccount=default:kubelet-api-test
clusterrolebinding.rbac.authorization.k8s.io/kubelet-api-test created
[root@k8s-master01 ~]# SECRET=$(kubectl get secrets | grep kubelet-api-test | awk '{print $1}')
[root@k8s-master01 ~]# TOKEN=$(kubectl describe secret ${SECRET} | grep -E '^token' | awk '{print $2}')
[root@k8s-master01 ~]# echo ${TOKEN}
[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer ${TOKEN}" https://172.31.46.90:10250/metrics | head
# HELP apiserver_audit_event_total [ALPHA] Counter of audit events generated and sent to the audit backend.
# TYPE apiserver_audit_event_total counter
apiserver_audit_event_total 0
# HELP apiserver_audit_requests_rejected_total [ALPHA] Counter of apiserver requests rejected due to an error in audit logging backend.
# TYPE apiserver_audit_requests_rejected_total counter
apiserver_audit_requests_rejected_total 0
# HELP apiserver_client_certificate_expiration_seconds [ALPHA] Distribution of the remaining lifetime on the certificate used to authenticate a request.
# TYPE apiserver_client_certificate_expiration_seconds histogram
apiserver_client_certificate_expiration_seconds_bucket{le="0"} 0
apiserver_client_certificate_expiration_seconds_bucket{le="1800"} 0
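The `grep -E '^token' | awk '{print $2}'` pipeline above pulls the token out of `kubectl describe secret`. A bash sketch of the same extraction as a single `awk` filter (the function name is hypothetical) that matches the exact field name `token:` instead of any line starting with `token`:

```shell
# Hypothetical filter: read `kubectl describe secret` output on stdin and print
# the value of the "token:" field.
token_from_describe() {
  awk '$1 == "token:" { print $2 }'
}
```

Usage: `TOKEN=$(kubectl describe secret ${SECRET} | token_from_describe)`. Alternatively, read the base64-encoded field directly with `kubectl get secret ${SECRET} -o jsonpath='{.data.token}' | base64 -d`, which avoids parsing human-oriented output altogether.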

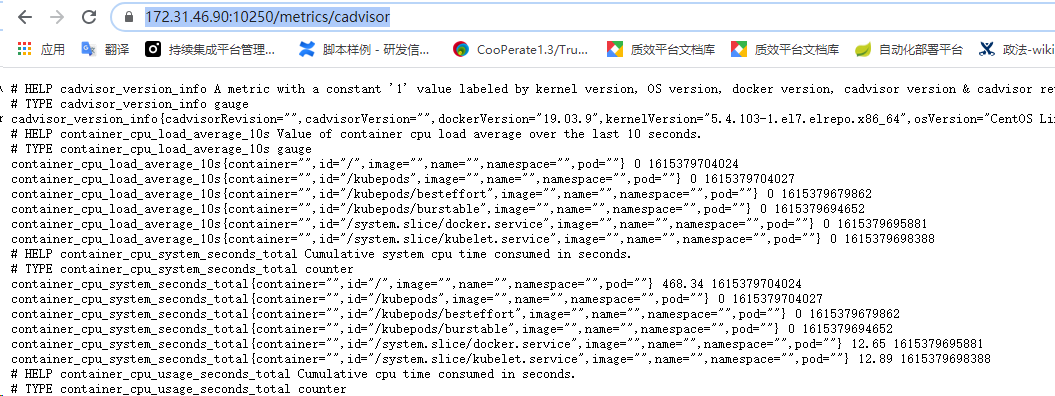

14. cAdvisor and metrics

- cAdvisor is built into the kubelet binary. It collects resource usage (CPU, memory, disk, network) for each container on its node.

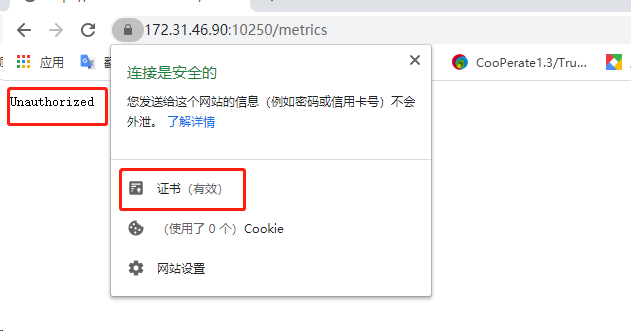

- In a browser, https://172.31.46.90:10250/metrics and https://172.31.46.90:10250/metrics/cadvisor return the kubelet and cAdvisor metrics respectively.

Note:

- kubelet-config.yaml sets authentication.anonymous.enabled to false, so anonymous requests to the HTTPS service on port 10250 are rejected;

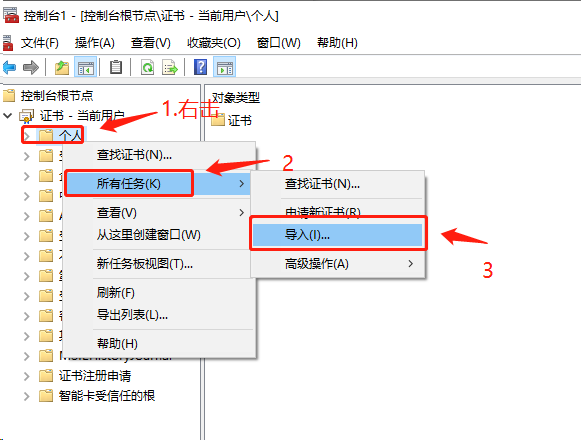

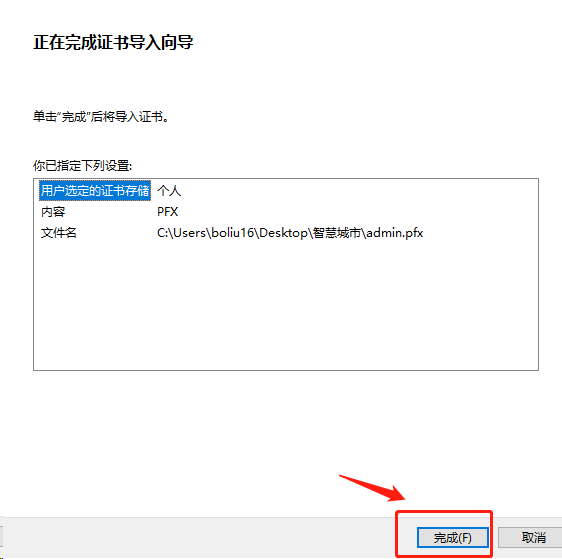

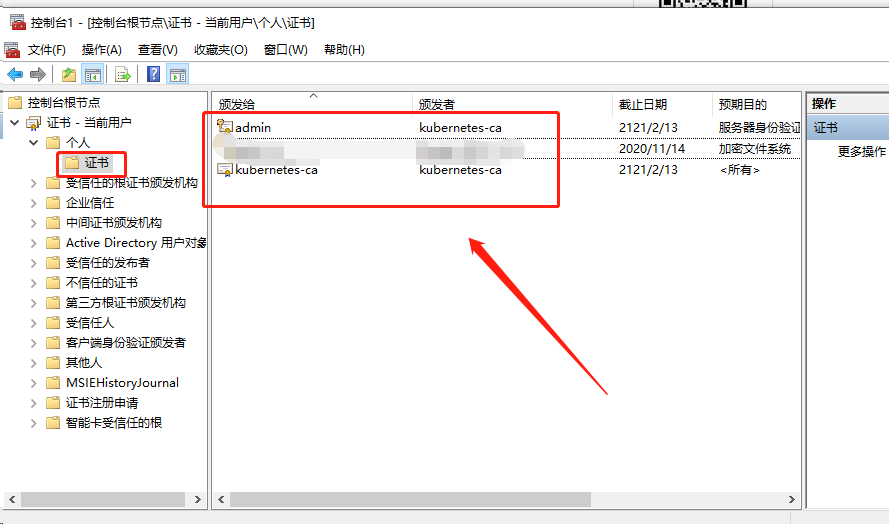

- Create and import the relevant certificates, then access port 10250 as above;

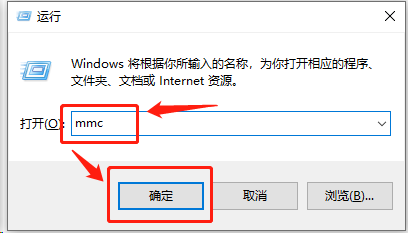

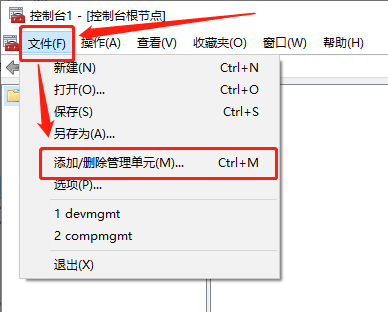

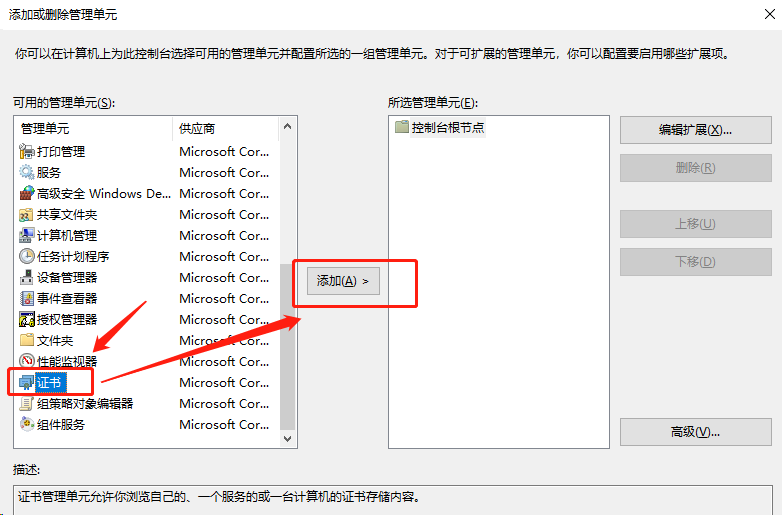

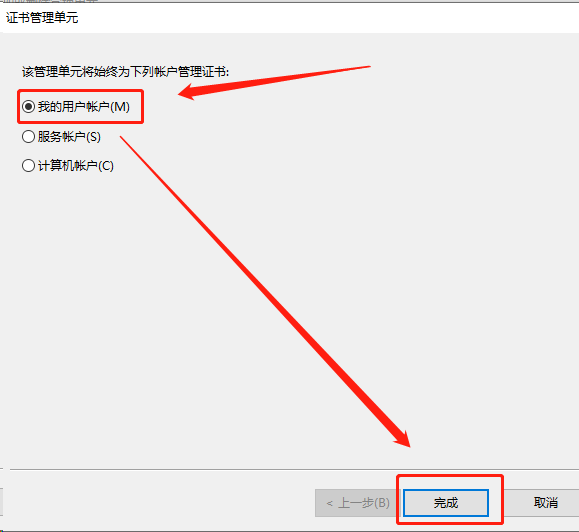

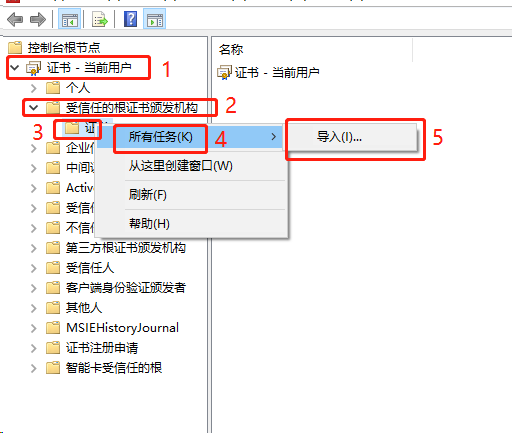

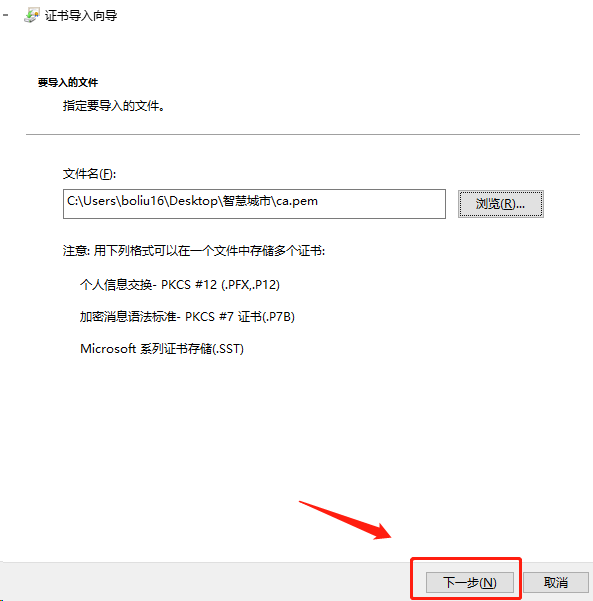

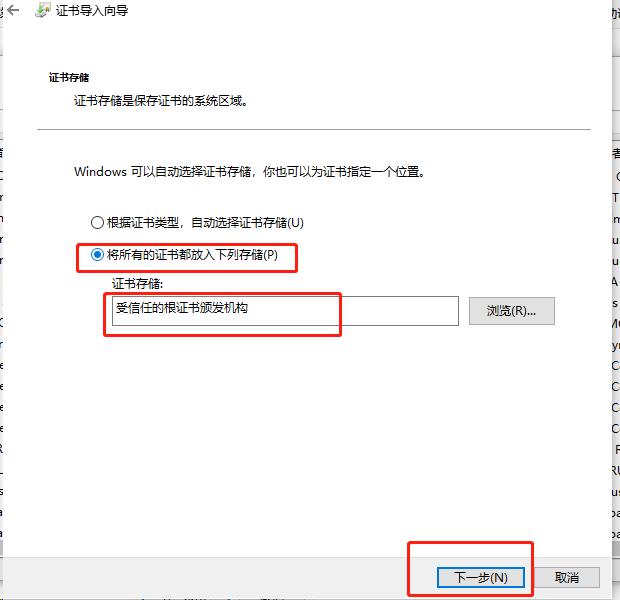

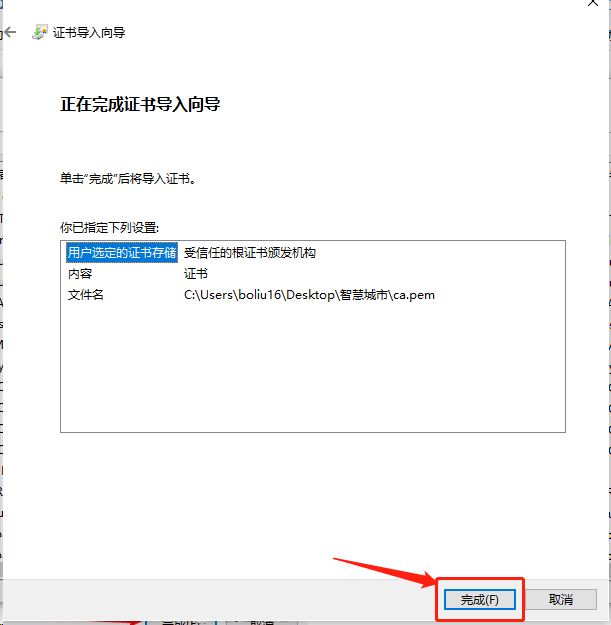

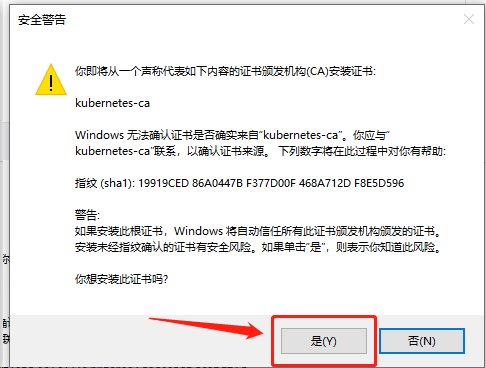

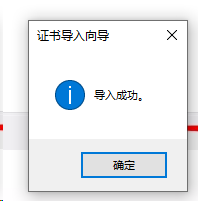

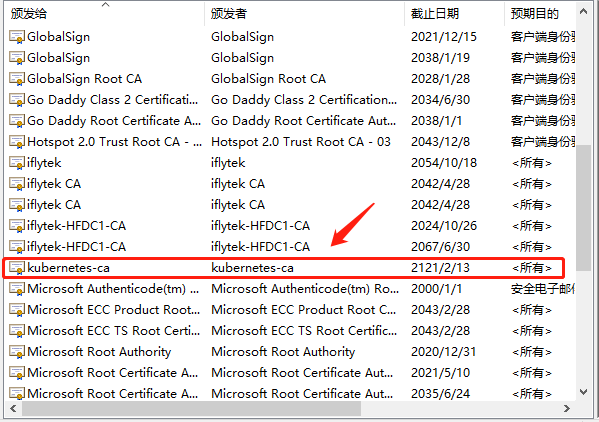

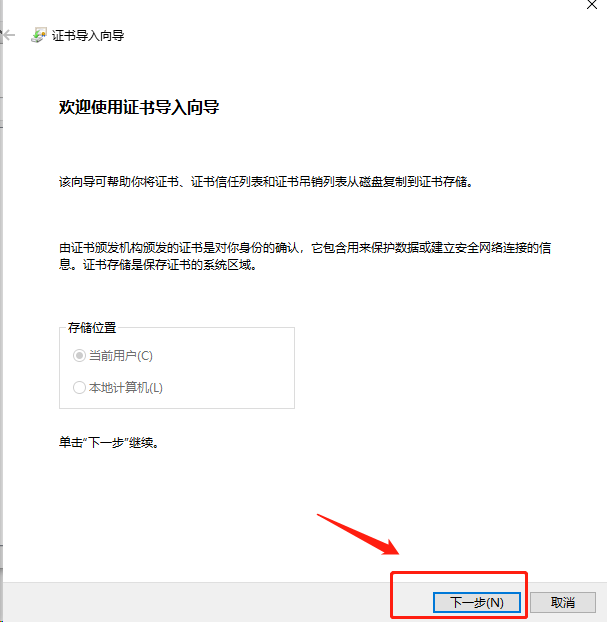

1. Import the root certificate

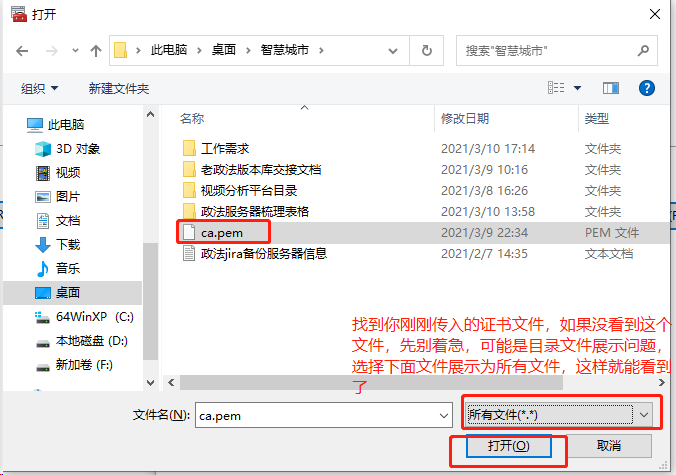

Download the root certificate ca.pem from the server (/opt/k8s/work) to your own PC, then import it into the operating system and mark it as always trusted:

[root@k8s-master01 work]# cd /opt/k8s/work/
[root@k8s-master01 work]# ls ca*
ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem

[root@k8s-master01 work]# sz ca.pem

The certificate is now trusted, but the connection still returns 401 Unauthorized.

2. Create a browser certificate

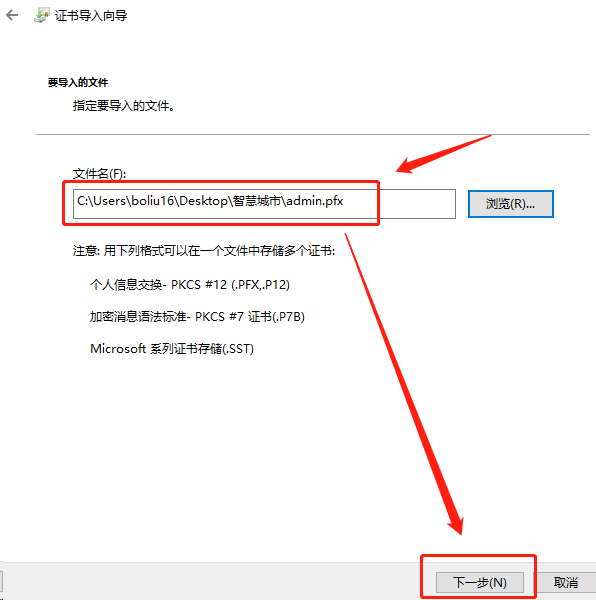

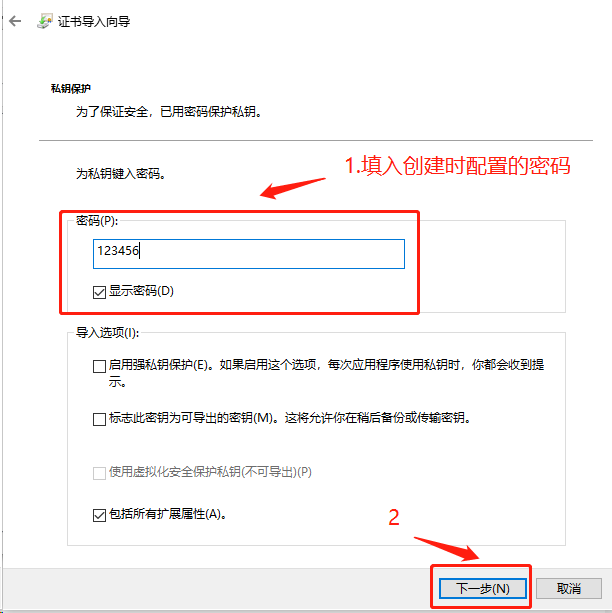

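Here, use the admin certificate and private key created for the kubectl command-line tool, together with the CA certificate above, to create a certificate in PKCS#12/PFX format that browsers can use: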

[root@k8s-master01 ~]# cd /opt/k8s/work/
[root@k8s-master01 work]# openssl pkcs12 -export -out admin.pfx -inkey admin-key.pem -in admin.pem -certfile ca.pem
Enter Export Password:
Verifying - Enter Export Password:

[root@k8s-master01 work]# sz admin.pfx

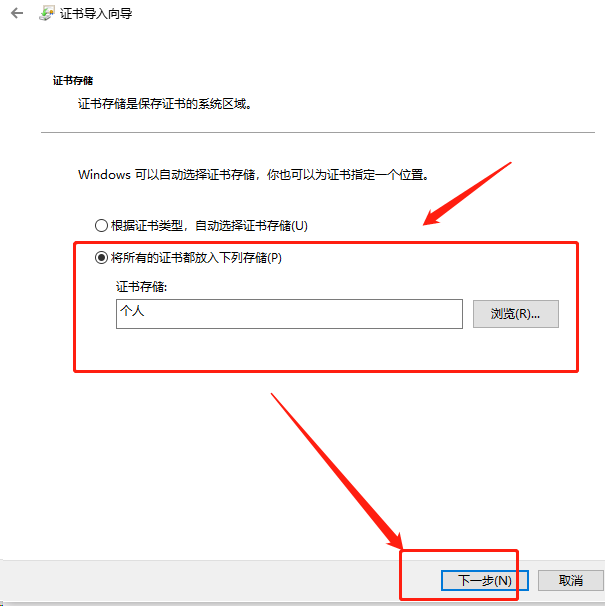

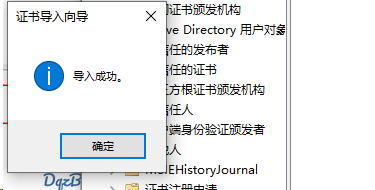

Import the generated admin.pfx into the system certificate store.

Restart the browser and visit the URLs again, selecting the admin.pfx imported above when prompted:

https://172.31.46.90:10250/metrics

https://172.31.46.90:10250/metrics/cadvisor

13. Deploy the kube-proxy component

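- kube-proxy runs on all worker nodes. It watches the apiserver for changes to Services and Endpoints and creates routing rules to provide Service IPs and load balancing.
- Unless otherwise noted, all of the following operations are performed on k8s-master01, using remote commands where needed.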

1. Create the kube-proxy certificate

1. Create the certificate signing request
The CN system:kube-proxy is the user that the built-in ClusterRoleBinding system:node-proxier grants kube-proxy's permissions to.
[root@k8s-master01 ~]# cd /opt/k8s/work
[root@k8s-master01 work]# cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "opsnull"
    }
  ]
}
EOF

2. Generate the certificate and private key
[root@k8s-master01 ~]# cd /opt/k8s/work
[root@k8s-master01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
[root@k8s-master01 work]# ls kube-proxy*
kube-proxy.csr  kube-proxy-csr.json  kube-proxy-key.pem  kube-proxy.pem

2. Create and distribute the kubeconfig file

1. Create the kubeconfig file
[root@k8s-master01 work]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig
[root@k8s-master01 work]# kubectl config set-credentials kube-proxy \
  --client-certificate=kube-proxy.pem \
  --client-key=kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig
[root@k8s-master01 work]# kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig
[root@k8s-master01 work]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

2. Distribute the kubeconfig file
Copy it to all worker nodes:
[root@k8s-master01 work]# for node_name in ${WORK_NAMES[@]}
  do
    echo ">>> ${node_name}"
    scp kube-proxy.kubeconfig root@${node_name}:/etc/kubernetes/
  done

3. Create the kube-proxy configuration file

1. Create the kube-proxy config file template
[root@k8s-master01 work]# cat > kube-proxy-config.yaml.template <<EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  burst: 200
  kubeconfig: "/etc/kubernetes/kube-proxy.kubeconfig"
  qps: 100
bindAddress: ##NODE_IP##
healthzBindAddress: ##NODE_IP##:10256
metricsBindAddress: ##NODE_IP##:10249
enableProfiling: true
clusterCIDR: ${CLUSTER_CIDR}
hostnameOverride: ##NODE_NAME##
mode: "ipvs"
portRange: ""
iptables:
  masqueradeAll: false
ipvs:
  scheduler: rr
  excludeCIDRs: []
EOF

2. Create and distribute the kube-proxy configuration file for each worker node (the loop runs over every entry in WORK_NAMES rather than a fixed count of 3)
[root@k8s-master01 work]# for (( i=0; i < ${#WORK_NAMES[@]}; i++ ))
  do
    echo ">>> ${WORK_NAMES[i]}"
    sed -e "s/##NODE_NAME##/${WORK_NAMES[i]}/" -e "s/##NODE_IP##/${WORK_IPS[i]}/" kube-proxy-config.yaml.template > kube-proxy-config-${WORK_NAMES[i]}.yaml.template
    scp kube-proxy-config-${WORK_NAMES[i]}.yaml.template root@${WORK_NAMES[i]}:/etc/kubernetes/kube-proxy-config.yaml
  done
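The loop above pairs `WORK_NAMES[i]` with `WORK_IPS[i]`, so the two arrays must list the nodes in the same order. A bash sketch of the rendering step (hypothetical function name; output goes to a local directory instead of being copied with `scp`) that takes the node count from the array length and refuses arrays of different lengths:

```shell
# Hypothetical helper: render one kube-proxy config per worker, pairing
# WORK_NAMES[i] with WORK_IPS[i] (both are global bash arrays).
render_proxy_configs() {
  local template=$1 outdir=$2 i
  if [ "${#WORK_NAMES[@]}" -ne "${#WORK_IPS[@]}" ]; then
    echo "WORK_NAMES and WORK_IPS differ in length" >&2
    return 1
  fi
  for (( i = 0; i < ${#WORK_NAMES[@]}; i++ )); do
    sed -e "s/##NODE_NAME##/${WORK_NAMES[i]}/g" \
        -e "s/##NODE_IP##/${WORK_IPS[i]}/g" \
        "$template" > "${outdir}/kube-proxy-config-${WORK_NAMES[i]}.yaml"
  done
}
```

Rendering locally first makes it easy to diff the per-node files before anything is copied to the workers.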

4. Create and distribute the kube-proxy systemd unit file

1. Create the file
[root@k8s-master01 ~]# cd /opt/k8s/work
[root@k8s-master01 work]# cat > kube-proxy.service <<EOF
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=${K8S_DIR}/kube-proxy
ExecStart=/opt/k8s/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy-config.yaml \
  --logtostderr=true \
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

2. Distribute the file
[root@k8s-master01 work]# for node_name in ${WORK_NAMES[@]}
  do
    echo ">>> ${node_name}"
    scp kube-proxy.service root@${node_name}:/etc/systemd/system/
  done

5. Start the kube-proxy service

[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kube-proxy"
    ssh root@${node_ip} "modprobe ip_vs_rr"
    ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-proxy && systemctl restart kube-proxy"
  done
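`modprobe ip_vs_rr` loads the round-robin IPVS scheduler that `scheduler: rr` in the kube-proxy config relies on. A small bash sketch (hypothetical function name) for confirming a module is loaded by looking it up in `/proc/modules`, the file `lsmod` reads. A module compiled into the kernel rather than loaded as a module does not appear there, so treat a miss as a hint rather than proof.

```shell
# Hypothetical check: succeed if the named module appears in a
# /proc/modules-style file (defaults to the real /proc/modules).
module_loaded() {
  local name=$1 file=${2:-/proc/modules}
  # The first field of each line is the module name.
  awk -v m="$name" '$1 == m { found = 1 } END { exit !found }' "$file"
}
```

To run it against a worker, the same lookup can be done remotely, for example `ssh root@${node_ip} "grep -w '^ip_vs_rr' /proc/modules"`.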

6. Check the startup result

[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "systemctl status kube-proxy|grep Active"
  done

7. Check the listening ports

Log in to each worker node and run the following command to check that kube-proxy is listening:

[root@k8s-node01 ~]# netstat -lnpt|grep kube-prox
tcp   0   0 172.31.46.90:10256   0.0.0.0:*   LISTEN   29926/kube-proxy
tcp   0   0 172.31.46.90:10249   0.0.0.0:*   LISTEN   29926/kube-proxy

8. View the IPVS routing rules

[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "/usr/sbin/ipvsadm -ln"
  done
>>> 172.31.46.90
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.254.0.1:443 rr
  -> 172.31.46.78:6443            Masq    1      0          0
  -> 172.31.46.83:6443            Masq    1      0          0
  -> 172.31.46.86:6443            Masq    1      0          0
>>> 172.31.46.103
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.254.0.1:443 rr
  -> 172.31.46.78:6443            Masq    1      0          0
  -> 172.31.46.83:6443            Masq    1      0          0
  -> 172.31.46.86:6443            Masq    1      0          0
>>> 172.31.46.101
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.254.0.1:443 rr
  -> 172.31.46.78:6443            Masq    1      0          0
  -> 172.31.46.83:6443            Masq    1      0          0
  -> 172.31.46.86:6443            Masq    1      0          0

As shown, all HTTPS requests to the K8S Service kubernetes are forwarded to port 6443 on the kube-apiserver nodes.
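With many Services, `ipvsadm -ln` output gets long. A bash sketch (hypothetical function name) that condenses it to one line per TCP/UDP virtual service followed by the number of real servers behind it; for each node's output above it would print `TCP 10.254.0.1:443 3`.

```shell
# Hypothetical parser for `ipvsadm -ln` output on stdin: print each TCP/UDP
# virtual service followed by the count of "->" real-server lines under it.
ipvs_backend_counts() {
  awk '
    $1 == "TCP" || $1 == "UDP" {
      if (svc != "") print svc, n     # flush the previous service
      svc = $1 " " $2; n = 0; next
    }
    $1 == "->" && svc != "" { n++ }   # the header "->" line precedes any service
    END { if (svc != "") print svc, n }
  '
}
```

Usage: `ssh root@${node_ip} "/usr/sbin/ipvsadm -ln" | ipvs_backend_counts`; a count lower than the number of kube-apiserver nodes points at a missing backend.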

14. Deploy the Calico network

- Kubernetes requires that all nodes in the cluster (including master nodes) can reach each other over the Pod network.

- Calico uses IPIP or BGP (IPIP by default) to build a Pod network through which all nodes can communicate.

1. Download the Calico network plugin manifest

[root@k8s-master01 ~]# cd /opt/k8s/work [root@k8s-master01 work]# curl https://docs.projectcalico.org/manifests/calico.yaml -O

2. Modify the configuration

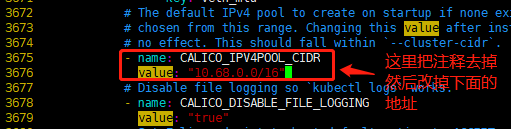

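- Change the Pod CIDR (CALICO_IPV4POOL_CIDR) to 10.68.0.0/16; set it according to your environment, matching the CLUSTER_CIDR used for kubelet and kube-proxy;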

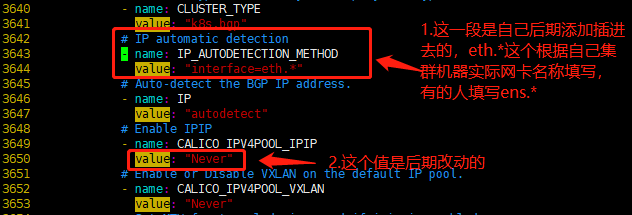

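- Calico auto-detects the interconnect NIC. If a host has several NICs, you can configure a regular expression for the name of the interface used for interconnection, such as en.* above (adjust it to your servers' interface names);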

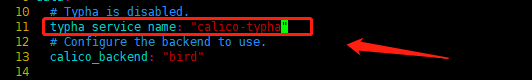

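- Modify typha_service_name;
- Change CALICO_IPV4POOL_IPIP to Never to use BGP mode. Calico is installed as a DaemonSet on every node, and each host runs a bird process (BGP client) that advertises the IP ranges assigned to every node in the Calico network to the other hosts in the cluster; traffic is then forwarded through the host's own NIC, such as eth0 or ens33;
- In addition, add IP_AUTODETECTION_METHOD set to interface (match mode). The default is first-found, which can still pick the wrong interface in complex network environments.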

# Back up the default file
[root@k8s-master01 work]# cp calico.yaml calico.yaml.bak
[root@k8s-master01 work]# vim calico.yaml
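The Pod CIDR edit can also be scripted instead of done in `vim`. The bash sketch below assumes, as in the calico.yaml manifests of the 3.x series, that the pool CIDR ships as a commented-out block `# - name: CALICO_IPV4POOL_CIDR` / `#   value: "192.168.0.0/16"`; check your downloaded file with `grep -n CALICO_IPV4POOL_CIDR calico.yaml` before relying on it. The function name is hypothetical, and `sed -i` as used here is the GNU form.

```shell
# Hypothetical, non-interactive edit of calico.yaml.
# ASSUMPTION: the manifest contains the commented-out default block
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
# Uncomment it and replace the default with the given CIDR, keeping indentation.
set_calico_pool_cidr() {
  local cidr=$1 file=$2
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "s|#   value: \"192.168.0.0/16\"|  value: \"${cidr}\"|" \
    "$file"
}
```

For example, `set_calico_pool_cidr 10.68.0.0/16 calico.yaml`, then `diff calico.yaml.bak calico.yaml` to confirm only those two lines changed. The IPIP and interface settings described above still need to be edited by hand.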

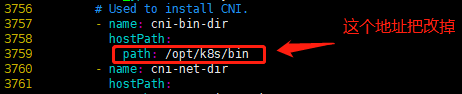

See the figure for the locations of these changes.

3. Apply the Calico plugin

[root@k8s-master01 work]# kubectl apply -f calico.yaml

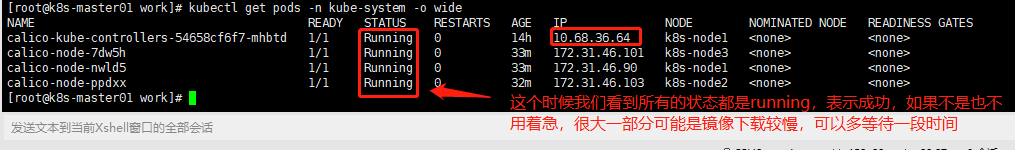

4. Check Calico status

[root@k8s-master01 work]# kubectl get pods -n kube-system -o wide

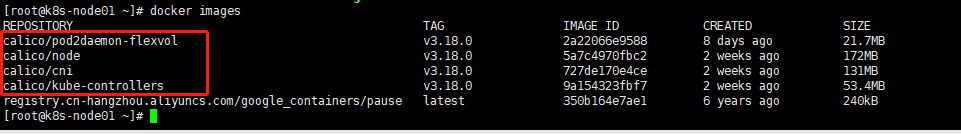

On a worker node, use the docker command to view the images Calico uses.

15. Verify the cluster status

1. Check node status

# Everything is normal when all nodes are Ready and at version v1.18.15.
[root@k8s-master01 ~]# cd /opt/k8s/work/
[root@k8s-master01 work]# kubectl get nodes
NAME         STATUS   ROLES    AGE   VERSION
k8s-node01   Ready    <none>   18h   v1.18.15
k8s-node02   Ready    <none>   18h   v1.18.15
k8s-node03   Ready    <none>   18h   v1.18.15

2. Create a test file

[root@k8s-master01 ~]# cd /opt/k8s/work
[root@k8s-master01 work]# cat > nginx-test.yml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - name: http
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: my-nginx
        image: nginx:1.16.1
        ports:
        - containerPort: 80
EOF

3. Run the test

[root@k8s-master01 work]# kubectl apply -f nginx-test.yml
service/nginx created
daemonset.apps/nginx created
[root@k8s-master01 work]# kubectl get pods -o wide|grep nginx
nginx-9778d   1/1   Running   0   63s   10.68.169.128   k8s-node02   <none>   <none>
nginx-b8kcr   1/1   Running   0   63s   10.68.107.192   k8s-node03   <none>   <none>
nginx-cm5qx   1/1   Running   0   63s   10.68.36.65     k8s-node01   <none>   <none>

4. Check Pod IP connectivity between nodes

[root@k8s-master01 work]# kubectl get pods -o wide -l app=nginx
NAME          READY   STATUS    RESTARTS   AGE     IP              NODE         NOMINATED NODE   READINESS GATES
nginx-9778d   1/1     Running   0          2m39s   10.68.169.128   k8s-node02   <none>           <none>
nginx-b8kcr   1/1     Running   0          2m39s   10.68.107.192   k8s-node03   <none>           <none>
nginx-cm5qx   1/1     Running   0          2m39s   10.68.36.65     k8s-node01   <none>           <none>

From every worker, ping the three Pod IPs above to check connectivity:
[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh ${node_ip} "ping -c 1 10.68.169.128"
    ssh ${node_ip} "ping -c 1 10.68.107.192"
    ssh ${node_ip} "ping -c 1 10.68.36.65"
  done
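The loop above hard-codes the three Pod IPs. A bash sketch (hypothetical function name) that extracts the IP column from `kubectl get pods -o wide` so the loop keeps working after Pods are recreated with new addresses:

```shell
# Hypothetical filter: read `kubectl get pods -o wide` output on stdin and
# print the IP column (6th field), skipping the header row.
pod_ips() {
  awk 'NR > 1 { print $6 }'
}
```

Usage: `for ip in $(kubectl get pods -o wide -l app=nginx | pod_ips); do ssh ${node_ip} "ping -c 1 $ip"; done` inside the per-node loop. Column positions can shift between kubectl versions (newer releases print restarts as `1 (5m ago)`), so `kubectl get pods -l app=nginx -o jsonpath='{.items[*].status.podIP}'` is the sturdier source.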

5. Check Service IP and port reachability

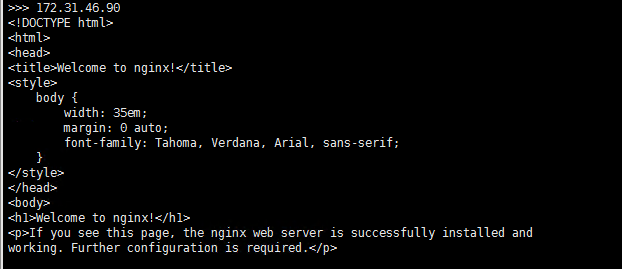

[root@k8s-master01 work]# kubectl get svc -l app=nginx
NAME    TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
nginx   NodePort   10.254.253.67   <none>        80:32304/TCP   6m44s

This shows:
- Service Cluster IP: 10.254.253.67
- Service port: 80
- NodePort: 32304

curl the Service IP from every node:
[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh ${node_ip} "curl -s 10.254.253.67"
  done

If everything works, every node prints the nginx welcome page.
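The NodePort (32304 here) is read off the `PORT(S)` column by eye. A bash sketch (hypothetical function name) that turns a single-port `PORT(S)` value into the NodePort number, printing nothing for a plain ClusterIP port such as `80/TCP`:

```shell
# Hypothetical helper: "80:32304/TCP" -> "32304"; "80/TCP" -> no output.
# Handles a single port only, not comma-separated lists.
node_port() {
  local spec=${1%%/*}           # drop the "/TCP" suffix
  case $spec in
    *:*) echo "${spec#*:}" ;;   # keep the part after the colon
  esac
}
```

When querying the cluster directly, `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number without any text parsing, which is handy for the NodePort check that follows.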

6. Check Service NodePort reachability

If everything works, every node again prints the nginx welcome page:
[root@k8s-master01 work]# for node_ip in ${WORK_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh ${node_ip} "curl -s ${node_ip}:32304"
  done

16. Deploy cluster add-ons

1. Deploy the CoreDNS add-on

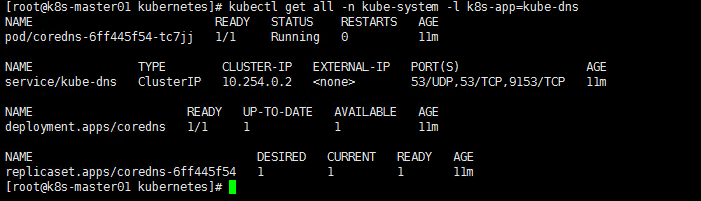

1. Download and configure CoreDNS
[root@k8s-master01 ~]# cd /opt/k8s/work
[root@k8s-master01 work]# git clone https://github.com/coredns/deployment.git
[root@k8s-master01 work]# mv deployment coredns-deployment

2. Create CoreDNS
[root@k8s-master01 work]# cd /opt/k8s/work/coredns-deployment/kubernetes
[root@k8s-master01 kubernetes]# ./deploy.sh -i ${CLUSTER_DNS_SVC_IP} -d ${CLUSTER_DNS_DOMAIN} | kubectl apply -f -
serviceaccount/coredns created
clusterrole.rbac.authorization.k8s.io/system:coredns created
clusterrolebinding.rbac.authorization.k8s.io/system:coredns created
configmap/coredns created
deployment.apps/coredns created
service/kube-dns created

3. Check that CoreDNS works
[root@k8s-master01 kubernetes]# kubectl get all -n kube-system -l k8s-app=kube-dns

Create a new Deployment:

[root@k8s-master01 kubernetes]# cd /opt/k8s/work/
[root@k8s-master01 work]# cat > test-nginx.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      run: test-nginx
  template:
    metadata:
      labels:
        run: test-nginx
    spec:
      containers:
      - name: test-nginx
        image: nginx:1.16.1
        ports:
        - containerPort: 80
EOF
[root@k8s-master01 work]# kubectl create -f test-nginx.yaml
deployment.apps/test-nginx created

# Expose the Deployment to create the test-nginx Service
[root@k8s-master01 work]# kubectl expose deploy test-nginx
service/test-nginx exposed
[root@k8s-master01 work]# kubectl get services test-nginx -o wide
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE   SELECTOR
test-nginx   ClusterIP   10.254.130.43   <none>        80/TCP    8s    run=test-nginx

Create another Pod and check whether its /etc/resolv.conf contains the --cluster-dns and --cluster-domain configured for kubelet, and whether the Service test-nginx resolves to the Cluster IP 10.254.130.43 shown above:
[root@k8s-master01 work]# cat > dnsutils-ds.yml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: dnsutils-ds
  labels:
    app: dnsutils-ds
spec:
  type: NodePort
  selector:
    app: dnsutils-ds
  ports:
  - name: http
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: dnsutils-ds
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      app: dnsutils-ds
  template:
    metadata:
      labels:
        app: dnsutils-ds
    spec:
      containers:
      - name: my-dnsutils
        image: tutum/dnsutils:latest
        command:
          - sleep
          - "3600"
        ports:
        - containerPort: 80
EOF
[root@k8s-master01 work]# kubectl create -f dnsutils-ds.yml
service/dnsutils-ds created
daemonset.apps/dnsutils-ds created
[root@k8s-master01 work]# kubectl get pods -lapp=dnsutils-ds -o wide
NAME                READY   STATUS    RESTARTS   AGE    IP              NODE         NOMINATED NODE   READINESS GATES
dnsutils-ds-hhs7v   1/1     Running   0          8m5s   10.68.169.132   k8s-node02   <none>           <none>
dnsutils-ds-q65n8   1/1     Running   0          8m5s   10.68.107.196   k8s-node03   <none>           <none>
dnsutils-ds-xzlkf   1/1     Running   0          8m5s   10.68.36.68     k8s-node01   <none>           <none>
[root@k8s-master01 work]# kubectl -it exec dnsutils-ds-xzlkf cat /etc/resolv.conf
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
nameserver 10.254.0.2
search default.svc.cluster.local svc.cluster.local cluster.local host.com
options ndots:5
[root@k8s-master01 work]# kubectl -it exec dnsutils-ds-xzlkf nslookup kubernetes
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
Server:    10.254.0.2
Address:   10.254.0.2#53

Name:      kubernetes.default.svc.cluster.local
Address:   10.254.0.1
[root@k8s-master01 work]# kubectl -it exec dnsutils-ds-xzlkf nslookup www.baidu.com
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
Server:    10.254.0.2
Address:   10.254.0.2#53

Non-authoritative answer:
www.baidu.com   canonical name = www.a.shifen.com.
Name:      www.a.shifen.com
Address:   14.215.177.39
Name:      www.a.shifen.com
Address:   14.215.177.38
[root@k8s-master01 work]# kubectl -it exec dnsutils-ds-xzlkf nslookup test-nginx
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
Server:    10.254.0.2
Address:   10.254.0.2#53

Name:      test-nginx.default.svc.cluster.local
Address:   10.254.130.43

The deprecation warning appears because this kubectl version expects the form kubectl exec POD -- COMMAND; using that form avoids it.
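Checking the Pod's `/etc/resolv.conf` by eye can be turned into a pass/fail test. A bash sketch (hypothetical function name) that succeeds only if the nameserver equals the expected cluster DNS IP and the search list contains `svc.<cluster domain>`:

```shell
# Hypothetical check: read a resolv.conf on stdin; exit 0 only if it lists the
# expected nameserver and "svc.<domain>" appears among the search domains.
check_resolv() {
  local want_ns=$1 want_domain=$2
  awk -v ns="$want_ns" -v dom="$want_domain" '
    $1 == "nameserver" && $2 == ns { ok_ns = 1 }
    $1 == "search" { for (i = 2; i <= NF; i++) if ($i == "svc." dom) ok_dom = 1 }
    END { exit !(ok_ns && ok_dom) }
  '
}
```

Usage: `kubectl exec dnsutils-ds-xzlkf -- cat /etc/resolv.conf | check_resolv "${CLUSTER_DNS_SVC_IP}" "${CLUSTER_DNS_DOMAIN}" && echo OK`. Leaving out `-it` when piping avoids a TTY, which can add carriage returns to the output.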

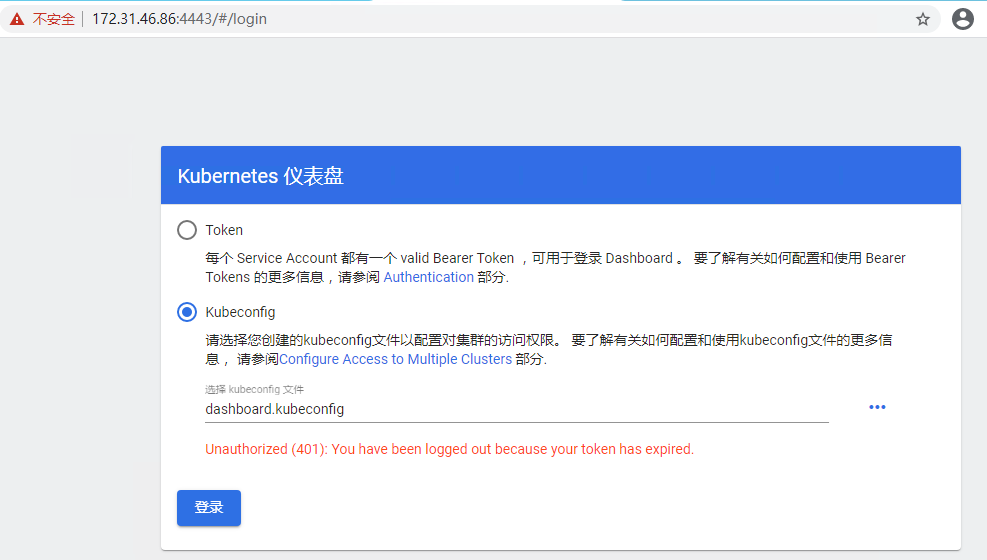

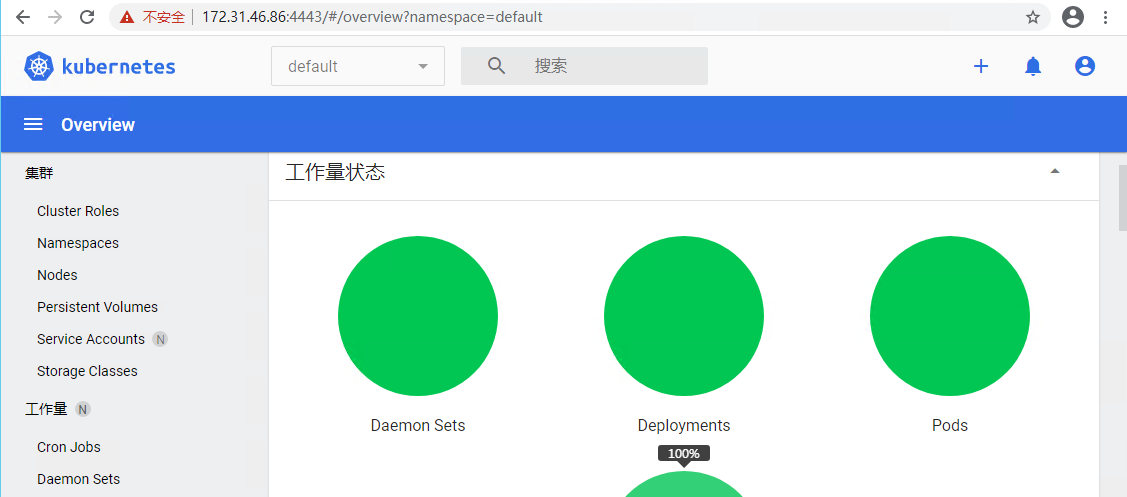

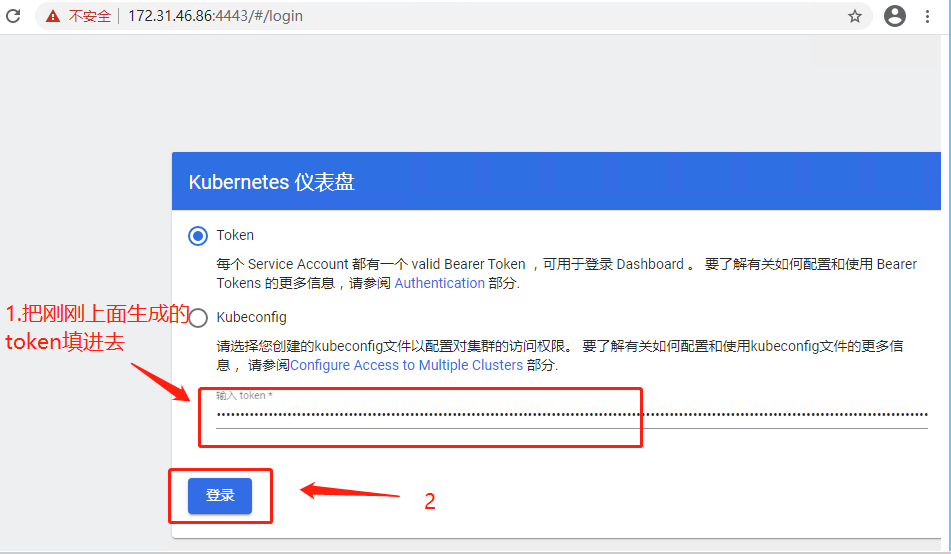

2. Deploy the Dashboard add-on

1. Download and apply the manifest
[root@k8s-master01 ~]# cd /opt/k8s/work/
[root@k8s-master01 work]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml
[root@k8s-master01 work]# mv recommended.yaml dashboard-recommended.yaml
[root@k8s-master01 work]# kubectl apply -f dashboard-recommended.yaml

2. Check the running status
[root@k8s-master01 work]# kubectl get pods -n kubernetes-dashboard
NAME                                         READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-6b4884c9d5-s6xp9   1/1     Running   0          13h
kubernetes-dashboard-7f99b75bf4-7xz7d        1/1     Running   0          13h

3. Access the Dashboard
Since version 1.7, the Dashboard only allows access over HTTPS. If you go through kube proxy, it must listen on localhost or 127.0.0.1. NodePort has no such restriction, but it is only recommended for development environments. For logins that do not meet these conditions, the browser does not redirect after a successful login and stays on the login page.

Access the Dashboard through port forwarding

Start port forwarding:

[root@k8s-master01 work]# kubectl port-forward -n kubernetes-dashboard svc/kubernetes-dashboard 4443:443 --address 0.0.0.0

Open this URL in a browser: https://172.31.46.86:4443/

Create a login token

[root@k8s-master01 ~]# kubectl create sa dashboard-admin -n kube-system
[root@k8s-master01 ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
[root@k8s-master01 ~]# ADMIN_SECRET=$(kubectl get secrets -n kube-system | grep dashboard-admin | awk '{print $1}')
[root@k8s-master01 ~]# DASHBOARD_LOGIN_TOKEN=$(kubectl describe secret -n kube-system ${ADMIN_SECRET} | grep -E '^token' | awk '{print $2}')
[root@k8s-master01 ~]# echo ${DASHBOARD_LOGIN_TOKEN}

Create a kubeconfig file that uses the token

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/cert/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=dashboard.kubeconfig

# Set the client authentication parameters, using the token created above
kubectl config set-credentials dashboard_user \
  --token=${DASHBOARD_LOGIN_TOKEN} \
  --kubeconfig=dashboard.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=dashboard_user \
  --kubeconfig=dashboard.kubeconfig

# Use the default context
kubectl config use-context default --kubeconfig=dashboard.kubeconfig

Log in to the Dashboard with the generated dashboard.kubeconfig.