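Kudu is a columnar storage system open-sourced by Cloudera that runs on the Hadoop platform. It shares the common technical traits of Hadoop ecosystem applications: it runs on commodity hardware, scales horizontally, and is highly available. Once integrated with Impala it supports standard SQL statements, which makes it easier to use than HBase.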

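Impala is a query system whose development is led by Cloudera. It provides SQL semantics and can query PB-scale data stored in Hadoop's HDFS and HBase. The existing Hive system also provides SQL semantics, but because Hive executes on the MapReduce engine, its queries are still batch jobs and struggle to meet interactive needs. By comparison, Impala's defining feature and main selling point is its speed: in testing, data import reached 300,000+ rows per second.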

Import flow: prepare the data -> upload to HDFS -> load into an Impala staging table -> load into the Kudu table

1. Prepare the data

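Generate the test data with genBiData.sh, then convert the | delimiters to commas:

    app@hadoop01:/software/develop/pujh>cat genBiData.sh
    #!/bin/bash
    date
    # echo '' writes one empty line first; it shows up later as a NULL row in Impala
    echo ''>data.txt
    chmod 777 data.txt
    for((i=1;i<=20593279;i++))
    do
        echo "$i|aa$i|aa$i$i|aa$i$i$i" >>data.txt;
    done;
    date
    app@hadoop01:/software/develop/pujh> sed 's/|/,/g' data.txt > temp.csv
    app@hadoop01:/software/develop/pujh>chmod 777 temp.csv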
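The loop above reopens data.txt for every row and then needs a second pass with sed. As a possible alternative, the sketch below writes comma-separated rows directly with awk and does not emit the empty first line. The function name gen_rows and the file name sample.csv are illustrative and not part of the original setup; the row count is kept small here so the result can be inspected.

```shell
#!/bin/bash
# Sketch only: gen_rows and sample.csv are hypothetical names.
# gen_rows N OUTFILE writes N rows shaped like "i,aa<i>,aa<i><i>,aa<i><i><i>",
# the same layout genBiData.sh produces after the sed step.
gen_rows() {
    local n="$1" out="$2"
    awk -v n="$n" 'BEGIN {
        for (i = 1; i <= n; i++)
            printf "%d,aa%d,aa%d%d,aa%d%d%d\n", i, i, i, i, i, i, i
    }' > "$out"
}

# Small demo run; pass 20593279 instead of 5 to match the row count used in this post.
gen_rows 5 sample.csv
cat sample.csv
```

Because the file is opened once and no empty line is written, the staging table would not pick up the extra NULL row that appears later in this walkthrough.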

2. Upload to HDFS

Switch to the hdfs user, create the target directory, and upload the files:

    su - root
    su - hdfs
    hadoop dfs -mkdir /input/data/pujh
    hadoop dfs -chmod -R 777 /input/data/pujh
    hadoop dfs -put /software/develop/pujh/* /input/data/pujh
    hadoop dfs -ls /input/data/pujh

Listing the directory confirms the upload:

    hdfs@hadoop01:>./hadoop dfs -ls /input/data/pujh
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    Found 5 items
    -rwxrwxrwx   3 hdfs supergroup          4 2019-04-24 10:14 /input/data/pujh/aa.txt
    -rwxrwxrwx   3 hdfs supergroup 1813554712 2019-04-24 10:14 /input/data/pujh/data.txt
    -rwxrwxrwx   3 hdfs supergroup 1281378694 2019-04-24 10:14 /input/data/pujh/data2kw.csv
    -rwxrwxrwx   3 hdfs supergroup 1281378694 2019-04-24 10:14 /input/data/pujh/data_2kw.txt
    -rwxrwxrwx   3 hdfs supergroup        146 2019-04-24 10:14 /input/data/pujh/genBiData.sh
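The deprecation warning in the listing suggests using the hdfs command instead of hadoop dfs. A minimal sketch of the same upload with hdfs dfs might look like the following. The DRY_RUN switch, the run helper, and the variable names are assumptions added for illustration; with DRY_RUN=1 (the default here) the script only prints the commands it would run. The chmod is placed after the upload so that it also covers the new file, which is a choice made for this sketch rather than the order used above.

```shell
#!/bin/bash
# Sketch only: DRY_RUN, run, SRC_DIR and DEST_DIR are illustrative names.
DRY_RUN="${DRY_RUN:-1}"
SRC_DIR="/software/develop/pujh"
DEST_DIR="/input/data/pujh"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

upload_to_hdfs() {
    run hdfs dfs -mkdir -p "$DEST_DIR"                  # create the target directory
    run hdfs dfs -put "$SRC_DIR/temp.csv" "$DEST_DIR/"  # upload the CSV
    run hdfs dfs -chmod -R 777 "$DEST_DIR"              # same open permissions as above
    run hdfs dfs -ls "$DEST_DIR"                        # confirm the upload
}

upload_to_hdfs
```

Run it with DRY_RUN=0 as the hdfs user to execute the commands for real.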

3. Load into the Impala staging table

Create the Impala staging table employee_temp:

create table employee_temp ( eid int, name String,salary String, destination String) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

    hdfs@hadoop02>./impala-shell
    Starting Impala Shell without Kerberos authentication
    Connected to hadoop02:21000
    Server version: impala version 2.8.0-cdh5.11.2 RELEASE (build f89269c4b96da14a841e94bdf6d4d48821b0d658)
    ***********************************************************************************
    Welcome to the Impala shell.
    (Impala Shell v2.8.0-cdh5.11.2 (f89269c) built on Fri Aug 18 14:04:44 PDT 2017)

    The HISTORY command lists all shell commands in chronological order.
    ***********************************************************************************
    [hadoop02:21000] > show databases;
    Query: show databases
    +------------------+----------------------------------------------+
    | name             | comment                                      |
    +------------------+----------------------------------------------+
    | _impala_builtins | System database for Impala builtin functions |
    | default          | Default Hive database                        |
    | td_test          |                                              |
    +------------------+----------------------------------------------+
    Fetched 3 row(s) in 0.01s
    [hadoop02:21000] > show tables;
    Query: show tables
    +----------------+
    | name           |
    +----------------+
    | employee       |
    | my_first_table |
    +----------------+
    Fetched 2 row(s) in 0.00s
    [hadoop02:21000] > create table employee_temp ( eid int, name String,salary String, destination String) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';
    Query: create table employee_temp ( eid int, name String,salary String, destination String) ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
    Fetched 0 row(s) in 0.32s
    [hadoop02:21000] > show tables;
    Query: show tables
    +----------------+
    | name           |
    +----------------+
    | employee       |
    | employee_temp  |
    | my_first_table |
    +----------------+
    Fetched 3 row(s) in 0.01s

Load the file from HDFS into the Impala staging table:

load data inpath '/input/data/pujh/temp.csv' into table employee_temp;

The first attempt failed with a permissions error on the parent HDFS directory; the retry succeeded:

    [hadoop02:21000] > load data inpath '/input/data/pujh/temp.csv' into table employee_temp;
    Query: load data inpath '/input/data/pujh/temp.csv' into table employee_temp
    ERROR: AnalysisException: Unable to LOAD DATA from hdfs://hadoop01:8020/input/data/pujh/temp.csv because Impala does not have WRITE permissions on its parent directory hdfs://hadoop01:8020/input/data/pujh

    [hadoop02:21000] > load data inpath '/input/data/pujh/temp.csv' into table employee_temp;
    Query: load data inpath '/input/data/pujh/temp.csv' into table employee_temp
    +----------------------------------------------------------+
    | summary                                                  |
    +----------------------------------------------------------+
    | Loaded 1 file(s). Total files in destination location: 1 |
    +----------------------------------------------------------+
    Fetched 1 row(s) in 0.44s
    [hadoop02:21000] > select * from employee_temp limit 2;
    Query: select * from employee_temp limit 2
    Query submitted at: 2019-04-24 10:30:10 (Coordinator: http://hadoop02:25000)
    Query progress can be monitored at: http://hadoop02:25000/query_plan?query_id=4246eaa38a3d8bbb:953ce4d300000000
    +------+------+--------+-------------+
    | eid  | name | salary | destination |
    +------+------+--------+-------------+
    | NULL | NULL |        |             |
    | 1    | aa1  | aa11   | aa111       |
    +------+------+--------+-------------+
    Fetched 2 row(s) in 0.19s
    [hadoop02:21000] > select * from employee_temp limit 10;
    Query: select * from employee_temp limit 10
    Query submitted at: 2019-04-24 10:30:16 (Coordinator: http://hadoop02:25000)
    Query progress can be monitored at: http://hadoop02:25000/query_plan?query_id=cb4c3cf5d647c97a:75d2985f00000000
    +------+------+--------+-------------+
    | eid  | name | salary | destination |
    +------+------+--------+-------------+
    | NULL | NULL |        |             |
    | 1    | aa1  | aa11   | aa111       |
    | 2    | aa2  | aa22   | aa222       |
    | 3    | aa3  | aa33   | aa333       |
    | 4    | aa4  | aa44   | aa444       |
    | 5    | aa5  | aa55   | aa555       |
    | 6    | aa6  | aa66   | aa666       |
    | 7    | aa7  | aa77   | aa777       |
    | 8    | aa8  | aa88   | aa888       |
    | 9    | aa9  | aa99   | aa999       |
    +------+------+--------+-------------+
    Fetched 10 row(s) in 0.02s
    [hadoop02:21000] > select count(*) from employee_temp;
    Query: select count(*) from employee_temp
    Query submitted at: 2019-04-24 10:30:34 (Coordinator: http://hadoop02:25000)
    Query progress can be monitored at: http://hadoop02:25000/query_plan?query_id=5a4c1107de118395:bfe96a1600000000
    +----------+
    | count(*) |
    +----------+
    | 20593280 |
    +----------+
    Fetched 1 row(s) in 0.65s

The NULL row at the top most likely comes from the empty first line that genBiData.sh writes to data.txt, which would also explain why the count is 20593280 rather than 20593279.
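An empty line in the CSV is parsed as a row with no values, so it can be worth checking the file before uploading it. The sketch below counts empty lines and writes a cleaned copy. The function names and the demo file names are illustrative and not part of the original setup.

```shell
#!/bin/bash
# Sketch only: count_blank_lines, strip_blank_lines and the demo files are hypothetical names.

# Print how many completely empty lines FILE contains.
count_blank_lines() {
    grep -c '^$' "$1"
}

# Copy IN to OUT, dropping completely empty lines.
strip_blank_lines() {
    sed '/^$/d' "$1" > "$2"
}

# Small demo: a file shaped like temp.csv, starting with one empty line.
printf '\n1,aa1,aa11,aa111\n2,aa2,aa22,aa222\n' > demo.csv
count_blank_lines demo.csv                 # prints 1
strip_blank_lines demo.csv demo_clean.csv
count_blank_lines demo_clean.csv           # prints 0
```

Applied to temp.csv before the upload, this would keep the extra row out of the staging table.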

4. Load from the Impala staging table employee_temp into the Kudu table employee_kudu

Create the Kudu table:

create table employee_kudu ( eid int, name String,salary String, destination String,PRIMARY KEY(eid)) PARTITION BY HASH PARTITIONS 16 STORED AS KUDU;

    [hadoop02:21000] > create table employee_kudu ( eid int, name String,salary String, destination String,PRIMARY KEY(eid)) PARTITION BY HASH PARTITIONS 16 STORED AS KUDU;
    Query: create table employee_kudu ( eid int, name String,salary String, destination String,PRIMARY KEY(eid)) PARTITION BY HASH PARTITIONS 16 STORED AS KUDU
    Fetched 0 row(s) in 0.94s
    [hadoop02:21000] > show tables;
    Query: show tables
    +----------------+
    | name           |
    +----------------+
    | employee       |
    | employee_kudu  |
    | employee_temp  |
    | my_first_table |

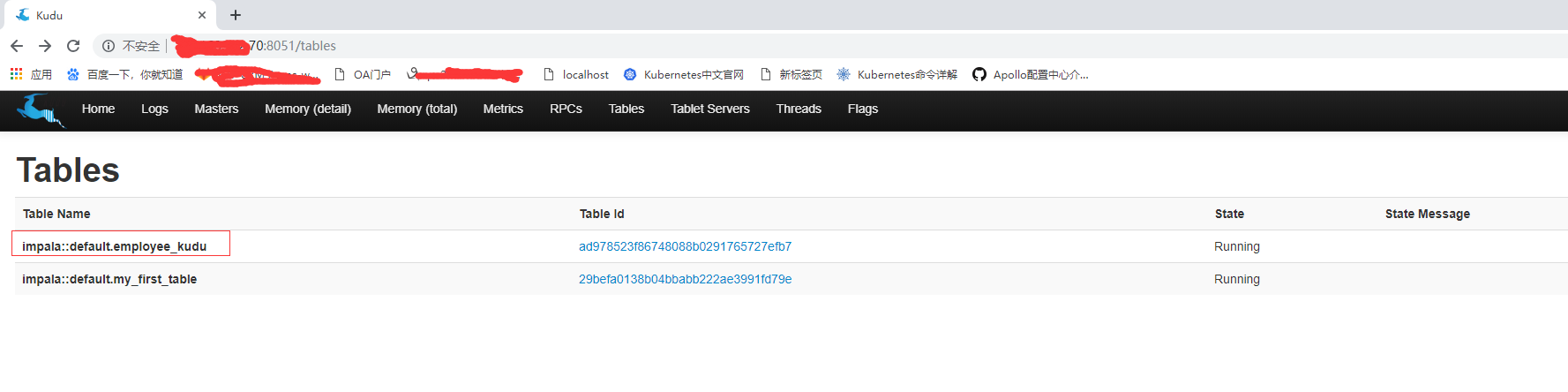

Check in the web UI that the table was created successfully.
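The same DDL can also be kept in a file and run non-interactively, which makes the step easier to repeat. The sketch below is one way to do that, under a few assumptions: impala-shell is on the PATH (the session above starts it as ./impala-shell), hadoop02:21000 is the impalad address shown in the transcript, and the file name create_employee_kudu.sql is made up for this example. IF NOT EXISTS is added so that rerunning the script does not fail; it is not in the original statement.

```shell
#!/bin/bash
# Sketch only: DDL_FILE and IMPALAD are illustrative names.
DDL_FILE="create_employee_kudu.sql"
IMPALAD="hadoop02:21000"   # impalad address as shown in the transcript

# Write the Kudu DDL to a file (same columns and partitioning as above).
cat > "$DDL_FILE" <<'SQL'
CREATE TABLE IF NOT EXISTS employee_kudu (
  eid INT,
  name STRING,
  salary STRING,
  destination STRING,
  PRIMARY KEY (eid)
)
PARTITION BY HASH PARTITIONS 16
STORED AS KUDU;
SQL

# Run the file only when impala-shell is available on this machine.
if command -v impala-shell >/dev/null 2>&1; then
    impala-shell -i "$IMPALAD" -f "$DDL_FILE"
else
    echo "impala-shell not found; wrote $DDL_FILE only"
fi
```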

Load from the Impala staging table employee_temp into the Kudu table employee_kudu:

    [hadoop02:21000] > insert into employee_kudu select * from employee_temp;
    Query: insert into employee_kudu select * from employee_temp
    Query submitted at: 2019-04-24 10:31:37 (Coordinator: http://hadoop02:25000)
    Query progress can be monitored at: http://hadoop02:25000/query_plan?query_id=2e47536cc5c82392:ef4d552600000000
    WARNINGS: Row with null value violates nullability constraint on table 'impala::default.employee_kudu'.

    Modified 20593279 row(s), 1 row error(s) in 78.75s
    [hadoop02:21000] > select count(*) from employee_kudu;
    Query: select count(*) from employee_kudu
    Query submitted at: 2019-04-24 10:33:30 (Coordinator: http://hadoop02:25000)
    Query progress can be monitored at: http://hadoop02:25000/query_plan?query_id=6d4bad44a980f229:fd7878d00000000
    +----------+
    | count(*) |
    +----------+
    | 20593279 |
    +----------+
    Fetched 1 row(s) in 0.18s

The single row error is the NULL row from the staging table: eid is the primary key, and Kudu primary key columns cannot be NULL, so that row is rejected and the remaining 20593279 rows are loaded.
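The throughput of this particular run follows from the two numbers in the insert summary: 20593279 rows in 78.75 seconds. The small sketch below does the division; the function name rows_per_second is illustrative.

```shell
#!/bin/bash
# Sketch only: rows_per_second is a hypothetical helper name.
# rows_per_second ROWS SECONDS prints the average rate, rounded to a whole number.
rows_per_second() {
    awk -v rows="$1" -v secs="$2" 'BEGIN { printf "%.0f\n", rows / secs }'
}

# Figures taken from the insert summary above.
rows_per_second 20593279 78.75   # prints 261502
```

So this load averaged roughly 261,500 rows per second from the staging table into Kudu.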