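PyTorch offers several ways to build a neural network. Taking handwritten-digit recognition as the example (1×28×28 grayscale input images, 10 classes), this post walks through four of them.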

Method 1: torch.nn.Sequential()

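torch.nn.Sequential is a sequential container in torch.nn. The modules it holds are run in the order in which they were defined, and each module's output is passed on automatically as the input to the next.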
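To make "passed on in order" concrete, here is a minimal sketch (assuming a recent PyTorch; the batch size and random input are arbitrary choices) checking that calling a Sequential gives the same result as feeding each module's output into the next one by hand:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Same Conv2d -> ReLU -> MaxPool2d pattern as conv1 in the examples
block = nn.Sequential(
    nn.Conv2d(1, 16, kernel_size=5, stride=1, padding=2),
    nn.ReLU(),
    nn.MaxPool2d(2),
)

x = torch.randn(4, 1, 28, 28)  # dummy batch of four 1x28x28 images

# Calling the container runs every module in order...
out_seq = block(x)

# ...which matches chaining the modules manually
out_manual = x
for layer in block:
    out_manual = layer(out_manual)

print(out_seq.shape)                        # torch.Size([4, 16, 14, 14])
print(torch.allclose(out_seq, out_manual))  # True
```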

```python
import torch.nn as nn

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Sequential(  # input shape (1, 28, 28)
            nn.Conv2d(1, 16, 5, 1, 2),  # output shape (16, 28, 28)
            nn.ReLU(),
            nn.MaxPool2d(2),  # output shape (16, 14, 14)
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(16, 32, 5, 1, 2),  # output shape (32, 14, 14)
            nn.ReLU(),
            nn.MaxPool2d(2),  # output shape (32, 7, 7)
        )
        self.linear = nn.Linear(32*7*7, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)
        output = self.linear(x)
        return output

net = Net()
print(net)
```

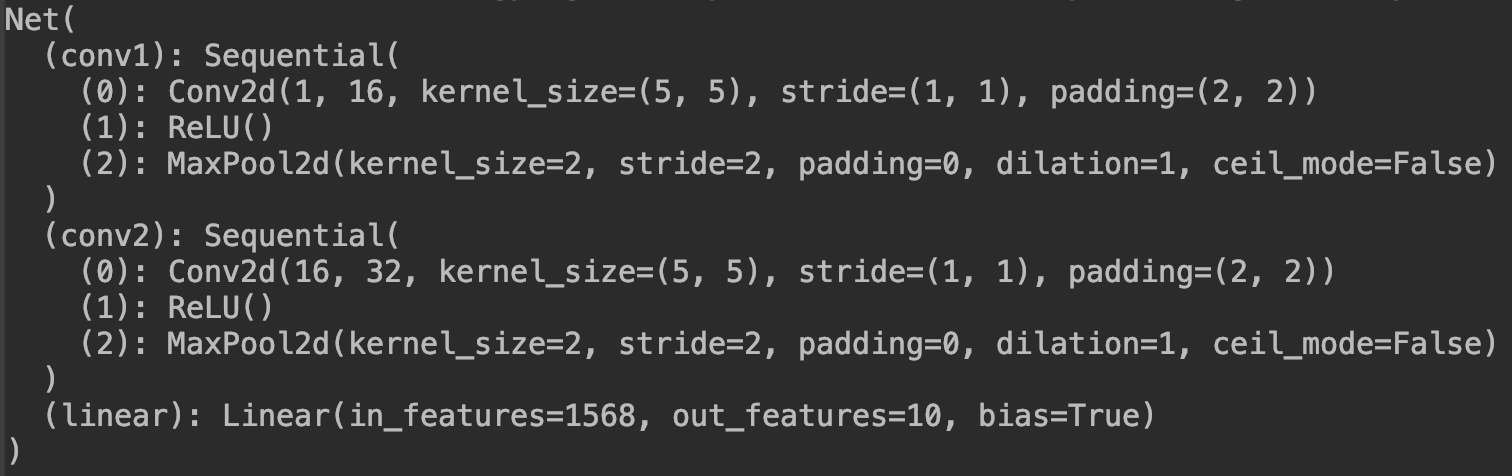

运行结果:

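Note: this approach has one drawback. The layers have no names of their own; inside each Sequential they are simply numbered 0, 1, 2, ..., as the output above shows.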
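The default numbering can be inspected directly. The sketch below uses a standalone copy of the conv1 container (not the whole Net) to list the registered names and reach layers by position:

```python
import torch.nn as nn

# Standalone copy of conv1 from Method 1: no names supplied
conv1 = nn.Sequential(
    nn.Conv2d(1, 16, 5, 1, 2),
    nn.ReLU(),
    nn.MaxPool2d(2),
)

# Sequential registers the layers under the string keys "0", "1", "2"
names = [name for name, _ in conv1.named_children()]
print(names)  # ['0', '1', '2']

# Layers are therefore reached by integer position (negative indices work too)
print(conv1[1])                    # ReLU()
print(type(conv1[-1]).__name__)    # MaxPool2d
```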

Method 2: torch.nn.Sequential() with collections.OrderedDict()

```python
import torch.nn as nn
from collections import OrderedDict  # OrderedDict is a dict subclass that remembers insertion order

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Sequential(OrderedDict([
            ('conv1', nn.Conv2d(1, 16, 5, 1, 2)),
            ('ReLU1', nn.ReLU()),
            ('pool1', nn.MaxPool2d(2)),
        ]))
        self.conv2 = nn.Sequential(OrderedDict([
            ('conv2', nn.Conv2d(16, 32, 5, 1, 2)),
            ('ReLU2', nn.ReLU()),
            ('pool2', nn.MaxPool2d(2)),
        ]))
        self.linear = nn.Linear(32*7*7, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)
        output = self.linear(x)
        return output

net = Net()
print(net)
```

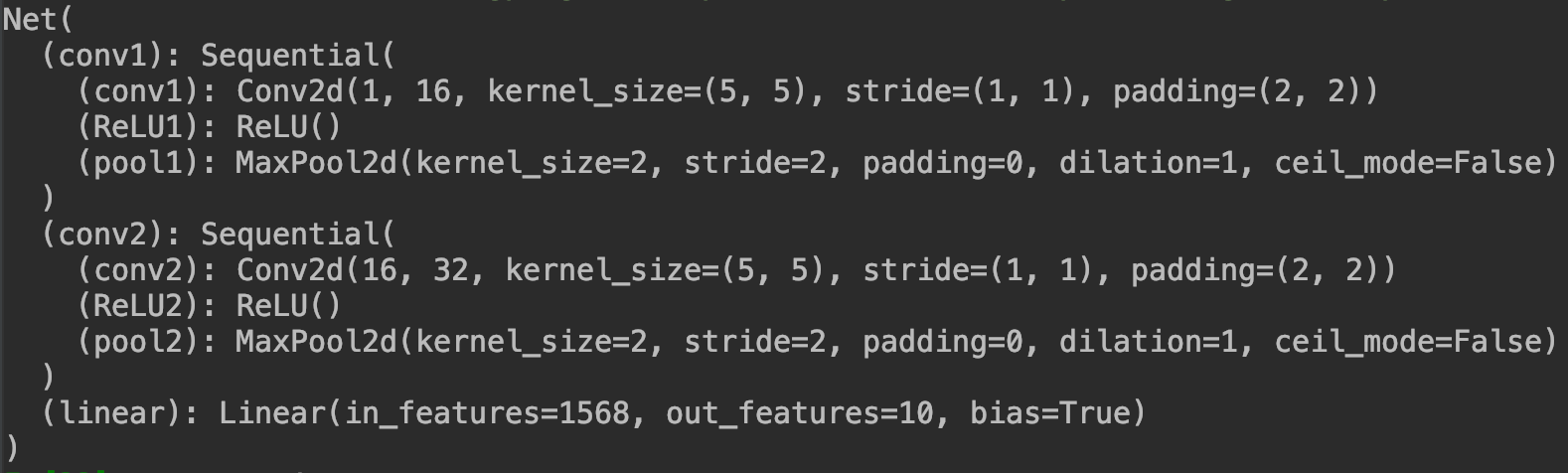

运行结果:

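As the output shows, every layer now has its own name. Note, however, that a name cannot be used as a subscript: net.conv1[1] works, but net.conv1['ReLU1'] raises a TypeError, because indexing a torch.nn.Sequential accepts only integers (and slices). The name is still registered as a submodule, so attribute access such as net.conv1.ReLU1 does return the layer.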
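A quick check of this behaviour, assuming a reasonably recent PyTorch release: a string subscript fails, while the registered name works as an attribute or through named_children(). The container below is a standalone copy of Method 2's conv1.

```python
from collections import OrderedDict

import torch.nn as nn

# Standalone copy of the named conv1 container from Method 2
conv1 = nn.Sequential(OrderedDict([
    ('conv1', nn.Conv2d(1, 16, 5, 1, 2)),
    ('ReLU1', nn.ReLU()),
    ('pool1', nn.MaxPool2d(2)),
]))

print(conv1[1])  # ReLU()  (integer indexing works)

# A string subscript is rejected: Sequential indexing expects an int or a slice
string_index_ok = True
try:
    conv1['ReLU1']
except TypeError as err:
    string_index_ok = False
    print('TypeError:', err)

# The name is registered as a submodule, so attribute lookup finds the same layer
print(conv1.ReLU1 is conv1[1])                            # True
print(getattr(conv1, 'ReLU1') is conv1[1])                # True
print(dict(conv1.named_children())['ReLU1'] is conv1[1])  # True
```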

Method 3: torch.nn.Sequential() with add_module()

```python
import torch.nn as nn

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Sequential()
        self.conv1.add_module('conv1', nn.Conv2d(1, 16, 5, 1, 2))
        self.conv1.add_module('ReLU1', nn.ReLU())
        self.conv1.add_module('pool1', nn.MaxPool2d(2))
        self.conv2 = nn.Sequential()
        self.conv2.add_module('conv2', nn.Conv2d(16, 32, 5, 1, 2))
        self.conv2.add_module('ReLU2', nn.ReLU())
        self.conv2.add_module('pool2', nn.MaxPool2d(2))
        self.linear = nn.Linear(32*7*7, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)
        output = self.linear(x)
        return output

net = Net()
print(net)
```

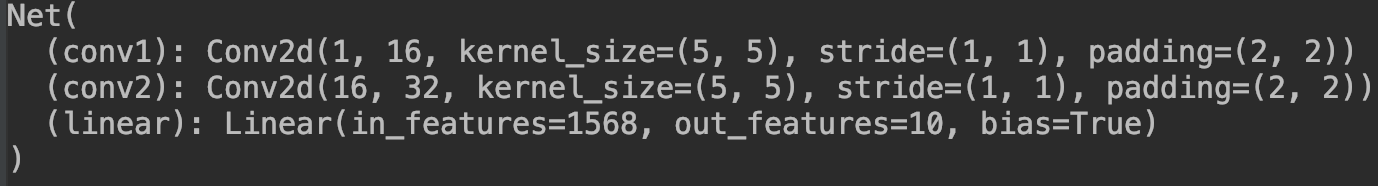

运行结果:
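Because add_module() takes the layer name as a string, the repeated blocks of Method 3 can also be generated in a loop. The sketch below is one way to do that; conv_block and features are hypothetical names introduced here for illustration, and the layer naming simply mirrors the example above.

```python
import torch
import torch.nn as nn


def conv_block(idx: int, in_ch: int, out_ch: int) -> nn.Sequential:
    """Hypothetical helper: Conv2d -> ReLU -> MaxPool2d built with add_module(),
    using the names conv{idx}, ReLU{idx}, pool{idx} as in Method 3."""
    block = nn.Sequential()
    block.add_module(f'conv{idx}', nn.Conv2d(in_ch, out_ch, 5, 1, 2))
    block.add_module(f'ReLU{idx}', nn.ReLU())
    block.add_module(f'pool{idx}', nn.MaxPool2d(2))
    return block


# Channel plan taken from the example: 1 -> 16 -> 32
features = nn.Sequential()
for i, (c_in, c_out) in enumerate([(1, 16), (16, 32)], start=1):
    features.add_module(f'conv{i}', conv_block(i, c_in, c_out))

print([name for name, _ in features.named_children()])  # ['conv1', 'conv2']
print(list(dict(features.conv1.named_children())))      # ['conv1', 'ReLU1', 'pool1']

x = torch.randn(2, 1, 28, 28)
print(features(x).shape)  # torch.Size([2, 32, 7, 7])
```

Nesting named Sequential containers this way keeps readable names in print(net) while avoiding copy-pasted add_module() calls.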

Method 4: layers as attributes, with torch.nn.functional called in forward()

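```python
import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Only the layers with learnable parameters are declared here
        self.conv1 = nn.Conv2d(1, 16, 5, 1, 2)
        self.conv2 = nn.Conv2d(16, 32, 5, 1, 2)
        self.linear = nn.Linear(32*7*7, 10)

    def forward(self, x):
        # ReLU and max pooling are applied as stateless functions
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)  # (16, 14, 14)
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)  # (32, 7, 7)
        x = x.view(x.size(0), -1)  # flatten to (batch, 32*7*7) before the linear layer
        output = self.linear(x)
        return output

net = Net()
print(net)
```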

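Output: in the printed structure of this version only conv1, conv2 and linear are listed. F.relu and F.max_pool2d are plain function calls inside forward(), so they are not registered as submodules and do not appear in print(net).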
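The 32*7*7 input size of the linear layer follows from the convolution output-size formula: with kernel 5, stride 1 and padding 2, each convolution keeps the spatial size, and each 2×2 max pool halves it. The sketch below checks this arithmetic and traces the shapes of the Method 4 forward pass on a random batch; it uses standalone layers rather than the Net class, and conv_out is a hypothetical helper name.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def conv_out(size: int, kernel: int = 5, stride: int = 1, padding: int = 2) -> int:
    # Conv2d output size with dilation 1: floor((W - K + 2P) / S) + 1
    return (size - kernel + 2 * padding) // stride + 1


# 28 -> conv (28) -> pool (14) -> conv (14) -> pool (7)
side = conv_out(28) // 2
side = conv_out(side) // 2
print(side, 32 * side * side)  # 7 1568

conv1 = nn.Conv2d(1, 16, 5, 1, 2)
conv2 = nn.Conv2d(16, 32, 5, 1, 2)
linear = nn.Linear(32 * side * side, 10)

x = torch.randn(3, 1, 28, 28)
x = F.max_pool2d(F.relu(conv1(x)), 2)
print(tuple(x.shape))       # (3, 16, 14, 14)
x = F.max_pool2d(F.relu(conv2(x)), 2)
print(tuple(x.shape))       # (3, 32, 7, 7)
x = x.view(x.size(0), -1)   # flatten before the fully connected layer
print(tuple(x.shape))       # (3, 1568)
logits = linear(x)
print(tuple(logits.shape))  # (3, 10)
```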
