    /*
     * Setup the initial page tables. We only setup the barest amount which is
     * required to get the kernel running. The following sections are required:
     *   - identity mapping to enable the MMU (low address, TTBR0)
     *   - first few MB of the kernel linear mapping to jump to once the MMU has
     *     been enabled
     */
    __create_page_tables:
        mov     x28, lr

        /*
         * Invalidate the idmap and swapper page tables to avoid potential
         * dirty cache lines being evicted.
         */
        adrp    x0, idmap_pg_dir
        adrp    x1, swapper_pg_end
        sub     x1, x1, x0
        bl      __inval_dcache_area

    . = ALIGN(PAGE_SIZE);
    idmap_pg_dir = .;
    . += IDMAP_DIR_SIZE;

    #ifdef CONFIG_UNMAP_KERNEL_AT_EL0
    tramp_pg_dir = .;
    . += PAGE_SIZE;
    #endif

    swapper_pg_dir = .;
    . += SWAPPER_DIR_SIZE;
    swapper_pg_end = .;

IDMAP_DIR_SIZE is defined as follows:

    #include <stdio.h>

    #define CONFIG_PGTABLE_LEVELS 4
    #define CONFIG_ARM64_PAGE_SHIFT 12

    #define PAGE_SHIFT CONFIG_ARM64_PAGE_SHIFT

    #define ARM64_HW_PGTABLE_LEVEL_SHIFT(n) ((PAGE_SHIFT - 3) * (4 - (n)) + 3)

    #define PGDIR_SHIFT ARM64_HW_PGTABLE_LEVEL_SHIFT(4 - CONFIG_PGTABLE_LEVELS)

    /* Number of table entries that the range [vstart, vend] touches at this shift. */
    #define EARLY_ENTRIES(vstart, vend, shift) (((vend) >> (shift)) \
                                        - ((vstart) >> (shift)) + 1)

    #define EARLY_PGDS(vstart, vend) (EARLY_ENTRIES(vstart, vend, PGDIR_SHIFT))

    #define PUD_SHIFT ARM64_HW_PGTABLE_LEVEL_SHIFT(1)

    #define SWAPPER_TABLE_SHIFT PUD_SHIFT

    #define EARLY_PMDS(vstart, vend) (EARLY_ENTRIES(vstart, vend, SWAPPER_TABLE_SHIFT))

    int main(int argc, const char *argv[])
    {
        unsigned long long a;

        unsigned long long start = 0xffff000008080000;  /* _text in System.map */
        unsigned long long end = 0xffff000009536000;    /* _end in System.map */

        /* One page for the level 0 table, plus the level 1 and level 2 tables. */
        a = 1 + EARLY_PGDS(start, end) + EARLY_PMDS(start, end);

        printf("a: %llu\n", a);
        return 0;
    }

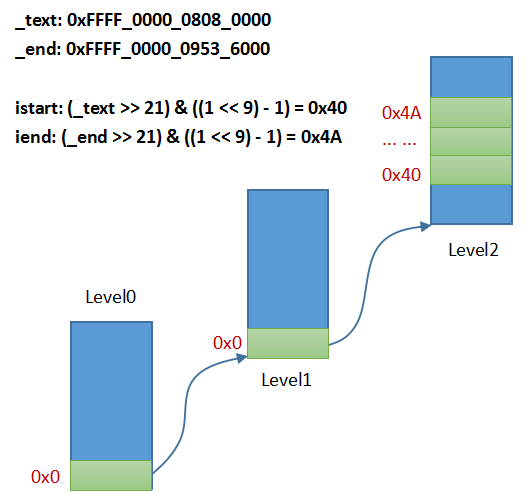

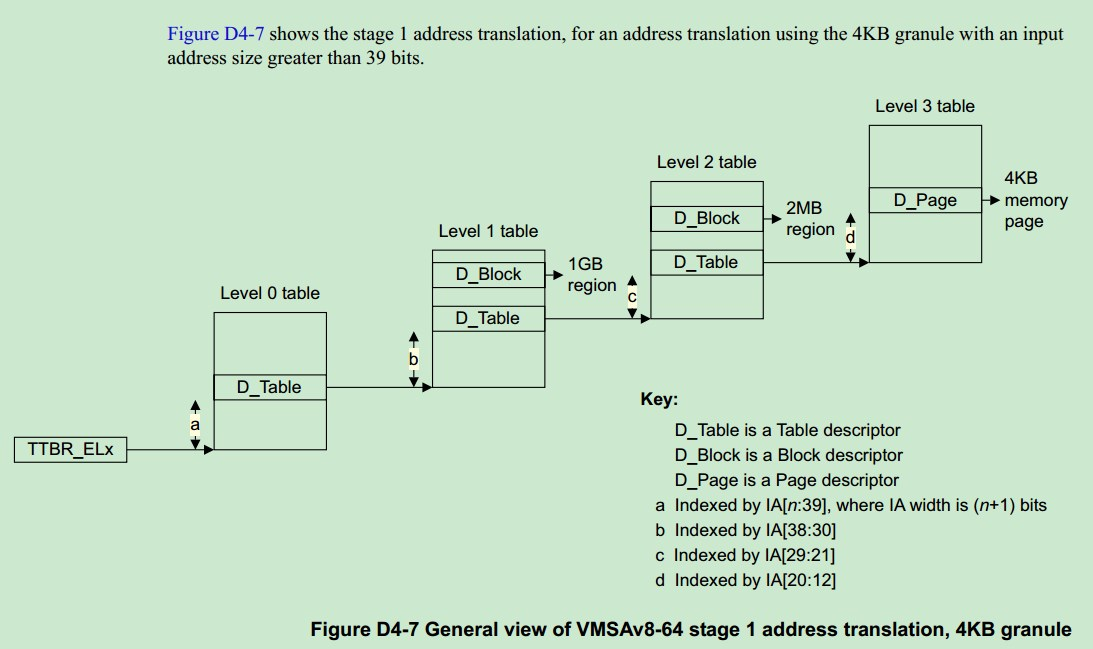

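The program prints 3, so SWAPPER_DIR_SIZE is also 12KB (three 4KB pages), holding the PGD, PUD, and PMD tables respectively. The calculation is easy to follow. The leading 1 is the single page needed for the level 0 table. EARLY_PGDS(start, end) counts how many level 0 entries are needed to map (start, end); each level 0 entry will later point to the physical base address of a level 1 table, and each level 1 table takes one page, so this term is the number of pages needed for level 1 tables. EARLY_PMDS(start, end) counts the total number of level 1 entries needed to map (start, end); each level 1 entry will point to the physical base address of a level 2 table, and each level 2 table also takes one page, so this term is the number of pages needed for level 2 tables.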
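As a rough check of these numbers, the intermediate values can be worked out by hand. This is only a sketch using the values from the program above (4KB pages, four translation levels, and the same start and end addresses):

$$
\begin{aligned}
\text{PGDIR\_SHIFT} &= (12 - 3)\times(4 - 0) + 3 = 39 \\
\text{PUD\_SHIFT} &= (12 - 3)\times(4 - 1) + 3 = 30 \\
\text{EARLY\_PGDS} &= (\text{end} \gg 39) - (\text{start} \gg 39) + 1 = 0 + 1 = 1 \\
\text{EARLY\_PMDS} &= (\text{end} \gg 30) - (\text{start} \gg 30) + 1 = 0 + 1 = 1 \\
\text{pages} &= 1 + 1 + 1 = 3, \qquad 3 \times 4\,\text{KB} = 12\,\text{KB}
\end{aligned}
$$

Both shifted differences are zero because start and end differ only in bits below bit 30.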

        /*
         * Clear the idmap and swapper page tables.
         */
        adrp    x0, idmap_pg_dir
        adrp    x1, swapper_pg_end
        sub     x1, x1, x0
    1:  stp     xzr, xzr, [x0], #16
        stp     xzr, xzr, [x0], #16
        stp     xzr, xzr, [x0], #16
        stp     xzr, xzr, [x0], #16
        subs    x1, x1, #64
        b.ne    1b

This clears the memory that holds the translation tables.
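The clearing loop can be modeled in C roughly as follows. This is only a sketch: the function name `clear_tables` is made up for illustration, and like the assembly it assumes the size is a non-zero multiple of 64 bytes, which holds here because the tables occupy whole pages.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Hypothetical C model of the clearing loop. Each iteration writes eight
 * 64-bit zeros (64 bytes), matching the four "stp xzr, xzr, [x0], #16".
 * Like the assembly, it assumes size is a non-zero multiple of 64.
 */
void clear_tables(uint64_t *start, size_t size)
{
    do {
        for (int i = 0; i < 8; i++)
            *start++ = 0;       /* four stp = eight 8-byte stores */
        size -= 64;             /* subs x1, x1, #64 */
    } while (size != 0);        /* b.ne 1b */
}
```

Unrolling four `stp` instructions per iteration means the loop counter is only checked once per 64 bytes.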

     1      mov     x7, SWAPPER_MM_MMUFLAGS     // used by the level 2 block entries
     2
     3      adrp    x0, idmap_pg_dir
     4      adrp    x3, __idmap_text_start      // __pa(__idmap_text_start)
     5      adrp    x5, __idmap_text_end
     6      clz     x5, x5
     7      cmp     x5, TCR_T0SZ(VA_BITS)       // default T0SZ small enough?
     8      b.ge    1f                          // .. then skip VA range extension
     9
    10      adr_l   x6, idmap_t0sz
    11      str     x5, [x6]
    12      dmb     sy
    13      dc      ivac, x6                    // Invalidate potentially stale cache line
    14
    15      mov     x4, #1 << (PHYS_MASK_SHIFT - PGDIR_SHIFT)
    16      str_l   x4, idmap_ptrs_per_pgd, x5
    17
    18  1:
    19      ldr_l   x4, idmap_ptrs_per_pgd
    20      mov     x5, x3                      // __pa(__idmap_text_start)
    21      adr_l   x6, __idmap_text_end        // __pa(__idmap_text_end)
    22
    23      map_memory x0, x1, x3, x6, x7, x3, x4, x10, x11, x12, x13, x14

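The map_memory macro on line 23 maps the virtual address range [x3, x6] onto the physical range that runs from the current physical address of __idmap_text_start to the current physical address of __idmap_text_end. Because this is the identity mapping, the virtual and physical addresses are equal. The tables are stored starting at the current physical address of idmap_pg_dir. System.map shows that this range contains the symbols below; mapping them ensures that the code keeps running correctly once the MMU has been turned on: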

ffff000008bdf000 T __idmap_text_start

ffff000008bdf000 T kimage_vaddr

ffff000008bdf008 T el2_setup

ffff000008bdf054 t set_hcr

ffff000008bdf128 t install_el2_stub

ffff000008bdf17c t set_cpu_boot_mode_flag

ffff000008bdf1a0 T secondary_holding_pen

ffff000008bdf1c4 t pen

ffff000008bdf1d8 T secondary_entry

ffff000008bdf1e4 t secondary_startup

ffff000008bdf1f4 t __secondary_switched

ffff000008bdf228 T __enable_mmu

ffff000008bdf284 t __no_granule_support

ffff000008bdf2a8 t __primary_switch

ffff000008bdf2c8 T cpu_resume

ffff000008bdf2e8 T __cpu_soft_restart

ffff000008bdf328 T cpu_do_resume

ffff000008bdf39c T idmap_cpu_replace_ttbr1

ffff000008bdf3d4 t __idmap_kpti_flag

ffff000008bdf3d8 T idmap_kpti_install_ng_mappings

ffff000008bdf414 t do_pgd

ffff000008bdf42c t next_pgd

ffff000008bdf438 t skip_pgd

ffff000008bdf46c t walk_puds

ffff000008bdf474 t do_pud

ffff000008bdf48c t next_pud

ffff000008bdf498 t skip_pud

ffff000008bdf4a8 t walk_pmds

ffff000008bdf4b0 t do_pmd

ffff000008bdf4c8 t next_pmd

ffff000008bdf4d4 t skip_pmd

ffff000008bdf4e4 t walk_ptes

ffff000008bdf4ec t do_pte

ffff000008bdf50c t skip_pte

ffff000008bdf51c t __idmap_kpti_secondary

ffff000008bdf564 T __cpu_setup

ffff000008bdf5f8 T __idmap_text_end
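The T0SZ check in the idmap setup code (the `clz` / `cmp` / `b.ge` sequence) can be sketched in C as below. The helper names are hypothetical, the sketch assumes `TCR_T0SZ(x)` evaluates to `64 - x`, and `__builtin_clzll` is a GCC/Clang builtin rather than standard C.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed definition: T0SZ for a given VA width is 64 - va_bits. */
static inline unsigned int t0sz_for(unsigned int va_bits)
{
    return 64 - va_bits;
}

/*
 * Hypothetical helper: returns true when the physical end address of the
 * idmap text has fewer leading zero bits than T0SZ, meaning it does not
 * fit in the default VA range and the range must be extended.
 * __builtin_clzll is undefined for 0, so pa_end must be non-zero.
 */
bool idmap_needs_extension(uint64_t pa_end, unsigned int va_bits)
{
    unsigned int lz = (unsigned int)__builtin_clzll(pa_end);  /* clz x5, x5 */

    return lz < t0sz_for(va_bits);  /* "b.ge 1f" skips the extension otherwise */
}
```

With 48-bit virtual addresses, a made-up physical address such as 0x40bdf5f8 has 33 leading zeros, which is at least 16, so the extension path would be skipped.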

        adrp    x0, swapper_pg_dir
        mov_q   x5, KIMAGE_VADDR + TEXT_OFFSET  // compile time __va(_text)
        add     x5, x5, x23                     // add KASLR displacement
        mov     x4, PTRS_PER_PGD
        adrp    x6, _end                        // runtime __pa(_end)
        adrp    x3, _text                       // runtime __pa(_text)
        sub     x6, x6, x3                      // _end - _text
        add     x6, x6, x5                      // runtime __va(_end)

        map_memory x0, x1, x5, x6, x7, x3, x4, x10, x11, x12, x13, x14

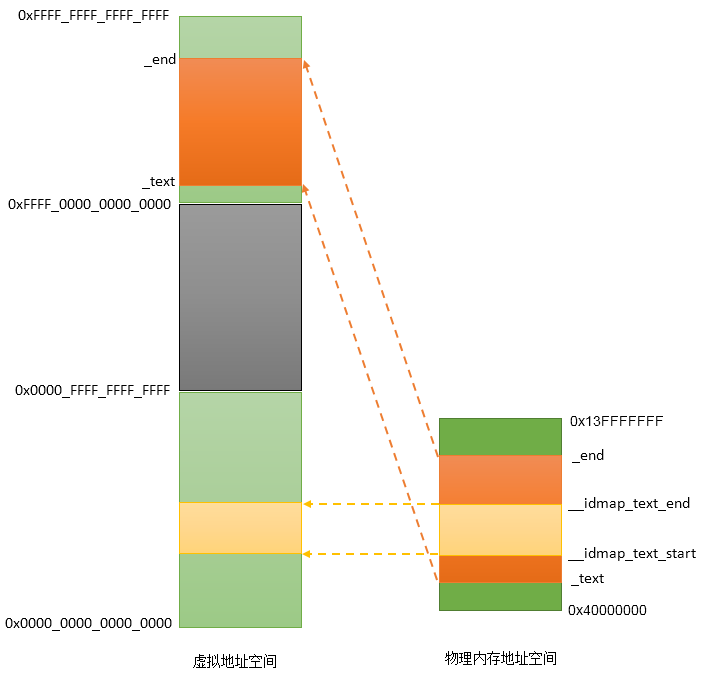

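The code above maps the virtual address range [_text, _end] occupied by the kernel image onto the physical memory range where the kernel image currently resides, and stores the tables at the current physical address of swapper_pg_dir.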

ffff000008080000 t _head

ffff000008080000 T _text

ffff000008080040 t pe_header

ffff000008080044 t coff_header

ffff000008080058 t optional_header

ffff000008080070 t extra_header_fields

ffff0000080800f8 t section_table

ffff000008081000 T __exception_text_start

ffff000008081000 T _stext

... ...

ffff000009536000 B _end

ffff000009536000 B swapper_pg_end
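The register arithmetic that produces the runtime virtual address of _end can be written out in C as a small sketch. The function name is hypothetical, and the physical addresses mentioned afterwards are made-up example values rather than values from this system.

```c
#include <stdint.h>

/*
 * Mirrors the register arithmetic before map_memory:
 *   x5 = compile-time __va(_text) + KASLR displacement
 *   x6 = (__pa(_end) - __pa(_text)) + x5
 */
uint64_t runtime_va_end(uint64_t va_text, uint64_t kaslr_offset,
                        uint64_t pa_text, uint64_t pa_end)
{
    uint64_t va_start = va_text + kaslr_offset;  /* add x5, x5, x23 */
    uint64_t image_size = pa_end - pa_text;      /* sub x6, x6, x3  */

    return va_start + image_size;                /* add x6, x6, x5  */
}
```

For example, with _text at virtual address 0xffff000008080000, no KASLR displacement, and an image loaded at a hypothetical physical address 0x40080000 ending at 0x41536000, the function returns 0xffff000009536000, which matches _end in the symbol list.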

At this point we have the following mapping:

        adrp    x0, swapper_pg_dir
        mov_q   x5, KIMAGE_VADDR + TEXT_OFFSET  // compile time __va(_text)
        add     x5, x5, x23                     // add KASLR displacement; x23 is 0 if kernel image address randomization is not supported
        mov     x4, PTRS_PER_PGD                // number of entries in each level 0 table: 1 << 9
        adrp    x6, _end                        // runtime __pa(_end)
        adrp    x3, _text                       // runtime __pa(_text)
        sub     x6, x6, x3                      // _end - _text
        add     x6, x6, x5                      // runtime __va(_end)

        map_memory x0, x1, x5, x6, x7, x3, x4, x10, x11, x12, x13, x14

The map_memory call above builds the level 0 to level 2 data structures shown in the figure above. Level 3 is not used.

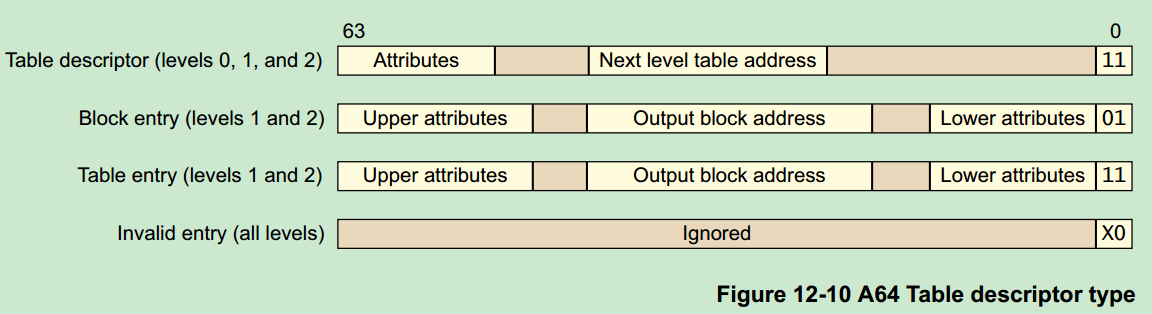
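To make the level 0 to level 2 lookup concrete, the sketch below splits a virtual address into the three table indices and the offset inside the final 2MB block. It assumes 4KB pages with 512 entries per table and the shift values 39, 30, and 21; the struct and function names are hypothetical.

```c
#include <stdint.h>

#define LEVEL_BITS  9                           /* 512 entries per table */
#define LEVEL_MASK  ((1ULL << LEVEL_BITS) - 1)
#define L0_SHIFT    39                          /* PGDIR_SHIFT */
#define L1_SHIFT    30                          /* SWAPPER_TABLE_SHIFT */
#define L2_SHIFT    21                          /* SWAPPER_BLOCK_SHIFT */

struct va_indices {
    unsigned int l0, l1, l2;    /* index into the table at each level */
    uint64_t block_offset;      /* byte offset inside the 2MB block */
};

/* Hypothetical helper: decompose a virtual address for a 3-level block mapping. */
struct va_indices split_va(uint64_t va)
{
    struct va_indices idx;

    idx.l0 = (unsigned int)((va >> L0_SHIFT) & LEVEL_MASK);
    idx.l1 = (unsigned int)((va >> L1_SHIFT) & LEVEL_MASK);
    idx.l2 = (unsigned int)((va >> L2_SHIFT) & LEVEL_MASK);
    idx.block_offset = va & ((1ULL << L2_SHIFT) - 1);
    return idx;
}
```

For _text at 0xffff000008080000 this gives level 0 index 0, level 1 index 0, level 2 index 64, and a block offset of 0x80000.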

    /*
     * Map memory for specified virtual address range. Each level of page table needed supports
     * multiple entries. If a level requires n entries the next page table level is assumed to be
     * formed from n pages.
     *
     *  tbl:    location of page table
     *  rtbl:   address to be used for first level page table entry (typically tbl + PAGE_SIZE)
     *  vstart: start address to map
     *  vend:   end address to map - we map [vstart, vend]
     *  flags:  flags to use to map last level entries
     *  phys:   physical address corresponding to vstart - physical memory is contiguous
     *  pgds:   the number of pgd entries
     *
     * Temporaries: istart, iend, tmp, count, sv - these need to be different registers
     * Preserves:   vstart, vend, flags
     * Corrupts:    tbl, rtbl, istart, iend, tmp, count, sv
     */
        .macro map_memory, tbl, rtbl, vstart, vend, flags, phys, pgds, istart, iend, tmp, count, sv
        add     \rtbl, \tbl, #PAGE_SIZE
        mov     \sv, \rtbl
        mov     \count, #0
        compute_indices \vstart, \vend, #PGDIR_SHIFT, \pgds, \istart, \iend, \count
        populate_entries \tbl, \rtbl, \istart, \iend, #PMD_TYPE_TABLE, #PAGE_SIZE, \tmp
        mov     \tbl, \sv
        mov     \sv, \rtbl

        compute_indices \vstart, \vend, #SWAPPER_TABLE_SHIFT, #PTRS_PER_PMD, \istart, \iend, \count
        populate_entries \tbl, \rtbl, \istart, \iend, #PMD_TYPE_TABLE, #PAGE_SIZE, \tmp
        mov     \tbl, \sv

        compute_indices \vstart, \vend, #SWAPPER_BLOCK_SHIFT, #PTRS_PER_PTE, \istart, \iend, \count
        bic     \count, \phys, #SWAPPER_BLOCK_SIZE - 1
        populate_entries \tbl, \count, \istart, \iend, \flags, #SWAPPER_BLOCK_SIZE, \tmp
        .endm

This involves two macros, compute_indices and populate_entries. The former computes the range of entry indices needed at a given level; the latter fills in those entries.

    /*
     * Compute indices of table entries from virtual address range. If multiple entries
     * were needed in the previous page table level then the next page table level is assumed
     * to be composed of multiple pages. (This effectively scales the end index).
     *
     *  vstart: virtual address of start of range
     *  vend:   virtual address of end of range
     *  shift:  shift used to transform virtual address into index
     *  ptrs:   number of entries in page table
     *  istart: index in table corresponding to vstart
     *  iend:   index in table corresponding to vend
     *  count:  On entry: how many extra entries were required in previous level, scales
     *                    our end index.
     *          On exit: returns how many extra entries required for next page table level
     *
     * Preserves:   vstart, vend, shift, ptrs
     * Returns:     istart, iend, count
     */
        .macro compute_indices, vstart, vend, shift, ptrs, istart, iend, count
        lsr     \iend, \vend, \shift
        mov     \istart, \ptrs
        sub     \istart, \istart, #1
        and     \iend, \iend, \istart   // iend = (vend >> shift) & (ptrs - 1)
        mov     \istart, \ptrs
        mul     \istart, \istart, \count
        add     \iend, \iend, \istart   // iend += (count - 1) * ptrs
                                        // our entries span multiple tables

        lsr     \istart, \vstart, \shift
        mov     \count, \ptrs
        sub     \count, \count, #1
        and     \istart, \istart, \count

        sub     \count, \iend, \istart
        .endm

Here is the implementation of populate_entries:

    /*
     * Macro to populate page table entries, these entries can be pointers to the next level
     * or last level entries pointing to physical memory.
     *
     *  tbl:    page table address
     *  rtbl:   pointer to page table or physical memory
     *  index:  start index to write
     *  eindex: end index to write - [index, eindex] written to
     *  flags:  flags for pagetable entry to or in
     *  inc:    increment to rtbl between each entry
     *  tmp1:   temporary variable
     *
     * Preserves:   tbl, eindex, flags, inc
     * Corrupts:    index, tmp1
     * Returns:     rtbl
     */
        .macro populate_entries, tbl, rtbl, index, eindex, flags, inc, tmp1
    .Lpe\@: phys_to_pte \tmp1, \rtbl
        orr     \tmp1, \tmp1, \flags    // tmp1 = table entry
        str     \tmp1, [\tbl, \index, lsl #3]
        add     \rtbl, \rtbl, \inc      // rtbl = pa next level
        add     \index, \index, #1
        cmp     \index, \eindex
        b.ls    .Lpe\@
        .endm

    #include <stdint.h>
    #include <stdlib.h>

    #define PAGE_SIZE           (1ULL << 12)
    #define PGDIR_SHIFT         39
    #define PMD_TYPE_TABLE      (0x3ULL << 0)   // table descriptor
    #define PGDS                (1 << 9)
    #define SWAPPER_TABLE_SHIFT 30
    #define PTRS_PER_PMD        (1 << 9)
    #define SWAPPER_BLOCK_SHIFT 21
    #define PTRS_PER_PTE        (1 << 9)        // 512
    #define SWAPPER_BLOCK_SIZE  (1ULL << 21)    // 2MB

    void populate_entries(uint64_t *tbl, uint64_t *rtbl, uint64_t index, uint64_t eindex,
                          uint64_t flags, uint64_t inc, uint64_t tmp1)
    {
        while (index <= eindex) {
            tmp1 = *rtbl;
            tmp1 = tmp1 | flags;    // tmp1 = table entry
            tbl[index] = tmp1;      // each entry is 8 bytes (lsl #3)
            *rtbl = *rtbl + inc;    // address of the next table or block
            index++;
        }
    }

    void compute_indices(uint64_t vstart, uint64_t vend, int shift, uint64_t ptrs,
                         uint64_t *istart, uint64_t *iend, uint64_t *count)
    {
        *iend = vend >> shift;
        *istart = ptrs;
        *istart = *istart - 1;
        *iend = *iend & *istart;        // compute the end index
        *istart = ptrs;
        *istart = (*istart) * (*count);
        *iend = *iend + *istart;        // *count is 0 here, so the end index is unchanged
        *istart = vstart >> shift;
        *count = ptrs;
        *count = *count - 1;
        *istart = *istart & *count;     // compute the start index
        *count = *iend - *istart;       // number of extra entries (iend - istart)
    }

    void map_memory(uint64_t *tbl, uint64_t rtbl, uint64_t vstart, uint64_t vend,
                    uint64_t flags, uint64_t phys, uint64_t pgds, uint64_t istart,
                    uint64_t iend, uint64_t tmp, uint64_t count, uint64_t sv)
    {
        tbl = malloc(PAGE_SIZE * 3);    // buffer that holds the level 0 to level 2 tables
        rtbl = (uint64_t)(uintptr_t)tbl + PAGE_SIZE;    // rtbl points to the next-level table
        sv = rtbl;
        count = 0;

        compute_indices(vstart, vend, PGDIR_SHIFT, PGDS, &istart, &iend, &count);
        populate_entries(tbl, &rtbl, istart, iend, PMD_TYPE_TABLE, PAGE_SIZE, tmp);
        tbl = (uint64_t *)(uintptr_t)sv;
        sv = rtbl;

        compute_indices(vstart, vend, SWAPPER_TABLE_SHIFT, PTRS_PER_PMD, &istart, &iend, &count);
        populate_entries(tbl, &rtbl, istart, iend, PMD_TYPE_TABLE, PAGE_SIZE, tmp);    // table descriptor
        tbl = (uint64_t *)(uintptr_t)sv;

        // flags is SWAPPER_MM_MMUFLAGS here: ((4<<2) | ((1<<0) | (1<<10) | (3<<8))), a block entry
        compute_indices(vstart, vend, SWAPPER_BLOCK_SHIFT, PTRS_PER_PTE, &istart, &iend, &count);
        count = phys & ~(SWAPPER_BLOCK_SIZE - 1);   // bic: align phys down to a 2MB boundary
        populate_entries(tbl, &count, istart, iend, flags, SWAPPER_BLOCK_SIZE, tmp);
    }
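The last step of map_memory builds level 2 block entries from an aligned physical address plus the flags. The sketch below shows the value of a single entry. It uses the flag value quoted in the code comment above, assumes phys_to_pte is a plain copy (no 52-bit physical addresses), and the function name and example address are hypothetical.

```c
#include <stdint.h>

#define SWAPPER_BLOCK_SIZE   (1ULL << 21)   /* 2MB */
/* Flag value quoted above: (4<<2) | (1<<0) | (1<<10) | (3<<8) */
#define SWAPPER_MM_MMUFLAGS  ((4ULL << 2) | (1ULL << 0) | (1ULL << 10) | (3ULL << 8))

/* Hypothetical helper: compose one level 2 block entry. */
uint64_t make_block_entry(uint64_t phys, uint64_t flags)
{
    /* bic count, phys, #SWAPPER_BLOCK_SIZE - 1: align down to 2MB */
    uint64_t base = phys & ~(SWAPPER_BLOCK_SIZE - 1);

    return base | flags;    /* orr tmp1, tmp1, flags */
}
```

With a made-up physical address of 0x40080000, the base is aligned down to 0x40000000 and the resulting entry is 0x40000711. Each following entry adds SWAPPER_BLOCK_SIZE to the base.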

Because the kernel we built is about 20.7MB, the mapping needs one level 0 entry (512GB), one level 1 entry (1GB), and 11 level 2 block entries (22MB).
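These counts can be reproduced with a short calculation. The sketch below uses the _text and _end addresses from System.map and assumes 512 entries per table; the helper name is hypothetical, and it only handles ranges that stay inside a single table at the given level, which is the case here.

```c
#include <stdint.h>

#define PTRS_PER_TABLE 512ULL

/*
 * Hypothetical helper: number of entries at one level that the inclusive
 * range [vstart, vend] touches. Assumes the range does not cross into a
 * second table at this level.
 */
uint64_t entries_needed(uint64_t vstart, uint64_t vend, unsigned int shift)
{
    uint64_t first = (vstart >> shift) & (PTRS_PER_TABLE - 1);
    uint64_t last  = (vend >> shift) & (PTRS_PER_TABLE - 1);

    return last - first + 1;
}
```

Calling it with 0xffff000008080000 and 0xffff000009536000 gives 1 for shift 39, 1 for shift 30, and 11 for shift 21 (level 2 indices 64 through 74).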