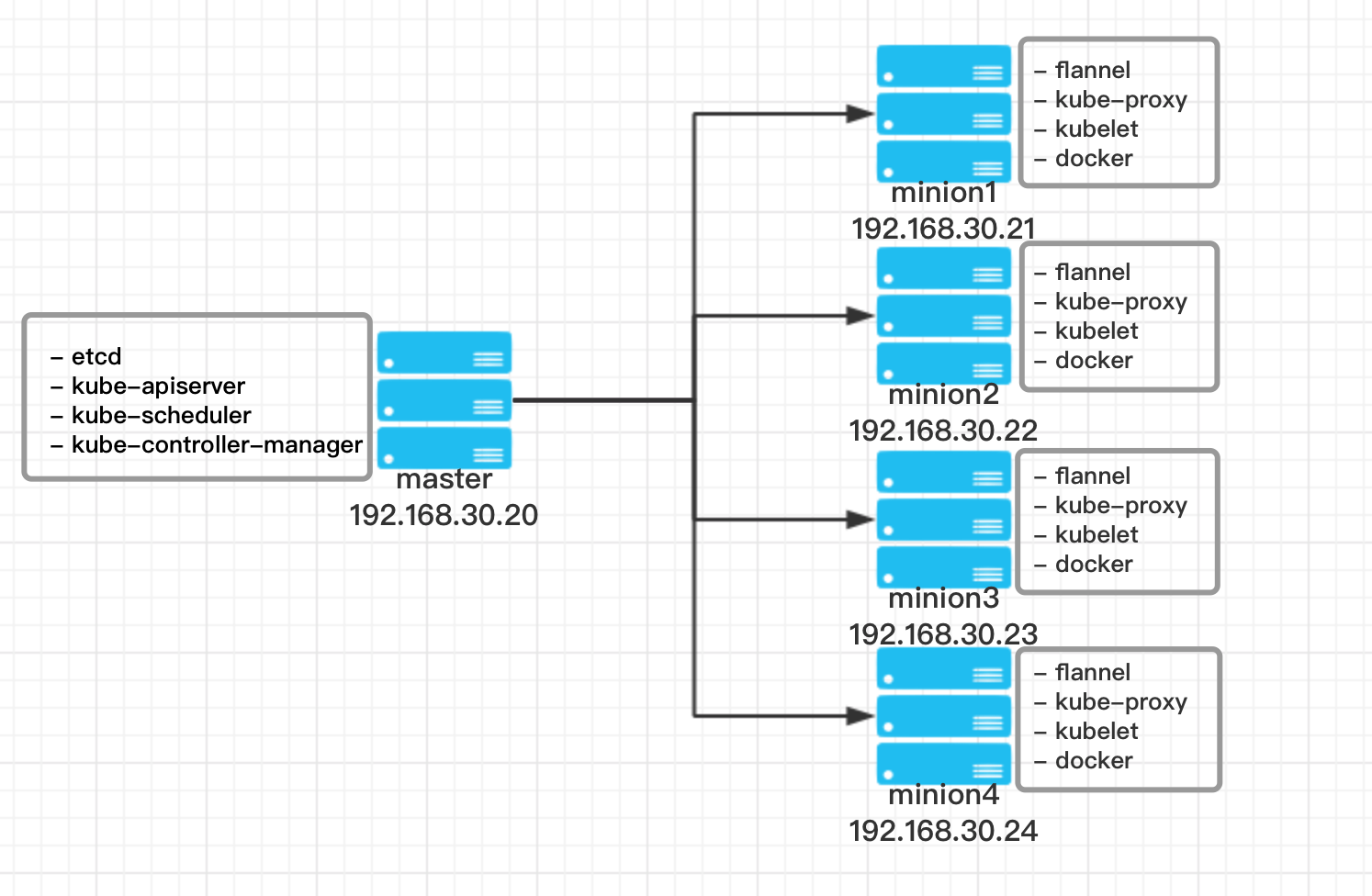

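1. Kubernetes cluster components

- etcd: runs on the Master; a highly available key/value store, also used for service discovery

- kube-apiserver: runs on the Master; serves the Kubernetes REST API, the entry point through which the other components and kubectl read and change cluster state

- kube-controller-manager: runs on the Master; runs the controllers (replication, node, endpoints and ServiceAccount token controllers, among others) that keep the cluster in its desired state

- kube-scheduler: runs on the Master; schedules Pods (containers) onto the Node hosts

- kube-proxy: runs on each Node; the network proxy that forwards Service traffic to the backing Pods

- kubelet: runs on each Node; starts containers according to the container specs defined in Pod manifests

- flannel: runs on each Node; the overlay network that lets containers on different hosts talk to each other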
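The role split above can be written down as a small lookup, which is handy when checking a host against its role. This is a sketch: `role_units` is a hypothetical helper name, the unit names are the ones started later in this guide (flannel's systemd unit is `flanneld`), and `docker` is included for Nodes because step 5 starts it there even though it is not in the list above.

```bash
#!/usr/bin/env bash
# Sketch: systemd units each role runs in this guide's layout.
# role_units is a hypothetical helper; docker is added for Nodes because
# the Node start-up step launches it alongside flanneld, kubelet, kube-proxy.
role_units() {
  case $1 in
    master) echo "etcd kube-apiserver kube-controller-manager kube-scheduler" ;;
    node)   echo "flanneld docker kubelet kube-proxy" ;;
    *)      echo "usage: role_units master|node" >&2; return 1 ;;
  esac
}
```

For example, `systemctl is-active $(role_units master)` on the Master prints one state per unit, and every line should read `active`.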

2. Cluster diagram

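3. Environment setup

- Install the EPEL repository

```bash
yum install -y epel-release
```

- Turn off the firewall and SELinux (they conflict with the firewall rules Docker sets up for containers)

```bash
systemctl stop firewalld
systemctl disable firewalld
setenforce 0
```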
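`setenforce 0` only switches the running system to permissive mode; after a reboot SELinux comes back in whatever mode the `SELINUX=` line of `/etc/selinux/config` specifies. A minimal sketch for making the change persistent, assuming the stock CentOS 7 config file; `persist_selinux_permissive` is a hypothetical helper, and the path argument exists only so it can be tried on a copy first.

```bash
#!/usr/bin/env bash
# Sketch: persist the SELinux change across reboots.
# persist_selinux_permissive is a hypothetical helper; the default path is
# the standard CentOS 7 location. Only the SELINUX= line is rewritten,
# SELINUXTYPE= does not match the pattern and is left alone.
persist_selinux_permissive() {
  local conf=${1:-/etc/selinux/config}
  sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$conf"
  grep '^SELINUX=' "$conf"   # show the resulting line
}
```

Run as root, it prints `SELINUX=permissive`; `getenforce` reports the mode of the running system.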

4. Install the Master node

- Install etcd and kubernetes-master

```bash
yum -y install etcd kubernetes-master
```

- Edit /etc/etcd/etcd.conf and set the URLs on which etcd listens for clients

```bash
ETCD_NAME=default
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379"
```
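Because `ETCD_LISTEN_CLIENT_URLS` binds to `0.0.0.0`, etcd should answer on the Master's LAN address as well as on localhost, which the Nodes' flanneld relies on later. Once etcd is running, a quick reachability check from any host is a GET on etcd's `/version` endpoint. A sketch, assuming `curl` is installed; `etcd_reachable` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: check that an etcd client URL answers on /version.
# etcd_reachable is a hypothetical helper; requires curl.
etcd_reachable() {
  local endpoint=${1:-http://127.0.0.1:2379}
  local body
  # -s: no progress meter, -f: fail on HTTP errors; give up after 3 seconds
  body=$(curl -sf --max-time 3 "$endpoint/version") || {
    echo "etcd not reachable at $endpoint" >&2
    return 1
  }
  # etcd replies with JSON such as {"etcdserver":"...","etcdcluster":"..."}
  case $body in
    *etcdserver*) echo "etcd OK at $endpoint: $body" ;;
    *) echo "unexpected reply from $endpoint: $body" >&2; return 1 ;;
  esac
}
```

From a Node, `etcd_reachable http://192.168.30.20:2379` exercises the same path flanneld will use.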

- Generate a ServiceAccount key; the Master needs it in order to create Pods on the Nodes from YAML files

```bash
openssl genrsa -out /etc/kubernetes/serviceaccount.key 2048
```
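The apiserver uses this key to verify ServiceAccount tokens and the controller-manager signs them with it, so a truncated or mistyped file tends to surface only later as failing Pods. A sketch of a pre-flight check with `openssl`; `check_sa_key` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: validate the ServiceAccount key and report its size.
# check_sa_key is a hypothetical helper; requires the openssl CLI.
check_sa_key() {
  local key=${1:-/etc/kubernetes/serviceaccount.key}
  # -check verifies the RSA key's internal consistency
  if ! openssl rsa -in "$key" -check -noout >/dev/null 2>&1; then
    echo "not a valid RSA private key: $key" >&2
    return 1
  fi
  # the first line of the -text dump states the key size in bits
  openssl rsa -in "$key" -noout -text 2>/dev/null | head -n 1
}
```

For the key generated above, `check_sa_key` prints a line containing `2048 bit`.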

- Edit /etc/kubernetes/apiserver to set the API server's address and port and the ServiceAccount key file

```bash
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
KUBE_API_PORT="--port=8080"
KUBELET_PORT="--kubelet-port=10250"
KUBE_ETCD_SERVERS="--etcd-servers=http://127.0.0.1:2379"
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"
KUBE_API_ARGS="--service_account_key_file=/etc/kubernetes/serviceaccount.key"
```
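`--service-cluster-ip-range` hands out virtual Service IPs, so 10.254.0.0/16 should not overlap the flannel Pod network (172.17.0.0/16, written to etcd at the end of this step) or the hosts' own 192.168.30.x addresses, assumed here to form a /24. Two CIDR blocks overlap exactly when they agree on the bits covered by the shorter prefix. A sketch of that test in bash; `ip_to_int` and `cidrs_overlap` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: do two IPv4 CIDR blocks overlap? (hypothetical helpers)

# Dotted quad -> 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# cidrs_overlap A/len B/len: exit 0 if the blocks share any address.
# e.g. cidrs_overlap 10.254.0.0/16 192.168.30.0/24 (host /24 is an assumption)
cidrs_overlap() {
  local a=${1%/*} pa=${1#*/} b=${2%/*} pb=${2#*/}
  local p=$(( pa < pb ? pa : pb ))                      # shorter prefix decides
  local mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  local na nb
  na=$(ip_to_int "$a")
  nb=$(ip_to_int "$b")
  (( (na & mask) == (nb & mask) ))
}
```

With this guide's ranges, `cidrs_overlap 10.254.0.0/16 172.17.0.0/16 && echo overlap || echo disjoint` prints `disjoint`.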

- Edit /etc/kubernetes/controller-manager to point it at the same ServiceAccount key

```bash
KUBE_CONTROLLER_MANAGER_ARGS="--service_account_private_key_file=/etc/kubernetes/serviceaccount.key"
```

- Start etcd, kube-apiserver, kube-controller-manager and kube-scheduler, and enable them at boot

```bash
for SERVICES in etcd kube-apiserver kube-controller-manager kube-scheduler; do
    systemctl restart "$SERVICES"
    systemctl enable "$SERVICES"
    systemctl status "$SERVICES"
done
```
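After the loop, the Master can report on its own components: `kubectl get componentstatuses` (short form `kubectl get cs`) lists the scheduler, controller-manager and etcd with a STATUS column, and `curl http://127.0.0.1:8080/healthz` against the insecure port configured above should print `ok`. A sketch that turns the table into a pass/fail exit code; `cs_all_healthy` is a hypothetical helper, and it reads the table on stdin so it can be tried without a cluster.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if every row of `kubectl get componentstatuses`
# reports Healthy. cs_all_healthy is a hypothetical helper reading stdin.
cs_all_healthy() {
  awk 'NR > 1 && NF > 0 {
         n++
         if ($2 != "Healthy") { print "unhealthy: " $1; bad = 1 }
       }
       END {
         if (n == 0) { print "no components listed"; exit 1 }
         exit (bad ? 1 : 0)
       }'
}
```

Usage on the Master: `kubectl get componentstatuses | cs_all_healthy && echo "master OK"`.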

- Define the flannel network in etcd

```bash
etcdctl mk /atomic.io/network/config '{"Network":"172.17.0.0/16"}'
```
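flanneld on each Node leases one smaller subnet out of this `Network`. flannel's documented defaults are a `SubnetLen` of 24 and a lease range from the second subnet of `Network` to the last, so 172.17.0.0/16 yields 2^(24-16) = 256 /24 blocks, of which 255 can be leased: far more than the four Nodes here need. A sketch of that arithmetic in bash, assuming those defaults; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: per-node subnets flannel can lease from "Network".
# Assumes flannel defaults: SubnetLen=24, SubnetMin = second subnet,
# SubnetMax = last subnet. Helper names are hypothetical.

ip_to_int() {                      # dotted quad -> 32-bit integer
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

int_to_ip() {                      # 32-bit integer -> dotted quad
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# flannel_subnets CIDR [SUBNETLEN]: print usable count, first and last lease.
flannel_subnets() {
  local net=${1%/*} prefix=${1#*/} len=${2:-24}
  local base size total
  base=$(ip_to_int "$net")
  size=$(( 1 << (32 - len) ))      # addresses per node subnet
  total=$(( 1 << (len - prefix) )) # subnets that fit in Network
  echo "usable=$(( total - 1 ))"   # the first subnet is skipped by default
  echo "first=$(int_to_ip $(( base + size )))/$len"
  echo "last=$(int_to_ip $(( base + (total - 1) * size )))/$len"
}
```

`etcdctl get /atomic.io/network/config` confirms what was written, and `flannel_subnets 172.17.0.0/16` prints `usable=255`, `first=172.17.1.0/24` and `last=172.17.255.0/24`.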

5. Install the Nodes

- Install flannel and kubernetes-node

```bash
yum -y install flannel kubernetes-node
```

- Edit /etc/sysconfig/flanneld so that flannel uses the etcd service on the Master

```bash
FLANNEL_ETCD="http://192.168.30.20:2379"
FLANNEL_ETCD_KEY="/atomic.io/network"
```

- Edit /etc/kubernetes/config to set the address of the kube master

```bash
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
KUBE_ALLOW_PRIV="--allow-privileged=false"
KUBE_MASTER="--master=http://192.168.30.20:8080"
```

- Edit /etc/kubernetes/kubelet on each Node with that Node's own settings

- node1

```bash
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_PORT="--port=10250"
# change to this Node's IP
KUBELET_HOSTNAME="--hostname-override=192.168.30.21"
# API Server on the Master node
KUBELET_API_SERVER="--api-servers=http://192.168.30.20:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS=""
```

- node2

```bash
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=192.168.30.22"
KUBELET_API_SERVER="--api-servers=http://192.168.30.20:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS=""
```

- node3

```bash
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=192.168.30.23"
KUBELET_API_SERVER="--api-servers=http://192.168.30.20:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS=""
```

- node4

```bash
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=192.168.30.24"
KUBELET_API_SERVER="--api-servers=http://192.168.30.20:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS=""
```
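The four files differ only in `--hostname-override`. A sketch that prints the file for a given Node IP, so each Node's copy can be generated instead of hand-edited; `gen_kubelet_conf` is a hypothetical helper, and the Master address defaults to this guide's 192.168.30.20.

```bash
#!/usr/bin/env bash
# Sketch: print /etc/kubernetes/kubelet for one Node.
# gen_kubelet_conf is a hypothetical helper; args: NODE_IP [MASTER_IP].
gen_kubelet_conf() {
  local node_ip=$1 master=${2:-192.168.30.20}
  cat <<EOF
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=${node_ip}"
KUBELET_API_SERVER="--api-servers=http://${master}:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS=""
EOF
}
```

On node2, for example: `gen_kubelet_conf 192.168.30.22 > /etc/kubernetes/kubelet`.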

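- Start flanneld, docker, kube-proxy and kubelet, and enable them at boot; flanneld goes first so that Docker comes up on the subnet flannel assigns to the Node

```bash
for SERVICES in flanneld docker kube-proxy kubelet; do
    systemctl restart "$SERVICES"
    systemctl enable "$SERVICES"
    systemctl status "$SERVICES"
done
```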
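Once flanneld is running it records the subnet it leased for this Node in `/run/flannel/subnet.env` (flannel's default lease file), and Docker's `docker0` bridge should then sit inside that subnet. A sketch for reading the lease; `show_flannel_lease` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: show the subnet flanneld leased to this Node.
# show_flannel_lease is a hypothetical helper; default path is flannel's
# standard lease file.
show_flannel_lease() {
  local env_file=${1:-/run/flannel/subnet.env}
  [ -r "$env_file" ] || { echo "no lease file at $env_file (is flanneld running?)" >&2; return 1; }
  (
    # subshell, so the sourced FLANNEL_* variables do not leak to the caller
    . "$env_file"
    echo "network=${FLANNEL_NETWORK} subnet=${FLANNEL_SUBNET} mtu=${FLANNEL_MTU}"
  )
}
```

Compare its `subnet=` value with the address shown by `ip -4 addr show docker0`.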

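- Install the Red Hat CA certificate from python-rhsm-certificates on each Node. This fixes Pods stuck in ContainerCreating: Docker cannot pull the pod-infrastructure image from registry.access.redhat.com while /etc/rhsm/ca/redhat-uep.pem is missing. The rpm2cpio | cpio pipeline extracts just that one file from the package without installing it.

```bash
wget http://mirror.centos.org/centos/7/os/x86_64/Packages/python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm
rpm2cpio python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm | cpio -iv --to-stdout ./etc/rhsm/ca/redhat-uep.pem | tee /etc/rhsm/ca/redhat-uep.pem
```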
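It is worth confirming that the extracted file really is a PEM certificate before retrying, since `tee` still creates an empty file if the `cpio` step matched nothing. A sketch; `check_rhsm_ca` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: confirm the CA file is non-empty and looks like a PEM certificate.
# check_rhsm_ca is a hypothetical helper.
check_rhsm_ca() {
  local pem=${1:-/etc/rhsm/ca/redhat-uep.pem}
  if [ -s "$pem" ] && grep -q -- '-----BEGIN CERTIFICATE-----' "$pem"; then
    echo "ok: $pem"
  else
    echo "missing or not a PEM certificate: $pem" >&2
    return 1
  fi
}
```

If it reports `ok`, retrying the pull of the pod-infrastructure image on that Node shows whether the certificate problem is gone.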

6. Verify the cluster (run on the Master)

```bash
kubectl get node
```

All four Nodes should be listed with STATUS Ready.
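To script that check, a sketch that reads the `kubectl get node` table on stdin, fails if any Node's STATUS does not start with `Ready`, and also fails if the Node count differs from the expected one (four in this guide). `nodes_ready` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: verify `kubectl get node` output. nodes_ready is a hypothetical
# helper; arg: expected node count (default 4, as in this guide).
nodes_ready() {
  local expected=${1:-4}
  awk -v want="$expected" '
    NR > 1 && NF > 0 {
      n++
      if ($2 !~ /^Ready/) { print "not ready: " $1; bad = 1 }
    }
    END {
      if (n + 0 != want + 0) { print "expected " want " nodes, found " (n + 0); bad = 1 }
      exit (bad ? 1 : 0)
    }'
}
```

Usage on the Master: `kubectl get node | nodes_ready 4 && echo "cluster OK"`.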

Source: 韩德田Tivens, k8s入门系列之集群安装篇 (k8s beginner series: cluster installation)