一、实验环境说明

操作系统: Centos 6.6 x64

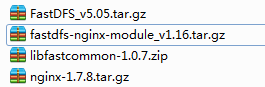

FastDFS 相关版本: fastdfs-5.05 fastdfs-nginx-module-v1.16 libfastcommon-v1.0.7

web 服务器软件: nginx-1.7.8

角色分配: 2 个 tracker, 地址分别为:10.1.1.243 10.1.1.244 两块磁盘

2个 group:

G1:10.1.1.245 10.1.1.246 三块磁盘

G2:10.1.1.247 10.1.1.248 三块磁盘

Client:10.1.1.249

目录规划:

/dev/sdb1 /data1 日志元数据目录

/dev/sdc1 /data2 数据目录

关闭selinux和iptables

做时间同步,最好添加定时任务

配置好yum源,推荐国内源

分区格式化磁盘并创建挂载目录进行挂载
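The preparation steps above can be scripted. The following is a minimal sketch, not the author's exact procedure: it assumes the ext4 filesystem, the NTP server pool.ntp.org, and that the partitions /dev/sdb1 and /dev/sdc1 already exist. The helper names run and prepare_node are hypothetical. It defaults to a dry run that only prints the commands, because mkfs destroys existing data.

```shell
#!/bin/sh
# Sketch of the node preparation steps for CentOS 6.
# Assumptions (not from the original text): ext4, pool.ntp.org, existing partitions.
# DRY_RUN=1 (the default) only prints each command; DRY_RUN=0 executes it (needs root).
DRY_RUN=${DRY_RUN:-1}
NTP_SERVER=${NTP_SERVER:-pool.ntp.org}

run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# usage: prepare_node DEVICE:MOUNTPOINT [DEVICE:MOUNTPOINT ...]
prepare_node() {
    # Disable SELinux now and across reboots.
    run setenforce 0
    run sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
    # Stop the firewall and keep it off at boot.
    run service iptables stop
    run chkconfig iptables off
    # One-off clock sync; for a periodic sync, a crontab entry such as
    #   */30 * * * * /usr/sbin/ntpdate pool.ntp.org
    # could be added with "crontab -e".
    run ntpdate "$NTP_SERVER"
    # Format and mount each data partition (mkfs erases the partition!).
    for pair in "$@"; do
        dev=${pair%%:*}
        dir=${pair#*:}
        run mkfs.ext4 "$dev"
        run mkdir -p "$dir"
        run mount "$dev" "$dir"
    done
}

# Storage node layout from the directory plan; a tracker would presumably
# pass only /dev/sdb1:/data1.
prepare_node /dev/sdb1:/data1 /dev/sdc1:/data2
```

For the mounts to survive a reboot, matching entries in /etc/fstab would also be needed.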

II. Install libfastcommon and FastDFS (on all machines)

1. Download the required software (link: https://pan.baidu.com/s/1eSvSRPg, password: 7k66)

unzip libfastcommon-1.0.7.zip

cd libfastcommon-1.0.7

./make.sh

./make.sh install

cd ..

tar xf FastDFS_v5.05.tar.gz

cd FastDFS

sed -i 's#/usr/local/bin/#/usr/bin/#g' init.d/fdfs_storaged

sed -i 's#/usr/local/bin/#/usr/bin/#g' init.d/fdfs_trackerd

./make.sh

./make.sh install
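The two sed commands above rewrite every /usr/local/bin/ path in the init scripts to /usr/bin/, presumably because this version's install step places the binaries under /usr/bin. A small sketch of the substitution on a throwaway file; the sample line is hypothetical and not copied from the real init script:

```shell
#!/bin/sh
# Demonstrates the path substitution on a temporary file.
# The sample line below is made up for illustration.
sample=$(mktemp)
printf '%s\n' 'PRG=/usr/local/bin/fdfs_trackerd' > "$sample"

# Same expression as in the install steps; '#' is the delimiter so the
# slashes in the paths need no escaping.
rewritten=$(sed 's#/usr/local/bin/#/usr/bin/#g' "$sample")
printf '%s\n' "$rewritten"

rm -f "$sample"
```

After the install, listing /usr/bin/fdfs_* is a quick way to confirm where the binaries actually landed.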

III. Configure the trackers 10.1.1.243 and 10.1.1.244 (identical configuration)

cp /etc/fdfs/tracker.conf.sample /etc/fdfs/tracker.conf

vim /etc/fdfs/tracker.conf

disabled=false

bind_addr=

port=22122

connect_timeout=30

network_timeout=60

base_path=/data1/

max_connections=256

accept_threads=1

work_threads=4

store_lookup=2

store_group=group2

store_server=0

store_path=0

download_server=0

reserved_storage_space = 10%

log_level=info

run_by_group=

run_by_user=

allow_hosts=*

sync_log_buff_interval = 10

check_active_interval = 120

thread_stack_size = 64KB

storage_ip_changed_auto_adjust = true

storage_sync_file_max_delay = 86400

storage_sync_file_max_time = 300

use_trunk_file = false

slot_min_size = 256

slot_max_size = 16MB

trunk_file_size = 64MB

trunk_create_file_advance = false

trunk_create_file_time_base = 02:00

trunk_create_file_interval = 86400

trunk_create_file_space_threshold = 20G

trunk_init_check_occupying = false

trunk_init_reload_from_binlog = false

trunk_compress_binlog_min_interval = 0

use_storage_id = false

storage_ids_filename = storage_ids.conf

id_type_in_filename = ip

store_slave_file_use_link = false

rotate_error_log = false

error_log_rotate_time=00:00

rotate_error_log_size = 0

log_file_keep_days = 0

use_connection_pool = false

connection_pool_max_idle_time = 3600

http.server_port=8080

http.check_alive_interval=30

http.check_alive_type=tcp

http.check_alive_uri=/status.html
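Only a few of the keys above are site-specific (base_path, for example), so instead of editing the whole file in vim they can be set with a small helper. This is a sketch under assumptions: set_conf is a hypothetical helper, not a FastDFS tool, and it is only suitable for keys that occur once in the file. It is demonstrated on a temporary file rather than on /etc/fdfs/tracker.conf.

```shell
#!/bin/sh
# set_conf FILE KEY VALUE
# Sets KEY=VALUE in a key=value config file: rewrites the existing line if the
# key is present, appends a new line otherwise. Hypothetical helper; VALUE must
# not contain '|' or '&' because it is used in a sed replacement.
set_conf() {
    file=$1
    key=$2
    value=$3
    if grep -q "^[[:space:]]*$key[[:space:]]*=" "$file"; then
        tmp=$(mktemp)
        sed "s|^[[:space:]]*$key[[:space:]]*=.*|$key=$value|" "$file" > "$tmp"
        cat "$tmp" > "$file"   # keep the original file's owner and mode
        rm -f "$tmp"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Demo on a scratch file; the starting values are placeholders.
conf=$(mktemp)
printf '%s\n' 'port=22122' 'base_path=/path/from/sample' > "$conf"
set_conf "$conf" base_path /data1/
set_conf "$conf" store_lookup 2
cat "$conf"
```

Keys that legitimately repeat, such as tracker_server in storage.conf, should not be set this way because every matching line would receive the same value.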

Start the tracker service:

service fdfs_trackerd start

chkconfig fdfs_trackerd on

IV. Configure group G1: 10.1.1.245 and 10.1.1.246 (identical configuration)

cp /etc/fdfs/storage.conf.sample /etc/fdfs/storage.conf

vim /etc/fdfs/storage.conf

disabled=false

group_name=G1

bind_addr=

client_bind=true

port=23000

connect_timeout=30

network_timeout=60

heart_beat_interval=30

stat_report_interval=60

base_path=/data1/

max_connections=256

buff_size = 256KB

accept_threads=1

work_threads=4

disk_rw_separated = true

disk_reader_threads = 1

disk_writer_threads = 1

sync_wait_msec=50

sync_interval=0

sync_start_time=00:00

sync_end_time=23:59

write_mark_file_freq=500

store_path_count=1

store_path0=/data2

subdir_count_per_path=256

tracker_server=10.1.1.243:22122

tracker_server=10.1.1.244:22122

log_level=info

run_by_group=

run_by_user=

allow_hosts=*

file_distribute_path_mode=0

file_distribute_rotate_count=100

fsync_after_written_bytes=0

sync_log_buff_interval=10

sync_binlog_buff_interval=10

sync_stat_file_interval=300

thread_stack_size=512KB

upload_priority=10

if_alias_prefix=

check_file_duplicate=0

file_signature_method=hash

key_namespace=FastDFS

keep_alive=0

use_access_log = false

rotate_access_log = false

access_log_rotate_time=00:00

rotate_error_log = false

error_log_rotate_time=00:00

rotate_access_log_size = 0

rotate_error_log_size = 0

log_file_keep_days = 0

file_sync_skip_invalid_record=false

use_connection_pool = false

connection_pool_max_idle_time = 3600

http.domain_name=

http.server_port=8888

Configure group G2: 10.1.1.247 and 10.1.1.248 (identical configuration)

cp /etc/fdfs/storage.conf.sample /etc/fdfs/storage.conf

vim /etc/fdfs/storage.conf

disabled=false

group_name=G2

bind_addr=

client_bind=true

port=23000

connect_timeout=30

network_timeout=60

heart_beat_interval=30

stat_report_interval=60

base_path=/data1

max_connections=256

buff_size = 256KB

accept_threads=1

work_threads=4

disk_rw_separated = true

disk_reader_threads = 1

disk_writer_threads = 1

sync_wait_msec=50

sync_interval=0

sync_start_time=00:00

sync_end_time=23:59

write_mark_file_freq=500

store_path_count=1

store_path0=/data2

subdir_count_per_path=256

tracker_server=10.1.1.243:22122

tracker_server=10.1.1.244:22122

log_level=info

run_by_group=

run_by_user=

allow_hosts=*

file_distribute_path_mode=0

file_distribute_rotate_count=100

fsync_after_written_bytes=0

sync_log_buff_interval=10

sync_binlog_buff_interval=10

sync_stat_file_interval=300

thread_stack_size=512KB

upload_priority=10

if_alias_prefix=

check_file_duplicate=0

file_signature_method=hash

key_namespace=FastDFS

keep_alive=0

use_access_log = false

rotate_access_log = false

access_log_rotate_time=00:00

rotate_error_log = false

error_log_rotate_time=00:00

rotate_access_log_size = 0

rotate_error_log_size = 0

log_file_keep_days = 0

file_sync_skip_invalid_record=false

use_connection_pool = false

connection_pool_max_idle_time = 3600

http.domain_name=

http.server_port=8888
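As listed, the G2 storage.conf differs from the G1 one only in group_name (plus a trailing slash on base_path). One way to avoid maintaining two full copies is to derive one from the other. A minimal sketch, where derive_group_conf is a hypothetical helper and the demo uses a three-line stand-in for the real file:

```shell
#!/bin/sh
# derive_group_conf SRC GROUP
# Prints SRC with its group_name line replaced by GROUP (hypothetical helper).
derive_group_conf() {
    sed "s/^group_name=.*/group_name=$2/" "$1"
}

# Stand-in for /etc/fdfs/storage.conf on a G1 node.
g1=$(mktemp)
g2=$(mktemp)
printf '%s\n' 'group_name=G1' 'base_path=/data1/' 'store_path0=/data2' > "$g1"

derive_group_conf "$g1" G2 > "$g2"

# diff exits non-zero when the files differ, so that is not treated as an error.
diff "$g1" "$g2" || true
```

The diff should show the group_name line as the only difference, which makes an accidental edit elsewhere easy to spot.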

Start the storage service (on all four storage nodes):

service fdfs_storaged start

chkconfig fdfs_storaged on

V. Install nginx on the storage servers

Compile and install nginx with the FastDFS nginx module:

tar xf fastdfs-nginx-module_v1.16.tar.gz

vim fastdfs-nginx-module/src/config

CORE_INCS="$CORE_INCS /usr/include/fastdfs /usr/include/fastcommon/" # remove "local" from both include paths

Create the symbolic links:

ln -s /usr/lib64/libfastcommon.so /usr/local/lib/libfastcommon.so

ln -s /usr/lib64/libfastcommon.so /usr/lib/libfastcommon.so

ln -s /usr/lib64/libfdfsclient.so /usr/local/lib/libfdfsclient.so

ln -s /usr/lib64/libfdfsclient.so /usr/lib/libfdfsclient.so

tar xf nginx-1.7.8.tar.gz

cd nginx-1.7.8

./configure --add-module=../fastdfs-nginx-module/src/ --prefix=/usr/local/nginx --user=nobody --group=nobody --with-http_gzip_static_module --with-http_gunzip_module

make

make install

nginx init script (save it as /etc/init.d/nginx and make it executable with chmod +x):

#!/bin/bash

#

# nginx - this script starts and stops the nginx daemon

#

# chkconfig: - 85 15

# description: Nginx is an HTTP(S) server, HTTP(S) reverse

# proxy and IMAP/POP3 proxy server

# processname: nginx

# config: /usr/local/nginx/conf/nginx.conf

# Source function library.

. /etc/rc.d/init.d/functions

# Source networking configuration.

. /etc/sysconfig/network

# Check that networking is up.

[ "$NETWORKING" = "no" ] && exit 0

NGINX_HOME="/usr/local/nginx/"

nginx=$NGINX_HOME"sbin/nginx"

prog=$(basename $nginx)

NGINX_CONF_FILE=$NGINX_HOME"conf/nginx.conf"

[ -f /etc/sysconfig/nginx ] && . /etc/sysconfig/nginx

lockfile=/var/lock/subsys/nginx

start() {

[ -x $nginx ] || exit 5

[ -f $NGINX_CONF_FILE ] || exit 6

echo -n $"Starting $prog: "

daemon $nginx -c $NGINX_CONF_FILE

retval=$?

echo

[ $retval -eq 0 ] && touch $lockfile

return $retval

}

stop() {

echo -n $"Stopping $prog: "

killproc $prog -QUIT

retval=$?

echo

[ $retval -eq 0 ] && rm -f $lockfile

return $retval

}

restart() {

configtest || return $?

stop

sleep 1

start

}

reload() {

configtest || return $?

echo -n $"Reloading $prog: "

killproc $nginx -HUP

RETVAL=$?

echo

}

force_reload() {

restart

}

configtest() {

$nginx -t -c $NGINX_CONF_FILE

}

rh_status() {

status $prog

}

rh_status_q() {

rh_status >/dev/null 2>&1

}

case "$1" in

start)

rh_status_q && exit 0

$1

;;

stop)

rh_status_q || exit 0

$1

;;

restart|configtest)

$1

;;

reload)

rh_status_q || exit 7

$1

;;

force-reload)

force_reload

;;

status)

rh_status

;;

condrestart|try-restart)

rh_status_q || exit 0

restart

;;

*)

echo $"Usage: $0 {start|stop|status|restart|condrestart|try-restart|reload|force-reload|configtest}"

exit 2

esac

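Configure nginx: copy the module files into place (run these from the directory where the archives were unpacked), then add a server block to nginx.conf:

cp fastdfs-nginx-module/src/mod_fastdfs.conf /etc/fdfs/

cp FastDFS/conf/{anti-steal.jpg,http.conf,mime.types} /etc/fdfs/

touch /var/log/mod_fastdfs.log

chown nobody:nobody /var/log/mod_fastdfs.log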

server {

listen 80;

server_name LOCAL_IP; # replace LOCAL_IP with this host's IP address

location /G1/M00 {

root /data2/;

ngx_fastdfs_module;

}

}
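The server block above is written for G1. Because url_have_group_name is enabled and URLs start with the group name, nodes in G2 would presumably need /G2/M00 as the location prefix. A small sketch that prints the block for a given group and address; render_server_block is a hypothetical helper, and its output would still have to be placed in the http section of nginx.conf by hand:

```shell
#!/bin/sh
# render_server_block GROUP SERVER_IP [STORE_PATH]
# Prints an nginx server block for one storage node (hypothetical helper).
render_server_block() {
    group=$1
    ip=$2
    store_path=${3:-/data2/}
    cat <<EOF
server {
    listen 80;
    server_name $ip;
    location /$group/M00 {
        root $store_path;
        ngx_fastdfs_module;
    }
}
EOF
}

# Example: the block for the first G2 node.
render_server_block G2 10.1.1.247
```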

Configure the module configuration file, mod_fastdfs.conf

Configuration for group G1:

vim /etc/fdfs/mod_fastdfs.conf

connect_timeout=2

network_timeout=30

base_path=/tmp

load_fdfs_parameters_from_tracker=true

storage_sync_file_max_delay = 86400

use_storage_id = false

storage_ids_filename = storage_ids.conf

tracker_server=10.1.1.243:22122

tracker_server=10.1.1.244:22122

storage_server_port=23000

group_name=G1

url_have_group_name = true

store_path_count=1

store_path0=/data2/

log_level=info

log_filename=/var/log/mod_fastdfs.log

response_mode=proxy

if_alias_prefix=

#include http.conf

flv_support = true

flv_extension = flv

group_count = 2

[group1]

group_name=G1

storage_server_port=23000

store_path_count=1

store_path0=/data2/

[group2]

group_name=G2

storage_server_port=23000

store_path_count=1

store_path0=/data2/

Configuration for group G2:

vim /etc/fdfs/mod_fastdfs.conf

connect_timeout=2

network_timeout=30

base_path=/tmp

load_fdfs_parameters_from_tracker=true

storage_sync_file_max_delay = 86400

use_storage_id = false

storage_ids_filename = storage_ids.conf

tracker_server=10.1.1.243:22122

tracker_server=10.1.1.244:22122

storage_server_port=23000

group_name=G2

url_have_group_name = true

store_path_count=1

store_path0=/data2/

log_level=info

log_filename=/var/log/mod_fastdfs.log

response_mode=proxy

if_alias_prefix=

#include http.conf

flv_support = true

flv_extension = flv

group_count = 2

[group1]

group_name=G1

storage_server_port=23000

store_path_count=1

store_path0=/data2/

[group2]

group_name=G2

storage_server_port=23000

store_path_count=1

store_path0=/data2/

service nginx restart

chkconfig nginx on

VI. Testing

Client configuration, /etc/fdfs/client.conf:

connect_timeout=30

network_timeout=60

base_path=/tmp

tracker_server=10.1.1.243:22122

tracker_server=10.1.1.244:22122

log_level=info

use_connection_pool = false

connection_pool_max_idle_time = 3600

load_fdfs_parameters_from_tracker=false

use_storage_id = false

storage_ids_filename = storage_ids.conf

http.tracker_server_port=80

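Test upload and download from the client (other commands are not tested for now; this only checks that the service works):

fdfs_upload_file /etc/fdfs/client.conf /etc/passwd # upload the file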

G1/M00/00/00/CgEB9Vnwv1SAXm1HAAAEeivAsqE3998928

md5sum /etc/passwd

6923374d09e7f8c9a296fdf2e84764a1 /etc/passwd

fdfs_download_file /etc/fdfs/client.conf G1/M00/00/00/CgEB9Vnwv1SAXm1HAAAEeivAsqE3998928 # download the file

md5sum CgEB9Vnwv1SAXm1HAAAEeivAsqE3998928

6923374d09e7f8c9a296fdf2e84764a1 CgEB9Vnwv1SAXm1HAAAEeivAsqE3998928

The uploaded and downloaded files have the same checksum, so the content is unchanged.
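The round-trip check above can be scripted so that a mismatch is reported as a failure. A minimal sketch: same_md5 and roundtrip_check are hypothetical helper names. roundtrip_check needs a working cluster and is therefore only defined here, not run, while same_md5 is exercised on two local files.

```shell
#!/bin/sh
# same_md5 FILE_A FILE_B: succeeds when both files have the same MD5 digest.
same_md5() {
    a=$(md5sum < "$1" | cut -d' ' -f1)
    b=$(md5sum < "$2" | cut -d' ' -f1)
    [ "$a" = "$b" ]
}

# roundtrip_check LOCAL_FILE: upload, download to a temp file, compare.
# Requires the FastDFS client tools and the /etc/fdfs/client.conf shown above.
roundtrip_check() {
    copy=$(mktemp)
    file_id=$(fdfs_upload_file /etc/fdfs/client.conf "$1") || return 1
    fdfs_download_file /etc/fdfs/client.conf "$file_id" "$copy" || return 1
    same_md5 "$1" "$copy"
    status=$?
    rm -f "$copy"
    return $status
}

# Local demonstration of the comparison itself.
orig=$(mktemp)
dup=$(mktemp)
printf 'hello fastdfs\n' > "$orig"
cp "$orig" "$dup"
if same_md5 "$orig" "$dup"; then
    echo "checksums match"
else
    echo "checksums differ"
fi
```

On the client machine, calling roundtrip_check /etc/passwd would repeat the manual test in one step.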

Upload an image, here named meizhi.jpg:

fdfs_upload_file /etc/fdfs/client.conf meizhi.jpg

G1/M00/00/00/CgEB9lnwwTaAFqHZAAC9pQqjlp0604.jpg

The image can then be viewed in a browser through nginx on a storage node.
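A file ID returned by fdfs_upload_file consists of the group name, the store path, two directory levels, and the file name. With the storage-node nginx configured as above, the download URL is the node address followed by the file ID. A sketch that splits an ID and builds the URL; parse_file_id and file_url are hypothetical helpers, and 10.1.1.245 is just one of the G1 nodes:

```shell
#!/bin/sh
# parse_file_id FILE_ID: prints the group, store path and file name.
parse_file_id() {
    id=$1
    group=${id%%/*}      # text before the first '/'
    rest=${id#*/}        # everything after the group
    store=${rest%%/*}    # e.g. M00, which maps to store_path0
    name=${id##*/}       # text after the last '/'
    printf 'group=%s store_path=%s file=%s\n' "$group" "$store" "$name"
}

# file_url HOST FILE_ID: URL served by the nginx module on a storage node.
file_url() {
    printf 'http://%s/%s\n' "$1" "$2"
}

fid=G1/M00/00/00/CgEB9lnwwTaAFqHZAAC9pQqjlp0604.jpg
parse_file_id "$fid"
file_url 10.1.1.245 "$fid"
```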

A front-end nginx server can be set up as a reverse proxy to load-balance the back-end storage nodes. A reference example follows:

http {

include mime.types;

default_type application/octet-stream;

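# Bucket size of the server-name hash table; the default is 32, 64, or 128 depending on the platform

server_names_hash_bucket_size 128;

# Request header buffer size: headers are read into client_header_buffer_size by default;

# if a header is too large, large_client_header_buffers is used instead

client_header_buffer_size 32k;

large_client_header_buffers 4 32k;

# Maximum allowed size of an uploaded file

client_max_body_size 300m;

# Efficient file transfer: sendfile controls whether nginx uses the sendfile() call to output files.

# Set it to on for ordinary workloads; for disk-I/O-heavy workloads such as downloads it can be set

# to off to balance disk and network I/O and reduce system load.

# Note: if images display incorrectly, change this to off

sendfile on;

# Prevent network congestion

tcp_nopush on;

# Keep-alive timeout

keepalive_timeout 120;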

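# gzip module settings

gzip on; # enable gzip-compressed output

gzip_min_length 1k; # minimum response size to compress

gzip_buffers 4 16k; # compression buffers

gzip_http_version 1.0; # minimum HTTP version for compression (default 1.1; use 1.0 if the front end is squid 2.5)

gzip_comp_level 2; # compression level (1-9)

gzip_types text/plain application/x-javascript text/css application/xml;

# MIME types to compress; text/html is always included

gzip_vary on; # add "Vary: Accept-Encoding" so caches keep compressed and uncompressed responses apart

gzip_disable "MSIE [1-6]\."; # IE6 and earlier handle gzip poorly, so gzip is disabled for them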

proxy_redirect off;

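# When a single back-end web server hosts several virtual hosts, this header tells it which host name is being proxied

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

# The back-end web server can obtain the client's real IP from X-Real-IP / X-Forwarded-For

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

# Timeout for connecting to the back-end server (waiting for the handshake response)

proxy_connect_timeout 90;

# Timeout for sending a request to the back-end server (between two successive write operations)

proxy_send_timeout 90;

# Timeout for reading the response from the back-end server (between two successive read operations)

proxy_read_timeout 90;

# Buffer that holds the first part of the response read from the proxied server;

# by default it equals the size of one proxy_buffers buffer, but it can be set smaller

proxy_buffer_size 16k;

# Number and size of the per-connection buffers that hold the response read from the proxied server

proxy_buffers 4 64k;

proxy_busy_buffers_size 128k;

# When buffering of back-end responses to temporary files is enabled,

# limits how much data nginx writes to a temporary file at a time

proxy_temp_file_write_size 128k;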

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /usr/local/nginx/access.log main;

# Cache storage path, directory levels, shared-memory zone size, maximum disk usage, and inactivity expiry

proxy_cache_path /var/cache/nginx/proxy_cache levels=1:2 keys_zone=http-cache:500m

max_size=10g inactive=30d;

proxy_temp_path /var/cache/nginx/proxy_cache/tmp;

upstream group1 {

server 10.1.1.245:80;

server 10.1.1.246:80;

}

upstream group2 {

server 10.1.1.247:80;

server 10.1.1.248:80;

}

server {

listen 80;

server_name 10.1.1.249;

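location /G1/M00 { # load-balancing settings for group1

# If a back-end server returns 502 or 504, errors out, or times out, the request is passed

# to another server in the upstream pool, providing failover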

proxy_next_upstream http_502 http_504 error timeout invalid_header;

proxy_cache http-cache;

proxy_cache_valid 200 304 12h;

proxy_cache_key $uri$is_args$args;

proxy_pass http://group1;

expires 30d;

}

location ~* /G2/(M00|M01) { # load-balancing settings for group2

proxy_next_upstream http_502 http_504 error timeout invalid_header;

proxy_cache http-cache;

proxy_cache_valid 200 304 12h;

proxy_cache_key $uri$is_args$args;

proxy_pass http://group2;

expires 30d;

}

}

}