Automated Kubernetes Deployment with Ansible

Host list

| IP | Hostname | Role |
|---|---|---|
| 192.168.7.111 | kube-master1, kube-master1.pansn.cn | K8s cluster master node 1 |
| 192.168.7.110 | kube-master2, kube-master2.pansn.cn | K8s cluster master node 2 |
| 192.168.7.109 | kube-master3, kube-master3.pansn.cn | K8s cluster master node 3 |
| 192.168.7.108 | node1, node1.pansn.cn | K8s cluster worker node 1 |
| 192.168.7.107 | node2, node2.pansn.cn | K8s cluster worker node 2 |
| 192.168.7.106 | node3, node3.pansn.cn | K8s cluster worker node 3 |
| 192.168.7.105 | etcd-node1, etcd-node1.pansn.cn | Cluster state store (etcd) |
| 192.168.7.104 | ha1, ha1.pansn.cn | Entry point 1 to the K8s masters (high availability and load balancing) |
| 192.168.7.103 | ha2, ha2.pansn.cn | Entry point 2 to the K8s masters (high availability and load balancing) |
| 192.168.7.102 | harbor2, harbor.pansn.cn | Container image registry |
| 192.168.7.101 | harbor2, harbor.pansn.cn | VIP |

I. Environment Preparation

Operating system

Ubuntu 18.04.3

1.1. Install Ansible

1. Prepare every host

~# apt update

~# apt install python2.7

~# ln -sv /usr/bin/python2.7 /usr/bin/python

2. Install Ansible on the control node

root@k8s-master1:~# apt install ansible

3. Set up key-based SSH access from the Ansible control node to the managed hosts

root@k8s-master1:~# cat > batch-copyid.sh << 'EOF'
#!/bin/bash
#
# Simple script to batch-deliver the public key to a list of hosts.
# The heredoc delimiter is quoted so that $? and ${host} are written
# into the script literally instead of being expanded right now.
#
IP_LIST="
192.168.7.101
192.168.7.102
192.168.7.103
192.168.7.104
192.168.7.105
192.168.7.106
192.168.7.107
192.168.7.108
192.168.7.109
192.168.7.110
"
# Install sshpass only if it is missing.
if ! dpkg -l | grep -q sshpass; then
    echo "sshpass is not installed; installing it now."
    sleep 3
    apt install sshpass -y
fi
ssh-keygen -f /root/.ssh/id_rsa -P ''
for host in ${IP_LIST}; do
    sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no ${host}
    if [ $? -eq 0 ]; then
        echo "copy pubkey to ${host} done."
    else
        echo "copy pubkey to ${host} failed."
    fi
done
EOF
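Before moving on, it can help to confirm that key-based login really works on every host. The sketch below is one possible check and is not part of the original script: `unique_hosts` and `can_login` are hypothetical helper names, and it assumes the same whitespace-separated `IP_LIST` format used above.

```shell
# Print each whitespace-separated host once, keeping first-seen order.
unique_hosts() {
    # $1 is intentionally unquoted so the shell splits it into words.
    printf '%s\n' $1 | awk 'NF && !seen[$0]++'
}

# Return 0 if key-based login to host $1 works.
# BatchMode=yes makes ssh fail instead of prompting for a password.
can_login() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# Example (commented out so that loading this file has no side effects):
# for h in $(unique_hosts "$IP_LIST"); do
#     can_login "$h" || echo "key login failed: $h"
# done
```

Removing duplicates first avoids contacting the same host twice when the list contains a repeated address.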

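1.2. Time synchronization

One host acts as the time synchronization server for the others (192.168.7.111 in this setup).

1. Install chrony (required on every node)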

root@k8s-master1:# apt install chrony -y

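2. Configure the server

vim /etc/chrony/chrony.conf

# 1. Time source; inside China you can add Alibaba Cloud's NTP server
server ntp1.aliyun.com iburst

# 2. Client subnet that is allowed to synchronize
allow 192.168.7.0/24

# 3. Keep serving time to clients even when the upstream source is unreachable
local stratum 10

3. Start the service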

systemctl start chrony

systemctl enable chrony

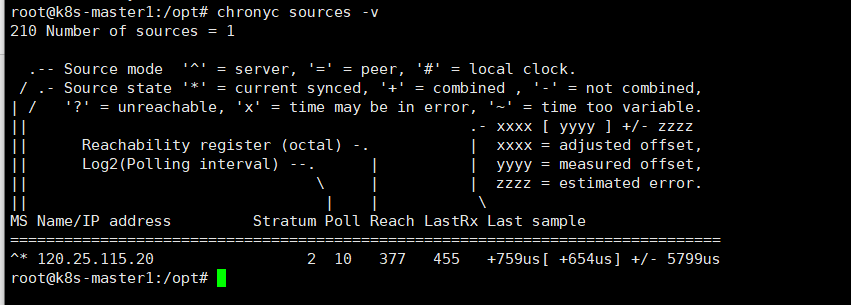

4、查看同步状态

查看 ntp_servers 状态

chronyc sources -v

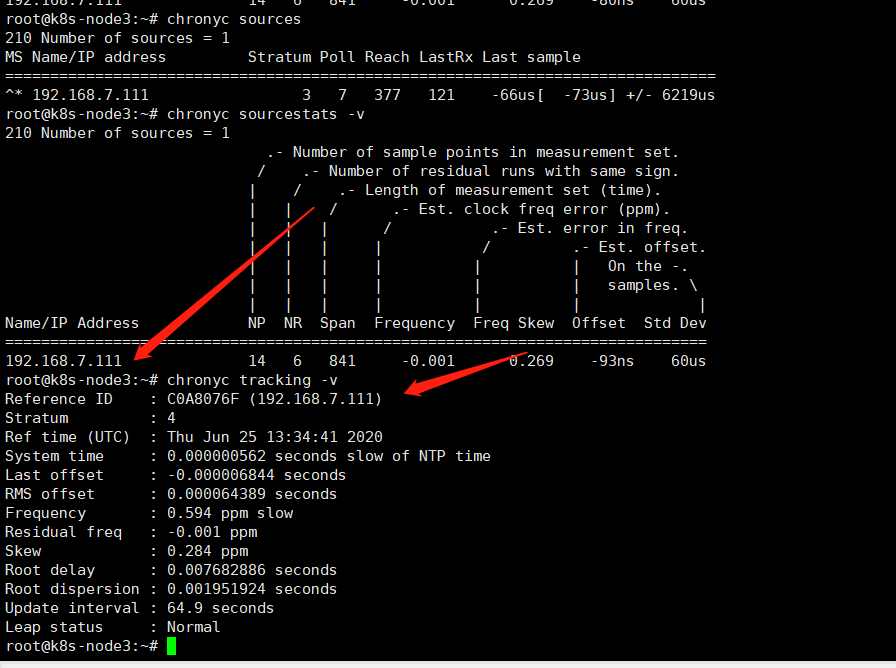

查看 ntp_sync 状态

chronyc sourcestats -v

查看 ntp_servers 是否在线

chronyc activity -v

查看 ntp 详细信息

chronyc tracking -v

节点配置

5、修改配置文件(所有节点操作)

vim /etcc/chrony/chrony.conf

server 192.168.7.111 iburst

6、启动服务

systemctl start chrony

systemctl enable chrony

7、检查同步状态

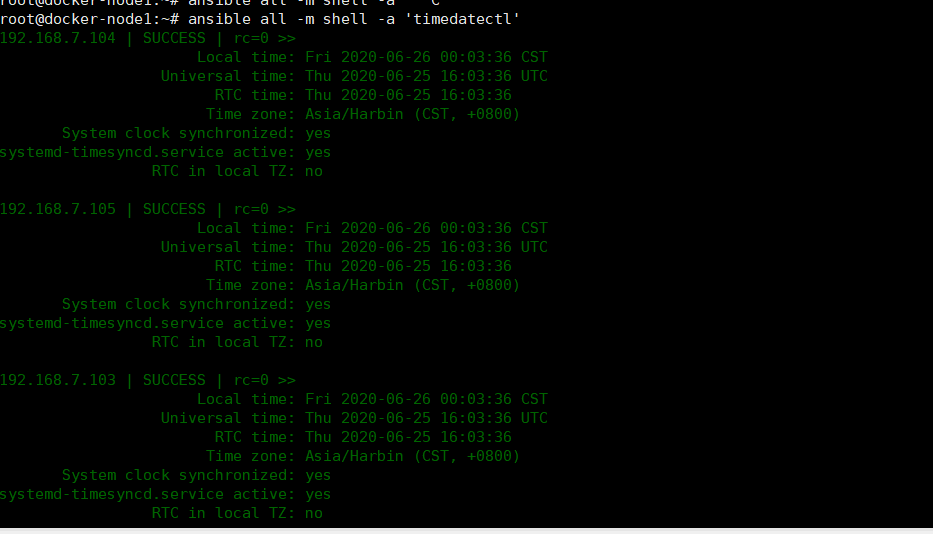

8、管理端验证时间同步是否完成

ansible all -m shell -a 'timedatectl'
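chrony's own view of the clock is available from `chronyc tracking`, which complements the `timedatectl` check. A minimal sketch, assuming the usual `Leap status : Normal` line in that output; the helper name `is_time_synced` is made up for illustration.

```shell
# Succeed when the `chronyc tracking` output on stdin reports
# "Leap status : Normal", meaning chrony considers the clock synchronized.
is_time_synced() {
    grep -Eq '^Leap status[[:space:]]*:[[:space:]]*Normal'
}

# Example usage on a single node (commented out):
# chronyc tracking | is_time_synced && echo "clock is synchronized"
#
# The same line can be collected from every host via Ansible:
# ansible all -m shell -a 'chronyc tracking | grep "Leap status"'
```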

1.3. Download the files needed to deploy the Kubernetes cluster

1. Download the script

curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/2.2.0/easzup

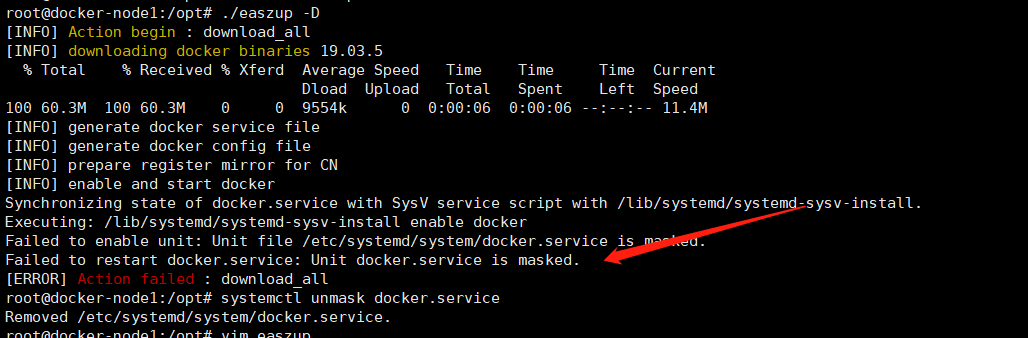

2、执行脚本

chmode +x easzup

./easzup -D

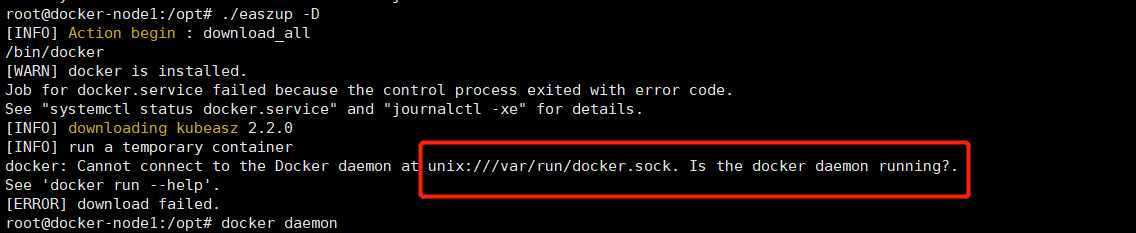

会报错

3、配置docker启动脚本

根据easzup脚本配置,新建docker启动脚本

# 尝试unmask,但是service文件被删除了?

root@kube-master1:/etc/ansible# systemctl unmask docker.service

Removed /etc/systemd/system/docker.service.
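A masked unit can be recognized by its unit file being a symlink to `/dev/null`. The following sketch checks for that; the function name `is_masked_unit_file` is an illustrative assumption, not a systemd command.

```shell
# Return 0 if the given path is a symlink that points at /dev/null,
# which is how systemd represents a masked unit.
is_masked_unit_file() {
    [ -L "$1" ] && [ "$(readlink "$1")" = "/dev/null" ]
}

# Example usage (commented out):
# if is_masked_unit_file /etc/systemd/system/docker.service; then
#     systemctl unmask docker.service
# fi
```

`systemctl is-enabled docker.service` reports the same condition by printing `masked`.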

# Open the easzup script, copy the part that generates docker.service, and write the unit file by hand

root@kube-master1:/etc/ansible# vim easzup

...

echo "[INFO] generate docker service file"

cat > /etc/systemd/system/docker.service << EOF

[Unit]

Description=Docker Application Container Engine

Documentation=http://docs.docker.io

[Service]

Environment="PATH=/opt/kube/bin:/bin:/sbin:/usr/bin:/usr/sbin"

ExecStart=/opt/kube/bin/dockerd

ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT

ExecReload=/bin/kill -s HUP $MAINPID

Restart=on-failure

RestartSec=5

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

4. Start the docker service

root@docker-node1:/opt# systemctl daemon-reload

root@docker-node1:/opt# systemctl restart docker.service

If you run easzup without starting the docker service first, it fails.

5. Run the download again

./easzup -D

root@kube-master1:/etc/ansible# curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/2.2.0/easzup

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 597 100 597 0 0 447 0 0:00:01 0:00:01 --:--:-- 447

100 12965 100 12965 0 0 4553 0 0:00:02 0:00:02 --:--:-- 30942

root@kube-master1:/etc/ansible# ls

ansible.cfg easzup hosts

root@kube-master1:/etc/ansible# chmod +x easzup

# Start the download

root@kube-master1:/etc/ansible# ./easzup -D

[INFO] Action begin : download_all

[INFO] downloading docker binaries 19.03.5

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 60.3M 100 60.3M 0 0 1160k 0 0:00:53 0:00:53 --:--:-- 1111k

[INFO] generate docker service file

[INFO] generate docker config file

[INFO] prepare register mirror for CN

[INFO] enable and start docker

Synchronizing state of docker.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable docker

Failed to enable unit: Unit file /etc/systemd/system/docker.service is masked.

Failed to restart docker.service: Unit docker.service is masked. # Error: docker.service is masked

[ERROR] Action failed : download_all # Download failed


# Write the docker.service unit file

root@kube-master1:/etc/ansible# vim /etc/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=http://docs.docker.io

[Service]

Environment="PATH=/opt/kube/bin:/bin:/sbin:/usr/bin:/usr/sbin"

ExecStart=/opt/kube/bin/dockerd

ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT

ExecReload=/bin/kill -s HUP $MAINPID

Restart=on-failure

RestartSec=5

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

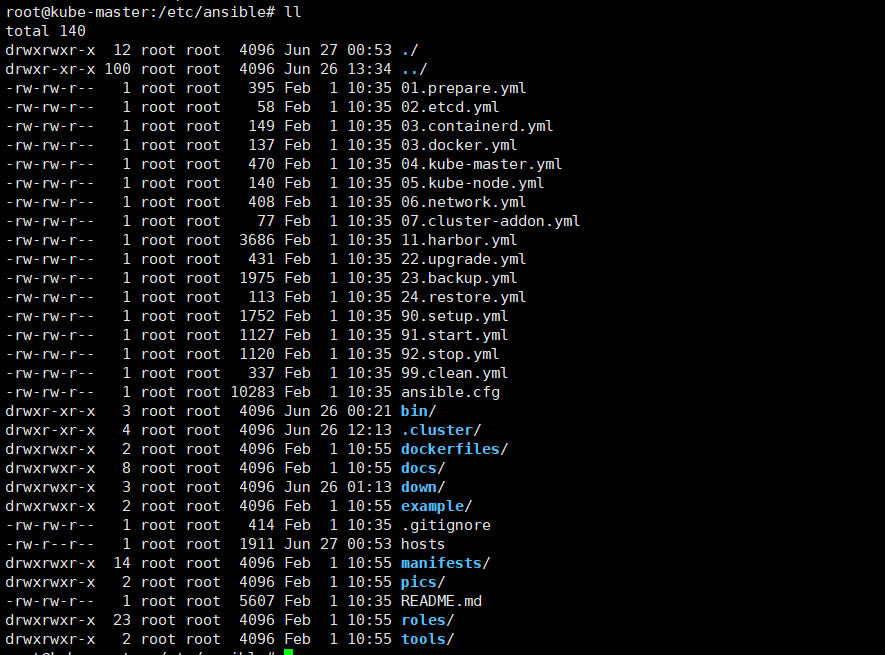

下载成功后目录和文件

二、部署Kubemetes

码云官方部署文档:https://gitee.com/ilanni/kubeasz/,建议先看一边部署文档在自己部署

在官方文档中部署分为两种部署,分步部署、一键部署,要对安装流程熟悉所以我们使用分步部署

2.1.基础参数设定

2.1.1. 必要配置

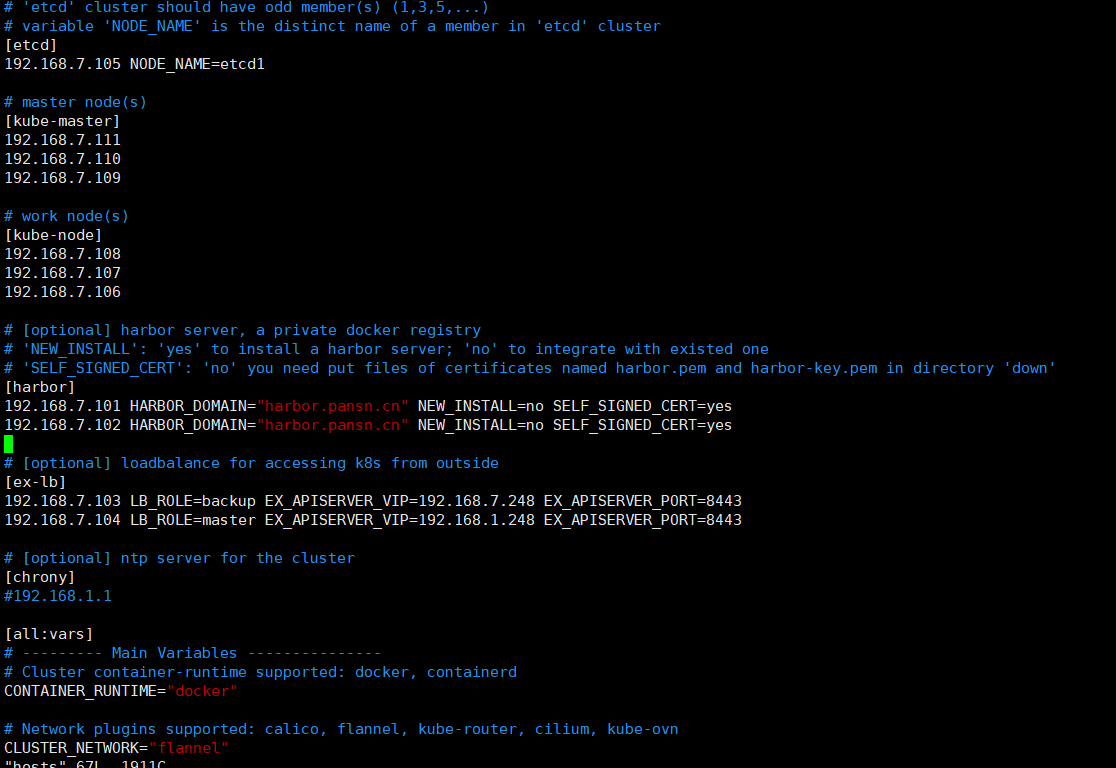

cd /etc/ansible && cp example/hosts.multi-node hosts, 然后实际情况修改此hosts文件

root@kube-master1:/etc/ansible# cp example/hosts.multi-node ./hosts

root@kube-master1:/etc/ansible# cat hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)

# variable 'NODE_NAME' is the distinct name of a member in 'etcd' cluster

[etcd]

192.168.7.105 NODE_NAME=etcd1

# master node(s)

[kube-master]

192.168.7.111

192.168.7.110

192.168.7.109

# work node(s)

[kube-node]

192.168.7.108

192.168.7.107

192.168.7.106

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'yes' to install a harbor server; 'no' to integrate with existed one

# 'SELF_SIGNED_CERT': 'no' you need put files of certificates named harbor.pem and harbor-key.pem in directory 'down'

[harbor]

192.168.7.101 HARBOR_DOMAIN="harbor.pansn.cn" NEW_INSTALL=no SELF_SIGNED_CERT=yes

192.168.7.102 HARBOR_DOMAIN="harbor.pansn.cn" NEW_INSTALL=no SELF_SIGNED_CERT=yes

# [optional] loadbalance for accessing k8s from outside

[ex-lb]

192.168.7.103 LB_ROLE=backup EX_APISERVER_VIP=192.168.7.248 EX_APISERVER_PORT=8443

192.168.7.104 LB_ROLE=master EX_APISERVER_VIP=192.168.7.248 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

[chrony]

#192.168.1.1

[all:vars]

# --------- Main Variables ---------------

# Cluster container-runtime supported: docker, containerd

CONTAINER_RUNTIME="docker"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="flannel"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.68.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="172.20.0.0/16"

# NodePort Range

NODE_PORT_RANGE="20000-40000"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="cluster.local."

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/opt/kube/bin"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/ansible"
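The inventory comments require an odd number of etcd members. As a rough sanity check before running the playbooks, the sketch below counts the hosts in one inventory group. `count_group_hosts` is a hypothetical helper, and it only understands the simple INI layout shown above.

```shell
# Count the host lines in one group of an INI-style Ansible inventory.
# $1 = group name (without brackets), $2 = inventory file
count_group_hosts() {
    awk -v group="[$1]" '
        /^\[/ { in_group = ($0 == group); next }   # a section header starts or ends the group
        in_group && NF && $0 !~ /^#/ { n++ }       # count non-empty, non-comment lines
        END { print n + 0 }
    ' "$2"
}

# Example usage (commented out):
# n=$(count_group_hosts etcd /etc/ansible/hosts)
# [ $((n % 2)) -eq 1 ] || echo "the etcd group should have an odd number of members"
```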

2.1.2.可选配置

初次使用可以不做修改,详见配置指南

主要包括集群某个具体组件的个性化配置,具体组件的配置项可能会不断增加;

可以在不做任何配置更改情况下使用默认值创建集群

可以根据实际需要配置 k8s 集群,常用举例

配置 lb 节点负载均衡算法:修改 roles/lb/defaults/main.yml 变量 BALANCE_ALG: "roundrobin"

配置 docker 国内镜像加速站点:修改 roles/docker/defaults/main.yml 相关变量

配置 apiserver 支持公网域名:修改 roles/kube-master/defaults/main.yml 相关变量

配置 flannel 使用镜像版本:修改 roles/flannel/defaults/main.yml 相关变量

配置选择不同 addon 组件:修改roles/cluster-addon/defaults/main.yml

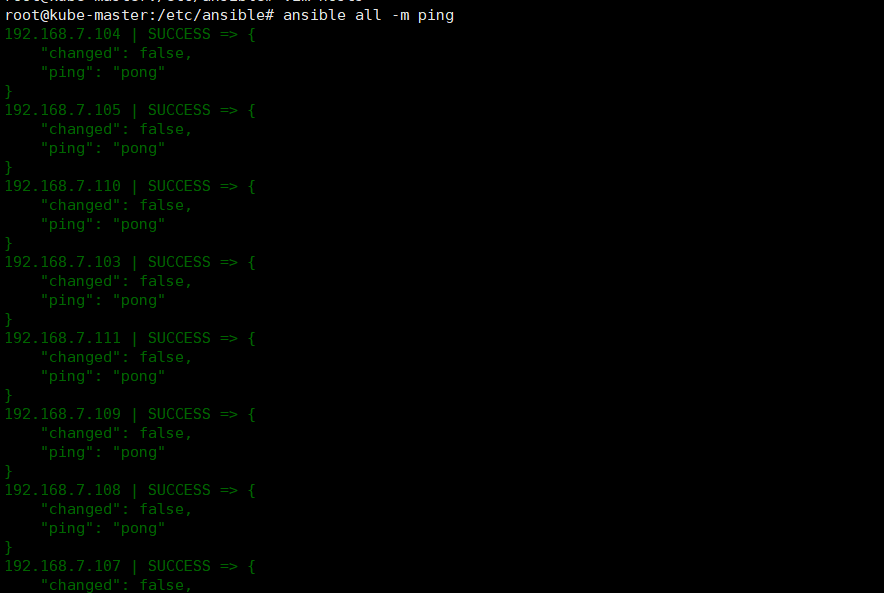

2.1.2. 验证ansible 安装

ansible all -m ping

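2.2. Create certificates and prepare the environment

Files in the deploy role:

roles/deploy/
├── defaults
│   └── main.yml                # configuration: certificate validity period, kubeconfig settings
├── files
│   └── read-group-rbac.yaml    # RBAC permissions for the read-only user
├── tasks
│   └── main.yml                # main task file
└── templates
    ├── admin-csr.json.j2       # certificate request template for the admin certificate used by kubectl
    ├── ca-config.json.j2       # CA configuration template
    ├── ca-csr.json.j2          # CA certificate signing request template
    ├── kube-proxy-csr.json.j2  # certificate request template for kube-proxy
    └── read-csr.json.j2        # certificate request template for the read-only certificate used by kubectl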

root@kube-master1:/etc/ansible# ansible-playbook 01.prepare.yml

2.3. Deploy the etcd cluster

root@kube-master1:/etc/ansible# tree roles/etcd/
roles/etcd/
├── clean-etcd.yml
├── defaults
│   └── main.yml
├── tasks
│   └── main.yml
└── templates
    ├── etcd-csr.json.j2    # edit the certificate details here before deploying if needed
    └── etcd.service.j2

root@kube-master1:/etc/ansible# ansible-playbook 02.etcd.yml

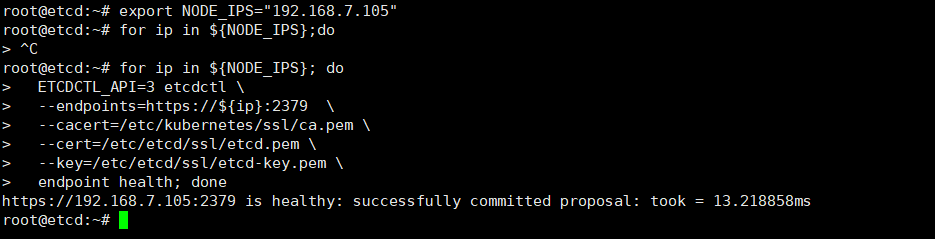

Verify etcd on any etcd node:

systemctl status etcd    # service status
journalctl -u etcd       # logs

Then run the following on any etcd cluster node:

# Set the shell variable $NODE_IPS to the etcd addresses configured in hosts
export NODE_IPS="192.168.7.105"
for ip in ${NODE_IPS}; do
  ETCDCTL_API=3 etcdctl \
  --endpoints=https://${ip}:2379 \
  --cacert=/etc/kubernetes/ssl/ca.pem \
  --cert=/etc/etcd/ssl/etcd.pem \
  --key=/etc/etcd/ssl/etcd-key.pem \
  endpoint health; done

**The cluster is working normally when every etcd endpoint reports healthy.**

2.4. Deploy the docker service

root@kube-master1:/etc/ansible# tree roles/docker/
roles/docker/
├── defaults
│   └── main.yml            # variable defaults
├── files
│   ├── docker              # bash completion
│   └── docker-tag          # small tool for listing image tags
├── tasks
│   └── main.yml            # main task file
└── templates
    ├── daemon.json.j2      # docker daemon configuration file
    └── docker.service.j2   # service unit template

root@kube-master1:/etc/ansible# ansible-playbook 03.docker.yml

Verify after a successful deployment:

~# systemctl status docker # service status

~# journalctl -u docker # logs

~# docker version

~# docker info

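2.5. Deploy the master nodes

A master node runs three main components, apiserver, scheduler and controller-manager:

- apiserver exposes the REST API for cluster management, covering authentication and authorization, data validation and cluster state changes
  - Only the API server talks to etcd directly
  - Other components query or modify data through the API server
  - It is the hub for data exchange and communication between the other components
- scheduler assigns Pods to nodes in the cluster
  - It watches kube-apiserver for Pods that have no Node assigned yet
  - It assigns nodes to those Pods according to the scheduling policy
- controller-manager consists of a set of controllers; it monitors the state of the whole cluster through the apiserver and keeps the cluster in the desired state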

roles/kube-master/
├── defaults
│   └── main.yml
├── tasks
│   └── main.yml
└── templates
    ├── aggregator-proxy-csr.json.j2
    ├── basic-auth.csv.j2
    ├── basic-auth-rbac.yaml.j2
    ├── kube-apiserver.service.j2
    ├── kube-apiserver-v1.8.service.j2
    ├── kube-controller-manager.service.j2
    ├── kubernetes-csr.json.j2
    └── kube-scheduler.service.j2

root@kube-master1:/etc/ansible# ansible-playbook 04.kube-master.yml

Verifying the masters

After ansible-playbook 04.kube-master.yml completes successfully, verify the main components on the master nodes:

# Check service status
systemctl status kube-apiserver
systemctl status kube-controller-manager
systemctl status kube-scheduler

# Check service logs
journalctl -u kube-apiserver
journalctl -u kube-controller-manager
journalctl -u kube-scheduler

Running kubectl get componentstatus shows:

root@kube-master3:~# kubectl get componentstatus
NAME                 STATUS    MESSAGE              ERROR
etcd-0               Healthy   {"health":"true"}
scheduler            Healthy   ok
controller-manager   Healthy   ok

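2.6. Deploy the worker nodes

kube-node hosts run the cluster's workloads. The kube-master nodes must be deployed first. Each node needs the following components:

- docker: runs the containers
- kubelet: the main agent on a kube-node
- kube-proxy: exposes application Services and load-balances traffic to them
- haproxy: forwards requests to the multiple apiservers; see the HA-2x architecture document
- calico: configures the container network (or another network plugin; this deployment uses flannel)

roles/kube-node/
├── defaults
│   └── main.yml                # variable defaults
├── tasks
│   ├── main.yml                # main task file
│   ├── node_lb.yml             # haproxy installation tasks
│   └── offline.yml             # offline haproxy installation
└── templates
    ├── cni-default.conf.j2     # default CNI plugin configuration template
    ├── haproxy.cfg.j2          # haproxy configuration template
    ├── haproxy.service.j2      # haproxy service unit template
    ├── kubelet-config.yaml.j2  # standalone kubelet configuration file
    ├── kubelet-csr.json.j2     # certificate request template
    ├── kubelet.service.j2      # kubelet service unit template
    └── kube-proxy.service.j2   # kube-proxy service unit template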

root@kube-master1:/etc/ansible# ansible-playbook 05.kube-node.yml

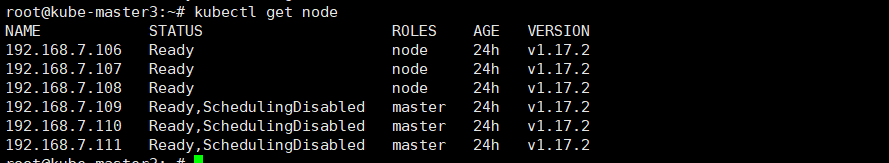

Verify node status:

systemctl status kubelet # status
systemctl status kube-proxy
journalctl -u kubelet # logs
journalctl -u kube-proxy

Run kubectl get node to list the nodes and their status.
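To spot nodes that have not become Ready, the STATUS column of `kubectl get node --no-headers` can be filtered. This is only a sketch: `not_ready_nodes` is a hypothetical helper, and the statuses mentioned in the comments are illustrative rather than captured from this cluster.

```shell
# Read `kubectl get node --no-headers` output on stdin and print the names
# of nodes whose STATUS column does not start with "Ready".
# A status such as "Ready,SchedulingDisabled" still counts as ready here.
not_ready_nodes() {
    awk '$2 !~ /^Ready/ { print $1 }'
}

# Example usage (commented out):
# kubectl get node --no-headers | not_ready_nodes
```

An empty result means every listed node reports Ready.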

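2.7. Deploy the cluster network

This walkthrough uses flannel. When all nodes sit on the same layer-2 network, flannel offers a host-gw backend that avoids the UDP encapsulation overhead of the vxlan backend, which probably makes it the most efficient option at present. Calico, aimed at L3 fabrics, offers an IP-in-IP option that tunnels traffic between nodes. Both plugins therefore suit many real-world scenarios.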

root@kube-master1:/etc/ansible# tree roles/flannel/

roles/flannel/
├── defaults
│   └── main.yml
├── tasks
│   └── main.yml
└── templates
    └── kube-flannel.yaml.j2

# Make sure the variable CLUSTER_NETWORK is set to flannel

root@kube-master1:/etc/ansible# grep "CLUSTER_NETWORK" hosts

CLUSTER_NETWORK="flannel"

root@kube-master1:/etc/ansible# ansible-playbook 06.network.yml

验证flannel网络

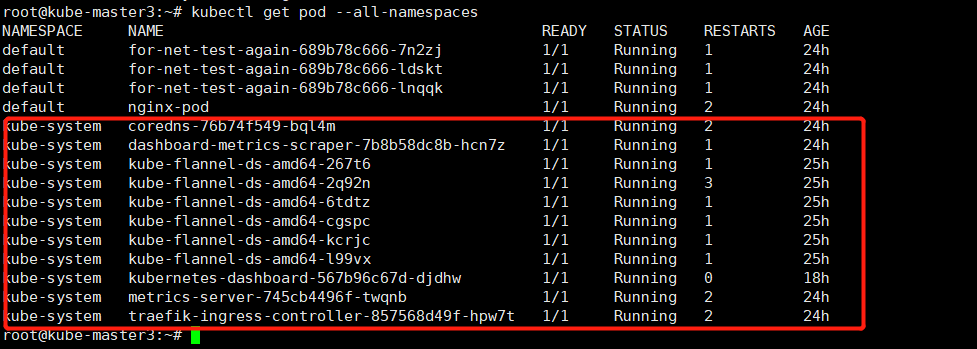

执行flannel安装成功后可以验证如下:(需要等待镜像下载完成,有时候即便上一步已经配置了docker国内加速,还是可能比较慢,请确认以下容器运行起来以后,再执行后续验证步骤)

kubectl get pod --all-namespaces

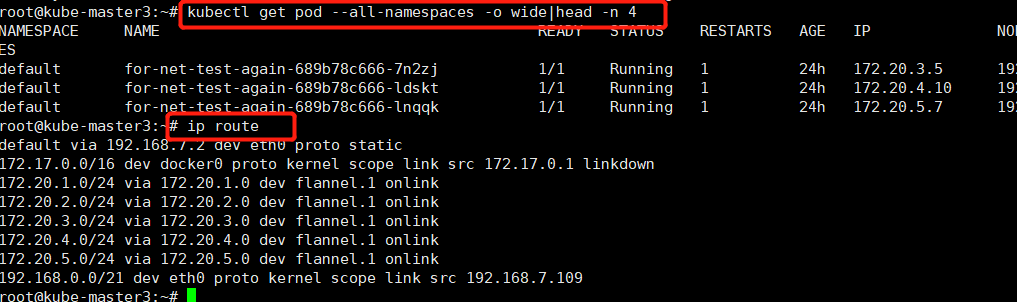

在集群创建几个测试pod: kubectl run test --image=busybox --replicas=3 sleep 30000

在各节点上分别 ping 这三个pod IP地址,确保能通

ping 172.20.3.5

ping 172.20.4.10

ping 172.50.5.7
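Pod addresses are allocated from CLUSTER_CIDR (172.20.0.0/16 in the hosts file), so an address outside that range cannot be a pod IP. The sketch below checks an IPv4 address against a CIDR using shell arithmetic; the function names `ip_to_int` and `ip_in_cidr` are assumptions for illustration.

```shell
# Convert a dotted-quad IPv4 address to an integer.
# The body runs in a subshell so the IFS change does not leak out.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Return 0 if address $1 lies inside CIDR block $2 (for example 172.20.0.0/16).
ip_in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Example usage (commented out):
# ip_in_cidr 172.20.3.5 172.20.0.0/16 && echo "inside the pod network"
```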

2.8. Deploy cluster addons

root@kube-master1:/etc/ansible# tree roles/cluster-addon/
roles/cluster-addon/
├── defaults
│   └── main.yml
├── tasks
│   ├── ingress.yml
│   └── main.yml
└── templates
    ├── coredns.yaml.j2
    ├── kubedns.yaml.j2
    └── metallb
        ├── bgp.yaml.j2
        ├── layer2.yaml.j2
        └── metallb.yaml.j2

root@kube-master1:/etc/ansible# ansible-playbook 07.cluster-addon.yml

2.9. Verify network connectivity

1. Manifest for a test Pod

root@kube-master1:/etc/ansible# cat /opt/k8s-data/pod-ex.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-pod
    image: nginx:1.16.1

2. Create the Pod

root@kube-master1:/etc/ansible# kubectl apply -f /opt/k8s-data/pod-ex.yaml

pod/nginx-pod created

root@kube-master1:/etc/ansible# kubectl get -f /opt/k8s-data/pod-ex.yaml -w

NAME READY STATUS RESTARTS AGE

nginx-pod 1/1 Running 0 8s

3. Verify network connectivity

root@kube-master1:~# kubectl exec -it nginx-pod -- bash

root@nginx-pod:/# apt update

root@nginx-pod:/# apt install iputils-ping

ping 172.20.4.9

ping 192.168.7.111

ping www.baidu.com

III. High Availability and Private Registry Deployment

3.1. Install keepalived and haproxy

(required on both load balancer nodes)

apt install keepalived haproxy

3.2. Node configuration (keepalived)

1. Configuration on the MASTER node

root@jenkins-node1:~# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 55

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.7.200 dev eth0 label eth0:2

}

}

2. Configuration on the BACKUP node

root@jenkins-node2:~# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP # changed to BACKUP

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 55

priority 80 # lower priority than the MASTER

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.7.200 dev eth0 label eth0:2 # the VIP

}

}

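3. Adjust kernel parameters

(required on all nodes)

root@jenkins-node2:~# vim /etc/sysctl.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
# Needed for haproxy to start and for VIP failover to work: ip_nonlocal_bind
# lets haproxy bind to the VIP on the node that does not currently hold it
net.ipv4.ip_forward = 1
net.ipv4.ip_nonlocal_bind = 1

4. Start the service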

systemctl restart keepalived.service

systemctl enable keepalived.service

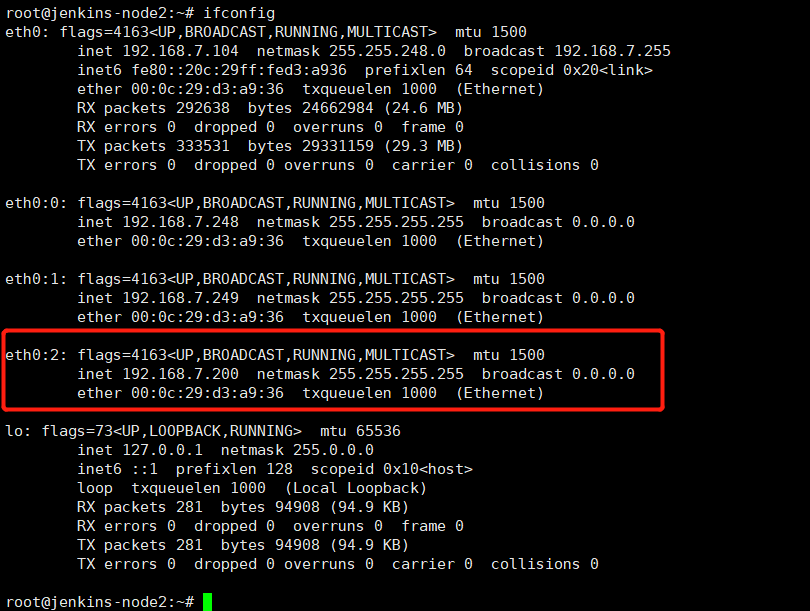

5. Verify VIP failover

Shut down the MASTER node and check whether the VIP moves to the BACKUP node.

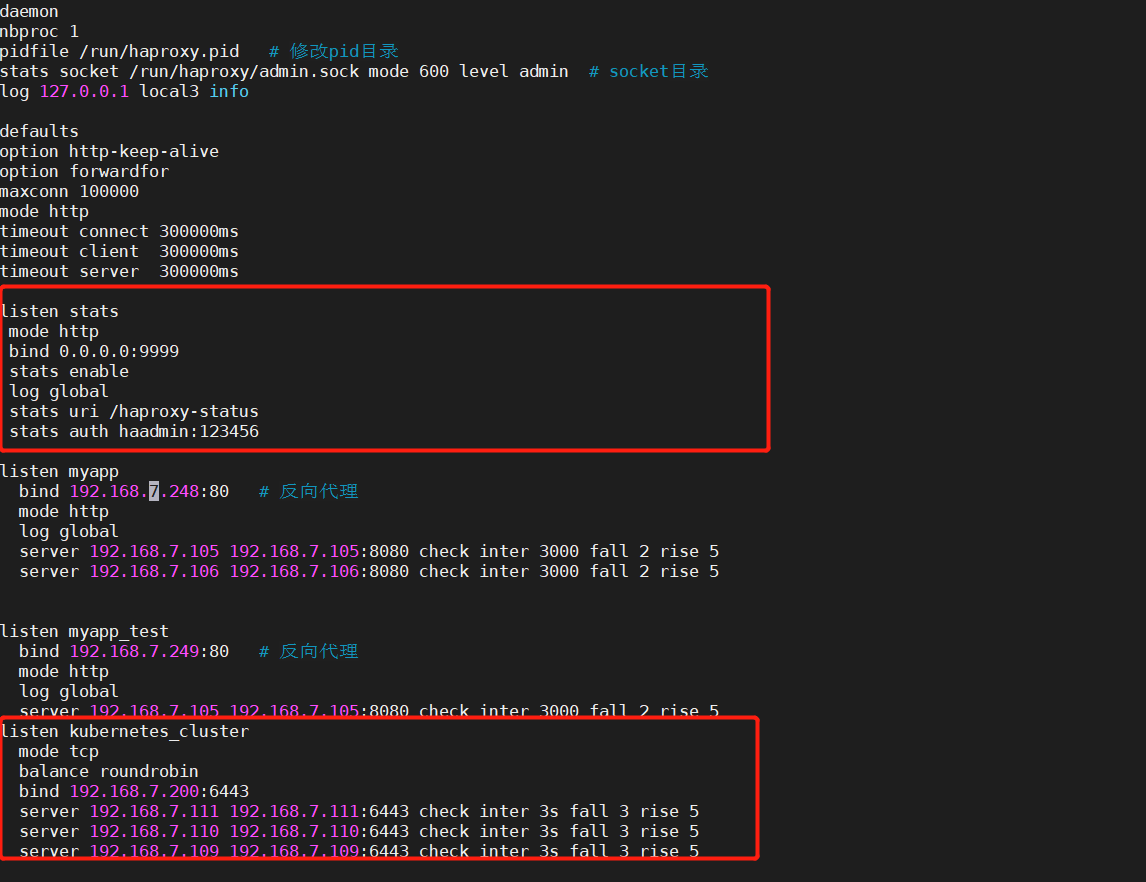
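One way to see which node currently holds the VIP is to look for it in the output of `ip -o -4 addr show`. A minimal sketch, with `holds_vip` as a hypothetical helper name and 192.168.7.200 taken from the keepalived configuration above:

```shell
# Read `ip -o -4 addr show` output on stdin and succeed if the given
# IPv4 address is configured on this host. The trailing "/" stops
# 192.168.7.20 from matching 192.168.7.200.
holds_vip() {
    grep -Fq "inet $1/"
}

# Example usage (commented out):
# ip -o -4 addr show dev eth0 | holds_vip 192.168.7.200 && echo "this node holds the VIP"
```

Running the check on both load balancer nodes before and after stopping keepalived on the MASTER shows the address moving.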

3.3. Node configuration (haproxy)

1. Edit the haproxy configuration

vim /etc/haproxy/haproxy.cfg

global

maxconn 100000

#chroot /usr/local/haproxy

uid 99

gid 99

daemon

nbproc 1

pidfile /run/haproxy.pid # pid file location

stats socket /run/haproxy/admin.sock mode 600 level admin # admin socket location

log 127.0.0.1 local3 info

defaults

option http-keep-alive

option forwardfor

maxconn 100000

mode http

timeout connect 300000ms

timeout client 300000ms

timeout server 300000ms

listen stats

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth haadmin:123456

listen kubernetes_cluster

mode tcp

balance roundrobin

bind 192.168.7.200:6443

server 192.168.7.111 192.168.7.111:6443 check inter 3s fall 3 rise 5

server 192.168.7.110 192.168.7.110:6443 check inter 3s fall 3 rise 5

server 192.168.7.109 192.168.7.109:6443 check inter 3s fall 3 rise 5
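The three `server` lines follow one pattern, so they can be generated from a list of apiserver addresses. The sketch below prints such lines with the same health-check options as above; `render_servers` is an illustrative helper, not something haproxy provides.

```shell
# Print one haproxy "server" line per apiserver address.
# $1 = backend port, remaining arguments = apiserver IPs
render_servers() {
    port=$1
    shift
    for ip in "$@"; do
        printf 'server %s %s:%s check inter 3s fall 3 rise 5\n' "$ip" "$ip" "$port"
    done
}

# Example usage (commented out):
# render_servers 6443 192.168.7.111 192.168.7.110 192.168.7.109
```

After editing the file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the configuration syntax without starting the service.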

2、启动服务

systemctl start haproxy

systemctl enable haproxy

3、查看9999端口是否起来

ps -ef|grep haproxy

4、发送配置到另外节点

scp /etc/haroxy/haroxy.cfg 192.168.7.104:/etc/haproxy

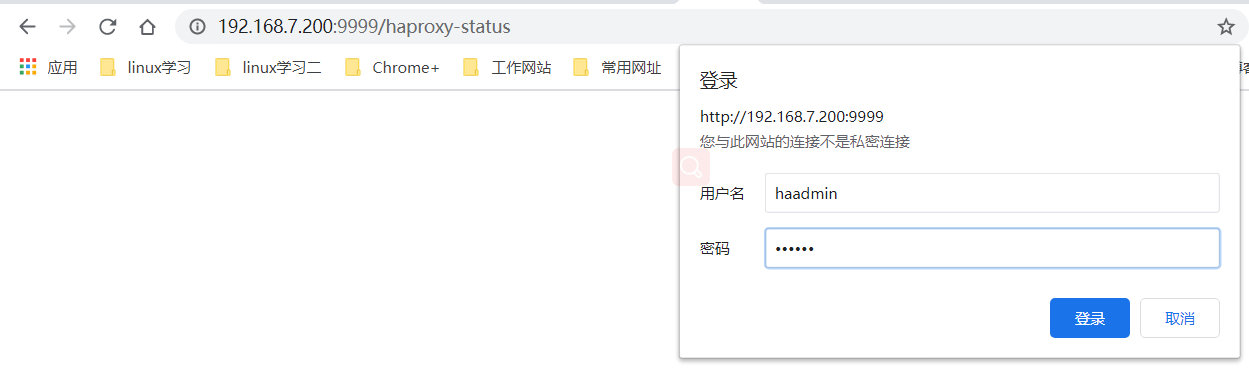

4、查看haproxy状态页

访问:vip:9999/haproxy-status

3.4. Deploy Harbor

Harbor depends on docker, so install docker first.

1. Install docker from the Ansible control node

vim /etc/ansible/hosts
ansible -i /etc/ansible/hosts harbor -m shell -a 'sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common'
ansible -i /etc/ansible/hosts harbor -m shell -a 'curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -'
ansible -i /etc/ansible/hosts harbor -m shell -a 'sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"'
ansible -i /etc/ansible/hosts harbor -m shell -a 'apt-cache madison docker-ce'

Pick the version you need and install it:

ansible -i /etc/ansible/hosts harbor -m shell -a 'apt-get -y install docker-ce=5:19.03.4~3-0~ubuntu-bionic'

Start the service:

systemctl start docker

systemctl enable docker

2、部署docker-compose

apt install python-pip –y

pip install --upgrade pip

pip install docker-compose

2、生成SSL证书

apt install openssl

sudo apt-get install libssl-dev

mkdir /usr/local/src/harbor/certs/ -pv

生产key私钥

openssl genrsa -out /usr/local/src/harbor/certs/harbor-ca.key 2048

生成证书

openssl req -x509 -new -nodes -key /usr/local/src/harbor/certs/harbor-ca.key -subj "/CN=harbor.pansn.cn" -days 7120 -out /usr/local/src/harbor/certs/harbor-ca.crt

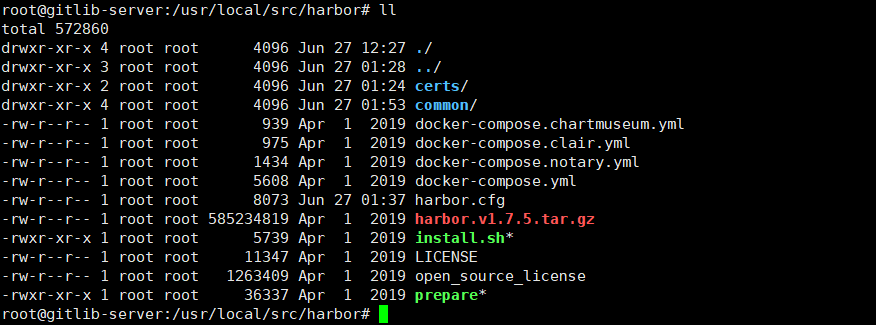

3、部署harbor

cd /usr/local/src

tar xf harbor-offline-installer-v1.7.5.tgz

4、修改harbor配置

vim harbor.cfg

hostname = harbor.pansn.cn

ui_url_protocol = https

ssl_cert = /usr/local/src/harbor/certs/harbor-ca.crt

ssl_cert_key = /usr/local/src/harbor/certs/harbor-ca.key

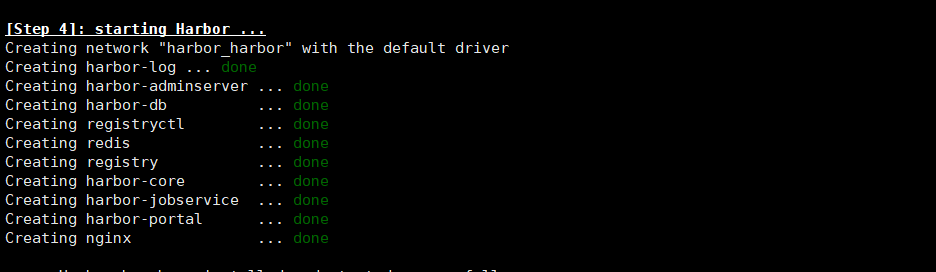

5、执行安装harbor

# ./install.sh #执行安装harbor

有上图即可成功

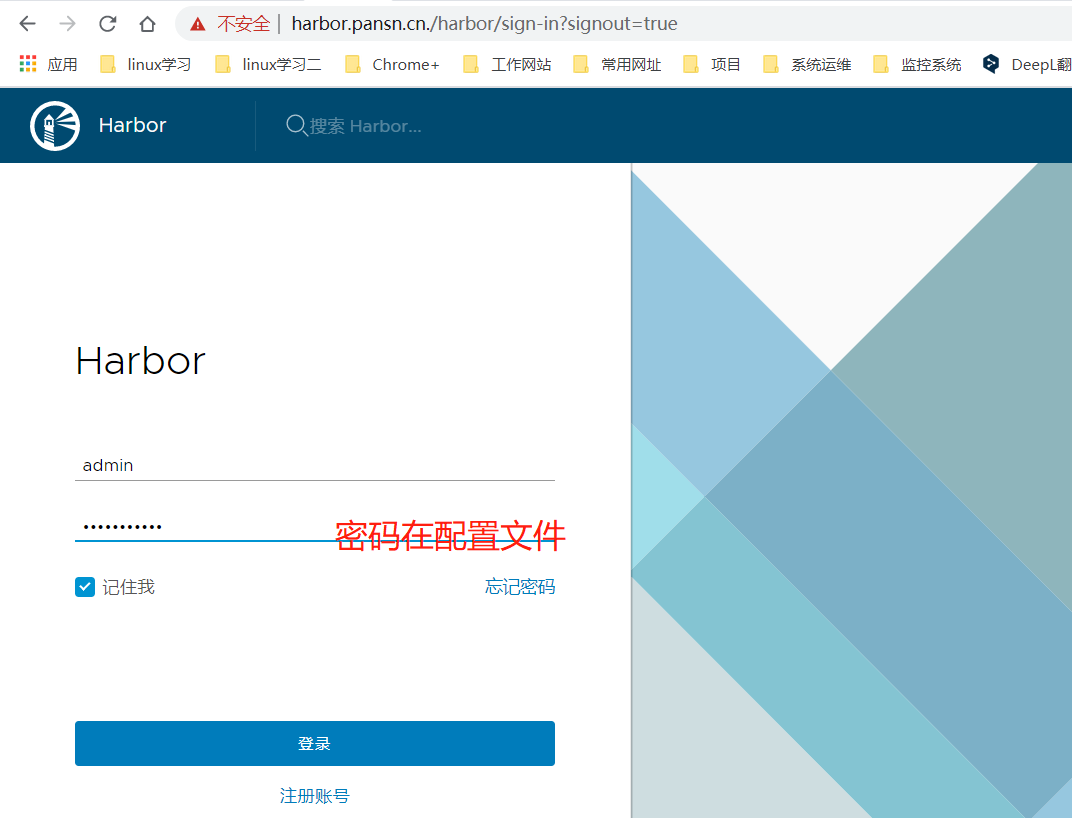

6、登陆

账号:admin

密码:Harbor12345

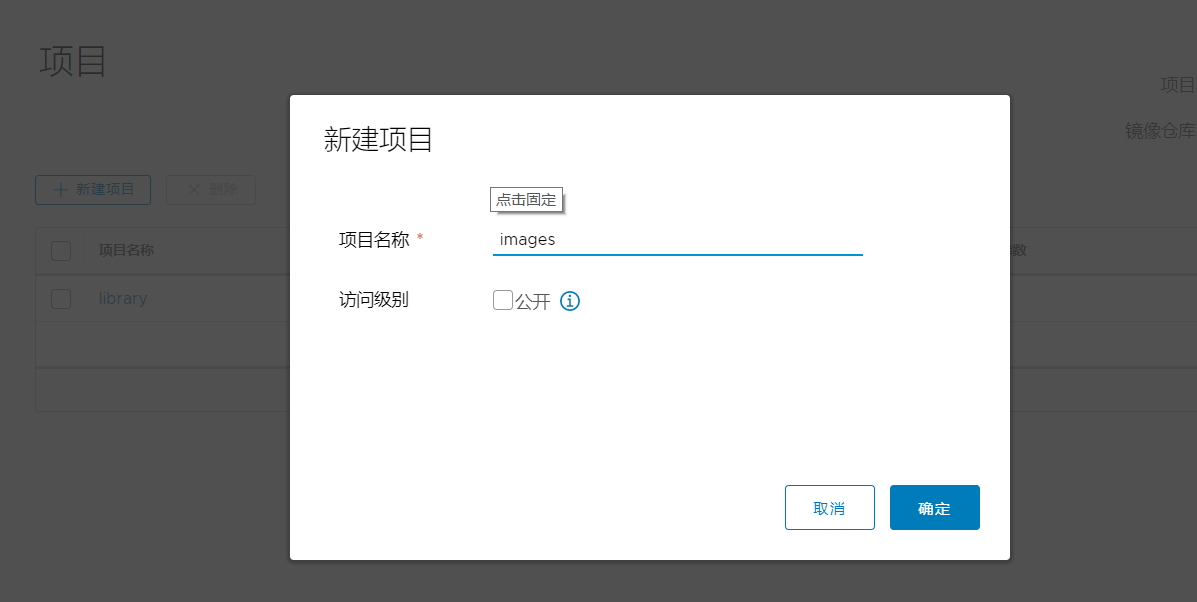

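3.4.1. Create an images project

Private project: both push and pull require login.

Public project: push requires login, pull does not. Projects are private by default.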

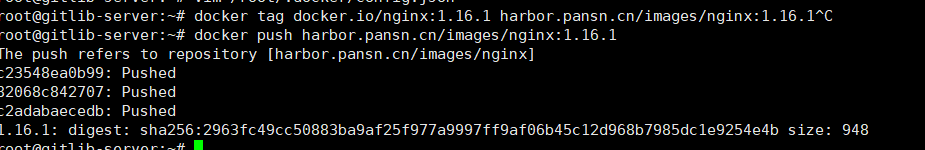

3.4.2. Push an image

Tag the nginx image and push it to Harbor:

# docker tag docker.io/nginx:1.16.1 harbor.pansn.cn/images/nginx:1.16.1

# docker push harbor.pansn.cn/images/nginx:1.16.1
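The target name has the form registry/project/image:tag. A small sketch that derives it from the source image name; the helper `harbor_ref` is hypothetical and simply keeps the last path component of the source reference.

```shell
# Build the Harbor image reference for a source image.
# $1 = registry host, $2 = Harbor project, $3 = source image reference
harbor_ref() {
    registry=$1
    project=$2
    src=$3
    # ${src##*/} strips everything up to the last "/", leaving "name:tag".
    printf '%s/%s/%s\n' "$registry" "$project" "${src##*/}"
}

# Example usage (commented out):
# target=$(harbor_ref harbor.pansn.cn images docker.io/nginx:1.16.1)
# docker tag docker.io/nginx:1.16.1 "$target" && docker push "$target"
```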

3.4.3. Sync the Harbor login certificate to every node
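One common way to do this, assuming the self-signed certificate /usr/local/src/harbor/certs/harbor-ca.crt generated earlier: docker trusts a registry's CA certificate when it is placed at /etc/docker/certs.d/<registry host>/ca.crt on the client. The helper names below are assumptions, and the second function only prints the commands instead of running them.

```shell
# Path where docker looks for the CA certificate of a given registry host.
registry_ca_path() {
    printf '/etc/docker/certs.d/%s/ca.crt\n' "$1"
}

# Print (do not run) the commands that would copy the certificate to each node.
# $1 = local certificate file, $2 = registry domain, remaining arguments = node addresses
print_sync_commands() {
    cert=$1
    domain=$2
    shift 2
    dest=$(registry_ca_path "$domain")
    for node in "$@"; do
        printf 'ssh %s mkdir -p %s\n' "$node" "$(dirname "$dest")"
        printf 'scp %s %s:%s\n' "$cert" "$node" "$dest"
    done
}

# Example usage (commented out):
# print_sync_commands /usr/local/src/harbor/certs/harbor-ca.crt harbor.pansn.cn \
#     192.168.7.108 192.168.7.107 192.168.7.106
```

Each node also has to resolve harbor.pansn.cn, for example through an /etc/hosts entry, before `docker login harbor.pansn.cn` can succeed.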

Troubleshooting summary

docker: Cannot connect to the Docker daemon at unix:///var/run/docker.sock.

Time synchronization was not set up properly.