Pyspider Crawler Tutorial

I. Installation

1. Install pip

(1) Prerequisites

    yum install -y make gcc-c++ python-devel libxml2-devel libxslt-devel

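(2) Install setuptools

Download and unpack the source archive from https://pypi.python.org/pypi/setuptools/, then run the following in the unpacked directory:

    python setup.py install

(3) Install pip

Download and unpack the source archive from https://pypi.python.org/pypi/pip, then run the following in the unpacked directory:

    python setup.py install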

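2. Install pyspider

(1) Install pyspider and its dependencies

    pip install pyspider

OR, to pull in all optional dependencies as well (the --allow-all-external flag was only needed by older pip releases; if your pip rejects it, drop the flag):

    pip install --allow-all-external pyspider[all]

3. Install optional libraries

    pip install rsa

4. PhantomJS

Download the PhantomJS binary and copy it to /bin/.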

II. Deploying the pyspider Server

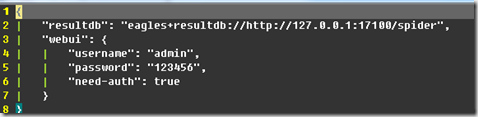

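(1) Configure pyspider.conf

Configure the eagles engine and the result database, and set the username, password, and so on.

(2) Run the install.sh installation script.

(3) Start the service with /etc/init.d/pyspider start.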

III. Usage

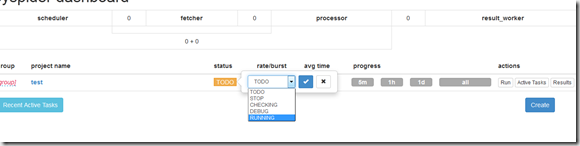

(1) Open ip:5000 (the server's IP address, port 5000) in a browser to reach the web client directly.

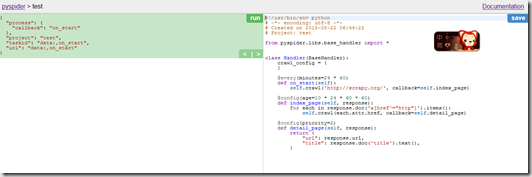

(2) Click the button to create a script.

(3) Write the script and debug it directly in the page.

(4) Select "running" and click Run.

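IV. Crawler Tutorial (1): Scraping a Simple Static Page

Static pages are the easiest to scrape: fetch the HTML page and extract the content from its tags. For example:

Scraping news articles from Guiyang Evening News (贵阳晚报): http://www.gywb.com.cn/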

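    # The Handler class and the entry point
    class Handler(BaseHandler):
        crawl_config = {
        }

        @every(minutes=24 * 60)  # run on_start once a day
        def on_start(self):
            for url in urlList:
                self.crawl(url, callback=self.index_page)

self.crawl fetches the Guiyang Evening News home page URL and hands the response to the callback index_page.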

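        # Callback index_page
        # @config(age=...): a fetched page is considered fresh for 10 days and is not re-crawled
        # response.url: the URL that was fetched
        # response.doc: a PyQuery object; call it with a selector to get the matching elements
        # Matching links are crawled again and handed to detail_page
        @config(age=10 * 24 * 60 * 60)
        def index_page(self, response):
            for each in response.doc('a[href^="http"]').items():
                for url in urlList:
                    # keep article links only: they contain "<url>content/" (string
                    # concatenation) and have no "#" in the href
                    if ('%s%s' % (url, 'content/') in each.attr.href) and \
                            ("#" not in each.attr.href):
                        self.crawl(each.attr.href, callback=self.detail_page)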
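The link filter inside index_page can be tried on its own, without a running pyspider instance. The following is a minimal sketch of the same test; the helper name is_article_link and the sample URLs are made up for illustration and are not part of the original script.

```python
def is_article_link(href, base_urls):
    """Return True if href looks like an article link under one of base_urls.

    Mirrors the condition used in index_page: the href must contain
    "<base>content/" and must not contain a "#".
    """
    if not href or '#' in href:
        return False
    return any(('%s%s' % (base, 'content/')) in href for base in base_urls)


# Hypothetical sample links, for illustration only
base_urls = ["http://www.gywb.com.cn/"]
print(is_article_link("http://www.gywb.com.cn/content/2015-05/12/a.htm", base_urls))
print(is_article_link("http://www.gywb.com.cn/content/2015-05/12/a.htm#top", base_urls))
print(is_article_link("http://www.gywb.com.cn/about.htm", base_urls))
```

Keeping the test in a small function like this makes it easy to adjust the rule before pasting it back into the handler.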

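The detail page callback detail_page extracts the article title, article content, publish time, and so on.

        # @config(priority=...): scheduling priority; higher values are scheduled first
        @config(priority=2)
        def detail_page(self, response):
            # article title; artTitleSelector1 is a PyQuery (CSS) selector,
            # e.g. h1[class="g-content-t text-center"]
            artTitle = response.doc(artTitleSelector1).text().strip()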

The complete code is as follows:

    #!/usr/bin/env python
    # -*- encoding: utf-8 -*-
    # Created on 2015-05-12 10:41:03
    # Project: GYWB

    from pyspider.libs.base_handler import *
    import re

    urlList = ["http://www.gywb.com.cn/"]

    # Only articles whose content contains one of these keywords are returned
    keyWords = [
        # u"贵阳",
        # u"交通",
        u"违章",
        u"交警",
        u"交通管理",
        # u"交通管理局",
        u"交管局",
    ]

    # article title
    artTitleSelector1 = 'h1[class="g-content-t text-center"]'
    artTitleSelector2 = 'div[class="detail_title_yy"] h1'

    # article content
    artContentSelector1 = 'div[class="g-content-c"] p'
    artContentSelector2 = 'div[class="detailcon"] p'

    # publish time
    artPubTimeSelector1 = '#pubtime_baidu'
    artPubTimeFilter1 = r'[^\d]*'     # leading non-digit text in front of the date
    artPubTimeSelector2 = '.detail_more'
    artPubTimeFilter2 = r'[\d\-: ]*'  # digits, "-", ":" and spaces, i.e. the date and time

    class Handler(BaseHandler):
        crawl_config = {
        }

        @every(minutes=24 * 60)
        def on_start(self):
            for url in urlList:
                self.crawl(url, callback=self.index_page)

        @config(age=10 * 24 * 60 * 60)
        def index_page(self, response):
            for each in response.doc('a[href^="http"]').items():
                for url in urlList:
                    if ('%s%s' % (url, 'content/') in each.attr.href) and \
                            ("#" not in each.attr.href):
                        self.crawl(each.attr.href, callback=self.detail_page)

        @config(priority=2)
        def detail_page(self, response):
            # keep following the links found on the article page
            for each in response.doc('a[href^="http"]').items():
                self.crawl(each.attr.href, callback=self.index_page)

            # article title: try the first page layout, then fall back to the second
            artTitle = response.doc(artTitleSelector1).text().strip()
            if artTitle == '':
                artTitle = response.doc(artTitleSelector2).text().strip()
                if artTitle == '':
                    return None

            # article content
            artContent = response.doc(artContentSelector1).text().strip()
            if artContent == '':
                artContent = response.doc(artContentSelector2).text().strip()

            # publish time: strip the leading non-digit text, then keep the date part
            artPubTime = response.doc(artPubTimeSelector1).text().strip()
            if artPubTime != '':
                match = re.match(artPubTimeFilter1, artPubTime)
                if match is not None:
                    artPubTime = artPubTime[len(match.group()):]
            else:
                artPubTime = response.doc(artPubTimeSelector2).text().strip()
                match = re.match(artPubTimeFilter1, artPubTime)
                if match is not None:
                    artPubTime = artPubTime[len(match.group()):]
                match = re.search(artPubTimeFilter2, artPubTime)
                if match is not None:
                    artPubTime = match.group()
            artPubTime = artPubTime.strip()

            # return the article only if it mentions at least one keyword
            for word in keyWords:
                if word in artContent:
                    return {
                        # "url": response.url,
                        # "title": response.doc('title').text(),
                        "title": artTitle,
                        "time": artPubTime,
                        "content": artContent,
                    }
            return None
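The publish-time clean-up in detail_page is easier to follow in isolation. The sketch below applies the same two steps to a plain string, assuming the two filter patterns are meant to be the digit-based regular expressions `[^\d]*` and `[\d\-: ]*`. The function name extract_pub_time and the sample text are hypothetical.

```python
import re

PREFIX_FILTER = r'[^\d]*'       # everything before the first digit
DATETIME_FILTER = r'[\d\-: ]*'  # digits, "-", ":" and spaces


def extract_pub_time(text):
    """Strip the non-digit prefix, then keep the leading date/time characters."""
    text = text.strip()
    prefix = re.match(PREFIX_FILTER, text)   # always matches, possibly empty
    text = text[len(prefix.group()):]
    match = re.search(DATETIME_FILTER, text)
    return match.group().strip()


# Hypothetical sample text, for illustration only
print(extract_pub_time("Published: 2015-05-12 10:41:03 Source: GYWB"))
```

Because both patterns use `*`, they also match the empty string, so a text with no digits simply yields an empty result instead of raising an error.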

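V. Crawler Tutorial (2): Pages Behind HTTP Requests (e.g. Scraping After Login)

Example: the Xcar (爱卡汽车) forum: http://a.xcar.com.cn/bbs/forum-d-303.html

Logging in requires a username and password, and well-built sites encrypt or encode both before submitting them. So the only option is to simulate the login: find out how the username and password are encoded, submit the same request the browser would, and then obtain the cookies that the browser would receive.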

    class Handler(BaseHandler):
        crawl_config = {
        }

        @every(minutes=24 * 60)
        def on_start(self):
            cookies = getCookies()  # obtain the login cookies
            for url in URL_LIST:
                # pass the cookies in so that the request is treated as logged in
                self.crawl(url, cookies=cookies, callback=self.index_page)

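So how do we obtain the cookies?

(1) Capture the data submitted by the login POST request. The Firefox HttpFox add-on or Wireshark can be used to capture the packets.

The capture here was made with the Firefox HttpFox add-on.

As the capture shows, post_data contains username and password, plus chash, dhash, and other fields; these values have of course already been encrypted.

So the program needs to build the same post_data: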

        def getPostData(self):
            url = self.login_url.strip()  # login URL
            if not re.match(r'^http://', url):
                return None, None
            req = urllib2.Request(url)
            resp = urllib2.urlopen(req)
            login_page = resp.read()

            # read the form fields from the login page HTML
            doc = HTML.fromstring(login_page)
            post_url = doc.xpath("//form[@name='login' and @id='login']/@action")[0][1:]
            chash = doc.xpath("//input[@name='chash' and @id='chash']/@value")[0]
            dhash = doc.xpath("//input[@name='dhash' and @id='dhash']/@value")[0]
            ehash = doc.xpath("//input[@name='ehash' and @id='ehash']/@value")[0]
            formhash = doc.xpath("//input[@name='formhash']/@value")[0]
            loginsubmit = doc.xpath("//input[@name='loginsubmit']/@value")[0].encode('utf-8')
            cookietime = doc.xpath("//input[@name='cookietime' and @id='cookietime']/@value")[0]
            username = self.account           # account name
            password = self.encoded_password  # password (already encoded)

            # assemble post_data
            post_data = urllib.urlencode({
                'username': username,
                'password': password,
                'chash': chash,
                'dhash': dhash,
                'ehash': ehash,
                'loginsubmit': loginsubmit,
                'formhash': formhash,
                'cookietime': cookietime,
            })
            return post_url, post_data
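The same idea (read the form fields from the login page, then add the credentials and URL-encode everything) can be sketched with only the Python 3 standard library. This is an illustration rather than the method used in this tutorial, which relies on lxml and Python 2's urllib. The class FormInputParser, the function build_post_data, and the sample form are all hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlencode


class FormInputParser(HTMLParser):
    """Collect the name/value pair of every <input> that has a name."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag == 'input':
            attrs = dict(attrs)
            if 'name' in attrs:
                # inputs without a value attribute are stored as ''
                self.fields[attrs['name']] = attrs.get('value') or ''


def build_post_data(page_html, username, password):
    """Return URL-encoded POST data: form fields plus the credentials."""
    parser = FormInputParser()
    parser.feed(page_html)
    fields = dict(parser.fields)
    fields['username'] = username
    fields['password'] = password
    return urlencode(fields)


# Hypothetical login form, for illustration only
SAMPLE_LOGIN_PAGE = """
<form name="login" id="login" action="/logging.php?action=login" method="post">
  <input type="hidden" name="formhash" value="abc123">
  <input type="hidden" name="chash" id="chash" value="c1">
  <input type="text" name="username">
  <input type="password" name="password">
</form>
"""

print(build_post_data(SAMPLE_LOGIN_PAGE, 'alice', 'secret'))
```

Collecting every named input, instead of listing chash, dhash, and the rest one by one, keeps the sketch short, at the cost of also sending fields the server may not need.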

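        # submit post_data to simulate the login
        def login(self):
            post_url, post_data = self.getPostData()
            if post_url is None:
                # getPostData rejected the login URL
                return False
            post_url = self.post_url_prefix + post_url
            req = urllib2.Request(url=post_url, data=post_data)
            resp = urllib2.urlopen(req)
            # note: the response is not inspected, so True only means the request was sent
            return True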

    # Cookies are read from a local browser-format (Mozilla) cookie file
    # The file name contains the MD5 hash of the account name
    COOKIES_FILE = '/tmp/pyspider.xcar.%s.cookies' % hashlib.md5(ACCOUNT).hexdigest()
    COOKIES_DOMAIN = 'xcar.com.cn'

    def getCookies():
        CookiesJar = cookielib.MozillaCookieJar(COOKIES_FILE)
        if not os.path.isfile(COOKIES_FILE):
            CookiesJar.save()
        CookiesJar.load(COOKIES_FILE)

        CookieProcessor = urllib2.HTTPCookieProcessor(CookiesJar)
        CookieOpener = urllib2.build_opener(CookieProcessor, urllib2.HTTPHandler)
        for item in HTTP_HEADERS:
            CookieOpener.addheaders.append((item, HTTP_HEADERS[item]))
        urllib2.install_opener(CookieOpener)

        if len(CookiesJar) == 0:
            xc = xcar(ACCOUNT, ENCODED_PASSWORD, LOGIN_URL, POST_URL_PREFIX)
            if xc.login():  # login succeeded: save the cookies
                CookiesJar.save()
            else:
                return None

        CookiesDict = {}
        # keep only the cookies that belong to this site's login
        for cookie in CookiesJar:
            if COOKIES_DOMAIN in cookie.domain:
                CookiesDict[cookie.name] = cookie.value
        return CookiesDict
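The last step of getCookies, turning a cookie jar into a plain dict restricted to one domain, can be tried without any network access. The sketch below uses Python 3's http.cookiejar (the successor of the cookielib module used in this tutorial). The helpers make_cookie and cookies_for_domain and the cookie values are invented for the demonstration.

```python
from http.cookiejar import Cookie, CookieJar


def make_cookie(name, value, domain):
    """Build a minimal session cookie for the demo (hypothetical helper)."""
    return Cookie(
        version=0, name=name, value=value,
        port=None, port_specified=False,
        domain=domain, domain_specified=True,
        domain_initial_dot=domain.startswith('.'),
        path='/', path_specified=True,
        secure=False, expires=None, discard=True,
        comment=None, comment_url=None, rest={},
    )


def cookies_for_domain(jar, domain):
    """Return {name: value} for every cookie whose domain contains `domain`."""
    return {c.name: c.value for c in jar if domain in c.domain}


jar = CookieJar()
jar.set_cookie(make_cookie('sid', 'abc123', '.xcar.com.cn'))
jar.set_cookie(make_cookie('tracker', 'zzz', '.example.com'))

# Only the cookie set for the forum's domain is kept
print(cookies_for_domain(jar, 'xcar.com.cn'))
```

A plain dict is the form that the cookies argument of self.crawl receives in this tutorial, which is why the jar is flattened this way.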

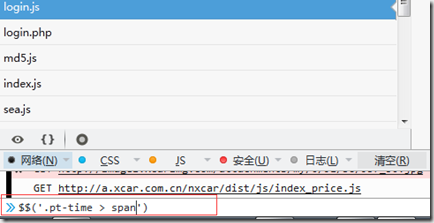

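How do we find out how the username and password are encoded? By reading the site's JavaScript files.

Look at the login script login.js and the form page login.php.

Finding: username is Base64-encoded; password is hashed with MD5 first and then Base64-encoded.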
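As a rough illustration of those two encodings, here is a Python 3 sketch. It assumes that the hexadecimal MD5 digest is what gets Base64-encoded; the site's login.js would need to be checked to confirm this. The function names are hypothetical.

```python
import base64
import hashlib


def encode_username(username):
    """Base64-encode the username."""
    return base64.b64encode(username.encode('utf-8')).decode('ascii')


def encode_password(password):
    """MD5-hash the password, then Base64-encode the hex digest (assumed order)."""
    md5_hex = hashlib.md5(password.encode('utf-8')).hexdigest()
    return base64.b64encode(md5_hex.encode('ascii')).decode('ascii')


print(encode_username('ZhangZujian'))
print(encode_password('my-password'))
```

Note that Base64 is a reversible encoding and MD5 is a one-way hash, so neither protects the password the way real encryption would; they only determine what the login request has to look like.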

The complete code is as follows:

    #!/usr/bin/env python
    # -*- encoding: utf-8 -*-
    # Created on 2015-05-14 17:39:36
    # Project: test_xcar
    # Note: written for Python 2 (urllib2, cookielib)

    from pyspider.libs.base_handler import *
    from pyspider.libs.response import *
    from pyquery import PyQuery

    import os
    import re
    import urllib
    import urllib2
    import cookielib
    import lxml.html as HTML
    import hashlib

    URL_LIST = ['http://a.xcar.com.cn/bbs/forum-d-303.html']

    THREAD_LIST_URL_FILTER = 'bbs/forum-d-303'
    THREAD_LIST_URL_REG = r'bbs/forum-d-303(-\w+)?\.'

    ACCOUNT = 'ZhangZujian'
    # 32-character MD5 hash
    ENCODED_PASSWORD = 'e3d541408adb57f4b40992202c5018d8'

    LOGIN_URL = 'http://my.xcar.com.cn/logging.php?action=login'
    POST_URL_PREFIX = 'http://my.xcar.com.cn/'

    THREAD_URL_REG = r'bbs/thread-\w+-0'
    THREAD_URL_HREF_FILTER = 'bbs/thread-'
    THREAD_URL_CLASS_LIST = ['prev', 'next']

    THREAD_THEME_SELECTOR = 'h2'
    POST_ITEM_SELECTOR = '.posts-con > div'
    POST_TIME_SELECTOR = '.pt-time > span'
    POST_MEMBER_SELECTOR = '.pt-name'
    POST_FLOOR_SELECTOR = '.pt-floor > span'
    POST_CONTENT_SELECTOR = '.pt-cons'
    # THREAD_REPLY_SELECTOR = ''

    # !!! Notice !!!
    # Tasks that share the same account MUST share the same cookies file
    COOKIES_FILE = '/tmp/pyspider.xcar.%s.cookies' % hashlib.md5(ACCOUNT).hexdigest()
    COOKIES_DOMAIN = 'xcar.com.cn'

    # USERAGENT_STR = 'Mozilla/5.0 (iPhone; CPU iPhone OS 8_0 like Mac OS X) AppleWebKit/600.1.4 (KHTML, like Gecko) Version/8.0 Mobile/12A366 Safari/600.1.4'

    HTTP_HEADERS = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
        # 'Accept-Encoding': 'gzip, deflate, sdch',
        'Accept-Language': 'zh-CN,zh;q=0.8,en;q=0.6',
        'Connection': 'keep-alive',
        'DNT': '1',
        'Host': 'my.xcar.com.cn',
        'Referer': 'http://a.xcar.com.cn/',
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/42.0.2311.135 Safari/537.36',
    }

    class xcar(object):
        def __init__(self, account, encoded_password, login_url, post_url_prefix):
            self.account = account
            self.encoded_password = encoded_password
            self.login_url = login_url
            self.post_url_prefix = post_url_prefix

        def login(self):
            post_url, post_data = self.getPostData()
            post_url = self.post_url_prefix + post_url
            req = urllib2.Request(url=post_url, data=post_data)
            resp = urllib2.urlopen(req)
            return True

        def getPostData(self):
            url = self.login_url.strip()
            if not re.match(r'^http://', url):
                return None, None
            req = urllib2.Request(url)
            resp = urllib2.urlopen(req)
            login_page = resp.read()

            doc = HTML.fromstring(login_page)
            post_url = doc.xpath("//form[@name='login' and @id='login']/@action")[0][1:]
            chash = doc.xpath("//input[@name='chash' and @id='chash']/@value")[0]
            dhash = doc.xpath("//input[@name='dhash' and @id='dhash']/@value")[0]
            ehash = doc.xpath("//input[@name='ehash' and @id='ehash']/@value")[0]
            formhash = doc.xpath("//input[@name='formhash']/@value")[0]
            loginsubmit = doc.xpath("//input[@name='loginsubmit']/@value")[0].encode('utf-8')
            cookietime = doc.xpath("//input[@name='cookietime' and @id='cookietime']/@value")[0]
            username = self.account
            password = self.encoded_password

            post_data = urllib.urlencode({
                'username': username,
                'password': password,
                'chash': chash,
                'dhash': dhash,
                'ehash': ehash,
                'loginsubmit': loginsubmit,
                'formhash': formhash,
                'cookietime': cookietime,
            })
            return post_url, post_data

    def getCookies():
        CookiesJar = cookielib.MozillaCookieJar(COOKIES_FILE)
        if not os.path.isfile(COOKIES_FILE):
            CookiesJar.save()
        CookiesJar.load(COOKIES_FILE)

        CookieProcessor = urllib2.HTTPCookieProcessor(CookiesJar)
        CookieOpener = urllib2.build_opener(CookieProcessor, urllib2.HTTPHandler)
        for item in HTTP_HEADERS:
            CookieOpener.addheaders.append((item, HTTP_HEADERS[item]))
        urllib2.install_opener(CookieOpener)

        if len(CookiesJar) == 0:
            xc = xcar(ACCOUNT, ENCODED_PASSWORD, LOGIN_URL, POST_URL_PREFIX)
            if xc.login():
                CookiesJar.save()
            else:
                return None

        CookiesDict = {}
        for cookie in CookiesJar:
            if COOKIES_DOMAIN in cookie.domain:
                CookiesDict[cookie.name] = cookie.value
        return CookiesDict

    class Handler(BaseHandler):
        crawl_config = {
        }

        @every(minutes=24 * 60)
        def on_start(self):
            cookies = getCookies()
            for url in URL_LIST:
                self.crawl(url, cookies=cookies, callback=self.index_page)

        @config(age=10 * 24 * 60 * 60)
        def index_page(self, response):
            cookies = getCookies()
            # links to individual threads
            for each in response.doc('a[href*="%s"]' % THREAD_URL_HREF_FILTER).items():
                if re.search(THREAD_URL_REG, each.attr.href) and \
                        '#' not in each.attr.href:
                    self.crawl(each.attr.href, cookies=cookies, callback=self.detail_page)
            # links to further pages of the thread list
            for each in response.doc('a[href*="%s"]' % THREAD_LIST_URL_FILTER).items():
                if re.search(THREAD_LIST_URL_REG, each.attr.href) and \
                        '#' not in each.attr.href:
                    self.crawl(each.attr.href, cookies=cookies, callback=self.index_page)

        @config(priority=2)
        def detail_page(self, response):
            cookies = getCookies()
            if '#' not in response.url:
                # first visit: schedule one task per post, identified by "#<floor number>"
                for each in response.doc(POST_ITEM_SELECTOR).items():
                    floorNo = each(POST_FLOOR_SELECTOR).text()
                    url = '%s#%s' % (response.url, floorNo)
                    self.crawl(url, cookies=cookies, callback=self.detail_page)
                return None
            else:
                # per-post visit: return the post whose floor number matches the URL
                floorNo = response.url[response.url.find('#')+1:]
                for each in response.doc(POST_ITEM_SELECTOR).items():
                    if each(POST_FLOOR_SELECTOR).text() == floorNo:
                        theme = response.doc(THREAD_THEME_SELECTOR).text()
                        time = each(POST_TIME_SELECTOR).text()
                        member = each(POST_MEMBER_SELECTOR).text()
                        content = each(POST_CONTENT_SELECTOR).text()
                        return {
                            # "url": response.url,
                            # "title": response.doc('title').text(),
                            'theme': theme,
                            'floor': floorNo,
                            'time': time,
                            'member': member,
                            'content': content,
                        }

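VI. Crawler Tutorial (3): Rendering JavaScript Pages with PhantomJS (Scraping Video Links)

(1) Install PhantomJS.

(2) The only real difference from scraping an ordinary page is that fetch_type='js' is passed to self.crawl.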

    #!/usr/bin/env python
    # -*- encoding: utf-8 -*-
    # Created on 2015-03-20 09:46:20
    # Project: fly_spider

    import re
    import time
    # from pyspider.database.mysql.mysqldb import SQL
    from pyspider.libs.base_handler import *
    from pyquery import PyQuery as pq

    class Handler(BaseHandler):
        headers = {
            "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8",
            "Accept-Encoding": "gzip, deflate, sdch",
            "Accept-Language": "zh-CN,zh;q=0.8",
            "Cache-Control": "max-age=0",
            "Connection": "keep-alive",
            "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.101 Safari/537.36"
        }

        crawl_config = {
            "headers": headers,
            "timeout": 100
        }

        @every(minutes=1)
        def on_start(self):
            self.crawl('http://www.zhanqi.tv/games', callback=self.index_page)

        @config(age=10 * 24 * 60 * 60)
        def index_page(self, response):
            print(response)
            for each in response.doc('a[href^="http://www.zhanqi.tv/games/"]').items():
                if re.match(r"http://www.zhanqi.tv/games/\w+", each.attr.href, re.U):
                    # fetch_type='js' renders the page with PhantomJS;
                    # js_script is JavaScript that runs inside the rendered page
                    self.crawl(each.attr.href,
                               fetch_type='js',
                               js_script="""
                               function() {
                                   setTimeout(window.scrollTo(0,document.body.scrollHeight), 5000);
                               }
                               """, callback=self.list_page)

        @config(age=1*60*60, priority=2)
        def list_page(self, response):
            for each in response.doc('.active > div.live-list-tabc > ul#hotList.clearfix > li > a').items():
                if re.match(r"http://www.zhanqi.tv/\w+", each.attr.href, re.U):
                    self.crawl(each.attr.href,
                               fetch_type='js',
                               js_script="""
                               function() {
                                   setTimeout(window.scrollTo(0,document.body.scrollHeight), 5000);
                               }
                               """, callback=self.detail_page)

        @config(age=1*60*60, priority=2)
        def detail_page(self, response):
            flash_html = None  # stays None if the player container is not found
            for each in response.doc('.video-flash-cont').items():
                flash_html = pq(each).html()
                print(flash_html)
            return {
                "url": response.url,
                "author": response.doc('.meat > span').text(),
                "title": response.doc('.title-name').text(),
                "game-name": response.doc('span > .game-name').text(),
                "users2": response.doc('div.live-anchor-info.clearfix > div.sub-anchor-info > div.clearfix > div.meat-info > span.num.dv.js-onlines-panel > span.dv.js-onlines-txt > span').text(),
                "flash-cont": flash_html,
                "picture": response.doc('.active > img').text(),
            }

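VII. Appendix

How can you tell whether a PyQuery selector returns what you want?

Run the selector in the console of the browser's Inspect Element tool and check the result there.

VIII. References

Official tutorial: http://docs.pyspider.org/en/latest/tutorial/

pyspider crawler tutorial (1): HTML and CSS selectors: http://segmentfault.com/a/1190000002477863

pyspider crawler tutorial (2): AJAX and HTTP: http://segmentfault.com/a/1190000002477870

pyspider crawler tutorial (3): Rendering pages with JS using PhantomJS: http://segmentfault.com/a/1190000002477913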