1 说明

这些数据其实早在2015年底就已经收集,并处理。

1.1 数据说明

数据来源于全国水雨晴信息网站,html表格的形式作为原始的存储。

1.2 处理与展示

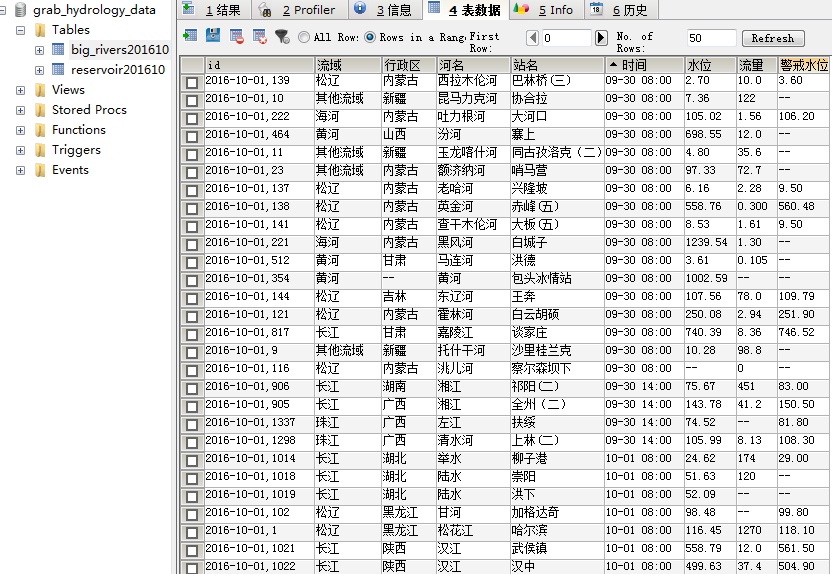

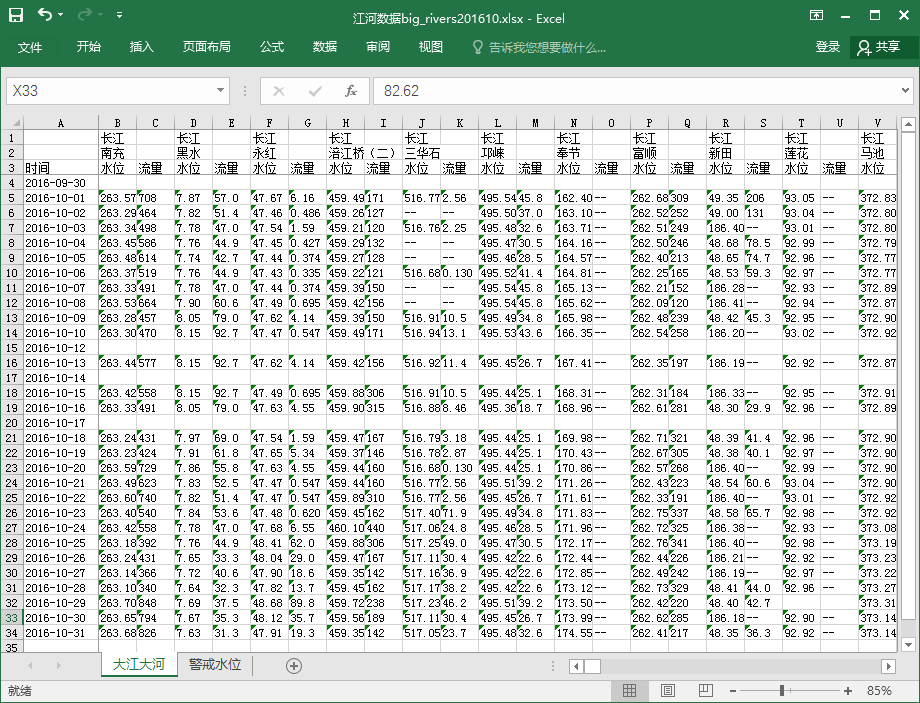

用Mysql数据存储,每月一个Table;通过这个一个table数据又处理为Excel的表格。

2 写Mysql数据库

2.1 读html写入数据库

以一个月为单位,将这一个月的html数据转存到Mysql的一张表中。

# -*- encoding: utf8 -*-

'''

Created on 2015年12月8日

@author: baoluo

'''

from bs4 import BeautifulSoup

import time

import MySQLdb

import sys

import re

import os

import glob

reload(sys)

sys.setdefaultencoding( 'utf-8' )

def checkDir():

if not os.path.isdir('./htmls'):

os.mkdir("./htmls")

def tn():

t = time.strftime("%Y%m%d", time.localtime())

#print t,type(t)

return t

def _w_log(fn):

#print fn + ' write log.txt'

fp = open('log.txt','a+')

fp.write(time.strftime("%Y-%m-%d %H:%M:%S ", time.localtime()) + fn + '

')

fp.close()

def table(fn, tp):

fp = open(fn,'r')

html =fp.read()

fp.close()

strhtml = html.replace(' ','')

date =''

re1 = r'd{4}-d{2}-d{2}'

rg=re.compile(re1)

m = rg.findall(strhtml[:7777])

if m:

date = m[0]

#print date

dbname = date.split('-')[0]+date.split('-')[1]

sql = ''

if tp=='hd':

dbname = 'big_rivers'+dbname

else:

dbname = 'reservoir'+ dbname

#数据库操作

conn= MySQLdb.connect(

host='localhost',

port = 3306,

user='root',

passwd='root',

charset='utf8'

)

cur = conn.cursor()

conn.select_db('information_schema')

#print 'dbname :->'+dbname

fl = cur.execute("SELECT * FROM tables WHERE table_name='"+dbname+"';")

conn.select_db('Grab_Hydrology_Data')

if 0 == fl :

print 'Creat new database:' + dbname

if 'big_rivers' in dbname:

creatsql = "CREATE TABLE " + dbname + """

(id VARCHAR(20) primary key,

流域 VARCHAR(20),

行政区 VARCHAR(30),

河名 VARCHAR(30),

站名 VARCHAR(30),

时间 VARCHAR(30),

水位 VARCHAR(10),

流量 VARCHAR(10),

警戒水位 VARCHAR(10))"""

else:

creatsql = "CREATE TABLE " + dbname + """

(id VARCHAR(20) primary key,

流域 VARCHAR(20),

行政区 VARCHAR(30),

河名 VARCHAR(30),

库名 VARCHAR(30),

库水位 VARCHAR(10),

蓄水量 VARCHAR(10),

入库 VARCHAR(10),

提顶高程 VARCHAR(10))"""

#print creatsql

cur.execute(creatsql)

soup = BeautifulSoup(strhtml,"html.parser")

trs= soup.findAll('tr')

i=0

#print 'trs',trs

for tr in trs:

i += 1

param=[]

sp = BeautifulSoup(str(tr),"html.parser")

tds= sp.findAll('td')

#print i,'tds',tds

for td in tds:

td = str(td)

#print i,'td ',td

if 'style' not in td:

begin=td.index('">')+len('">')

end=td.index('</')

param.append(td[begin:end])

else:

#print td

try:

td = td.split(')">')[1]

param.append(td.split('<')[0])

except IndexError:

td = td.split(');">')[1]

param.append(td.split('<')[0])

idname = "'"+date + ',' + str(i)+"',"

vs = ''

#print i,param

for v in param:

v = "'" + v.strip() + "'"

vs += v + ','

vs = idname + vs[:-1] +')'

'''

print param[0].decode('utf8').encode('GBK'),

param[1].decode('utf8').encode('GBK'),

param[2].decode('utf8').encode('GBK'),

param[3].decode('utf8').encode('GBK'),

param[4],param[5],param[6],param[7]

'''

try:

cur.execute('INSERT INTO '+ dbname + ' VALUES (' + vs)

conn.commit() # 提交到数据库执行

except MySQLdb.Error,e:

print "Mysql Error %d: %s" % (e.args[0], e.args[1])

if 1062==e.args[0]:

break

elif 1064==e.args[0]:

print vs.decode('utf8').encode('GBK')

break

else:

break

#print 'Already exists:' + vs.decode('utf8').encode('GBK')

#_w_log(fn + " except")

conn.rollback() # 发生错误时回滚

cur.close()

conn.close()

if __name__ == '__main__':

header = {'User-Agent':'Mozilla/5.0 (Windows NT 5.1; rv:28.0) Gecko/20100101 Firefox/28.0'}

#checkDir()

files = glob.glob('./htmdata/htmls-counter/2016/201610*.htm')

print len(files),"个文件",time.strftime("%Y-%m-%d %H:%M:%S ", time.localtime())

for f in files:

if ( '江河' in f.decode('gbk') or 'big_rivers' in f):

print f.decode('gbk'),time.strftime("%Y-%m-%d %H:%M:%S ", time.localtime())

table(f,'hd')

print time.strftime("%Y-%m-%d %H:%M:%S ", time.localtime())

elif '水库' or 'reservoir' in f.decode('gbk'):

print f.decode('gbk'),time.strftime("%Y-%m-%d %H:%M:%S ", time.localtime())

table(f,'sk')

print time.strftime("%Y-%m-%d %H:%M:%S ", time.localtime())

2.2 运行结果

(从运行时间上看简直无法直视.....)

59 个文件 2016-11-01 10:04:12

./htmdata/htmls-counter/201620161001水库数据.htm

Creat new database:reservoir201610

./htmdata/htmls-counter/201620161001江河数据.htm

Mysql Error 1062: Duplicate entry '2016-10-01,1' for key 'PRIMARY'

2016-11-01 10:04:26

./htmdata/htmls-counter/201620161002水库数据.htm

./htmdata/htmls-counter/201620161002江河数据.htm

Mysql Error 1062: Duplicate entry '2016-10-02,1' for key 'PRIMARY'

2016-11-01 10:05:00

./htmdata/htmls-counter/201620161003水库数据.htm

./htmdata/htmls-counter/201620161003江河数据.htm

Mysql Error 1062: Duplicate entry '2016-10-03,1' for key 'PRIMARY'

2016-11-01 10:05:36

./htmdata/htmls-counter/201620161004水库数据.htm

./htmdata/htmls-counter/201620161004江河数据.htm

Mysql Error 1062: Duplicate entry '2016-10-04,1' for key 'PRIMARY'

2016-11-01 10:06:05

./htmdata/htmls-counter/201620161005水库数据.htm

./htmdata/htmls-counter/201620161005江河数据.htm

Mysql Error 1062: Duplicate entry '2016-10-05,1' for key 'PRIMARY'

2016-11-01 10:06:37

./htmdata/htmls-counter/201620161006水库数据.htm

./htmdata/htmls-counter/201620161006江河数据.htm

Mysql Error 1062: Duplicate entry '2016-10-06,1' for key 'PRIMARY'

2016-11-01 10:07:08

./htmdata/htmls-counter/201620161007水库数据.htm

./htmdata/htmls-counter/201620161007江河数据.htm

Mysql Error 1062: Duplicate entry '2016-10-07,1' for key 'PRIMARY'

2016-11-01 10:07:37

./htmdata/htmls-counter/201620161009水库数据.htm

./htmdata/htmls-counter/201620161009江河数据.htm

Mysql Error 1062: Duplicate entry '2016-10-09,1' for key 'PRIMARY'

2016-11-01 10:08:12

./htmdata/htmls-counter/201620161010水库数据.htm

./htmdata/htmls-counter/201620161010江河数据.htm

2016-11-01 10:09:47

./htmdata/htmls-counter/201620161011水库数据.htm

./htmdata/htmls-counter/201620161011江河数据.htm

2016-11-01 10:11:39

./htmdata/htmls-counter/201620161013水库数据.htm

./htmdata/htmls-counter/201620161013江河数据.htm

2016-11-01 10:13:23

./htmdata/htmls-counter/201620161013雨水情数据.htm

Mysql Error 1136: Column count doesn't match value count at row 1

./htmdata/htmls-counter/201620161015水库数据.htm

./htmdata/htmls-counter/201620161015江河数据.htm

2016-11-01 10:15:09

./htmdata/htmls-counter/201620161015雨水情数据.htm

Mysql Error 1136: Column count doesn't match value count at row 1

./htmdata/htmls-counter/201620161016水库数据.htm

./htmdata/htmls-counter/201620161016江河数据.htm

2016-11-01 10:16:45

./htmdata/htmls-counter/201620161016雨水情数据.htm

Mysql Error 1136: Column count doesn't match value count at row 1

./htmdata/htmls-counter/201620161017水库数据.htm

Mysql Error 1062: Duplicate entry '2016-10-17,1' for key 'PRIMARY'

./htmdata/htmls-counter/201620161017江河数据.htm

Mysql Error 1062: Duplicate entry '2016-10-17,1' for key 'PRIMARY'

2016-11-01 10:16:48

./htmdata/htmls-counter/201620161018水库数据.htm

./htmdata/htmls-counter/201620161018江河数据.htm

2016-11-01 10:18:30

./htmdata/htmls-counter/201620161019水库数据.htm

./htmdata/htmls-counter/201620161019江河数据.htm

2016-11-01 10:19:58

./htmdata/htmls-counter/201620161020水库数据.htm

./htmdata/htmls-counter/201620161020江河数据.htm

2016-11-01 10:21:32

./htmdata/htmls-counter/201620161021水库数据.htm

./htmdata/htmls-counter/201620161021江河数据.htm

2016-11-01 10:23:46

./htmdata/htmls-counter/201620161022水库数据.htm

./htmdata/htmls-counter/201620161022江河数据.htm

2016-11-01 10:26:13

./htmdata/htmls-counter/201620161023水库数据.htm

./htmdata/htmls-counter/201620161023江河数据.htm

2016-11-01 10:28:09

./htmdata/htmls-counter/201620161024水库数据.htm

./htmdata/htmls-counter/201620161024江河数据.htm

2016-11-01 10:29:56

./htmdata/htmls-counter/201620161025水库数据.htm

./htmdata/htmls-counter/201620161025江河数据.htm

2016-11-01 10:31:34

./htmdata/htmls-counter/201620161026水库数据.htm

./htmdata/htmls-counter/201620161026江河数据.htm

2016-11-01 10:33:09

./htmdata/htmls-counter/201620161027水库数据.htm

./htmdata/htmls-counter/201620161027江河数据.htm

2016-11-01 10:34:45

./htmdata/htmls-counter/201620161028水库数据.htm

./htmdata/htmls-counter/201620161028江河数据.htm

2016-11-01 10:36:11

./htmdata/htmls-counter/201620161029水库数据.htm

./htmdata/htmls-counter/201620161029江河数据.htm

2016-11-01 10:37:43

./htmdata/htmls-counter/201620161030水库数据.htm

./htmdata/htmls-counter/201620161030江河数据.htm

2016-11-01 10:39:17

./htmdata/htmls-counter/201620161031水库数据.htm

./htmdata/htmls-counter/201620161031江河数据.htm

2016-11-01 10:40:58

[Finished in 2206.9s]

3 写Excel文件

# -*- encoding: utf-8 -*-

from StringIO import StringIO

import time

from openpyxl.workbook import Workbook

from openpyxl.reader.excel import load_workbook

from openpyxl.writer.excel import ExcelWriter

import json

import MySQLdb

import os,sys

#reload(sys)

#sys.setdefaultencoding( 'utf-8' )

'''

JSON {"流域":{"站名":{"时间": [水位, 流量 , 警戒水位]}, ...

{"站名":{"时间", [水位, 流量 , 警戒水位]} }, ...

"黄河":{"站名":{ "时间": [水位, 流量 , 警戒水位]}, ...

{"站名":{"时间", [水位, 流量 , 警戒水位]} }, ...

"长江":{"站名":{ "时间": [水位, 流量 , 警戒水位]}, ...

{"站名":{"时间", [水位, 流量 , 警戒水位]} }, ...

}'''

rjson ={}

daterow = {}

def loadJson(dic):

io = StringIO(dic)

io = json.dumps(str(io), sort_keys=True)

return json.loads(io)

def rDB(dbname):

#数据库操作

conn= MySQLdb.connect(

host='localhost',

port = 3306,

user='root',

passwd='root',

charset='utf8'

)

cursor = conn.cursor()

conn.select_db('information_schema')

fl = cursor.execute("SELECT * FROM tables WHERE table_name='"+dbname+"';")

conn.select_db('Grab_Hydrology_Data')

selectsql = "SELECT 流域, 时间, 站名, 水位, 流量 , 警戒水位 FROM " + dbname

if 0 == fl :

print 'NO DB :' + dbname

cursor.close()

conn.close()

return

else:

lens = cursor.execute(selectsql)

results = cursor.fetchall()

#print type(results),len(results),results[0]

for nrow in range(lens):

#print(results[i][0])

#设置流域

rjson.setdefault(results[nrow][0],{})

#设置站名

rjson[results[nrow][0]].setdefault(results[nrow][2] ,{})

#设置时间

rjson[results[nrow][0]][results[nrow][2]].setdefault(results[nrow][1] ,

[results[nrow][3],results[nrow][4],results[nrow][5]])

#绑定时间

daterow.setdefault(results[nrow][1],0)

cursor.close()

conn.close()

def pyxlWsheet(jss,dbname):

dic = jss

tn = time.strftime("%Y%m%d", time.localtime())

fn = u'江河数据' + dbname + '.xlsx'

sheet = Workbook()

ew = ExcelWriter(workbook = sheet)

ws = sheet.worksheets[0]

ws.freeze_panes = ws['B4']

ws.title = u'大江大河'

ws.cell(row=3, column=1, value=u'时间')

list = sorted(daterow.iteritems(),key=lambda t:t[0],reverse=False)

rowdate = {}

#dbname = 'big_rivers201512'

#rtn = time.strftime("%Y", time.localtime())+'-'

rtn = dbname[-6:-2]+'-'

count_line = 3

for i in range(len(list)):

if i%2==0:

count_line += 1

rowdate[list[i][0]] = count_line

ws.cell(row=count_line, column=1, value=rtn+list[i][0][:5])

else:

rowdate[list[i][0]] = count_line

col = 2

for k1,v1 in dic.items():

for k2,v2 in v1.items():

ws.cell(row=1, column=col, value=k1)

ws.cell(row=2, column=col, value=k2)

ws.cell(row=3, column=col, value=u'水位')

ws.cell(row=3, column=col+1, value=u'流量')

for k3,v3 in v2.items():

ws.cell(row=rowdate[k3], column=col, value=v3[0])

ws.cell(row=rowdate[k3], column=col+1, value=v3[1])

col+=2

ws = sheet.create_sheet()

ws.freeze_panes = ws['A2']

ws.title = u'警戒水位'

rows = 1

ws.cell(row=rows, column=1, value=u'河流')

ws.cell(row=rows, column=2, value=u'站名')

ws.cell(row=rows, column=3, value=u'警戒水位')

for k1,v1 in dic.items():

for k2,v2 in v1.items():

rows += 1

ws.cell(row=rows, column=1, value=k1)

ws.cell(row=rows, column=2, value=k2)

for k3,v3 in v2.items():

ws.cell(row=rows, column=3, value=v3[2])

break

ew.save(filename = fn)

def freeze_panes(fn):

wr1 = load_workbook(filename = fn)

wr = wr1.worksheets[0]

p = wr['B4']

wr.freeze_panes = p

wr1.save(fn)

if __name__=="__main__":

dbname = 'big_rivers201610'

rDB(dbname)

print len(rjson)

pyxlWsheet(rjson,dbname)

4 问题

-

Mysql数据库表设计差,写入效率太低。