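Last time I wrote a program to crawl this site (shicimingju.com), but parts of it were unfinished and it crawled slowly. Today I'm polishing it and switching to multiprocess crawling, and the speed is like riding a rocket.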

```python
# Required libraries
import os
from multiprocessing import Pool

import requests
from lxml import etree

BASE_URL = 'http://www.shicimingju.com'

# Request headers
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 '
                  '(KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36'
}

# Folder where the .txt files are saved (change it to a directory on your machine)
pathname = r'E:爬虫诗词名句网'


# Get the list of books and hand them to the process pool
def get_book(url, pool):
    try:
        # headers must be passed by keyword; the second positional argument of requests.get is params
        response = requests.get(url, headers=headers)
        etrees = etree.HTML(response.text)
        url_infos = etrees.xpath('//div[@class="bookmark-list"]/ul/li')
        urls = []
        for i in url_infos:
            url_info = i.xpath('./h2/a/@href')
            book_name = i.xpath('./h2/a/text()')[0]
            print('Start downloading: ' + book_name)
            urls.append(BASE_URL + url_info[0])
        # Multiprocessing: each book is one task, so several books download in parallel
        pool.map(get_index, urls)
    except Exception as e:
        print('get_book failed:', e)


# Get a book's table of contents (runs in a worker process)
def get_index(url):
    try:
        response = requests.get(url, headers=headers)
        etrees = etree.HTML(response.text)
        url_infos = etrees.xpath('//div[@class="book-mulu"]/ul/li')
        # Chapters of one book are fetched one after another, in order
        for i in url_infos:
            url_info = i.xpath('./a/@href')
            get_content(BASE_URL + url_info[0])
    except Exception as e:
        print(e)


# Get a chapter's text and append it to <book name>.txt
def get_content(url):
    try:
        response = requests.get(url, headers=headers)
        etrees = etree.HTML(response.text)
        title = etrees.xpath('//div[@class="www-main-container www-shadow-card "]/h1/text()')[0]
        # Most chapters wrap the text in <p> tags; some put it directly inside the div
        content = etrees.xpath('//div[@class="chapter_content"]/p/text()')
        if not content:
            content = etrees.xpath('//div[@class="chapter_content"]/text()')
        content = ''.join(content)
        book_name = etrees.xpath('//div[@class="nav-top"]/a[3]/text()')[0]
        with open(os.path.join(pathname, book_name + '.txt'), 'a+', encoding='utf-8') as f:
            f.write(title + '\n' + content + '\n')
        print(title + ' .. downloaded')
    except Exception as e:
        print('get_content failed:', e)


# Program entry point
if __name__ == '__main__':
    url = 'http://www.shicimingju.com/book/'
    os.makedirs(pathname, exist_ok=True)
    # Start the process pool (one worker per CPU core by default)
    with Pool() as pool:
        # Start crawling
        get_book(url, pool)
```
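Why is the pool so much faster? Almost all of the crawler's time is spent waiting for the server, and several processes can wait at the same time. Below is a small offline sketch of that effect, not part of the crawler itself: the hypothetical `fake_download` stands in for a network request using `time.sleep`, and the 0.2 s delay and 4 workers are arbitrary assumptions.

```python
import time
from multiprocessing import Pool

DELAY = 0.2  # assumed per-request latency in seconds (arbitrary)


def fake_download(book):
    """Hypothetical stand-in for get_index: just wait as if on the network."""
    time.sleep(DELAY)
    return book + ' done'


def run_serial(books):
    # One request after another: total time is roughly len(books) * DELAY
    return [fake_download(b) for b in books]


def run_parallel(books, workers=4):
    # Pool.map hands the items to worker processes and returns results in input order
    with Pool(processes=workers) as pool:
        return pool.map(fake_download, books)


if __name__ == '__main__':
    books = ['book-1', 'book-2', 'book-3', 'book-4']

    start = time.perf_counter()
    run_serial(books)
    serial_time = time.perf_counter() - start

    start = time.perf_counter()
    run_parallel(books)
    parallel_time = time.perf_counter() - start

    print(f'serial: {serial_time:.2f}s, parallel: {parallel_time:.2f}s')
```

Because the waiting is I/O rather than computation, the speedup here does not depend on the number of CPU cores; the real crawler's gain will also depend on how the site responds to concurrent requests.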

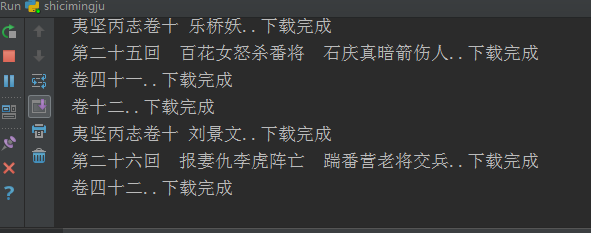

Console output:

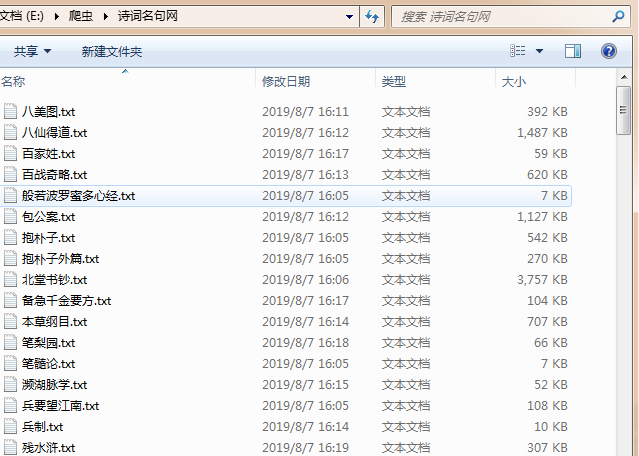

Looking in the folder, you can see that several files are being downloaded at the same time.
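Several files grow at once because each task given to `pool.map` is a whole book: one worker process writes every chapter of that book, in order, into its own file, while other workers handle other books. A side effect is that appends from different processes never land in the same file. The sketch below mimics this offline, assuming made-up book data (`FAKE_BOOKS` and `save_book` are hypothetical) and a temporary directory instead of real requests.

```python
import os
import tempfile
from multiprocessing import Pool

# Hypothetical data: book name -> list of (chapter title, chapter text)
FAKE_BOOKS = {
    'book-a': [('chapter 1', 'aaa'), ('chapter 2', 'bbb')],
    'book-b': [('chapter 1', 'ccc'), ('chapter 2', 'ddd'), ('chapter 3', 'eee')],
    'book-c': [('chapter 1', 'fff')],
}


def save_book(args):
    """One task = one book: append its chapters in order to <book>.txt."""
    out_dir, book_name = args
    path = os.path.join(out_dir, book_name + '.txt')
    for title, text in FAKE_BOOKS[book_name]:
        # Only the process handling this book opens this file, so writes cannot interleave
        with open(path, 'a+', encoding='utf-8') as f:
            f.write(title + '\n' + text + '\n')
    return path


def crawl_all(out_dir, workers=3):
    tasks = [(out_dir, name) for name in FAKE_BOOKS]
    with Pool(processes=workers) as pool:
        return pool.map(save_book, tasks)


if __name__ == '__main__':
    with tempfile.TemporaryDirectory() as out_dir:
        for path in crawl_all(out_dir):
            with open(path, encoding='utf-8') as f:
                print(os.path.basename(path), repr(f.read()))
```

If the tasks were split per chapter instead of per book, chapters of one book could be written by different processes in an unpredictable order.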

Done.