Scraping Tencent Video and saving it to a database!

    # coding: utf-8
    import re
    import sys
    import time
    import urllib2

    import MySQLdb
    from bs4 import BeautifulSoup

    # Python 2 only: make utf-8 the default codec for implicit str/unicode conversion.
    reload(sys)
    sys.setdefaultencoding('utf8')

    NUM = 0          # global: number of movies collected so far
    m_type = u''     # global: category of the movies being scraped
    m_site = u'qq'   # global: source site
    movieStore = []  # collected movie records


    # Fetch the page at the given URL and return its raw content.
    def getHtml(url):
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8'}
        timeout = 30
        req = urllib2.Request(url, None, headers)
        response = urllib2.urlopen(req, None, timeout)
        return response.read()


    # Extract the movie categories from the category list page.
    # Returns a dict that maps category name to category URL.
    def getTags(html):
        global m_type
        soup = BeautifulSoup(html)
        tags_all = soup.find_all('ul', {'class': 'clearfix _group', 'gname': 'mi_type'})
        # Sample link:
        # <a _hot="tag.sub" class="_gtag _hotkey" href="http://v.qq.com/list/1_0_-1_-1_1_0_0_20_0_-1_0.html" title="动作" tvalue="0">动作</a>
        reTags = r'<a _hot="tag.sub" class="_gtag _hotkey" href="(.+?)" title="(.+?)" tvalue="(.+?)">.+?</a>'
        pattern = re.compile(reTags, re.DOTALL)

        tagsURL = {}  # defined up front so an empty dict is returned when nothing matches
        tags = pattern.findall(str(tags_all[0]))
        if tags:
            for tag in tags:
                tagURL = tag[0].decode('utf-8')
                m_type = tag[1].decode('utf-8')
                tagsURL[m_type] = tagURL
        else:
            print "Not Found"
        return tagsURL


    # Return the number of pages in a category.
    def getPages(tagUrl):
        tag_html = getHtml(tagUrl)
        soup = BeautifulSoup(tag_html)  # parse the category page
        # Pager container: <div class="mod_pagenav" id="pager">
        div_page = soup.find_all('div', {'class': 'mod_pagenav', 'id': 'pager'})

        # Sample pager link:
        # <a _hot="movie.page2." class="c_txt6" href="http://v.qq.com/list/1_18_-1_-1_1_0_1_20_0_-1_0_-1.html" title="2"><span>2</span></a>
        re_pages = r'<a _hot=.+?><span>(.+?)</span></a>'
        p = re.compile(re_pages, re.DOTALL)
        pages = p.findall(str(div_page[0]))
        if len(pages) > 1:
            return int(pages[-2])  # second-to-last pager label
        else:
            return 1


    # Find the movie list blocks on a list page and parse each one.
    def getMovieList(html):
        soup = BeautifulSoup(html)
        # Movie list container: <ul class="mod_list_pic_130">
        divs = soup.find_all('ul', {'class': 'mod_list_pic_130'})
        for divHtml in divs:
            # Remove newlines so that <li><a ...> is contiguous for the regex in getMovie().
            divHtml = str(divHtml).replace('\n', '')
            getMovie(divHtml)


    # Extract (url, title) pairs from one list block and store them.
    def getMovie(html):
        global NUM
        global m_type
        global m_site

        reMovie = r'<li><a _hot="movie.image.link.1." class="mod_poster_130" href="(.+?)" target="_blank" title="(.+?)">.+?</li>'
        p = re.compile(reMovie, re.DOTALL)
        movies = p.findall(html)
        if movies:
            for movie in movies:
                NUM += 1
                # Record layout: number, title, url, type
                eachMovie = [NUM, movie[1], movie[0], m_type]
                movieStore.append(eachMovie)


    # Insert the records into MySQL. Create the table yourself beforehand,
    # with the columns: number, title, url, type.
    def db_insert(insert_list):
        try:
            conn = MySQLdb.connect(host="127.0.0.1", user="root", passwd="meimei1118", db="ctdata", charset='utf8')
            cursor = conn.cursor()
            cursor.execute('delete from movies')
            cursor.execute('alter table movies AUTO_INCREMENT=1')
            cursor.executemany("INSERT INTO movies(number,title,url,type) VALUES(%s, %s, %s,%s)", insert_list)
            conn.commit()
            cursor.close()
            conn.close()
        except MySQLdb.Error as e:
            print "Mysql Error %d: %s" % (e.args[0], e.args[1])


    if __name__ == "__main__":
        url = "http://v.qq.com/list/1_-1_-1_-1_1_0_0_20_0_-1_0.html"
        html = getHtml(url)
        tagUrls = getTags(html)

        for url in tagUrls.items():
            # url is a (category name, category URL) pair.
            m_type = url[0]  # tag the movies below with the current category
            maxPage = int(getPages(str(url[1]).encode('utf-8')))
            print maxPage

            for x in range(0, 5):  # only the first 5 pages of each category
                # Sample list URL: http://v.qq.com/list/1_18_-1_-1_1_0_0_20_0_-1_0_-1.html
                m_url = str(url[1]).replace('0_20_0_-1_0_-1.html', '')
                movie_url = "%s%d_20_0_-1_0_-1.html" % (m_url, x)
                movie_html = getHtml(movie_url.encode('utf-8'))
                getMovieList(movie_html)
                time.sleep(10)

        db_insert(movieStore)

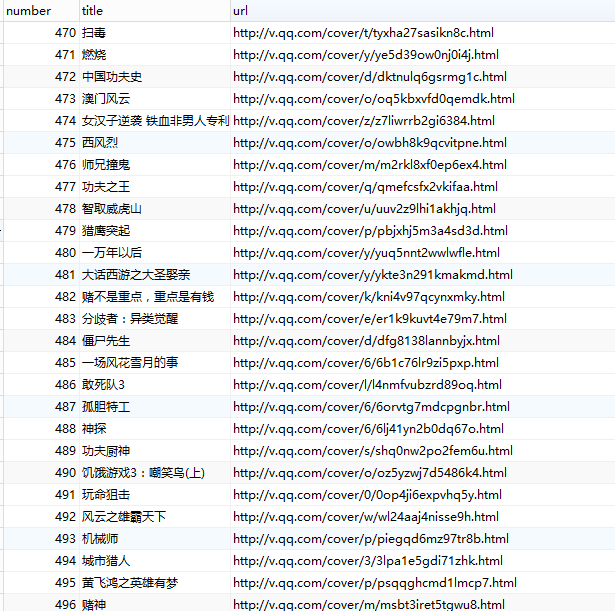
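The main loop builds each page URL by stripping a fixed suffix from the category URL and appending a new one, which only works when the category URL ends in exactly that suffix. As a rough alternative, the sketch below rewrites the page index in place. It assumes, based only on the two sample URLs in the code comments, that the file name is a list of underscore-separated fields and that the seventh field is the zero-based page index. `page_url` and `PAGE_FIELD` are hypothetical names, not part of the original script.

```python
# Assumption: the page index is the seventh underscore-separated field of the
# file name, as in .../1_18_-1_-1_1_0_<page>_20_0_-1_0_-1.html
PAGE_FIELD = 6  # zero-based position of the page index


def page_url(list_url, page):
    """Return list_url with its page-index field replaced by `page`."""
    head, _, filename = list_url.rpartition("/")
    stem, dot, ext = filename.rpartition(".")
    fields = stem.split("_")
    if len(fields) <= PAGE_FIELD:
        raise ValueError("unexpected list URL: %r" % list_url)
    fields[PAGE_FIELD] = str(page)
    return "%s/%s%s%s" % (head, "_".join(fields), dot, ext)


if __name__ == "__main__":
    base = "http://v.qq.com/list/1_18_-1_-1_1_0_0_20_0_-1_0_-1.html"
    for page in range(3):
        print(page_url(base, page))
```

Because the helper touches only one field, it accepts both URL shapes that appear in the comments (with and without the trailing `_-1`). If the site uses a different field layout, `PAGE_FIELD` would need to change.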

Writing the data to MySQL as it is scraped:

    # coding: utf-8
    import datetime
    import re
    import sys
    import time
    import urllib2

    import MySQLdb
    from bs4 import BeautifulSoup

    # Python 2 only: make utf-8 the default codec for implicit str/unicode conversion.
    reload(sys)
    sys.setdefaultencoding('utf8')

    NUM = 0          # global: number of movies collected so far
    m_type = u''     # global: category of the movies being scraped
    m_site = u'qq'   # global: source site
    movieStore = []  # collected movie records (unused in this version)


    # Fetch the page at the given URL and return its raw content.
    def getHtml(url):
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8'}
        timeout = 30
        req = urllib2.Request(url, None, headers)
        response = urllib2.urlopen(req, None, timeout)
        return response.read()


    # Extract the movie categories from the category list page.
    # Returns a dict that maps category name to category URL.
    def getTags(html):
        global m_type
        soup = BeautifulSoup(html)
        tags_all = soup.find_all('ul', {'class': 'clearfix _group', 'gname': 'mi_type'})
        # Sample link:
        # <a _hot="tag.sub" class="_gtag _hotkey" href="http://v.qq.com/list/1_0_-1_-1_1_0_0_20_0_-1_0.html" title="动作" tvalue="0">动作</a>
        reTags = r'<a _hot="tag.sub" class="_gtag _hotkey" href="(.+?)" title="(.+?)" tvalue="(.+?)">.+?</a>'
        pattern = re.compile(reTags, re.DOTALL)

        tagsURL = {}  # defined up front so an empty dict is returned when nothing matches
        tags = pattern.findall(str(tags_all[0]))
        if tags:
            for tag in tags:
                tagURL = tag[0].decode('utf-8')
                m_type = tag[1].decode('utf-8')
                tagsURL[m_type] = tagURL
        else:
            print "Not Found"
        return tagsURL


    # Return the number of pages in a category.
    def getPages(tagUrl):
        tag_html = getHtml(tagUrl)
        soup = BeautifulSoup(tag_html)  # parse the category page
        # Pager container: <div class="mod_pagenav" id="pager">
        div_page = soup.find_all('div', {'class': 'mod_pagenav', 'id': 'pager'})

        # Sample pager link:
        # <a _hot="movie.page2." class="c_txt6" href="http://v.qq.com/list/1_18_-1_-1_1_0_1_20_0_-1_0_-1.html" title="2"><span>2</span></a>
        re_pages = r'<a _hot=.+?><span>(.+?)</span></a>'
        p = re.compile(re_pages, re.DOTALL)
        pages = p.findall(str(div_page[0]))
        if len(pages) > 1:
            return int(pages[-2])  # second-to-last pager label
        else:
            return 1


    # Find the movie list blocks on a list page and parse each one.
    def getMovieList(html):
        soup = BeautifulSoup(html)
        # Movie list container: <ul class="mod_list_pic_130">
        divs = soup.find_all('ul', {'class': 'mod_list_pic_130'})
        for divHtml in divs:
            # Remove newlines so that <li><a ...> is contiguous for the regex in getMovie().
            divHtml = str(divHtml).replace('\n', '')
            getMovie(divHtml)


    # Extract (url, title) pairs from one list block and insert each one right away.
    def getMovie(html):
        global NUM
        global m_type
        global m_site

        reMovie = r'<li><a _hot="movie.image.link.1." class="mod_poster_130" href="(.+?)" target="_blank" title="(.+?)">.+?</li>'
        p = re.compile(reMovie, re.DOTALL)
        movies = p.findall(html)

        if movies:
            for movie in movies:
                NUM += 1
                # Record layout: number, title, url, time, type
                eachMovie = [NUM, movie[1], movie[0], datetime.datetime.now(), m_type]
                # cursor and conn are the module-level objects created in the main block.
                cursor.execute("INSERT INTO movies(number,title,url,time, type) VALUES(%s, %s, %s,%s,%s)", eachMovie)
                conn.commit()


    # Bulk insert kept from the first version (not called here). Create the table
    # yourself beforehand, with the columns: number, title, url, type.
    def db_insert(insert_list):
        try:
            conn = MySQLdb.connect(host="127.0.0.1", user="root", passwd="meimei1118", db="ctdata", charset='utf8')
            cursor = conn.cursor()
            cursor.execute('delete from movies')
            cursor.execute('alter table movies AUTO_INCREMENT=1')
            cursor.executemany("INSERT INTO movies(number,title,url,type) VALUES(%s, %s, %s,%s)", insert_list)
            conn.commit()
            cursor.close()
            conn.close()
        except MySQLdb.Error as e:
            print "Mysql Error %d: %s" % (e.args[0], e.args[1])


    if __name__ == "__main__":
        url = "http://v.qq.com/list/1_-1_-1_-1_1_0_0_20_0_-1_0.html"
        html = getHtml(url)
        tagUrls = getTags(html)
        try:
            conn = MySQLdb.connect(host="127.0.0.1", user="root", passwd="meimei1118", db="ctdata", charset='utf8')
            cursor = conn.cursor()
            cursor.execute('delete from movies')
            for url in tagUrls.items():
                # url is a (category name, category URL) pair.
                m_type = url[0]  # tag the movies below with the current category
                maxPage = int(getPages(str(url[1]).encode('utf-8')))
                print maxPage

                for x in range(0, maxPage):
                    # Sample list URL: http://v.qq.com/list/1_18_-1_-1_1_0_0_20_0_-1_0_-1.html
                    m_url = str(url[1]).replace('0_20_0_-1_0_-1.html', '')
                    movie_url = "%s%d_20_0_-1_0_-1.html" % (m_url, x)
                    movie_html = getHtml(movie_url.encode('utf-8'))
                    getMovieList(movie_html)
                    time.sleep(10)

            print u"Done..."

            cursor.close()
            conn.close()
        except MySQLdb.Error as e:
            print "Mysql Error %d: %s" % (e.args[0], e.args[1])

        # db_insert(movieStore)
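This version commits once per movie, while the first version collects everything and writes it with a single `executemany`. A middle ground is to write one batch per transaction, so that a failure partway through leaves the previous table contents untouched. The sketch below illustrates the idea with the standard-library `sqlite3` module standing in for `MySQLdb`, purely so that it runs without a server; note that `sqlite3` uses `?` placeholders where `MySQLdb` uses `%s`. It is written for Python 3, and `save_movies` and the sample rows are hypothetical.

```python
import datetime
import sqlite3


def save_movies(conn, rows):
    """Replace the contents of `movies` with `rows` in a single transaction."""
    with conn:  # commits on success, rolls back if an exception escapes
        conn.execute("DELETE FROM movies")
        conn.executemany(
            "INSERT INTO movies(number, title, url, time, type) "
            "VALUES (?, ?, ?, ?, ?)",
            rows,
        )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE movies("
        "number INTEGER, title TEXT, url TEXT, time TEXT, type TEXT)"
    )
    now = datetime.datetime.now().isoformat()
    rows = [
        (1, "Example A", "http://example.com/a", now, "action"),
        (2, "Example B", "http://example.com/b", now, "comedy"),
    ]
    save_movies(conn, rows)
    print(conn.execute("SELECT COUNT(*) FROM movies").fetchone()[0])
    conn.close()
```

With `MySQLdb` the same shape would need an explicit `conn.commit()` and `conn.rollback()`, and the table would have to use a transactional storage engine for the rollback to have any effect.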

The program above is rewritten below in an object-oriented style, and the part that fetches the movie lists is made multithreaded.

The code is split into two parts: a helper module, methods, and the object-oriented rewrite itself.

methods.py

    # coding: utf-8
    import datetime
    import re
    import sys
    import urllib2

    from bs4 import BeautifulSoup

    # Python 2 only: make utf-8 the default codec for implicit str/unicode conversion.
    reload(sys)
    sys.setdefaultencoding('utf8')

    NUM = 0
    m_type = u''     # global: movie category
    m_site = u'qq'   # global: source site
    movieStore = []  # collected movie records


    # Fetch the page at the given URL and return its raw content.
    def getHtml(url):
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8'}
        timeout = 20
        req = urllib2.Request(url, None, headers)
        response = urllib2.urlopen(req, None, timeout)
        return response.read()


    # Extract the movie categories from the category list page.
    # Returns a dict that maps category name to category URL.
    def getTags(html):
        global m_type
        soup = BeautifulSoup(html)
        tags_all = soup.find_all('ul', {'class': 'clearfix _group', 'gname': 'mi_type'})
        # Sample link:
        # <a _hot="tag.sub" class="_gtag _hotkey" href="http://v.qq.com/list/1_0_-1_-1_1_0_0_20_0_-1_0.html" title="动作" tvalue="0">动作</a>
        reTags = r'<a _hot="tag.sub" class="_gtag _hotkey" href="(.+?)" title="(.+?)" tvalue="(.+?)">.+?</a>'
        pattern = re.compile(reTags, re.DOTALL)

        tagsURL = {}  # defined up front so an empty dict is returned when nothing matches
        tags = pattern.findall(str(tags_all[0]))
        if tags:
            for tag in tags:
                tagURL = tag[0].decode('utf-8')
                m_type = tag[1].decode('utf-8')
                tagsURL[m_type] = tagURL
                print m_type
        else:
            print "Not Found"
        return tagsURL


    # Return the number of pages in a category.
    def getPages(tagUrl):
        tag_html = getHtml(tagUrl)
        soup = BeautifulSoup(tag_html)  # parse the category page
        # Pager container: <div class="mod_pagenav" id="pager">
        div_page = soup.find_all('div', {'class': 'mod_pagenav', 'id': 'pager'})

        # Sample pager link:
        # <a _hot="movie.page2." class="c_txt6" href="http://v.qq.com/list/1_18_-1_-1_1_0_1_20_0_-1_0_-1.html" title="2"><span>2</span></a>
        re_pages = r'<a _hot=.+?><span>(.+?)</span></a>'
        p = re.compile(re_pages, re.DOTALL)
        pages = p.findall(str(div_page[0]))
        if len(pages) > 1:
            return int(pages[-2])  # second-to-last pager label
        else:
            return 1


    # Extract the movie details from one movie list block.
    def getMovie(html):
        global NUM
        global m_type
        global m_site

        reMovie = r'<li><a _hot="movie.image.link.1." class="mod_poster_130" href="(.+?)" target="_blank" title="(.+?)">.+?</li>'
        p = re.compile(reMovie, re.DOTALL)
        movies = p.findall(html)
        if movies:
            for movie in movies:
                # Record layout: title, url, type, time
                eachMovie = [movie[1], movie[0], m_type, datetime.datetime.now()]
                print eachMovie
                movieStore.append(eachMovie)


    # Parse the movie list of a single page.
    def getMovieList(html):
        soup = BeautifulSoup(html)
        # Movie list container: <ul class="mod_list_pic_130">
        divs = soup.find_all('ul', {'class': 'mod_list_pic_130'})
        for divHtml in divs:
            # Remove newlines so that <li><a ...> is contiguous for the regex in getMovie().
            divHtml = str(divHtml).replace('\n', '')
            getMovie(divHtml)
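`getPages` takes the second-to-last pager label as the page count, which presumably relies on the last link being a non-numeric control such as a "next page" button. A slightly more defensive variant, sketched below, keeps only the labels that parse as integers and returns the largest. The regular expression is the one used in `getPages`; `max_page`, the `Next` link, and the sample HTML are made up for illustration and may not match the real page.

```python
import re

# Same pattern as getPages(): capture the label inside each pager link.
PAGER_LINK = re.compile(r'<a _hot=.+?><span>(.+?)</span></a>', re.DOTALL)


def max_page(pager_html):
    """Return the largest numeric pager label, or 1 if there is none."""
    numbers = []
    for label in PAGER_LINK.findall(pager_html):
        try:
            numbers.append(int(label))
        except ValueError:
            continue  # skip non-numeric labels such as "Next"
    return max(numbers) if numbers else 1


if __name__ == "__main__":
    # Hypothetical pager markup modelled on the sample link in getPages().
    sample = (
        '<div class="mod_pagenav" id="pager">'
        '<a _hot="movie.page2." class="c_txt6" href="#" title="2"><span>2</span></a>'
        '<a _hot="movie.page3." class="c_txt6" href="#" title="3"><span>3</span></a>'
        '<a _hot="movie.pagenext." class="c_txt6" href="#"><span>Next</span></a>'
        '</div>'
    )
    print(max_page(sample))
```

This always returns an `int`, so the caller does not need the extra `int(...)` conversion.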

movies.py

    # coding: utf-8
    import sys
    import threading
    import time

    import MySQLdb

    from methods import *

    # Python 2 only: make utf-8 the default codec for implicit str/unicode conversion.
    reload(sys)
    sys.setdefaultencoding('utf8')

    NUM = 0             # global: number of movies
    movieTypeList = []  # one [category name, category URL, page count] entry per category


    # Collect the name, URL and page count of every category.
    def getMovieType():
        url = "http://v.qq.com/list/1_-1_-1_-1_1_0_0_20_0_-1_0.html"
        html = getHtml(url)
        tagUrls = getTags(html)
        for url in tagUrls.items():
            # url is a (category name, category URL) pair.
            maxPage = int(getPages(str(url[1]).encode('utf-8')))
            typeList = [url[0], str(url[1]).encode('utf-8'), maxPage]
            movieTypeList.append(typeList)


    # Thread that fetches the movie list of one category.
    class GetMovies(threading.Thread):
        def __init__(self, movie):
            threading.Thread.__init__(self)
            self.movie = movie

        def getMovies(self):
            maxPage = int(self.movie[2])
            # NOTE: this m_type is local and only used for the progress message;
            # getMovie() in methods.py tags records with its own module-level m_type.
            m_type = self.movie[0]
            for x in range(0, maxPage):
                # Sample list URL: http://v.qq.com/list/1_18_-1_-1_1_0_0_20_0_-1_0_-1.html
                m_url = str(self.movie[1]).replace('0_20_0_-1_0_-1.html', '')
                movie_url = "%s%d_20_0_-1_0_-1.html" % (m_url, x)
                movie_html = getHtml(movie_url.encode('utf-8'))
                getMovieList(movie_html)
                time.sleep(5)

            print m_type + u" done..."

        def run(self):
            self.getMovies()


    # Insert the records into MySQL. Create the table yourself beforehand.
    def db_insert(insert_list):
        try:
            conn = MySQLdb.connect(host="127.0.0.1", user="root", passwd="meimei1118", db="ctdata", charset='utf8')
            cursor = conn.cursor()
            cursor.execute('delete from test')
            cursor.execute('alter table test AUTO_INCREMENT=1')
            cursor.executemany("INSERT INTO test(title,url,type,time) VALUES (%s,%s,%s,%s)", insert_list)
            conn.commit()
            cursor.close()
            conn.close()
        except MySQLdb.Error as e:
            print "Mysql Error %d: %s" % (e.args[0], e.args[1])


    if __name__ == "__main__":
        getThreads = []
        getMovieType()
        start = time.time()
        # One thread per category.
        for i in range(len(movieTypeList)):
            t = GetMovies(movieTypeList[i])
            getThreads.append(t)

        for i in range(len(getThreads)):
            getThreads[i].start()

        for i in range(len(getThreads)):
            getThreads[i].join()

        t1 = time.time() - start
        print t1  # seconds spent scraping
        t = time.time()
        db_insert(movieStore)
        t2 = time.time() - t
        print t2  # seconds spent inserting
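In movies.py every thread appends to the one shared `movieStore` list, and the category written into each record comes from a module-level variable rather than from the thread that fetched the page. One way to keep each record tied to its own category is to pass the category into the worker and collect results through a thread-safe queue. The sketch below shows that pattern in Python 3 (where the module is named `queue`; Python 2 calls it `Queue`). `fetch_category`, `crawl`, and `fake_fetch_page` are hypothetical names, and `fake_fetch_page` merely stands in for the `getHtml` plus parsing step so that the example runs offline.

```python
import queue
import threading


def fetch_category(category, pages, fetch_page, results):
    """Worker: fetch every page of one category and queue tagged records."""
    for page in range(pages):
        for title, url in fetch_page(category, page):
            results.put((title, url, category))


def crawl(categories, fetch_page):
    """Run one thread per (category, pages) pair and return all records."""
    results = queue.Queue()
    threads = [
        threading.Thread(target=fetch_category,
                         args=(name, pages, fetch_page, results))
        for name, pages in categories
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # All workers have finished, so the queue can be drained safely.
    records = []
    while not results.empty():
        records.append(results.get())
    return records


def fake_fetch_page(category, page):
    """Stand-in for downloading and parsing one list page (assumption)."""
    return [("%s-%d" % (category, page),
             "http://example.com/%s/%d" % (category, page))]


if __name__ == "__main__":
    for record in crawl([("action", 2), ("comedy", 3)], fake_fetch_page):
        print(record)
```

The order of the returned records depends on thread scheduling, so anything that needs a stable order should sort them before inserting.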