MovieLens and Netflix remain the most widely used datasets for evaluating recommender systems. Other datasets such as Amazon, Yelp and CiteULike are also frequently adopted.

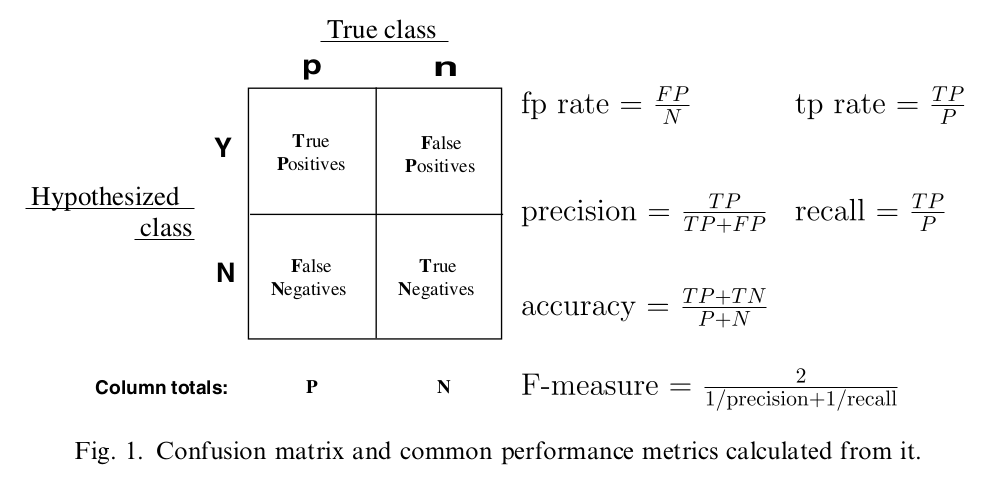

As for evaluation metrics, Root Mean Square Error (RMSE) and Mean Absolute Error (MAE) are usually used to evaluate rating prediction, while Recall, Precision, Normalized Discounted Cumulative Gain (NDCG) and Area Under the Curve (AUC) are often adopted to evaluate ranking quality. Precision, Recall and F1-score are widely used to evaluate classification results.
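A minimal sketch of the rating-prediction and classification metrics above. The function names are illustrative, and `precision_recall_f1` assumes the set-based form where the recommended items are treated as the predicted positives.

```python
import math

def rmse(y_true, y_pred):
    """Root Mean Square Error between true and predicted ratings."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)

def mae(y_true, y_pred):
    """Mean Absolute Error between true and predicted ratings."""
    n = len(y_true)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n

def precision_recall_f1(recommended, relevant):
    """Set-based precision, recall and F1 (recommended items = predicted positives)."""
    hits = len(set(recommended) & set(relevant))
    precision = hits / len(recommended) if recommended else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Ratings [4, 3, 5] predicted as [3.5, 3, 4]: MAE = 0.5, RMSE = sqrt(1.25 / 3).
print(mae([4, 3, 5], [3.5, 3, 4]), rmse([4, 3, 5], [3.5, 3, 4]))
# 2 of 4 recommended items are among the 3 relevant ones: P = 0.5, R = 2/3, F1 = 4/7.
print(precision_recall_f1(["a", "b", "c", "d"], ["b", "d", "e"]))
```

RMSE squares each error before averaging, so it penalizes large rating mistakes more heavily than MAE does.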

@NDCG:Normalized Discounted Cumulative Gain:measures the item's position in the ranked list, penalizing the score more the lower a relevant item is ranked.
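A sketch of NDCG@k, assuming linear gain (`rel`) with a `log2(rank + 1)` discount; the exponential gain `2**rel - 1` is another common choice. The ideal ordering is assumed to be the same relevance labels sorted in descending order.

```python
import math

def dcg_at_k(relevances, k):
    """Discounted cumulative gain of the first k relevance labels (rank 1 first)."""
    # rank i+1 is discounted by log2(i + 2), so rank 1 gets log2(2) = 1
    return sum(rel / math.log2(i + 2) for i, rel in enumerate(relevances[:k]))

def ndcg_at_k(relevances, k):
    """DCG normalized by the DCG of the ideal (descending) ordering."""
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0

# One relevant item: ranked 1st -> 1.0, ranked 3rd -> 1/log2(4) = 0.5.
print(ndcg_at_k([1, 0, 0, 0], 4), ndcg_at_k([0, 0, 1, 0], 4))
```

Moving the relevant item from rank 1 to rank 3 halves the score, and pushing it outside the cutoff (e.g. `k=2`) drops it to 0.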

@HR:Hit Ratio:measures whether the positive item appears within the top-N list.
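A sketch of HR@k, assuming a leave-one-out setup in which each user has exactly one held-out positive item; `ranked_lists` and `positives` are hypothetical inputs aligned by user.

```python
def hit_ratio_at_k(ranked_lists, positives, k):
    """Fraction of users whose positive item appears in their top-k list."""
    hits = sum(1 for ranked, pos in zip(ranked_lists, positives) if pos in ranked[:k])
    return hits / len(positives)

ranked = [["a", "b", "c"], ["d", "e", "f"]]
positives = ["b", "f"]
print(hit_ratio_at_k(ranked, positives, 2))  # only user 1 hits: 0.5
```

Unlike NDCG, HR ignores where inside the top k the positive item lands.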

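@ROC:Receiver Operating Characteristic Curve:plots the true positive rate (TPR) against the false positive rate (FPR) as the decision threshold varies.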
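A sketch that builds ROC points by sweeping the threshold from the highest score down. It assumes binary labels (1 = positive, 0 = negative) with both classes present; items with tied scores are consumed together so ties produce one diagonal step.

```python
def roc_points(scores, labels):
    """Return (FPR, TPR) points obtained by lowering the threshold over the scores."""
    pairs = sorted(zip(scores, labels), key=lambda x: -x[0])
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    tp = fp = 0
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # consume every item sharing this score before emitting a point
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points

print(roc_points([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))
# [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
```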

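@AUC:Area Under the ROC Curve:equals the probability that a randomly chosen positive is scored higher than a randomly chosen negative; 1.0 is a perfect ranking and 0.5 is random.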
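A sketch of AUC using its probabilistic interpretation: count correctly ordered (positive, negative) pairs, with ties counting as half. It is O(P·N), which is fine for illustration; the labels are assumed binary with both classes present.

```python
def auc_pairwise(scores, labels):
    """Probability that a random positive outscores a random negative (ties = 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    correct = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                correct += 1.0
            elif p == n:
                correct += 0.5
    return correct / (len(pos) * len(neg))

# Positives 0.9, 0.7 vs negatives 0.8, 0.6: 3 of 4 pairs are ordered correctly.
print(auc_pairwise([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))  # 0.75
```

This gives the same value as the trapezoidal area under the ROC curve built from the same scores.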

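@MAP:Mean Average Precision:the mean of the average precision (AP) over all users or queries, where AP averages the precision at each rank at which a relevant item appears.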
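A sketch of AP and MAP. It assumes AP is normalized by the total number of relevant items, so relevant items never retrieved contribute 0; some definitions divide by the number of relevant items retrieved instead.

```python
def average_precision(ranked, relevant):
    """Sum of precision@i at each relevant rank i, divided by the number of relevant items."""
    relevant = set(relevant)
    hits = 0
    precision_sum = 0.0
    for i, item in enumerate(ranked, start=1):
        if item in relevant:
            hits += 1
            precision_sum += hits / i  # precision at cutoff i
    return precision_sum / len(relevant) if relevant else 0.0

def mean_average_precision(ranked_lists, relevant_sets):
    """Mean of AP over all users or queries."""
    aps = [average_precision(r, s) for r, s in zip(ranked_lists, relevant_sets)]
    return sum(aps) / len(aps)

# AP = (1/1 + 2/3) / 2 = 5/6 and AP = (1/2) / 1 = 1/2, so MAP = 2/3.
print(mean_average_precision([["a", "b", "c", "d"], ["x", "y", "z"]], [{"a", "c"}, {"y"}]))
```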

@MRR:Mean Reciprocal Rank:the mean, over all users or queries, of the reciprocal rank (1/rank) of the first relevant item.
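A sketch of MRR, assuming a query with no relevant item in its list contributes 0.

```python
def mean_reciprocal_rank(ranked_lists, relevant_sets):
    """Average of 1/rank of the first relevant item per query (0 if none is found)."""
    total = 0.0
    for ranked, relevant in zip(ranked_lists, relevant_sets):
        for i, item in enumerate(ranked, start=1):
            if item in relevant:
                total += 1.0 / i
                break  # only the first relevant item counts
    return total / len(ranked_lists)

# First relevant at rank 2, rank 1, and never: (1/2 + 1 + 0) / 3 = 0.5.
print(mean_reciprocal_rank([["a", "b", "c"], ["x", "y", "z"], ["p", "q"]], [{"b"}, {"x"}, {"r"}]))
```

MRR only looks at the first hit, so it suits tasks where the user needs one good answer.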

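Reference: "Learning to Rank for IR evaluation metrics: MAP, NDCG, MRR"; "What are the respective pros and cons of precision, recall, F1, ROC and AUC?"; "An introduction to ROC and AUC, and how to compute AUC".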

2019-01-18:

Average precision approximates the area under the precision-recall curve. Ref: Average Precision; PR Curve.
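Under the usual definition (assumed here, since the note gives no formula), let $P(k)$ be the precision at cutoff $k$, $\Delta r(k)$ the change in recall from cutoff $k-1$ to $k$, and $R$ the number of relevant items. AP is then a step-wise sum that approximates the integral of precision over recall:

$$
\mathrm{AP} = \sum_{k=1}^{n} P(k)\,\Delta r(k) \approx \int_{0}^{1} p(r)\,dr,
\qquad
\Delta r(k) = \begin{cases} 1/R & \text{if the item at rank } k \text{ is relevant} \\ 0 & \text{otherwise} \end{cases}
$$

Because $\Delta r(k)$ is nonzero only at relevant ranks, the sum reduces to the mean of the precision values at those ranks.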