Typical Loss

-

Mean Squared Error

-

Cross Entropy Loss

- binary

- multi-class

- +softmax

MSE

-

(loss = sum[y-(xw+b)]^2)

-

(L_{2-norm} = ||y-(xw+b)||_2)

-

(loss = norm(y-(xw+b))^2)

Derivative

-

(loss = sum[y-f_ heta(x)]^2)

-

(frac{ abla ext{loss}}{ abla{ heta}}=2sum{[y-f_ heta(x)]}*frac{ abla{f_ heta{(x)}}}{ abla{ heta}})

MSE Gradient

import tensorflow as tf

x = tf.random.normal([2, 4])

w = tf.random.normal([4, 3])

b = tf.zeros([3])

y = tf.constant([2, 0])

with tf.GradientTape() as tape:

tape.watch([w, b])

prob = tf.nn.softmax(x @ w + b, axis=1)

loss = tf.reduce_mean(tf.losses.MSE(tf.one_hot(y, depth=3), prob))

grads = tape.gradient(loss, [w, b])

grads[0]

<tf.Tensor: id=92, shape=(4, 3), dtype=float32, numpy=

array([[ 0.01156707, -0.00927749, -0.00228957],

[ 0.03556816, -0.03894382, 0.00337564],

[-0.02537526, 0.01924876, 0.00612648],

[-0.0074787 , 0.00161515, 0.00586352]], dtype=float32)>

grads[1]

<tf.Tensor: id=90, shape=(3,), dtype=float32, numpy=array([-0.01552947, 0.01993286, -0.00440337], dtype=float32)>

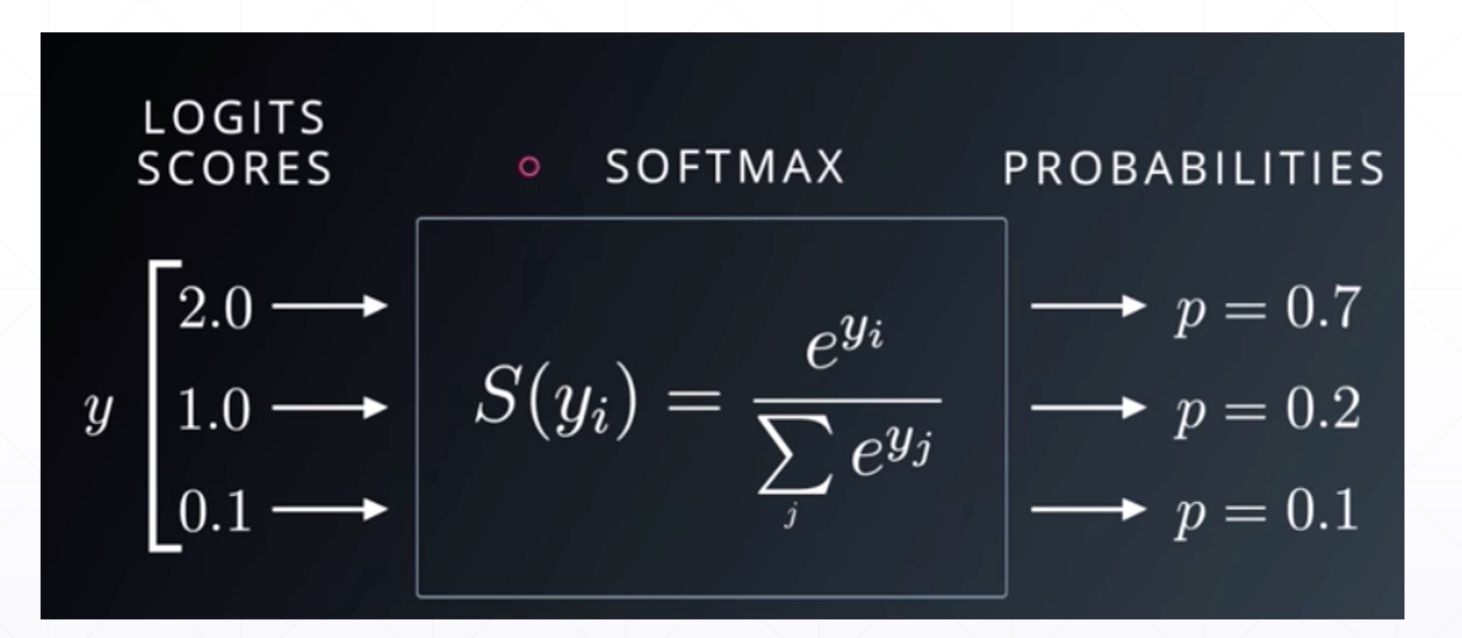

Softmax

-

soft version of max

-

大的越来越大,小的越来越小、越密集

Derivative

[p_i = frac{e^{a_i}}{sum_{k=1}^Ne^{a_k}}

]

- i=j

[frac{partial{p_i}}{partial{a_j}}=frac{partial{frac{e^{a_i}}{sum_{k=1}^Ne^{a_k}}}}{{partial{a_j}}} = p_i(1-p_j)

]

- (i eq{j})

[frac{partial{p_i}}{partial{a_j}}=frac{partial{frac{e^{a_i}}{sum_{k=1}^Ne^{a_k}}}}{{partial{a_j}}} = -p_j*p_i

]

x = tf.random.normal([2, 4])

w = tf.random.normal([4, 3])

b = tf.zeros([3])

y = tf.constant([2, 0])

with tf.GradientTape() as tape:

tape.watch([w, b])

logits =x @ w + b

loss = tf.reduce_mean(

tf.losses.categorical_crossentropy(tf.one_hot(y, depth=3),

logits,

from_logits=True))

grads = tape.gradient(loss, [w, b])

grads[0]

<tf.Tensor: id=226, shape=(4, 3), dtype=float32, numpy=

array([[-0.38076094, 0.33844548, 0.04231545],

[-1.0262716 , -0.6730384 , 1.69931 ],

[ 0.20613424, -0.50421923, 0.298085 ],

[ 0.5800004 , -0.22329211, -0.35670823]], dtype=float32)>

grads[1]

<tf.Tensor: id=224, shape=(3,), dtype=float32, numpy=array([-0.3719653 , 0.53269935, -0.16073406], dtype=float32)>