Outline

- What's Gradient
- What does it mean
- How to Search
- AutoGrad

What's Gradient

-

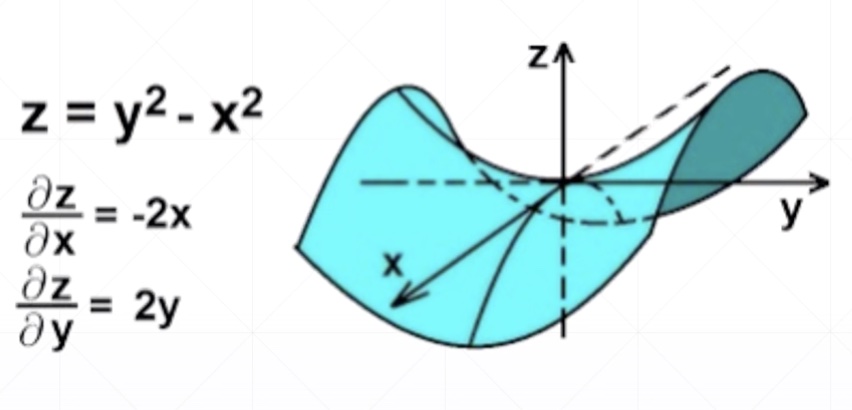

导数,derivative,抽象表达

-

偏微分,partial derivative,沿着某个具体的轴运动

-

梯度,gradient,向量

\[
\nabla f = \left(\frac{\partial f}{\partial x_1}; \frac{\partial f}{\partial x_2}; \cdots; \frac{\partial f}{\partial x_n}\right)
\]
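To make the definition concrete, here is a minimal sketch that approximates each partial derivative with a central difference and collects the results into a gradient vector. The example function `f`, the helper `numerical_gradient`, and the step size `h` are illustrative assumptions, not part of the original notes.

```python
def numerical_gradient(f, point, h=1e-5):
    """Approximate the gradient of f at point using central differences."""
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h   # move a small step along axis i only
        backward[i] -= h
        grad.append((f(forward) - f(backward)) / (2 * h))
    return grad


def f(p):
    # Hypothetical example function: f(x1, x2) = x1^2 + 3 * x2
    x1, x2 = p
    return x1 ** 2 + 3 * x2


grad = numerical_gradient(f, [1.0, 2.0])
print(grad)  # close to the analytic gradient (2 * x1, 3) = (2, 3)
```

Each entry of the result is one partial derivative, so the list as a whole plays the role of \(\nabla f\) at the chosen point.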

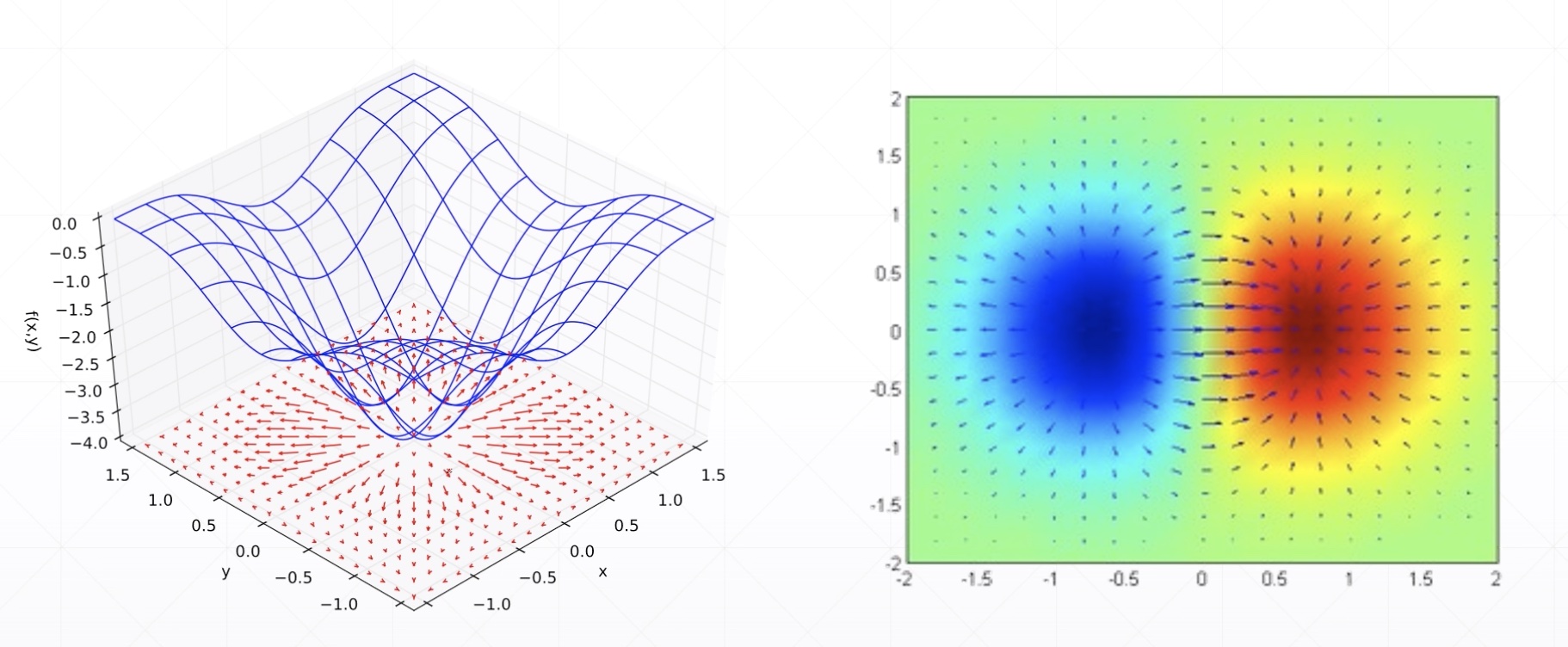

What does it mean?

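- The direction of each arrow is the direction of the gradient, which is the direction in which the function increases fastest
- The length (norm) of each arrow is the magnitude of the gradient, that is, how fast the function increases in that direction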

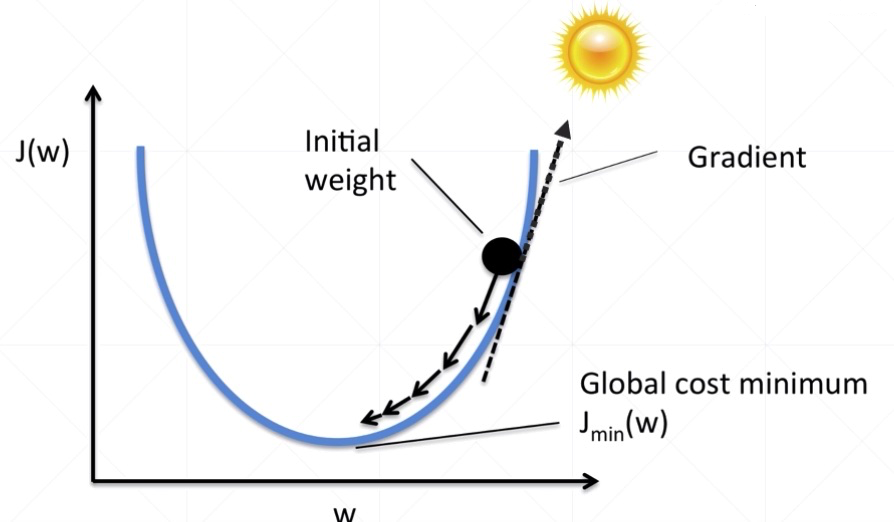
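As a small worked example of direction and magnitude, take the bowl-shaped function below (an assumed example, not taken from the figures) and evaluate its gradient at two points:

```latex
f(x_1, x_2) = x_1^2 + x_2^2
\qquad
\nabla f = (2x_1;\ 2x_2)

\text{At } (1, 2):\quad \nabla f = (2;\ 4), \qquad \lVert \nabla f \rVert = \sqrt{20} \approx 4.47

\text{At } (0.5, 1):\quad \nabla f = (1;\ 2), \qquad \lVert \nabla f \rVert = \sqrt{5} \approx 2.24
```

Both arrows point away from the minimum at the origin, and the arrow is shorter at the point closer to the minimum, where the function changes more slowly.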

How to search

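- Search in the direction opposite to the gradient, because the gradient itself points toward increasing values of the function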

For instance

\[
\theta_{t+1} = \theta_t - \alpha_t \nabla f(\theta_t)
\]
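The update rule can be sketched in a few lines of plain Python. The objective \(f(\theta) = (\theta - 3)^2\), the starting point, and the constant learning rate are assumptions chosen only for illustration.

```python
def grad_f(theta):
    # Gradient of the hypothetical objective f(theta) = (theta - 3)^2
    return 2.0 * (theta - 3.0)


theta = 0.0  # initial guess theta_0
alpha = 0.1  # learning rate alpha_t, held constant here for simplicity

for t in range(100):
    # theta_{t+1} = theta_t - alpha_t * grad f(theta_t)
    theta = theta - alpha * grad_f(theta)

print(theta)  # approaches the minimizer theta = 3
```

With this learning rate, each step shrinks the distance to the minimizer by a constant factor. A learning rate that is too large would make the iterates overshoot instead.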

AutoGrad

- with tf.GradientTape() as tape:
    - Build the computation graph
    - \(loss = f_\theta(x)\)
- [w_grad] = tape.gradient(loss, [w])
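The record-then-differentiate pattern above can be illustrated with a toy tape in pure Python. This is only a sketch of the idea and is not how TensorFlow is implemented: the classes `ToyTape` and `Value` are hypothetical, and only multiplication is supported.

```python
class ToyTape:
    """Toy recorder: remembers multiplications performed while it is active."""

    active = None  # the tape currently recording, if any

    def __init__(self):
        self.ops = []  # recorded (output, left, right) triples

    def __enter__(self):
        ToyTape.active = self
        return self

    def __exit__(self, *exc):
        ToyTape.active = None
        return False

    def gradient(self, target, source):
        # Walk the recorded operations backwards, applying the chain rule.
        grads = {id(target): 1.0}
        for out, a, b in reversed(self.ops):
            g = grads.get(id(out))
            if g is None:
                continue  # this operation does not feed into target
            grads[id(a)] = grads.get(id(a), 0.0) + g * b.value
            grads[id(b)] = grads.get(id(b), 0.0) + g * a.value
        # None means no recorded path connects source to target.
        return grads.get(id(source))


class Value:
    def __init__(self, value):
        self.value = value

    def __mul__(self, other):
        out = Value(self.value * other.value)
        if ToyTape.active is not None:
            ToyTape.active.ops.append((out, self, other))
        return out


w = Value(1.0)
x = Value(2.0)
y = x * w  # computed before any tape is recording

with ToyTape() as tape:
    y2 = x * w  # recorded

grad1 = tape.gradient(y, w)   # None: y was never recorded
grad2 = tape.gradient(y2, w)  # 2.0: d(x * w) / dw = x
print(grad1, grad2)
```

The point of the sketch is that a gradient can only be computed for results produced while the tape was recording.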

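import tensorflow as tf

w = tf.constant(1.)
x = tf.constant(2.)
y = x * w  # computed before the tape starts recording

with tf.GradientTape() as tape:
    tape.watch([w])  # constants are not watched automatically
    y2 = x * w

# y was computed outside the tape, so there is no recorded path from w to y
grad1 = tape.gradient(y, [w])
grad1

[None]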

with tf.GradientTape() as tape:
    tape.watch([w])
    y2 = x * w

# y2 was computed inside the tape, so its gradient with respect to w is x
grad2 = tape.gradient(y2, [w])
grad2

[<tf.Tensor: id=30, shape=(), dtype=float32, numpy=2.0>]

try:
    # The tape above is not persistent, so a second gradient() call fails
    grad2 = tape.gradient(y2, [w])
except RuntimeError as e:
    print(e)

GradientTape.gradient can only be called once on non-persistent tapes.

- Use a persistent tape to call gradient() more than once

with tf.GradientTape(persistent=True) as tape:
    tape.watch([w])
    y2 = x * w

grad2 = tape.gradient(y2, [w])
grad2

[<tf.Tensor: id=35, shape=(), dtype=float32, numpy=2.0>]

grad2 = tape.gradient(y2, [w])  # a second call now succeeds
grad2

[<tf.Tensor: id=39, shape=(), dtype=float32, numpy=2.0>]

\(2^{nd}\)-order

- \(y = xw + b\)
- \(\frac{\partial y}{\partial w} = x\)
- \(\frac{\partial^2 y}{\partial w^2} = \frac{\partial y'}{\partial w} = \frac{\partial x}{\partial w} = 0\), which TensorFlow returns as None because \(x\) does not depend on \(w\)

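b = tf.constant(3.)  # assumed value; b is not defined earlier in these notes

with tf.GradientTape() as t1:
    t1.watch([w, b])  # constants must be watched explicitly
    with tf.GradientTape() as t2:
        t2.watch([w, b])
        y = x * w + b
    # Computed inside t1 so that the outer tape records the first derivative
    dy_dw, dy_db = t2.gradient(y, [w, b])

d2y_dw2 = t1.gradient(dy_dw, w)  # None: dy_dw = x does not depend on w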
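As a sanity check on the second-order result, the following sketch estimates second derivatives with a central difference in plain Python. The helper `second_derivative`, the values of `x` and `b`, and the contrasting quadratic function are assumptions added for illustration. The linear case corresponds to the None reported above, which is mathematically a zero second derivative.

```python
def second_derivative(f, w, h=1e-3):
    """Central-difference approximation of the second derivative of f at w."""
    return (f(w + h) - 2.0 * f(w) + f(w - h)) / (h * h)


x, b = 2.0, 3.0  # assumed example values


def linear(w):
    # y = x * w + b, the function used in the notes
    return x * w + b


def quadratic(w):
    # Hypothetical contrast case: y = x * w^2 + b
    return x * w ** 2 + b


d2_linear = second_derivative(linear, 1.0)
d2_quadratic = second_derivative(quadratic, 1.0)
print(d2_linear)     # approximately 0
print(d2_quadratic)  # approximately 2 * x = 4
```

A function that is linear in w has no curvature, so nested tapes only yield a non-trivial second-order gradient when the first derivative still depends on w, as in the quadratic case.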