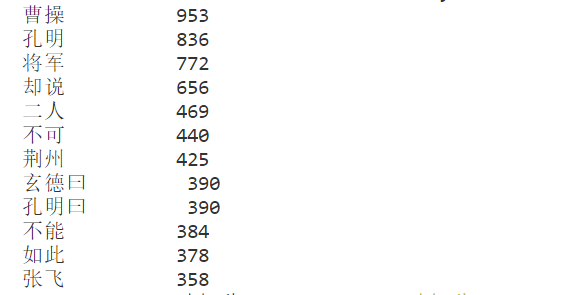

词频统计文本:《三国演义》

底图:

代码:

1 import jieba 2 txt = open("D:/桌面/pytest/threekingdoms.txt","r",encoding = "utf-8").read() #打开文本 3 words = jieba.lcut(txt) #分词 4 counts = {} 5 for word in words: 6 if len(word) == 1: 7 continue 8 else: 9 counts[word] = counts.get(word,0)+1 #统计 10 items = list(counts.items()) #此处得到类似 [('曹操', '953'), ('孔明', '836')] <-字典中的键和值组成了这样的列表 11 items.sort(key= lambda x:x[1],reverse=True) #排序。其中lambda x:x[1] 即将词频次数进行排序(Ture,从大到小) 12 elem = [] 13 for i in range(15): 14 word ,count = items[i] 15 elem.append(word) 16 print("{:<10}{:>5}".format(word,count)) #输出频率最高的前15个词 17 18 19 import wordcloud 20 import imageio 21 mk = imageio.imread("D:/桌面/pytest/picture.jpg") #选择底图 22 23 w = wordcloud.WordCloud(font_path="msyh.ttc",mask=mk,background_color="white",height=800,width=1000) #设置词云参数,注意mask匹配底图 24 w.generate(" ".join(elem)) 25 w.to_file("beautiful.png")

效果如下:

【学习过程中 发现scipy.misc中已经没有imread了(但老师依旧教from scipy.misc import imread),通过百度发现用imageio来替代。】