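0. Contents

1. References

2. pool_connections defaults to 10; one pool per site host

(4) Analysis

host A >> host B >> host A page2 >> host A page3

With only one pool (host) retained, the TCP source ports show that a connection is not reused until the fourth get.

3. pool_maxsize defaults to 10; one pool per site host, and that pool can keep several connections to the same host to serve multiple threads

(4) Analysis

When the threads start, the number of connections to a given host is not limited by pool_maxsize, but afterwards only min(number of threads, pool_maxsize) connections are kept.

Later requests from the threads (application layer) do not care which specific connection (transport-layer source port) they end up using.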

1. References

Requests' secret: pool_connections and pool_maxsize

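Python - 体验urllib3 -- HTTP连接池的应用 (Python: trying out urllib3, an application of HTTP connection pooling)

Packets captured with wireshark:

Every request to http://ent.qq.com/a/20111216/******.htm uses src port 13136, which shows that the port, and therefore the connection, is reused.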

2. pool_connections defaults to 10; one pool per site host

(1) Code

    #!/usr/bin/env python
    # -*- coding: UTF-8 -*-
    import time                    # used by the threaded example in section 3
    import logging
    from threading import Thread   # used by the threaded example in section 3

    import requests

    # Print everything at DEBUG level so urllib3's connection-pool messages show up.
    logging.basicConfig()
    logging.getLogger().setLevel(logging.DEBUG)
    # The log output below uses the "urllib3" logger name (urllib3 is not vendored here).
    requests_log = logging.getLogger("urllib3")
    requests_log.setLevel(logging.DEBUG)
    requests_log.propagate = True

    # host A
    url_sohu_1 = 'http://www.sohu.com/sohu/1.html'
    url_sohu_2 = 'http://www.sohu.com/sohu/2.html'
    url_sohu_3 = 'http://www.sohu.com/sohu/3.html'
    url_sohu_4 = 'http://www.sohu.com/sohu/4.html'
    url_sohu_5 = 'http://www.sohu.com/sohu/5.html'
    url_sohu_6 = 'http://www.sohu.com/sohu/6.html'

    # host B
    url_news_1 = 'http://news.163.com/air/'
    url_news_2 = 'http://news.163.com/domestic/'
    url_news_3 = 'http://news.163.com/photo/'
    url_news_4 = 'http://news.163.com/shehui/'
    url_news_5 = 'http://news.163.com/uav/5/'
    url_news_6 = 'http://news.163.com/world/6/'

    # Keep only one per-host pool in the session.
    s = requests.Session()
    s.mount('http://', requests.adapters.HTTPAdapter(pool_connections=1))

    s.get(url_sohu_1)   # host A
    s.get(url_news_1)   # host B
    s.get(url_sohu_2)   # host A page2
    s.get(url_sohu_3)   # host A page3

(2) Log output

    DEBUG:urllib3.connectionpool:Starting new HTTP connection (1): www.sohu.com    #host A
    DEBUG:urllib3.connectionpool:http://www.sohu.com:80 "GET /sohu/1.html HTTP/1.1" 404 None
    DEBUG:urllib3.connectionpool:Starting new HTTP connection (1): news.163.com    #host B
    DEBUG:urllib3.connectionpool:http://news.163.com:80 "GET /air/ HTTP/1.1" 200 None
    DEBUG:urllib3.connectionpool:Starting new HTTP connection (1): www.sohu.com    #host A
    DEBUG:urllib3.connectionpool:http://www.sohu.com:80 "GET /sohu/2.html HTTP/1.1" 404 None    #host A page2
    DEBUG:urllib3.connectionpool:http://www.sohu.com:80 "GET /sohu/3.html HTTP/1.1" 404 None    #host A page3

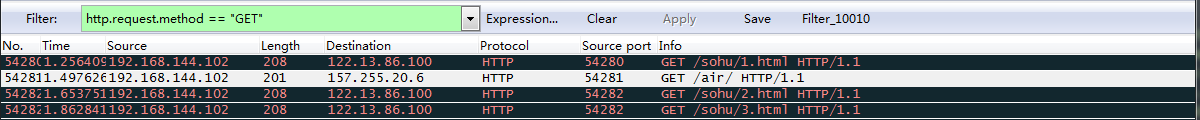

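(3) wireshark capture

How can this be filtered for HTTPS, where the requests themselves are encrypted? One option is to filter on TCP SYN packets: ping m.10010.com to get the server's IP address, then apply the display filter tcp.flags == 0x0002 and ip.dst == 157.255.128.111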
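The SYN-only filter above can also be assembled from a hostname in code. This is a minimal sketch: `resolve_ipv4` and `syn_only_filter` are hypothetical helper names introduced here for illustration, and the filter string itself is the one given in the text.

```python
import socket


def resolve_ipv4(hostname):
    """Resolve a hostname to one IPv4 address (needs working DNS, like ping does)."""
    return socket.gethostbyname(hostname)


def syn_only_filter(dst_ip):
    """Build a wireshark display filter for SYN-only packets sent to dst_ip.

    tcp.flags == 0x0002 means that only the SYN bit is set, which is the first
    packet of a new TCP connection, so every match is one newly opened connection.
    """
    return "tcp.flags == 0x0002 and ip.dst == {}".format(dst_ip)


if __name__ == "__main__":
    # IP address taken from the text; use resolve_ipv4("m.10010.com") to look it up live.
    print(syn_only_filter("157.255.128.111"))
```

Counting the packets that match this filter gives the number of connections that were opened, which works for HTTP and HTTPS alike because it does not look at the payload.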

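(4) Analysis

host A >> host B >> host A page2 >> host A page3

Only one pool (host) is retained, so the request to host B evicts host A's pool and the third get has to open a new connection to host A. The TCP source ports confirm that a connection is not reused until the fourth get.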
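The eviction behaviour observed here can be reproduced without any network traffic. The following is a toy model only, assuming sequential requests over keep-alive connections and a least-recently-used cache of per-host pools; `HostPoolCache` and `simulate` are hypothetical names, not the actual urllib3 implementation.

```python
from collections import OrderedDict


class HostPoolCache:
    """Toy model: keep at most `pool_connections` per-host pools, evicting the least recently used."""

    def __init__(self, pool_connections):
        self.pool_connections = pool_connections
        self._pools = OrderedDict()  # host -> placeholder for that host's pool

    def get(self, host):
        """Simulate one request; return True if an existing connection is reused."""
        reused = host in self._pools
        if reused:
            # Mark this host's pool as the most recently used one.
            self._pools.move_to_end(host)
        else:
            # A new pool (and a new connection) is created for this host.
            self._pools[host] = None
            if len(self._pools) > self.pool_connections:
                # Drop the least recently used pool together with its connection.
                self._pools.popitem(last=False)
        return reused


def simulate(hosts, pool_connections):
    """Return, for each request in order, whether it reused a connection."""
    cache = HostPoolCache(pool_connections)
    return [cache.get(host) for host in hosts]


# host A >> host B >> host A page2 >> host A page3
sequence = ["www.sohu.com", "news.163.com", "www.sohu.com", "www.sohu.com"]
print(simulate(sequence, pool_connections=1))  # [False, False, False, True]
print(simulate(sequence, pool_connections=2))  # [False, False, True, True]
```

With room for two pools the third get would already reuse host A's connection, which is why the experiment sets pool_connections=1 to make the eviction visible.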

3. pool_maxsize defaults to 10; one pool per site host, and that pool can keep several connections to the same host to serve multiple threads

(1) Code

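    # Reuses the imports, logging setup and URL constants from the code in section 2.

    def thread_get(url):
        s.get(url)

    def thread_get_wait_3s(url):
        # Second request after 3 seconds, to see which connection gets reused.
        s.get(url)
        time.sleep(3)
        s.get(url)

    def thread_get_wait_5s(url):
        # Second request after 5 seconds, to see which connection gets reused.
        s.get(url)
        time.sleep(5)
        s.get(url)

    # Each per-host pool may keep at most 2 idle connections.
    s = requests.Session()
    s.mount('http://', requests.adapters.HTTPAdapter(pool_maxsize=2))

    t1 = Thread(target=thread_get_wait_5s, args=(url_sohu_1,))
    t2 = Thread(target=thread_get, args=(url_news_1,))
    t3 = Thread(target=thread_get_wait_3s, args=(url_sohu_2,))
    t4 = Thread(target=thread_get_wait_5s, args=(url_sohu_3,))

    t1.start()
    t2.start()
    t3.start()
    t4.start()

    t1.join()
    t2.join()
    t3.join()
    t4.join()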

(2) Log output

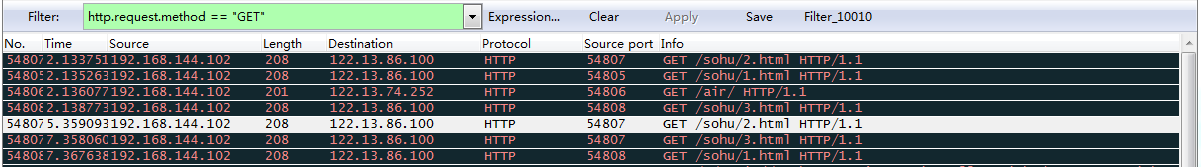

    DEBUG:urllib3.connectionpool:Starting new HTTP connection (1): www.sohu.com    #pool_sohu_connection_1_port_54805
    DEBUG:urllib3.connectionpool:Starting new HTTP connection (1): news.163.com    #pool_163_connection_1_port_54806
    DEBUG:urllib3.connectionpool:Starting new HTTP connection (2): www.sohu.com    #pool_sohu_connection_2_port_54807
    DEBUG:urllib3.connectionpool:Starting new HTTP connection (3): www.sohu.com    #pool_sohu_connection_3_port_54808
    DEBUG:urllib3.connectionpool:http://news.163.com:80 "GET /air/ HTTP/1.1" 200 None
    DEBUG:urllib3.connectionpool:http://www.sohu.com:80 "GET /sohu/3.html HTTP/1.1" 404 None
    DEBUG:urllib3.connectionpool:http://www.sohu.com:80 "GET /sohu/2.html HTTP/1.1" 404 None
    DEBUG:urllib3.connectionpool:http://www.sohu.com:80 "GET /sohu/1.html HTTP/1.1" 404 None
    WARNING:urllib3.connectionpool:Connection pool is full, discarding connection: www.sohu.com    #pool_sohu_connection_1_port_54805 discarded? Host sohu could open 3 connections at first, but in the end only 2 can be kept?
    DEBUG:urllib3.connectionpool:http://www.sohu.com:80 "GET /sohu/2.html HTTP/1.1" 404 None    #pool_sohu_connection_2_port_54807: 3 seconds later, sohu/2 reuses the port originally used by sohu/2
    DEBUG:urllib3.connectionpool:http://www.sohu.com:80 "GET /sohu/3.html HTTP/1.1" 404 None    #pool_sohu_connection_2_port_54807: 5 seconds later, sohu/3 reuses the port originally used by sohu/2
    DEBUG:urllib3.connectionpool:http://www.sohu.com:80 "GET /sohu/1.html HTTP/1.1" 404 None    #pool_sohu_connection_3_port_54808: 5 seconds later, sohu/1 reuses the port originally used by sohu/3

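(3) wireshark capture

(4) Analysis

When the threads start, the number of connections to a given host is not limited by pool_maxsize: three connections to sohu are opened even though pool_maxsize is 2. Afterwards, however, only min(number of threads, pool_maxsize) connections are kept; the extra one is discarded when it is returned to a full pool.

Later requests from the threads (application layer) do not care which specific connection (transport-layer source port) they end up using.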
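The sequence in the log can be replayed with a small model of a single per-host pool. This is a sketch under stated assumptions, not urllib3's actual code: the pool is taken to be a non-blocking last-in-first-out queue of idle connections, and `ToyHostPool`, `checkout` and `checkin` are hypothetical names.

```python
import queue


class ToyHostPool:
    """Toy model of one per-host pool that never blocks the caller."""

    def __init__(self, pool_maxsize):
        self._idle = queue.LifoQueue(maxsize=pool_maxsize)
        self.created = 0       # how many connections were opened in total
        self.discarded = []    # connections dropped because the pool was full

    def checkout(self):
        """Take the most recently returned idle connection, or open a new one."""
        try:
            return self._idle.get_nowait()
        except queue.Empty:
            self.created += 1
            return "conn-{}".format(self.created)

    def checkin(self, conn):
        """Return a connection; if the pool is already full, discard it."""
        try:
            self._idle.put_nowait(conn)
        except queue.Full:
            self.discarded.append(conn)


pool = ToyHostPool(pool_maxsize=2)
# Three threads hit the same host at once: nothing is idle, so three connections are opened.
c1, c2, c3 = pool.checkout(), pool.checkout(), pool.checkout()
# The responses finish in the order seen in the log: sohu/3, sohu/2, sohu/1.
for conn in (c3, c2, c1):
    pool.checkin(conn)
print(pool.created, pool.discarded)  # 3 ['conn-1']
# The next request gets whichever connection was returned last, not "its own" one.
print(pool.checkout())  # conn-2
```

In this model the first connection is the one discarded because it is returned last, which matches the guess noted in the log. If the burst of extra connections is unwanted, `requests.adapters.HTTPAdapter` also accepts `pool_block=True`, which should make callers wait for a free connection instead of opening more than pool_maxsize.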