/etc/hosts

192.168.153.147 Hadoop-host

192.168.153.146 Hadoopnode1

192.168.153.145 Hadoopnode2

::1 localhost
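A quick way to confirm that each node's hosts file contains all of the cluster names is a small check like the one below. This is only a sketch: `check_hosts` is a hypothetical helper name (not part of Hadoop), and it assumes the usual hosts format of an address followed by one or more names.

```shell
# Report which expected hostnames are missing from a hosts-format file.
# Usage: check_hosts FILE NAME...
# check_hosts is a hypothetical helper, not a Hadoop or system command.
check_hosts() {
  file=$1
  shift
  missing=0
  for name in "$@"; do
    # Skip comment lines, ignore trailing comments, and compare names
    # case-insensitively (field 1 is the address, so start at field 2).
    if ! awk -v n="$name" '
        /^[[:space:]]*#/ { next }
        {
          for (i = 2; i <= NF; i++) {
            if ($i ~ /^#/) break
            if (tolower($i) == tolower(n)) found = 1
          }
        }
        END { exit !found }' "$file"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_hosts /etc/hosts Hadoop-host Hadoopnode1 Hadoopnode2` prints nothing and returns 0 when all three entries are present.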

/etc/profile

export HADOOP_HOME=/opt/hadoop

export PATH=$PATH:$HADOOP_HOME/bin
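After editing /etc/profile, it can be useful to confirm that the new directory really is on the PATH of the current shell. The helper below is a minimal sketch; `path_has_dir` is a hypothetical name introduced here for illustration.

```shell
# Succeed if DIR is one of the colon-separated entries of a PATH-style string.
# Usage: path_has_dir DIR [PATH_STRING]   (PATH_STRING defaults to $PATH)
# path_has_dir is a hypothetical helper name.
path_has_dir() {
  case ":${2-$PATH}:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

A typical use would be `. /etc/profile && path_has_dir "$HADOOP_HOME/bin" && echo "hadoop is on PATH"`.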

Set the key IP address configuration for the distributed cluster:

masters file:

192.168.153.147

slaves file:

192.168.153.147

192.168.153.146

192.168.153.145

Distribute the Hadoop configuration to the hadoopnode1 node:

scp -r /opt/hadoop root@hadoopnode1:/opt/
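The slaves file lists more than one worker, so the same copy can be repeated for each of them. The sketch below assumes a slaves-style file with one address per line and prints the scp commands by default; `distribute_hadoop` and the `DO_COPY` variable are hypothetical names introduced here, not Hadoop features.

```shell
# Print (or, with DO_COPY=1, execute) one scp command per node in a slaves-style file.
# Usage: distribute_hadoop SLAVES_FILE LOCAL_ADDR
# distribute_hadoop and DO_COPY are hypothetical names used for illustration.
distribute_hadoop() {
  while IFS= read -r node || [ -n "$node" ]; do
    # Skip blank lines and the machine we are copying from.
    [ -z "$node" ] && continue
    [ "$node" = "$2" ] && continue
    if [ "${DO_COPY:-0}" = 1 ]; then
      # Keep scp from consuming the loop's input.
      scp -r /opt/hadoop "root@$node:/opt/" </dev/null
    else
      echo "scp -r /opt/hadoop root@$node:/opt/"
    fi
  done < "$1"
}
```

Running `distribute_hadoop slaves 192.168.153.147` first shows what would be copied; setting `DO_COPY=1` performs the copy. Printing by default is a deliberate choice so that a mistyped address is noticed before anything is overwritten.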

Run the following to format the NameNode:

hadoop namenode -format

Hadoop startup command:

start-all.sh
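Once the start script returns, the JDK's `jps` tool lists the running Java processes, which gives a quick check that the daemons came up. The sketch below reads `jps` output and reports any expected daemon that is absent. The daemon names passed in are an assumption that depends on the Hadoop version and on the node's role, and `check_daemons` is a hypothetical helper name.

```shell
# Read `jps` output on stdin and report expected daemon names that are absent.
# Usage: jps | check_daemons NameNode DataNode
# check_daemons is a hypothetical helper; which daemons to expect is an assumption.
check_daemons() {
  jps_out=$(cat)
  status=0
  for daemon in "$@"; do
    # jps prints "<pid> <name>"; compare the second column exactly so that
    # NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$jps_out" |
        awk -v d="$daemon" '$2 == d { ok = 1 } END { exit !ok }'; then
      echo "not running: $daemon"
      status=1
    fi
  done
  return "$status"
}
```

On the master this might be called as `jps | check_daemons NameNode`, and on a worker as `jps | check_daemons DataNode`.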

Passwordless login

Check whether an SSH key already exists: ls -al ~/.ssh

Master node configuration

1. First go to the user's home directory (cd ~) and list the files with ls -a.

One of the entries is ".ssh", the folder where the keys are stored.

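2. Now generate the key pair: ssh-keygen -t rsa -P ""

(This generates the keys using RSA.) After you press Enter, ssh-keygen prompts for input; just press Enter at each prompt. Without -P "" there are three prompts (file location, passphrase, passphrase confirmation); with -P "" only the file location is asked.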

3. Enter the folder with cd .ssh (once inside, you can run ls -a to list the files).

4. Append the contents of the generated public key id_rsa.pub to authorized_keys (run: cat id_rsa.pub >> authorized_keys).

5. Copy id_rsa.pub from the Hadoop-host machine to Hadoopnode1 (and likewise to Hadoopnode2) so that it can be added to the .ssh/authorized_keys file there:

scp id_rsa.pub Hadoopnode1:/root/.ssh/id_rsa.pub.Hadoop-host

scp id_rsa.pub Hadoopnode2:/root/.ssh/id_rsa.pub.Hadoop-host

6. On Hadoopnode1 (and likewise on Hadoopnode2), from the .ssh directory, append the id_rsa.pub.Hadoop-host file copied from Hadoop-host to .ssh/authorized_keys:

cat id_rsa.pub.Hadoop-host >> authorized_keys
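Running the append in step 6 twice leaves a duplicate line, and sshd in its default strict mode refuses keys when .ssh or authorized_keys is writable by other users. The sketch below appends a key only if it is not already present and then tightens the permissions. `add_authorized_key` is a hypothetical helper name, and the 700/600 modes are the commonly recommended values rather than something stated in these notes.

```shell
# Append a public key to authorized_keys only if it is not already there,
# then restrict permissions on the directory and the file.
# Usage: add_authorized_key PUBKEY_FILE [SSH_DIR]   (SSH_DIR defaults to ~/.ssh)
# add_authorized_key is a hypothetical helper name.
add_authorized_key() {
  pub=$1
  dir=${2:-$HOME/.ssh}
  mkdir -p "$dir"
  touch "$dir/authorized_keys"
  # -F fixed string, -x whole-line match, -f read the pattern from the key file.
  if ! grep -qxFf "$pub" "$dir/authorized_keys"; then
    cat "$pub" >> "$dir/authorized_keys"
  fi
  chmod 700 "$dir"
  chmod 600 "$dir/authorized_keys"
}
```

On a worker node this would be run as `add_authorized_key ~/.ssh/id_rsa.pub.Hadoop-host`, after which `ssh Hadoopnode1` from Hadoop-host should log in without asking for a password.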

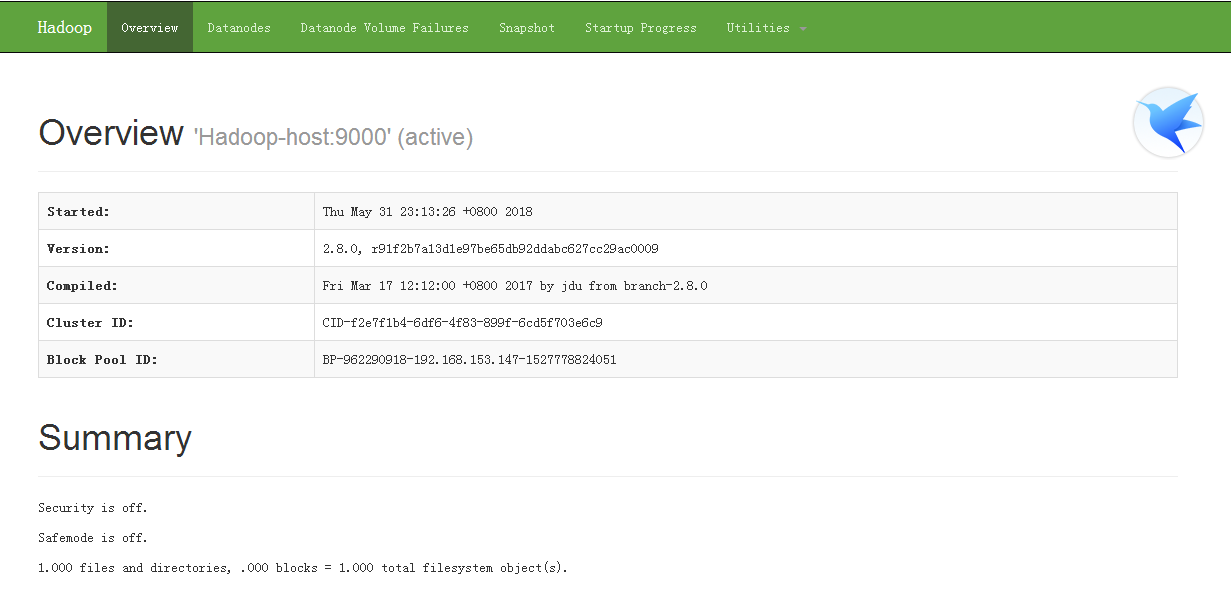

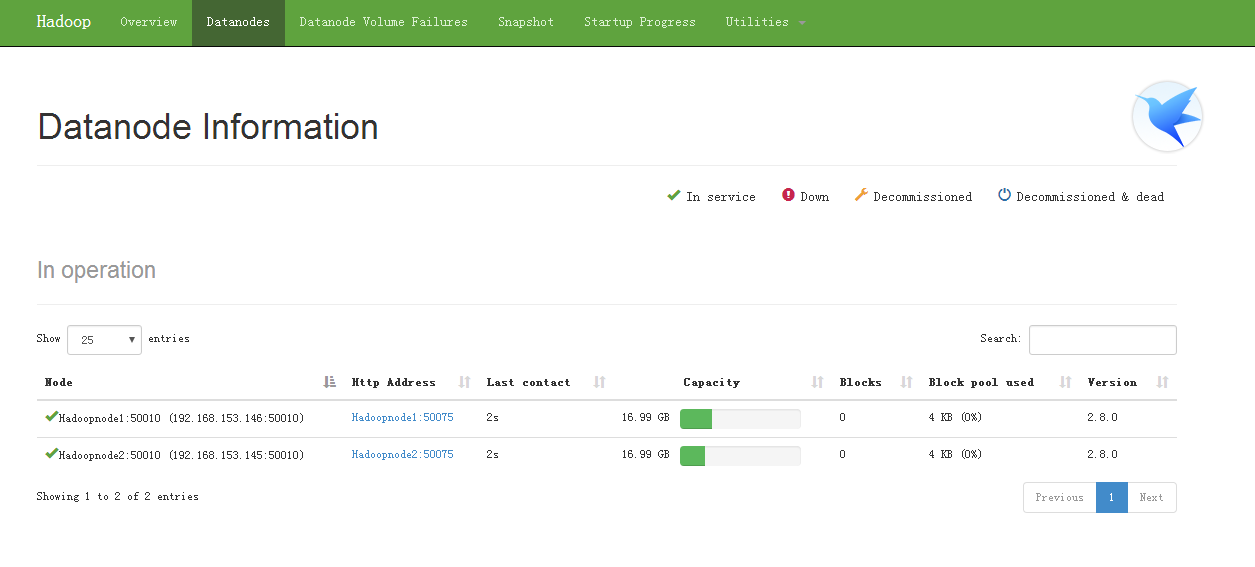

http://192.168.153.147:50070

Screenshots of a successful startup