Hadoop Quick Start

Running Hadoop on a Single Node

Continuing from the previous chapter, first change to the Hadoop root directory.

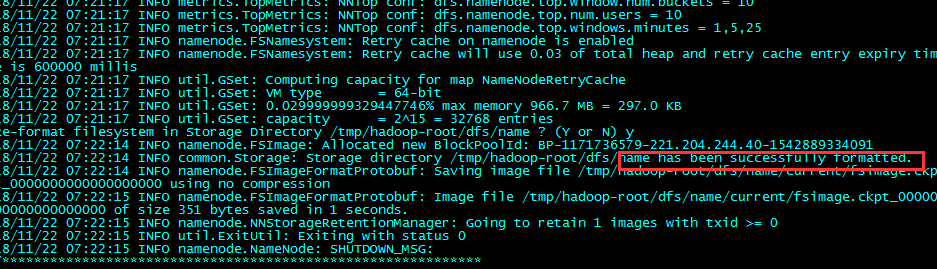

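Formatting the NameNode

Run the bin/hadoop namenode -format command to format the NameNode. (In Hadoop 2.x this form still works but is deprecated in favor of bin/hdfs namenode -format.)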

18/11/22 08:02:18 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

18/11/22 08:02:18 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-eeda45a6-6cd5-4121-9a73-998e2a00a91b

18/11/22 08:02:21 INFO namenode.FSNamesystem: No KeyProvider found.

18/11/22 08:02:21 INFO namenode.FSNamesystem: fsLock is fair:true

18/11/22 08:02:21 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

18/11/22 08:02:21 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

18/11/22 08:02:21 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

18/11/22 08:02:21 INFO blockmanagement.BlockManager: The block deletion will start around 2018 Nov 22 08:02:21

18/11/22 08:02:21 INFO util.GSet: Computing capacity for map BlocksMap

18/11/22 08:02:21 INFO util.GSet: VM type = 64-bit

18/11/22 08:02:21 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB

18/11/22 08:02:21 INFO util.GSet: capacity = 2^21 = 2097152 entries

18/11/22 08:02:21 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

18/11/22 08:02:21 INFO blockmanagement.BlockManager: defaultReplication = 1

18/11/22 08:02:21 INFO blockmanagement.BlockManager: maxReplication = 512

18/11/22 08:02:21 INFO blockmanagement.BlockManager: minReplication = 1

18/11/22 08:02:21 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

18/11/22 08:02:21 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

18/11/22 08:02:21 INFO blockmanagement.BlockManager: encryptDataTransfer = false

18/11/22 08:02:21 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

18/11/22 08:02:21 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

18/11/22 08:02:21 INFO namenode.FSNamesystem: supergroup = supergroup

18/11/22 08:02:21 INFO namenode.FSNamesystem: isPermissionEnabled = true

18/11/22 08:02:21 INFO namenode.FSNamesystem: HA Enabled: false

18/11/22 08:02:21 INFO namenode.FSNamesystem: Append Enabled: true

18/11/22 08:02:22 INFO util.GSet: Computing capacity for map INodeMap

18/11/22 08:02:22 INFO util.GSet: VM type = 64-bit

18/11/22 08:02:22 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB

18/11/22 08:02:22 INFO util.GSet: capacity = 2^20 = 1048576 entries

18/11/22 08:02:22 INFO namenode.FSDirectory: ACLs enabled? false

18/11/22 08:02:22 INFO namenode.FSDirectory: XAttrs enabled? true

18/11/22 08:02:22 INFO namenode.FSDirectory: Maximum size of an xattr: 16384

18/11/22 08:02:22 INFO namenode.NameNode: Caching file names occuring more than 10 times

18/11/22 08:02:22 INFO util.GSet: Computing capacity for map cachedBlocks

18/11/22 08:02:22 INFO util.GSet: VM type = 64-bit

18/11/22 08:02:22 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB

18/11/22 08:02:22 INFO util.GSet: capacity = 2^18 = 262144 entries

18/11/22 08:02:22 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

18/11/22 08:02:22 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

18/11/22 08:02:22 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

18/11/22 08:02:22 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

18/11/22 08:02:22 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

18/11/22 08:02:22 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

18/11/22 08:02:22 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

18/11/22 08:02:22 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

18/11/22 08:02:22 INFO util.GSet: Computing capacity for map NameNodeRetryCache

18/11/22 08:02:22 INFO util.GSet: VM type = 64-bit

18/11/22 08:02:22 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB

18/11/22 08:02:22 INFO util.GSet: capacity = 2^15 = 32768 entries

Re-format filesystem in Storage Directory /tmp/hadoop-root/dfs/name ? (Y or N) y

18/11/22 08:02:37 INFO namenode.FSImage: Allocated new BlockPoolId: BP-594298259-192.168.55.128-1542891757196

18/11/22 08:02:37 INFO common.Storage: Storage directory /tmp/hadoop-root/dfs/name has been successfully formatted.

18/11/22 08:02:37 INFO namenode.FSImageFormatProtobuf: Saving image file /tmp/hadoop-root/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

18/11/22 08:02:37 INFO namenode.FSImageFormatProtobuf: Image file /tmp/hadoop-root/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 350 bytes saved in 0 seconds.

18/11/22 08:02:37 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/11/22 08:02:37 INFO util.ExitUtil: Exiting with status 0

18/11/22 08:02:37 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at node1/192.168.55.128

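The output contains "has been successfully formatted", which means the NameNode was formatted successfully. If the storage directory already exists, as it did in this run, the command first asks whether to re-format it; answer Y to continue.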

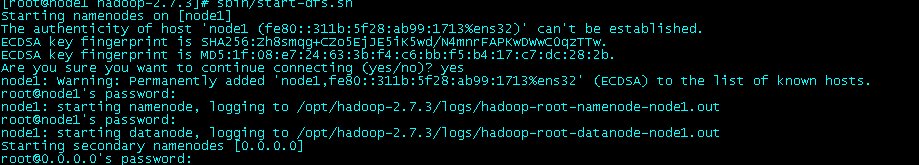
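The success check described above can also be scripted. The following is a minimal sketch, assuming the output of the format command has been saved to a file; the helper name format_succeeded and the file name format.log are hypothetical and not part of Hadoop.

```shell
#!/usr/bin/env bash
# Sketch: decide whether a saved NameNode format log reports success.
# format_succeeded is a hypothetical helper name, not a Hadoop command.
format_succeeded() {
  # $1: path to a file holding the output of the format command.
  # grep -q prints nothing and returns 0 only if the marker text is present.
  grep -q 'has been successfully formatted' "$1"
}

# Example usage, run from the Hadoop root directory
# (format.log is an assumed file name):
#   bin/hadoop namenode -format 2>&1 | tee format.log
#   format_succeeded format.log && echo "NameNode format OK"
```

The last line of a successful run also reports "Exiting with status 0", so checking the command's exit status is a reasonable alternative.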

Starting HDFS

Run the sbin/start-dfs.sh command to start HDFS.

Starting namenodes on [node1]

node1: ssh_exchange_identification: Connection closed by remote host

node1: ssh_exchange_identification: read: Connection reset by peer

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

ECDSA key fingerprint is SHA256:Zh8smqg+CZo5EjJE5iK5wd/N4mnrFAPKwDWwC0qzTTw.

ECDSA key fingerprint is MD5:1f:08:e7:24:63:3b:f4:c6:bb:f5:b4:17:c7:dc:28:2b.

Are you sure you want to continue connecting (yes/no)? yes

0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.

root@0.0.0.0's password:

0.0.0.0: starting secondarynamenode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-secondarynamenode-node1.out

While HDFS is starting, type "yes" when asked to confirm the host key, then enter the root password when prompted.

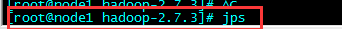
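The password prompts in the startup output suggest that key-based SSH login to the local machine is not configured. The following is a minimal sketch of one common way to set it up, assuming OpenSSH's ssh-keygen is installed and the default ~/.ssh layout is used; the helper name setup_local_ssh_key is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: create a passphrase-less RSA key pair and authorize it for
# logins to this same account. setup_local_ssh_key is a hypothetical helper.
setup_local_ssh_key() {
  # $1: SSH directory to use; defaults to ~/.ssh.
  local ssh_dir="${1:-$HOME/.ssh}"
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"

  # Generate a key only if none exists, so an existing key is never overwritten.
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    ssh-keygen -q -t rsa -N '' -f "$ssh_dir/id_rsa"
  fi

  # Append the public key unless that exact line is already authorized.
  if ! grep -qxF "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys" 2>/dev/null; then
    cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  fi
  chmod 600 "$ssh_dir/authorized_keys"
}
```

Calling setup_local_ssh_key with no argument targets ~/.ssh. Afterwards, the start scripts should no longer ask for a password, although the first connection to a new host name still asks to confirm the host key.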

Use the jps command to list the running Java processes.

[root@node1 hadoop-2.7.3]# jps

1446 NameNode

1831 Jps

1722 SecondaryNameNode

1564 DataNode

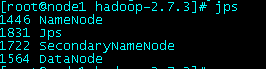

jps (Java Virtual Machine Process Status Tool) is a command that has shipped with the JDK since version 1.5. It lists the PIDs of running Java processes, which makes it a quick way to check on Java processes on Linux/Unix.

jps -l prints the fully qualified name of each application's main class, or the full path of the application's JAR file.

[root@node1 hadoop-2.7.3]# jps -l

1446 org.apache.hadoop.hdfs.server.namenode.NameNode

1722 org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode

1564 org.apache.hadoop.hdfs.server.datanode.DataNode

1870 sun.tools.jps.Jps

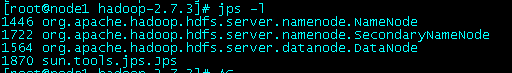

Starting YARN

Run the sbin/start-yarn.sh command to start YARN.

[root@node1 hadoop-2.7.3]# sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop-2.7.3/logs/yarn-root-resourcemanager-node1.out

root@node1's password:

node1: starting nodemanager, logging to /opt/hadoop-2.7.3/logs/yarn-root-nodemanager-node1.out

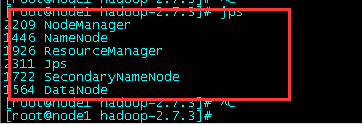

Then use jps to check the YARN processes.

[root@node1 hadoop-2.7.3]# jps

2209 NodeManager

1446 NameNode

1926 ResourceManager

2311 Jps

1722 SecondaryNameNode

1564 DataNode

[root@node1 hadoop-2.7.3]#

Two new processes have appeared: ResourceManager and NodeManager.

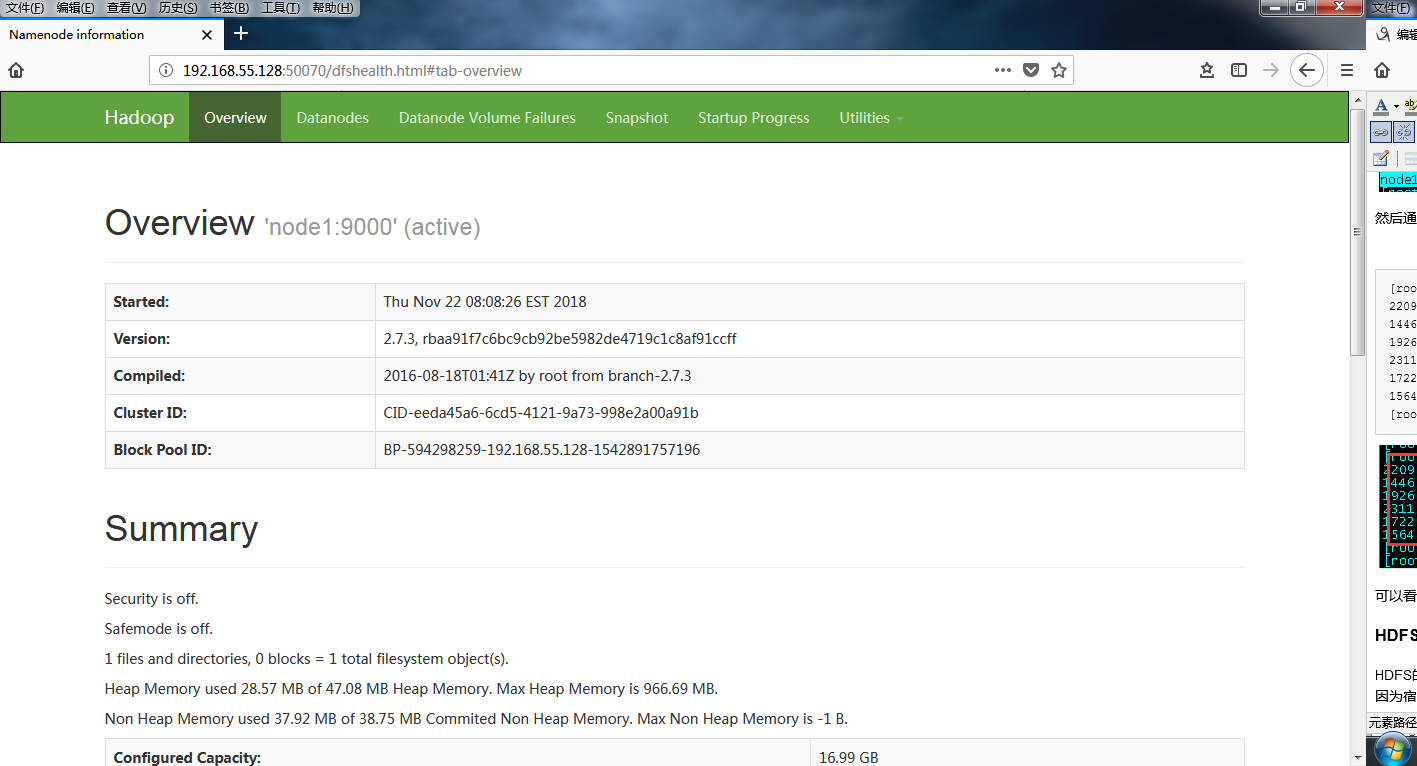
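Scanning jps output by eye works for a single node, but the same check can be scripted. The following is a minimal sketch that reads jps output on standard input and reports which expected daemons are missing; the helper name missing_daemons is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: report which expected daemons are absent from jps output.
# missing_daemons is a hypothetical helper name.
# Usage: jps | missing_daemons NameNode DataNode ...
missing_daemons() {
  local jps_output name missing=""
  jps_output="$(cat)"   # read all of stdin once so it can be scanned per name

  for name in "$@"; do
    # jps prints "<pid> <name>". Comparing the whole second field keeps
    # "NameNode" from being satisfied by "SecondaryNameNode".
    if ! printf '%s\n' "$jps_output" |
        awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      missing="$missing $name"
    fi
  done

  # Print the missing names (empty line if none); succeed only if none are missing.
  printf '%s\n' "${missing# }"
  [ -z "$missing" ]
}
```

After both start scripts have run, `jps | missing_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager` should print an empty line and return success if all five daemons are up.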

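HDFS Web UI

The default port of the HDFS web UI is 50070 in Hadoop 2.x, the version used here.

Because the hosts file on the Windows host machine has no entry for the virtual machine, the HDFS web UI has to be accessed by IP address. Open http://192.168.55.128:50070 in a browser.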

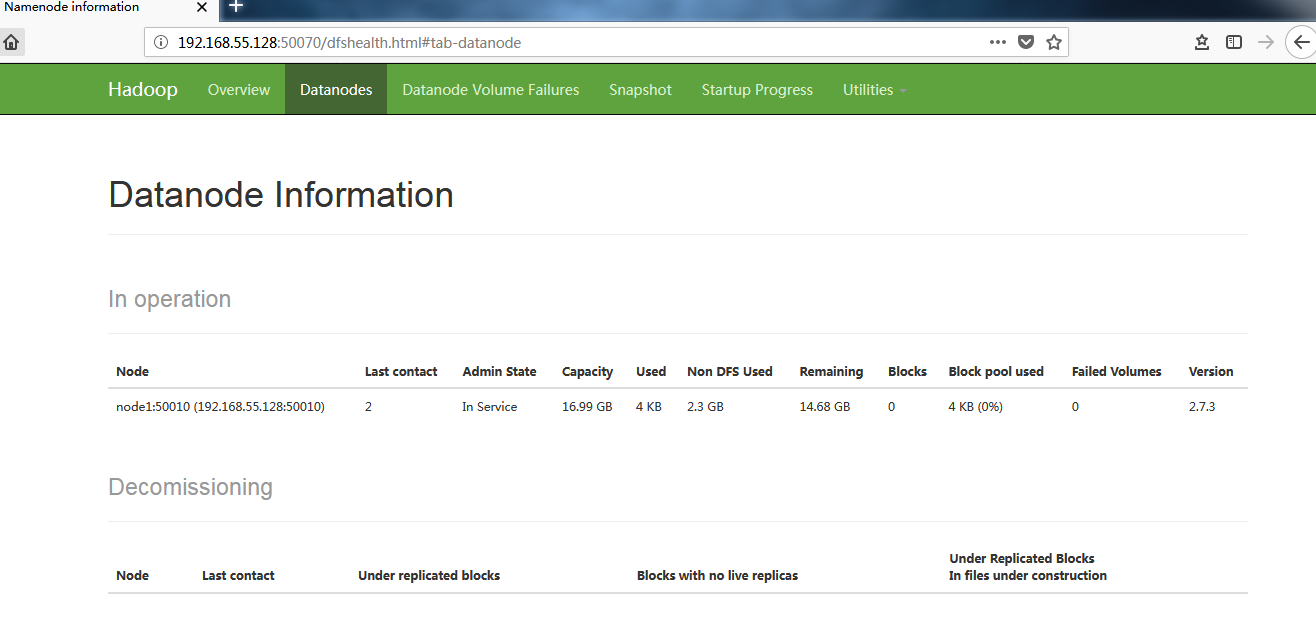

Click "Datanodes" in the navigation bar at the top of the page.

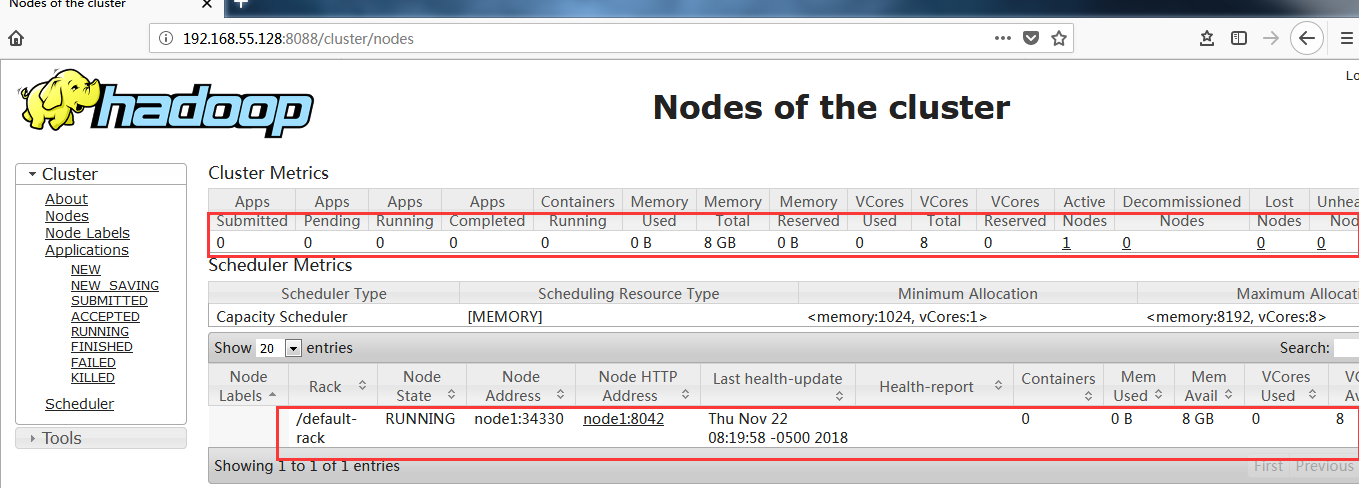

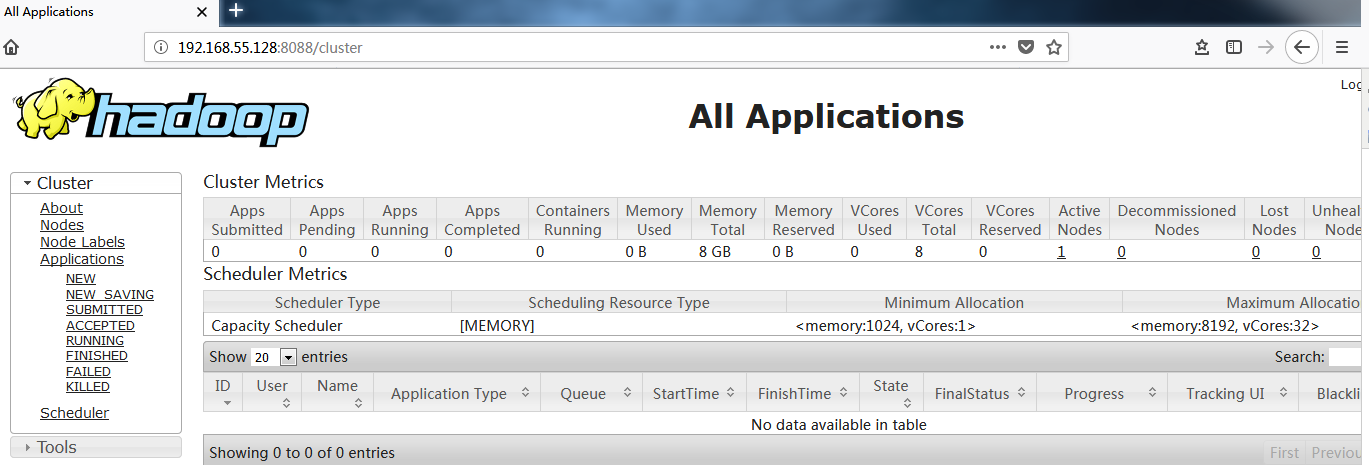

YARN Web UI

The default port of the YARN web UI is 8088.

http://192.168.55.128:8088

Click "Nodes" in the left-hand menu to view NodeManager information.
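If a browser is not at hand, both web UIs can be probed from a terminal. The following is a minimal sketch, assuming curl is installed and the machine running it can reach the virtual machine's IP address; the helper name ui_reachable is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check that a web UI answers over HTTP.
# ui_reachable is a hypothetical helper name.
ui_reachable() {
  local url="$1" code
  # -s: no progress output; -o /dev/null: discard the response body;
  # -w '%{http_code}': print only the HTTP status code;
  # --max-time 5: give up after five seconds instead of hanging.
  code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")" || return 1

  # Treat any 2xx or 3xx status as reachable, since the root URL may redirect.
  case "$code" in
    2??|3??) return 0 ;;
    *)       return 1 ;;
  esac
}

# Example usage with the addresses from this guide:
#   ui_reachable http://192.168.55.128:50070 && echo "HDFS UI is up"
#   ui_reachable http://192.168.55.128:8088  && echo "YARN UI is up"
```

A failure here does not necessarily mean the daemon is down; a firewall on the virtual machine can also block these ports.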