背景

有两张表,都是主键递增,类似于主表和明细表:

statistics_apply:统计申请表,主键applyId,7万多条记录statistics_apply_progress:统计申请进度表(申请统计的状态变更记录表),主键progressId,字段applyId保存的是上表的主键,30多万条记录

现在我们需要通过多个applyId查询对应的最新的progress记录。

当前数据库版本:5.6.16-log

原SQL

SELECT p2.APPLY_ID, p2.TASK_MESSAGE

FROM statistics_apply_progress AS p2

WHERE p2.progress_id IN (

SELECT max( p1.PROGRESS_ID ) AS PROGRESS_ID

FROM statistics_apply_progress AS p1

WHERE p1.APPLY_ID IN (

39574,49304,57423,8830,20416,29298,41672,52163,

62564,10850,20985,30982,46701,57364,3414,16753,

21808,46315,33520,47612,50974,61741,16210,19503,

28713,38700,48318,56743,20868,20870,38094,20872,

20805,8165,20525,29426,12345,75321,95325,12784 )

GROUP BY p1.APPLY_ID

)

ORDER BY p2.APPLY_ID;

最初的想法是通过对progress表的applyId字段做筛选,然后通过applyId分组,由于主键都是自增的,选取分组后最大的progressId,然后把查询出的所有progressIds当作子查询的结果再在progress表用IN来筛选,最后拿到我们需要的applyId对应的最新的progress记录中的task_messsage字段信息。

开始觉得这能有啥问题,太顺理成章了,在测试环境数据量太小也看不出什么端倪,于是提交、上线~

发现问题

发布到生产之后,一开始没太处理这块,过段时间后在SkyWalking上看到有个查询接口之前一直稳定在200ms内返回,最近都上升到800ms了,加上其他接口页面响应都迟钝了一小会。

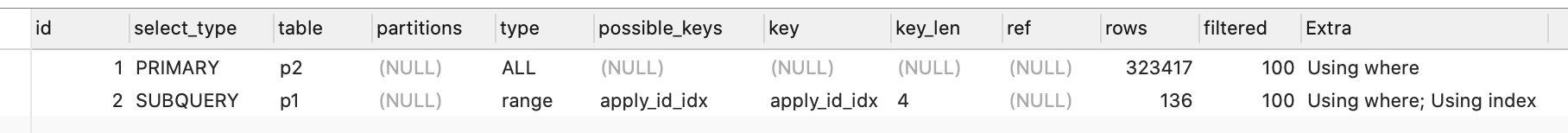

于是把该接口的所有SQL都过了一遍,看下它们的执行计划,才看到这个看上去老实但却不按套路出牌的SQL:

看到type那一栏了吗?那居然是all,天呐,全表扫描就不用看了,赶快优化吧。

但是觉得这个太反人类了,progress的progressId和applyId都是有索引的,分组,排序也都是走索引列,但这结果和想象差距太远了。

此外,子查询的select_type是SUBQUERY,又不是DEPENDENT SUBQUERY,都不需要依赖外部传入的参数去查询,为什么还全表扫呢?

解决问题

造成全表扫描的原因有哪些

查找官方文档,看到Avoiding Full Table Scans说以下情况经常会有全表扫描:

- The table is so small that it is faster to perform a table scan than to bother with a key lookup. This is common for tables with fewer than 10 rows and a short row length.(不符合当前查询场景)

- There are no usable restrictions in the ON or WHERE clause for indexed columns.(不符合当前查询场景)

- You are comparing indexed columns with constant values and MySQL has calculated (based on the index tree) that the constants cover too large a part of the table and that a table scan would be faster. See Section 8.2.1.1, “WHERE Clause Optimization”.(不符合当前查询场景)

- You are using a key with low cardinality (many rows match the key value) through another column. In this case, MySQL assumes that by using the key it probably will do many key lookups and that a table scan would be faster.(不符合当前查询场景)

到这我就很尴尬了,都不是我这种场景啊。那我们继续看看子查询有哪些限制。

子查询的限制

官方文档:SubQuery Restriction

-

不能在子查询中查询了一张表还在外部更新它:

In general, you cannot modify a table and select from the same table in a subquery. For example, this limitation applies to statements of the following forms:

DELETE FROM t WHERE ... (SELECT ... FROM t ...);

UPDATE t ... WHERE col = (SELECT ... FROM t ...);

{INSERT|REPLACE} INTO t (SELECT ... FROM t ...);但我的是查询语句,并不满足描述中关于

modify a table的说明,这应该不是我问题的本质。 -

行比较操作部分支持

我们使用的是很普通的IN操作,不属于此类。 -

子查询不能是相关子查询

很明显我这里的查询不存在内外依赖关系。关于这个相关子查询我要另写文章整理。 -

子查询不能用limit

我也没有使用 -

最后一个限制和我的场景相去甚远我就不描述了

说实在的,这个问题优化起来还是很方便的,现在是要解释很难。最后Google了很久,在mysql bugs里面发现了和我使用场景一模一样的描述,太搞人了!

Design problem

官方回复问题MAX() causes a full table scan when used in a sub-query

这么重要的原话我得贴出来:

This is expected behavior. If you explain both EXPLAINs you shall see that query node as a nested query and standalone query have 100 % identical execution plan.

Your problem is that highest level query gets a full table scan. That is actually a cause of the design problem that was introduced when nested queries were developed, which affects a large number of queries that use nested queries. There are plans to solve this problem, but scheduling is yet unknown.

The only thing that you can try is to have an index on the column xxx and to avoid nested query by using prepared statements or stored function that will create a string with values in brackets returned by your inner query as standalone one.

看到了吧,有这个问题干嘛不在SubQuery Restriction中说明一下,让我一顿好找!

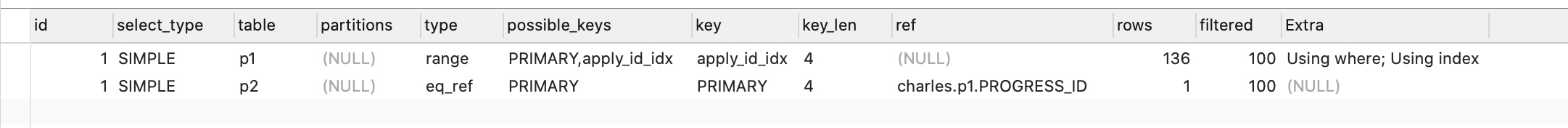

后面我把max语句删除掉之后重新看了下执行计划:

SELECT p2.APPLY_ID, p2.TASK_MESSAGE

FROM statistics_apply_progress AS p2

WHERE p2.progress_id IN (

SELECT PROGRESS_ID

FROM statistics_apply_progress AS p1

WHERE p1.APPLY_ID IN (

39574,49304,57423,8830,20416,29298,41672,52163,

62564,10850,20985,30982,46701,57364,3414,16753,

21808,46315,33520,47612,50974,61741,16210,19503,

28713,38700,48318,56743,20868,20870,38094,20872,

20805,8165,20525,29426,12345,75321,95325,12784 )

);

这样的语句看起来很傻,只是为了验证子查询来看下。

找到问题之后我前后在mysql5.6.47和mysql5.7.28中都试了一下,仍然存在。 大家以后注意一下吧。

修改SQL

既然找到问题了,就转换思路了,同样在MySQL官方文档里能找到Rewriting Subqueries as Joins

文中有这么一段话

A LEFT [OUTER] JOIN can be faster than an equivalent subquery because the server might be able to optimize it better

那怎么就试试吧,修改后的SQL如下:

SELECT p2.APPLY_ID, p2.TASK_MESSAGE

FROM (

SELECT max( p1.PROGRESS_ID ) AS PROGRESS_ID

FROM statistics_apply_progress as p1

WHERE p1.APPLY_ID IN (

39574,49304,57423,8830,20416,29298,41672,52163,

62564,10850,20985,30982,46701,57364,3414,16753,

21808,46315,33520,47612,50974,61741,16210,19503,

28713,38700,48318,56743,20868,20870,38094,20872,

20805,8165,20525,29426,12345,75321,95325,12784 )

GROUP BY p1.APPLY_ID

) AS p3

LEFT JOIN statistics_apply_progress as p2 ON p3.PROGRESS_ID = p2.PROGRESS_ID

ORDER BY p2.APPLY_ID;

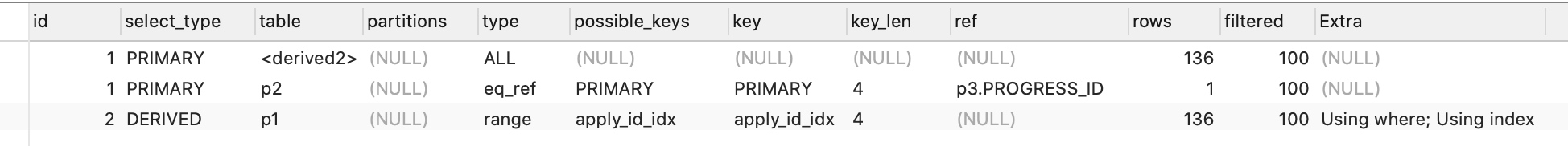

查看一下执行计划:

已经没有full scan了,第一行的PRIMARY/ALL表示查询中若包含任何复杂的子部分,最外层的select被标记为PRIMARY。主要还是看2、3两行,都走索引,结果查不到哪去了。

性能对比

不同机器配置不同查询时间也不同

- 修改前的查询时间固定在800ms左右

- 修改前的查询时间固定在2ms左右

但是知道原因后这么比较已经没多大意义了,全表扫描和走索引,哈哈哈,就这么吧,结束。