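Filtering with Filter: an HBase Scan can be given a filter through its setFilter method, and this is the foundation for paging and multi-condition queries. HBase provides a set of filters that can select data along several dimensions (row, column, and version). Several filters can be combined, and HBase ships more than ten filter types. filterList.addFilter(scvf) adds one more query condition to a FilterList, and the list is then handed to the Scan (not the scanner) with setFilter.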
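To make the combination rules concrete, here is a small in-memory model in plain Java with no HBase dependency. It is only a sketch of the documented semantics, not the HBase API: the class FilterModel, its methods, and the sample rows are hypothetical. columnEquals mimics a SingleColumnValueFilter with an EQUAL comparison, including the setFilterIfMissing flag (by default, a row that lacks the tested column is kept). mustPassAll and mustPassOne mimic FilterList.Operator.MUST_PASS_ALL (AND) and MUST_PASS_ONE (OR). Columns are written as "family:qualifier", so "Name:" stands for family Name with an empty qualifier, which is what the null qualifier in this article's source code refers to.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Predicate;

/**
 * Toy in-memory model of how SingleColumnValueFilter conditions combine in a
 * FilterList. Hypothetical names; this is NOT the HBase client API.
 * A row maps "family:qualifier" to a string value.
 */
public class FilterModel {

    /** Models SingleColumnValueFilter with an EQUAL comparison. */
    static Predicate<Map<String, String>> columnEquals(
            String column, String expected, boolean filterIfMissing) {
        return row -> {
            String actual = row.get(column);
            if (actual == null) {
                // Missing column: the row is kept unless filterIfMissing is true.
                return !filterIfMissing;
            }
            return actual.equals(expected);
        };
    }

    /** Models FilterList.Operator.MUST_PASS_ALL (logical AND). */
    static Predicate<Map<String, String>> mustPassAll(
            List<Predicate<Map<String, String>>> filters) {
        return row -> filters.stream().allMatch(f -> f.test(row));
    }

    /** Models FilterList.Operator.MUST_PASS_ONE (logical OR). */
    static Predicate<Map<String, String>> mustPassOne(
            List<Predicate<Map<String, String>>> filters) {
        return row -> filters.stream().anyMatch(f -> f.test(row));
    }

    /** Returns matching row keys in sorted order, as a scan would. */
    static List<String> scan(Map<String, Map<String, String>> table,
                             Predicate<Map<String, String>> filter) {
        List<String> hits = new ArrayList<>();
        for (Map.Entry<String, Map<String, String>> e : table.entrySet()) {
            if (filter.test(e.getValue())) {
                hits.add(e.getKey());
            }
        }
        return hits;
    }

    /** Hypothetical sample data; a TreeMap keeps row keys sorted like HBase. */
    static Map<String, Map<String, String>> sampleTable() {
        Map<String, Map<String, String>> t = new TreeMap<>();
        t.put("row1", Map.of("Name:", "lddddd", "Score:", "90"));
        t.put("row2", Map.of("Name:", "lddddd", "Score:", "80"));
        t.put("row3", Map.of("Name:", "other"));   // no Score column
        t.put("row4", Map.of("Score:", "90"));     // no Name column
        return t;
    }

    /** The article's query: Name == "lddddd" AND Score == "90". */
    static List<String> nameAndScore(boolean filterIfMissing) {
        return scan(sampleTable(), mustPassAll(List.of(
                columnEquals("Name:", "lddddd", filterIfMissing),
                columnEquals("Score:", "90", filterIfMissing))));
    }

    /** The same two conditions combined with OR. */
    static List<String> nameOrScore(boolean filterIfMissing) {
        return scan(sampleTable(), mustPassOne(List.of(
                columnEquals("Name:", "lddddd", filterIfMissing),
                columnEquals("Score:", "90", filterIfMissing))));
    }

    public static void main(String[] args) {
        System.out.println("AND, default:         " + nameAndScore(false)); // [row1, row4]
        System.out.println("AND, filterIfMissing: " + nameAndScore(true));  // [row1]
        System.out.println("OR,  filterIfMissing: " + nameOrScore(true));   // [row1, row2, row4]
    }
}
```

In this model the AND query with default settings also returns row4, even though row4 has no Name column at all. Turning on filterIfMissing narrows the result to row1, which is the behavior you want when only rows that actually contain both columns should match.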

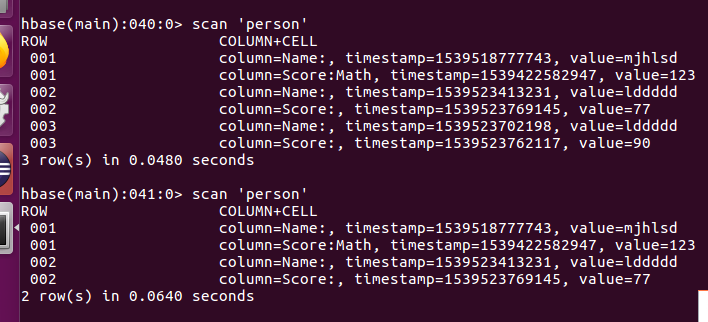

Here is the full source code (after the multi-condition query, the matched row(s) are deleted by row key):

```java
package cn.edu.zucc.hbase;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.filter.CompareFilter.CompareOp;
import org.apache.hadoop.hbase.filter.Filter;
import org.apache.hadoop.hbase.filter.FilterList;
import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;
import org.apache.hadoop.hbase.util.Bytes;

public class test {
    public static Configuration configuration;
    public static Connection connection;
    public static Admin admin;

    // Scan with two AND-ed conditions (Name == "lddddd" and Score == "90"),
    // print every matching row, then delete those rows.
    public static void QueryByCondition3(String tableName) {
        init();
        try (Table table = connection.getTable(TableName.valueOf(tableName))) {
            List<Filter> filters = new ArrayList<Filter>();
            // "Name" and "Score" are column families; the null qualifier matches
            // the cell with an empty qualifier. (CompareOp is deprecated in
            // HBase 2.x in favor of CompareOperator.)
            SingleColumnValueFilter filter1 = new SingleColumnValueFilter(Bytes.toBytes("Name"), null,
                    CompareOp.EQUAL, Bytes.toBytes("lddddd"));
            // Without this, rows that lack the column would also pass the filter.
            filter1.setFilterIfMissing(true);
            filters.add(filter1);
            SingleColumnValueFilter filter2 = new SingleColumnValueFilter(Bytes.toBytes("Score"), null,
                    CompareOp.EQUAL, Bytes.toBytes("90"));
            filter2.setFilterIfMissing(true);
            filters.add(filter2);
            /* A third condition can be added the same way:
            SingleColumnValueFilter filter3 = new SingleColumnValueFilter(Bytes.toBytes("column3"), null,
                    CompareOp.EQUAL, Bytes.toBytes("ccc"));
            filters.add(filter3); */

            // MUST_PASS_ALL (also the default) means every filter must match.
            FilterList filterList1 = new FilterList(FilterList.Operator.MUST_PASS_ALL, filters);
            Scan scan = new Scan();
            scan.setFilter(filterList1);

            List<Delete> deletes = new ArrayList<Delete>();
            try (ResultScanner rs = table.getScanner(scan)) {
                for (Result r : rs) {
                    System.out.println("Got rowkey: " + Bytes.toString(r.getRow()));
                    for (Cell cell : r.rawCells()) {
                        System.out.println("Column: " + Bytes.toString(CellUtil.cloneFamily(cell))
                                + Bytes.toString(CellUtil.cloneQualifier(cell))
                                + " ==== value: " + Bytes.toString(CellUtil.cloneValue(cell)));
                    }
                    // Delete the whole row. To delete only part of it instead:
                    // delete the given column family:
                    // delete.addFamily(Bytes.toBytes(colFamily));
                    // delete the given column:
                    // delete.addColumn(Bytes.toBytes(colFamily), Bytes.toBytes(col));
                    deletes.add(new Delete(r.getRow()));
                }
            }

            // A Delete with an empty row key is rejected, so skip when nothing matched.
            if (deletes.isEmpty()) {
                System.out.println("No matching rows, nothing deleted.");
            } else {
                int count = deletes.size(); // table.delete may empty the list
                table.delete(deletes);
                System.out.println("Deleted " + count + " row(s) successfully!");
            }
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            close(); // the table and scanner are already closed at this point
        }
    }

    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public static void close() {
        try {
            if (admin != null) {
                admin.close();
            }
            if (connection != null) {
                connection.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public static void main(String[] args) {
        try {
            QueryByCondition3("person");
            System.out.println("over111");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

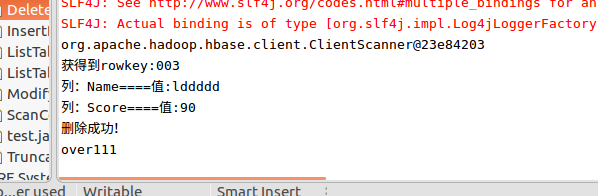

Result: