Deploying k8s

1. Disable swap on all cluster hosts

sudo swapoff -a

sudo sed -i 's/.*swap.*/#&/' /etc/fstab

If swap is still mounted automatically after a reboot, run sudo systemctl mask dev-sda3.swap (replace dev-sda3 with your swap partition, dev-sdaX).
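The sed command above comments out every fstab line that contains "swap" anywhere, so an already commented line gets a second "#" and a line that only mentions swap in a path is also disabled. A narrower sketch, assuming the standard fstab layout (device, mount point, type, ...), comments out only active entries whose type field is swap. The function name comment_swap_entries is hypothetical.

```shell
# Hypothetical helper: print an fstab file with active swap entries commented out.
# Only lines whose third field (filesystem type) is "swap" are changed;
# lines that are already commented are printed unchanged.
comment_swap_entries() {
  awk '
    /^[[:space:]]*#/ { print; next }          # keep existing comments as they are
    $3 == "swap"     { print "#" $0; next }   # comment out active swap entries
                     { print }                # everything else unchanged
  ' "$1"
}
```

It prints to stdout rather than editing in place, so the result can be reviewed first, e.g. comment_swap_entries /etc/fstab | sudo tee /etc/fstab.new, then moved over /etc/fstab once it looks right.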

2. Install Docker on all servers in the cluster

sudo apt-get update

sudo apt-get -y install apt-transport-https ca-certificates curl gnupg-agent software-properties-common

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

sudo apt-get update

sudo apt-get install -y docker-ce docker-ce-cli containerd.io

3. Install kubectl, kubelet, and kubeadm on all cluster nodes

Add the Aliyun Kubernetes apt repository

curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

Edit the apt sources list:

sudo vim /etc/apt/sources.list

and append the following line:

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
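Editing the sources list in vim is interactive and easy to repeat by accident when the steps are re-run on another node. A non-interactive sketch, assuming a dedicated list file such as /etc/apt/sources.list.d/kubernetes.list (the file name is an assumption; apt reads any *.list file in that directory), appends the repository line only if it is not already present. add_line_once is a hypothetical helper.

```shell
# Repository line from step 3 (Aliyun mirror of the Kubernetes apt repo).
K8S_APT_LINE="deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main"

# Hypothetical helper: append a line to a file only if that exact line is not
# already there, so repeating the step does not create duplicate apt entries.
add_line_once() {
  local line=$1 file=$2
  touch "$file" || return 1
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

In a provisioning script that already runs as root, this would be add_line_once "$K8S_APT_LINE" /etc/apt/sources.list.d/kubernetes.list followed by apt-get update.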

sudo apt-get update

sudo apt-get -y install kubectl kubelet kubeadm

sudo apt-mark hold kubelet kubeadm kubectl

Change Docker's cgroup driver to systemd, so that Docker and the kubelet use the same cgroup driver

cat <<EOF | sudo tee /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

sudo systemctl restart docker.service

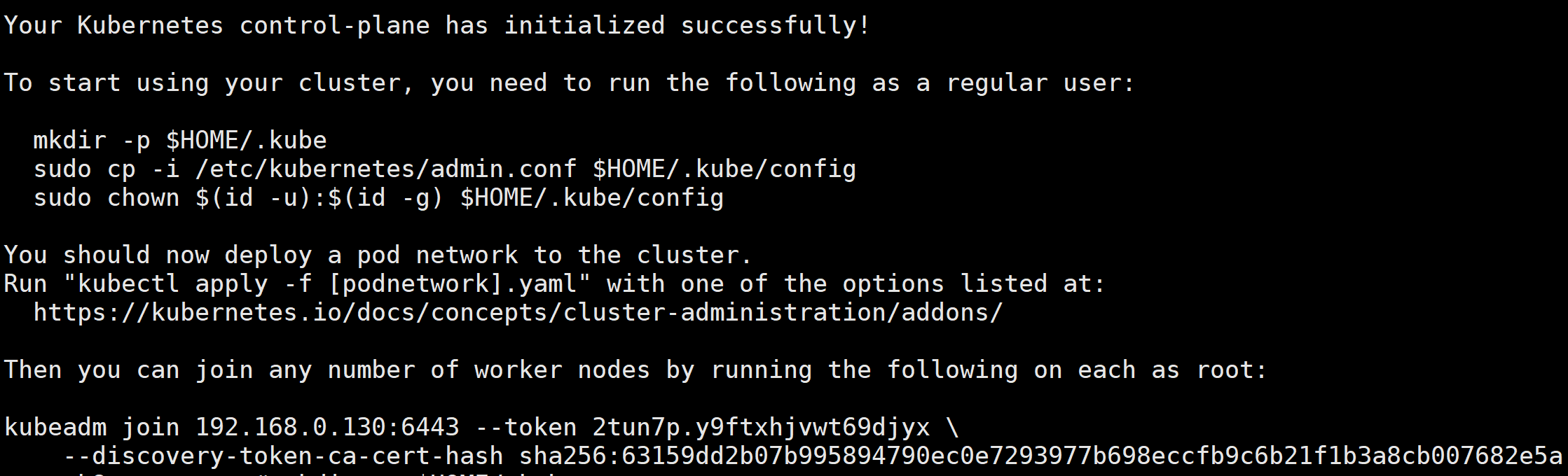
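The tee command above replaces the whole daemon.json, which silently drops any settings already in it (registry mirrors, for example). A cautious sketch that writes the cgroup-driver setting only when the target file is missing or empty, and otherwise asks for a manual merge; write_daemon_json is a hypothetical name.

```shell
# Hypothetical helper: write the systemd cgroup-driver setting to daemon.json,
# but refuse to clobber an existing non-empty file that may hold other settings.
write_daemon_json() {
  local path=${1:-/etc/docker/daemon.json}
  if [ -s "$path" ]; then
    echo "refusing to overwrite non-empty $path; merge exec-opts by hand" >&2
    return 1
  fi
  cat > "$path" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After running it as root and restarting Docker, docker info --format '{{.CgroupDriver}}' should print systemd.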

4. Initialize the master node

sudo kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.18.2 --pod-network-cidr=10.244.0.0/16

Then set up kubectl access for the current (non-root) user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

The end of the kubeadm init output lists the next steps (the token and hash below belong to this cluster; yours will differ):

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/
Then you can join any number of worker nodes by running the following on each as root:
kubeadm join 192.168.0.130:6443 --token 2tun7p.y9ftxhjvwt69djyx \
    --discovery-token-ca-cert-hash sha256:63159dd2b07b995894790ec0e7293977b698eccfb9c6b21f1b3a8cb007682e5a

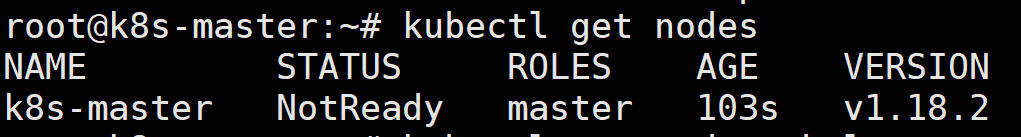

kubectl get nodes

To make kubectl easier to use, enable command auto-completion (this relies on the bash-completion package):

echo "source <(kubectl completion bash)" >> ~/.bashrc

source ~/.bashrc

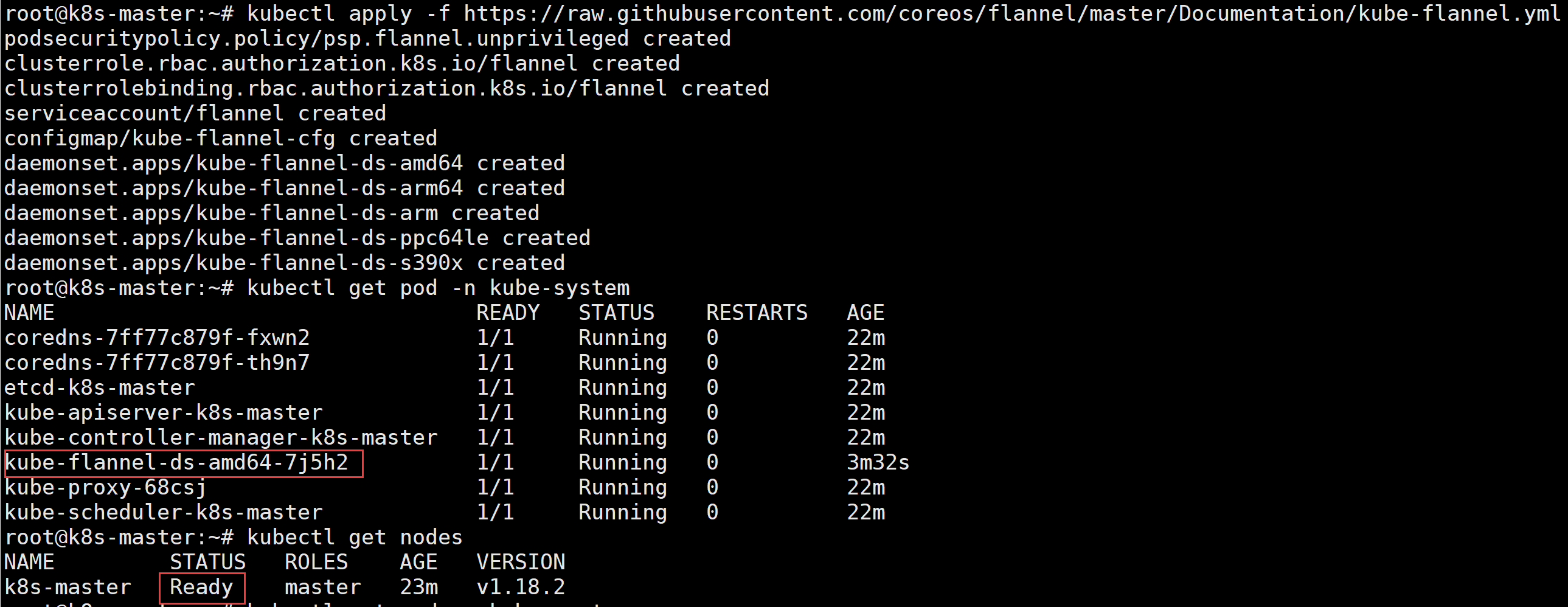

5. Install the flannel network on the master node

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

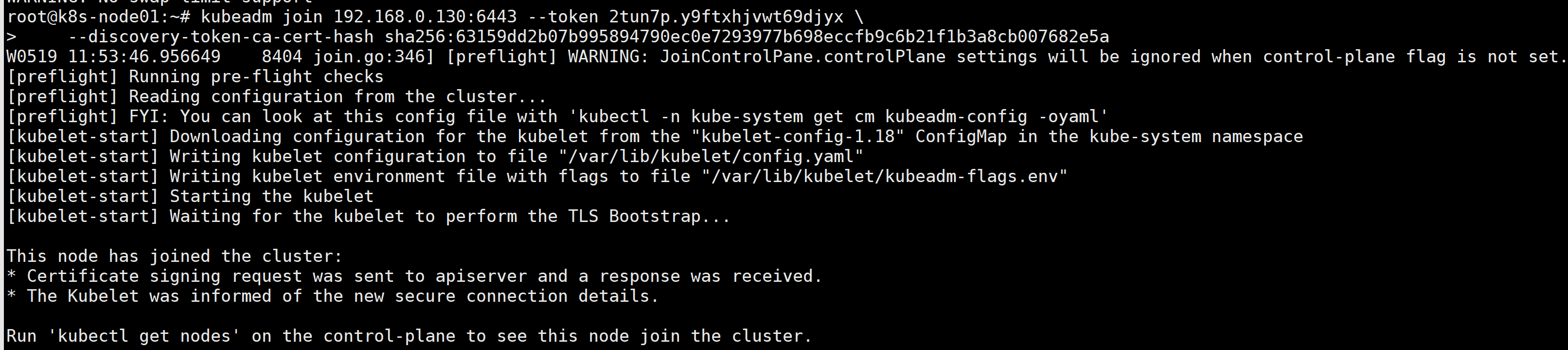
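The --pod-network-cidr=10.244.0.0/16 passed to kubeadm init in step 4 matches the default "Network" value in flannel's kube-flannel.yml; if either side is changed, the two must still agree. A small sketch that reads the value out of a downloaded manifest so it can be compared before applying; flannel_network is a hypothetical helper and assumes the "Network": "..." entry sits on one line, as in the stock manifest.

```shell
# Hypothetical helper: print the "Network" value from a kube-flannel.yml
# (found in the net-conf.json entry of its ConfigMap).
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}
```

Usage: download the manifest with curl -fsSL <url above> -o kube-flannel.yml, then check that flannel_network kube-flannel.yml prints 10.244.0.0/16 before running kubectl apply -f kube-flannel.yml.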

6. Join the worker nodes

On each worker node, run the join command printed by kubeadm init, as root:

sudo kubeadm join 192.168.0.130:6443 --token 2tun7p.y9ftxhjvwt69djyx --discovery-token-ca-cert-hash sha256:63159dd2b07b995894790ec0e7293977b698eccfb9c6b21f1b3a8cb007682e5a

If the join fails, check the kubelet logs with journalctl -xeu kubelet

By default a token is valid for 24 hours. If the token has expired, generate a new one with:

# kubeadm token create
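Bootstrap tokens have a fixed shape: six lowercase letters or digits, a dot, then sixteen more. A tiny sketch for sanity-checking a token before pasting it into a join command (is_bootstrap_token is a hypothetical name). Alternatively, kubeadm token create --print-join-command prints a complete join command with a fresh token and the CA hash already filled in.

```shell
# Hypothetical helper: succeed if the argument looks like a bootstrap token,
# i.e. [a-z0-9]{6}.[a-z0-9]{16}.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}
```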

If you also no longer have the --discovery-token-ca-cert-hash value, compute it with:

# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
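The pipeline above hashes the DER-encoded public key of the cluster CA with SHA-256. Wrapped as functions, it can be pointed at any CA file and combined with a token into a full join command. This is a sketch: ca_cert_hash and print_join_command are hypothetical names, and the openssl rsa step assumes an RSA CA key, which is what kubeadm generates by default.

```shell
# Compute the --discovery-token-ca-cert-hash value (without the "sha256:" prefix)
# from a CA certificate: SHA-256 over the DER-encoded public key.
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Hypothetical helper: assemble a join command from endpoint, token and CA file.
print_join_command() {
  echo "kubeadm join $1 --token $2 --discovery-token-ca-cert-hash sha256:$(ca_cert_hash "$3")"
}
```

On the master this would be, for example, print_join_command 192.168.0.130:6443 <new-token> /etc/kubernetes/pki/ca.crt.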

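kubeadm reset reverts the changes made by kubeadm init or kubeadm join. You also need to delete the .kube directory in your home directory to fully return to the state before initialization.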

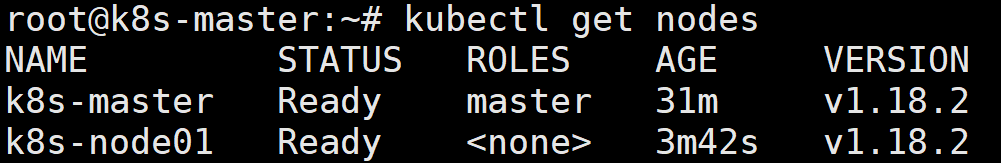

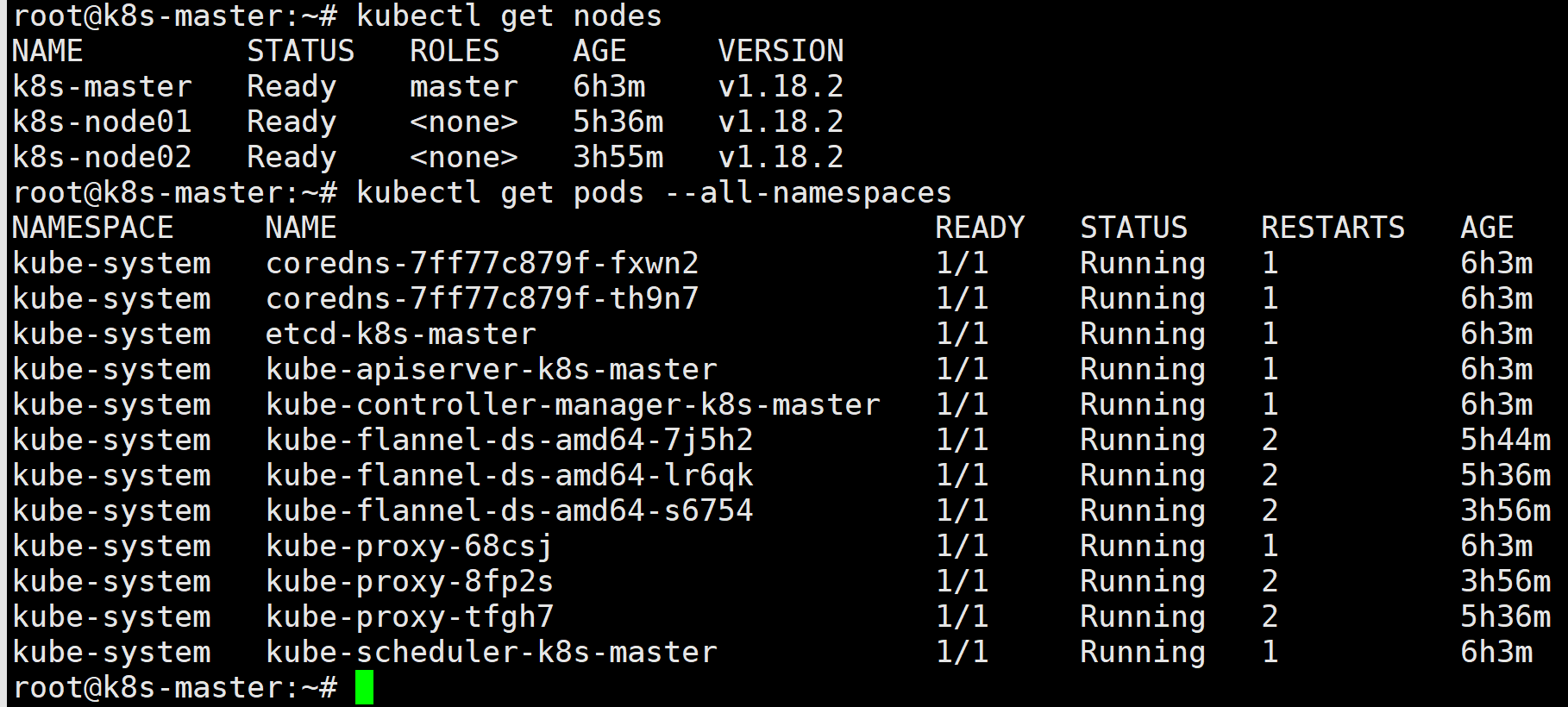

On the master, list the nodes:

kubectl get nodes

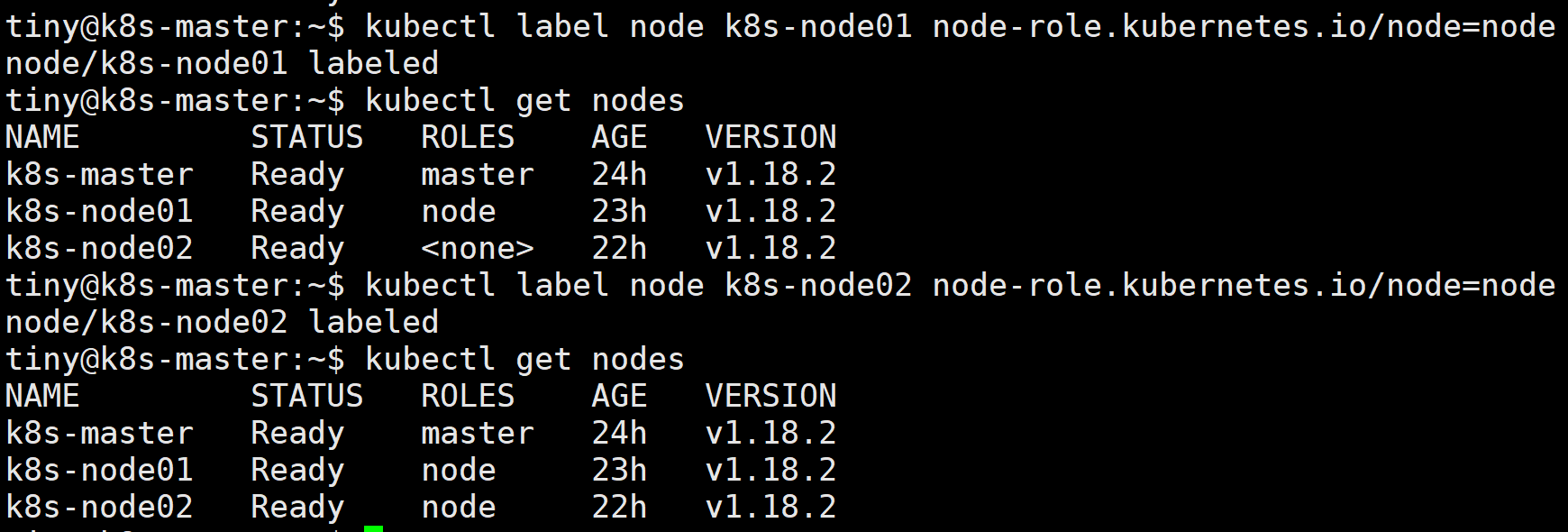

Use a label to set the node's role:

kubectl label node k8s-node01 node-role.kubernetes.io/node=node

Installing the Dashboard

1. On the master, download and edit the Dashboard manifest

cd ~

mkdir Dashboard

cd Dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.1/aio/deploy/recommended.yaml

vim recommended.yaml

-----

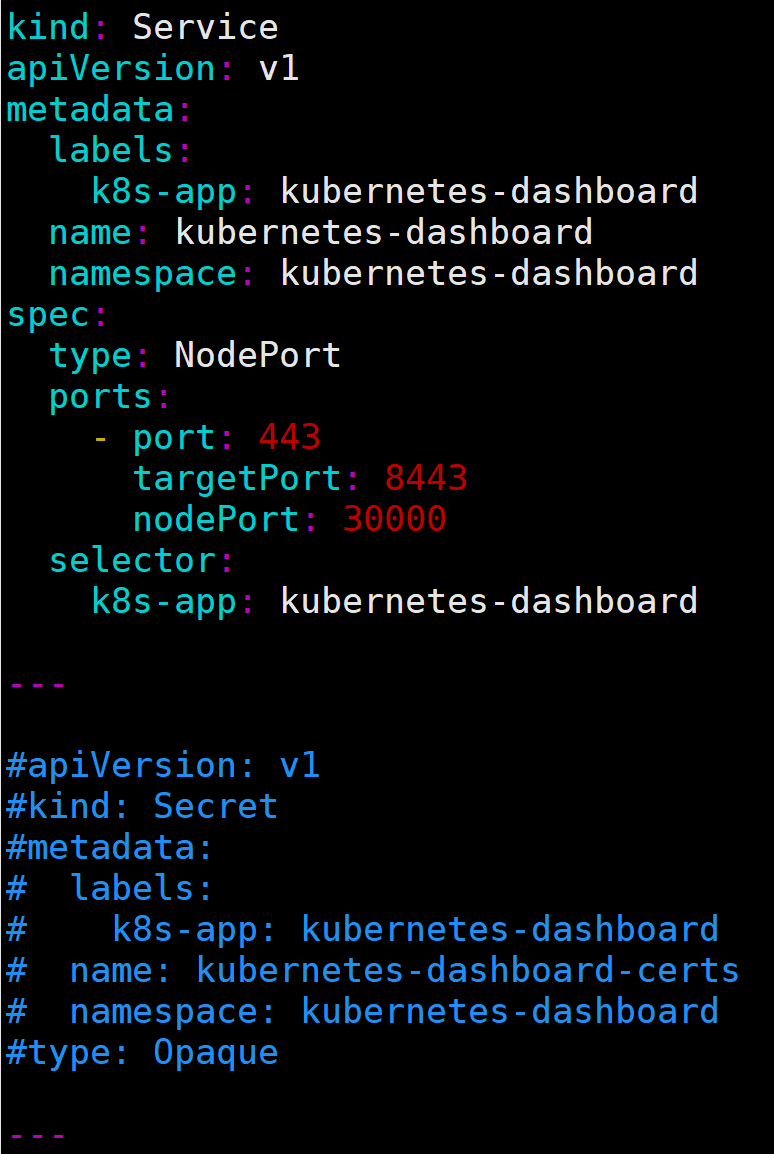

Expose the Dashboard on a fixed node port by editing the kubernetes-dashboard Service:

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort       # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30000  # added
  selector:
    k8s-app: kubernetes-dashboard
---
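A nodePort is only accepted if it lies inside the API server's --service-node-port-range, which defaults to 30000-32767; 30000 is the lowest port in that default range. A sketch of a check for a chosen port, assuming the default range unless other bounds are passed (valid_nodeport is a hypothetical name).

```shell
# Hypothetical helper: check a nodePort against a port range, defaulting to
# the API server's default --service-node-port-range of 30000-32767.
valid_nodeport() {
  local port=$1 min=${2:-30000} max=${3:-32767}
  case $port in ''|*[!0-9]*) return 1 ;; esac   # digits only
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}
```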

Many browsers reject the automatically generated certificate, so we create our own instead. Comment out the kubernetes-dashboard-certs Secret declaration:

#apiVersion: v1
#kind: Secret
#metadata:
#  labels:
#    k8s-app: kubernetes-dashboard
#  name: kubernetes-dashboard-certs
#  namespace: kubernetes-dashboard
#type: Opaque

2. Create the certificate

mkdir dashboard-certs

cd dashboard-certs

Create the namespace

kubectl create namespace kubernetes-dashboard

Create the private key

openssl genrsa -out dashboard.key 2048

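Create the certificate signing request (CSR)

On Ubuntu 18.04, openssl req can fail with "Can't load ... .rnd into RNG" when ~/.rnd does not exist; create the file first:

openssl rand -writerand $HOME/.rnd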

openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=dashboard-cert'

Self-sign the certificate

openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt

Create the kubernetes-dashboard-certs Secret

kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard
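Without a -days option, openssl x509 -req issues a certificate that is valid for only 30 days, after which browsers reject the Dashboard again. A sketch that performs the key, CSR and self-signing steps above in one directory with an explicit lifetime; the function name make_dashboard_cert and the 365-day default are assumptions.

```shell
# Hypothetical helper: create dashboard.key, dashboard.csr and a self-signed
# dashboard.crt in one directory, valid for the given number of days.
make_dashboard_cert() {
  local dir=$1 days=${2:-365}
  mkdir -p "$dir" || return 1
  openssl genrsa -out "$dir/dashboard.key" 2048 2>/dev/null || return 1
  openssl req -new -key "$dir/dashboard.key" -out "$dir/dashboard.csr" \
    -subj '/CN=dashboard-cert' || return 1
  openssl x509 -req -in "$dir/dashboard.csr" -signkey "$dir/dashboard.key" \
    -out "$dir/dashboard.crt" -days "$days" 2>/dev/null
}
```

Usage: make_dashboard_cert ~/dashboard-certs 365, then run the kubectl create secret generic command above from inside ~/dashboard-certs.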

3. Install the Dashboard

cd ~/Dashboard

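kubectl create -f recommended.yaml

Because the kubernetes-dashboard namespace was already created in step 2, kubectl reports an AlreadyExists error for the Namespace object; the remaining objects are still created.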

Check the result

kubectl get pods -A -o wide

kubectl get service -n kubernetes-dashboard -o wide

4. Create a Dashboard admin user

Create the service account

vim dashboard-admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: dashboard-admin
  namespace: kubernetes-dashboard

kubectl create -f dashboard-admin.yaml

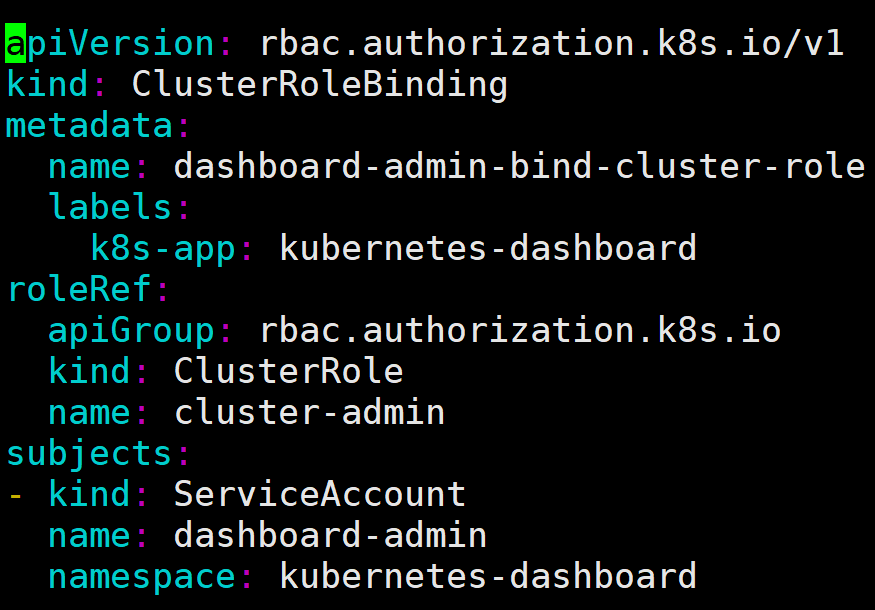

Grant the account permissions by binding it to the cluster-admin ClusterRole

vim dashboard-admin-bind-cluster-role.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-bind-cluster-role
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kubernetes-dashboard

kubectl create -f dashboard-admin-bind-cluster-role.yaml

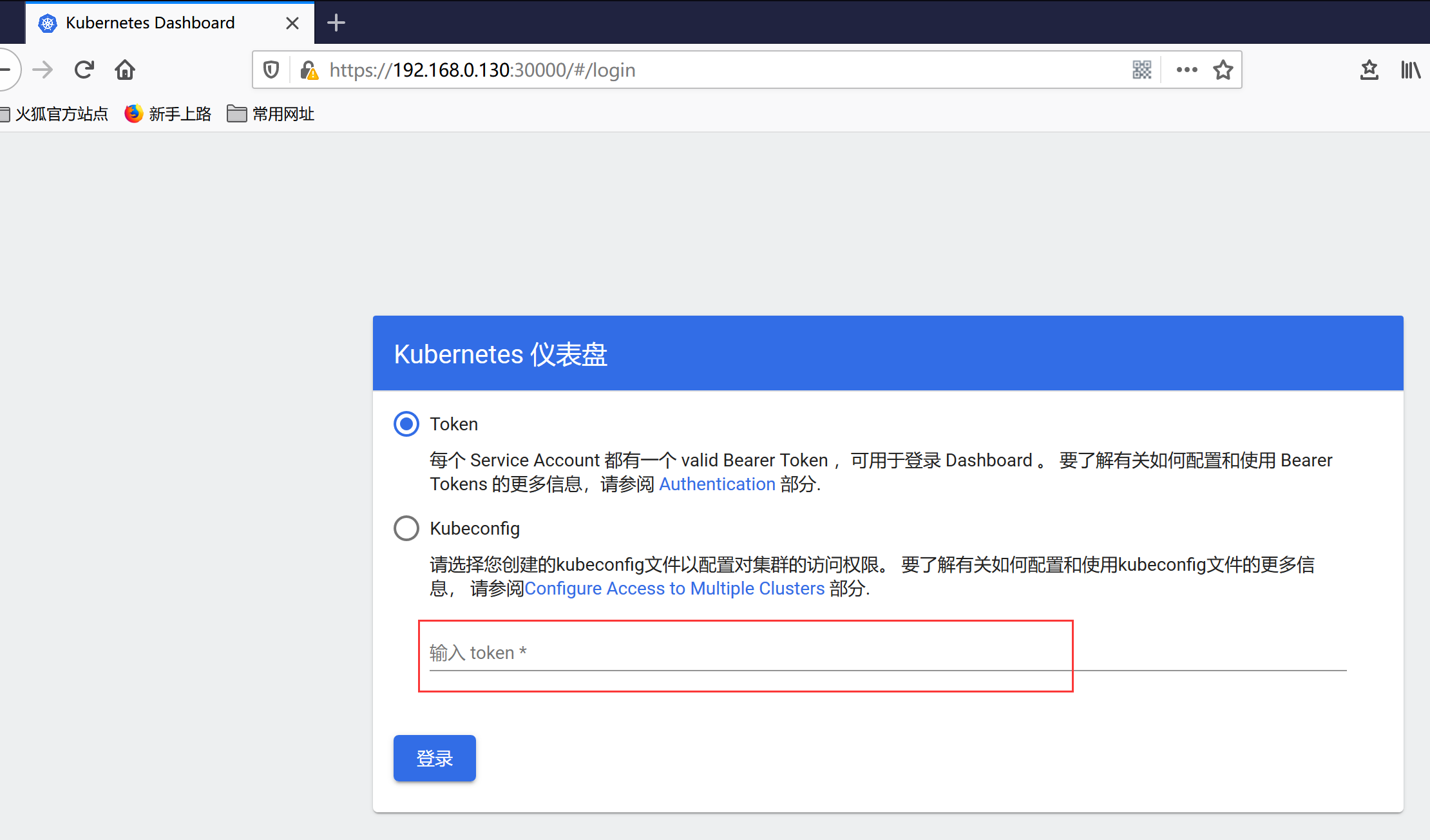

View and copy the user's token

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep dashboard-admin | awk '{print $1}')
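The describe command prints the whole Secret, so the token has to be picked out by eye. A sketch that prints only the decoded token, assuming the service account's token Secret is named dashboard-admin-token-<suffix> (auto-created on clusters older than 1.24; on 1.24 and later, kubectl -n kubernetes-dashboard create token dashboard-admin issues a token instead). dashboard_admin_token is a hypothetical name.

```shell
# Hypothetical helper: print the decoded token of the dashboard-admin service
# account by reading the token field of its Secret via jsonpath.
dashboard_admin_token() {
  local ns=kubernetes-dashboard secret
  secret=$(kubectl -n "$ns" get secret -o name | grep '^secret/dashboard-admin-token-' | head -n 1)
  [ -n "$secret" ] || return 1
  kubectl -n "$ns" get "$secret" -o jsonpath='{.data.token}' | base64 --decode
}
```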

Open https://192.168.0.130:30000 in a browser (because the Service is a NodePort, any node's IP works).

Choose Token, paste the token you just copied, and sign in.

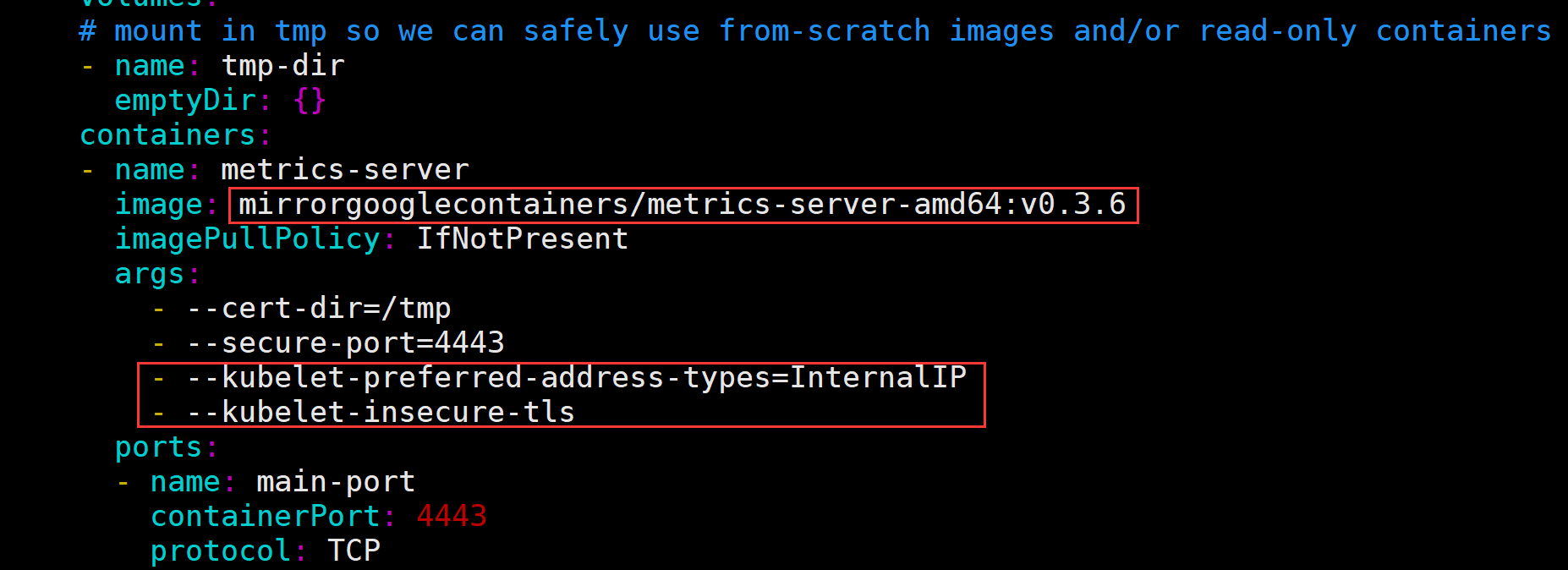

5. Install metrics-server on the master

cd ~

mkdir metrics-server

cd metrics-server

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

vim components.yaml

Change the image to a mirror:

image: mirrorgooglecontainers/metrics-server-amd64:v0.3.6

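Add the following arguments to the metrics-server container's args. They make metrics-server contact each kubelet by its node IP and skip verifying the kubelet's self-signed serving certificate: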

- --kubelet-preferred-address-types=InternalIP

- --kubelet-insecure-tls

kubectl create -f components.yaml

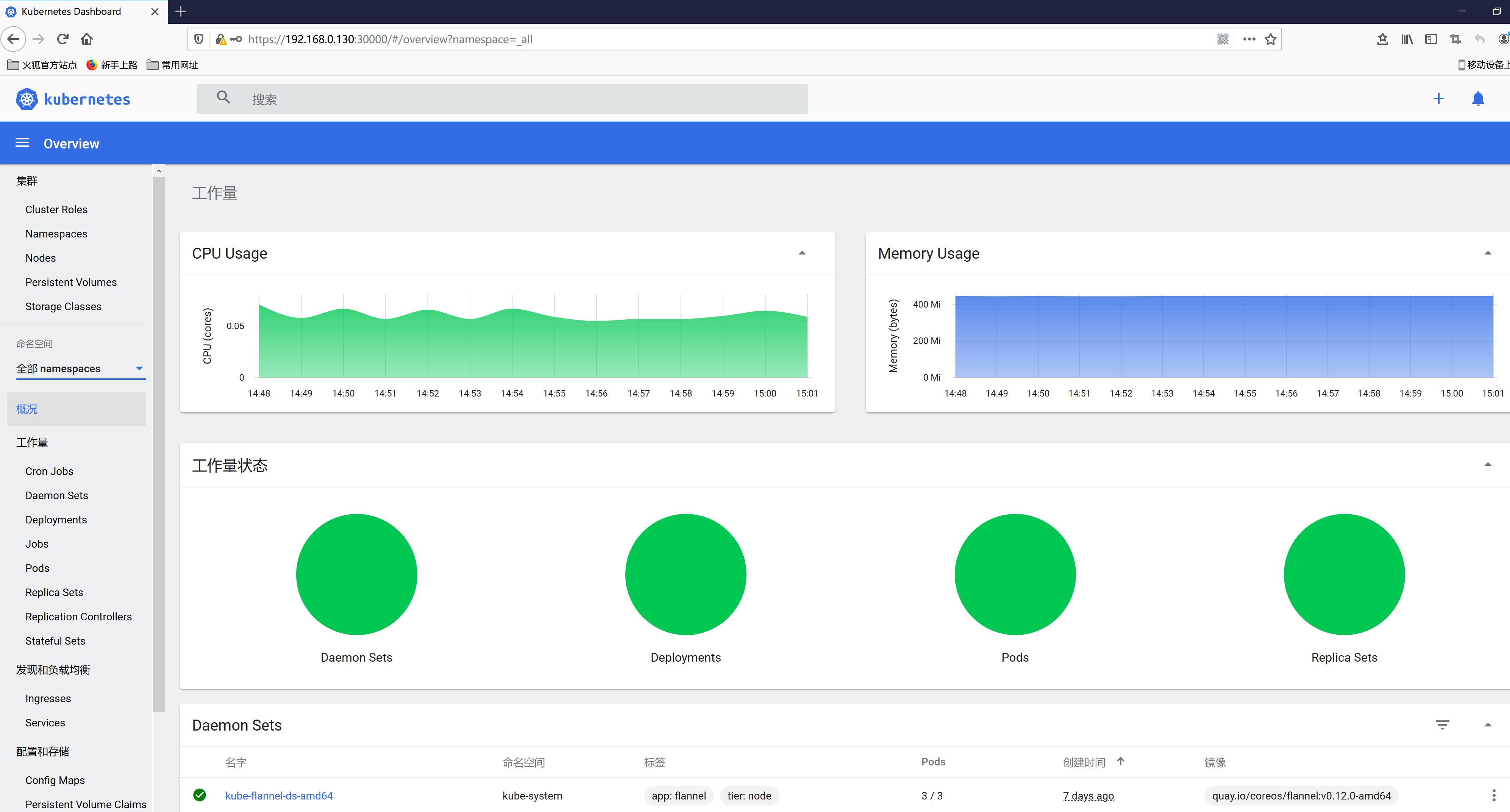

Once it is installed, the Dashboard shows CPU and memory usage, and kubectl top nodes also works.

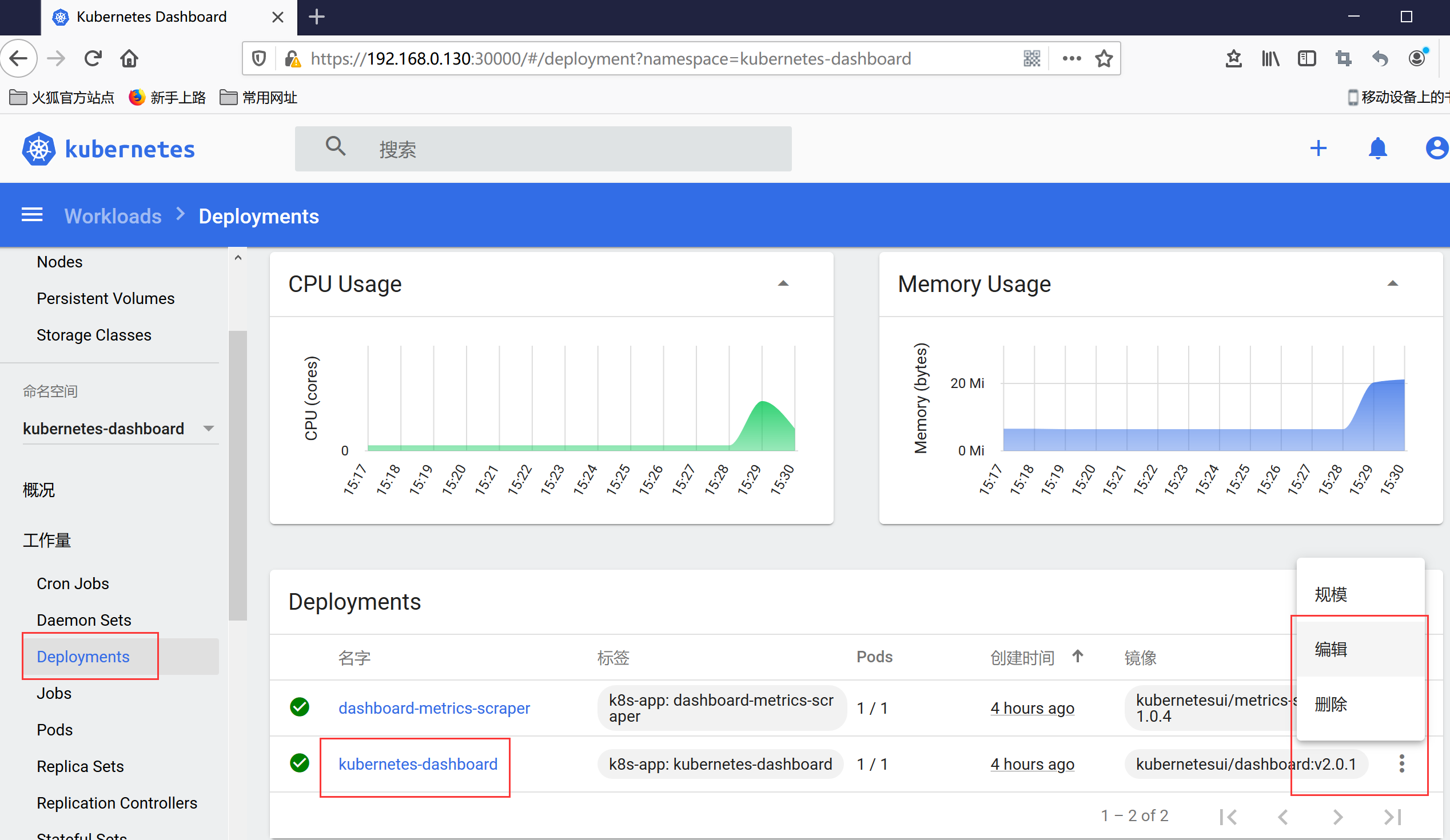

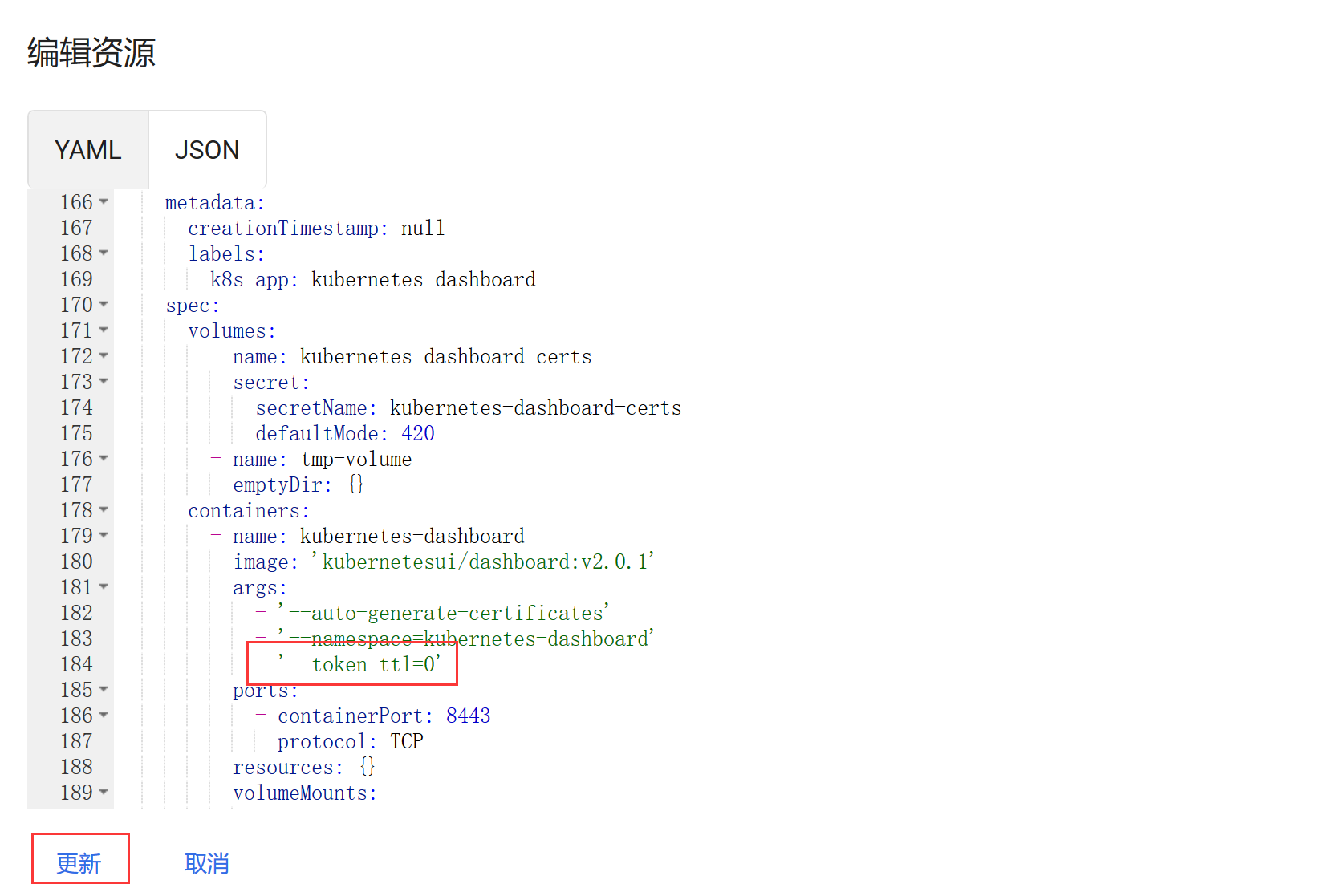

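6. Change the Dashboard login token expiry (15 minutes by default)

Log in to the Dashboard and edit the kubernetes-dashboard Deployment: add --token-ttl=<seconds> to the kubernetes-dashboard container's args (0 means tokens never expire).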

Deploying Helm

1. On the master, download the Helm binary package

wget https://get.helm.sh/helm-v2.16.7-linux-amd64.tar.gz

tar -zxvf helm-v2.16.7-linux-amd64.tar.gz

sudo mv linux-amd64/helm /usr/local/bin/helm

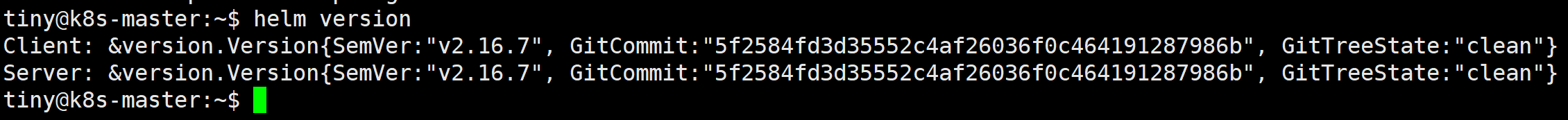

2. Check the Helm version

helm version


3. Enable Helm command completion

helm completion bash > ~/.helmrc && echo "source ~/.helmrc" >> ~/.bashrc

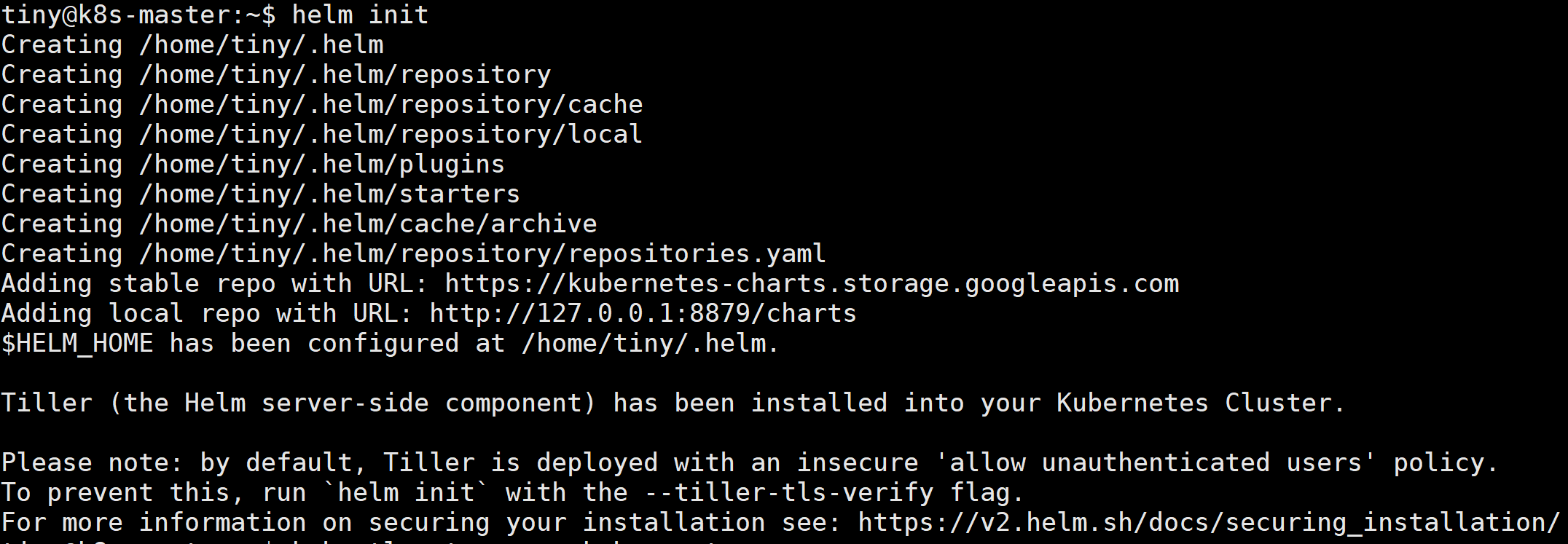

4. Install the Tiller server

helm init

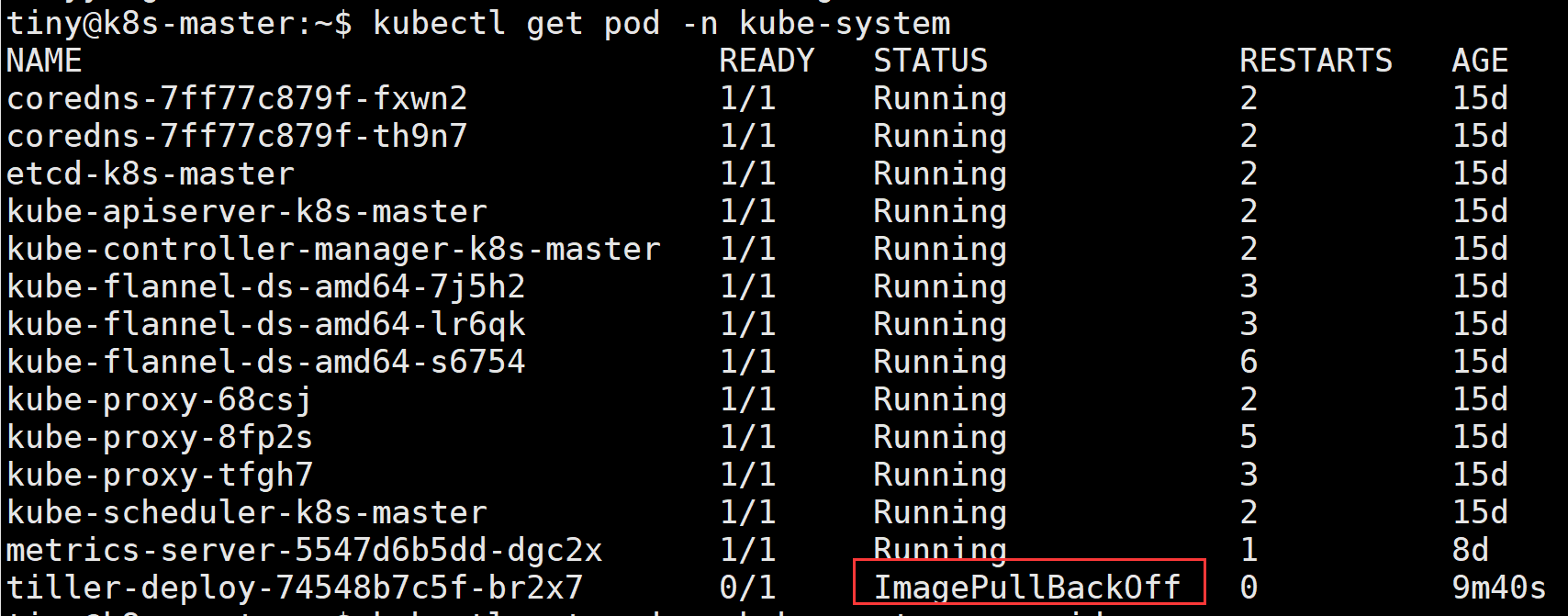

Checking the tiller pod shows that pulling its image failed

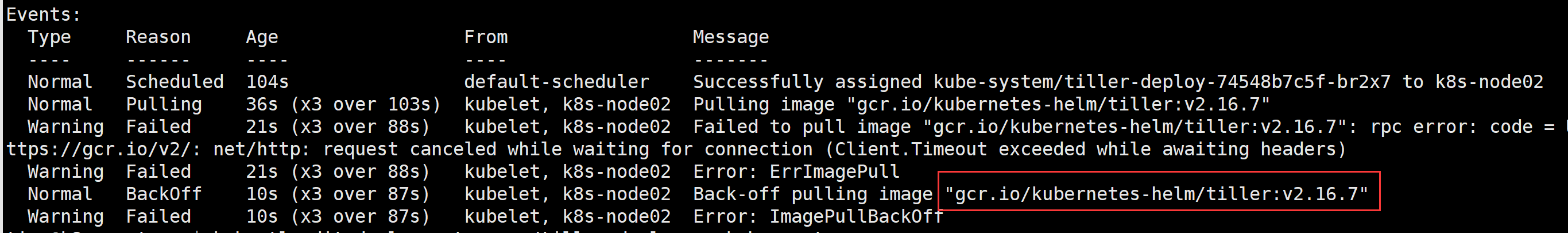

Run kubectl describe pod/tiller-deploy-74548b7c5f-br2x7 -n kube-system to see the details

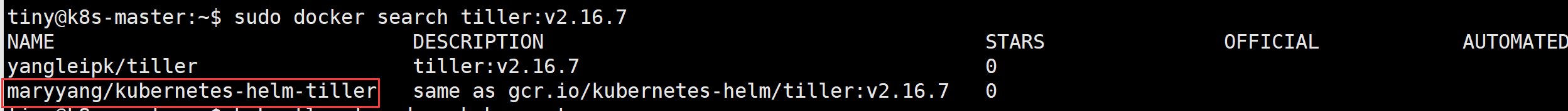

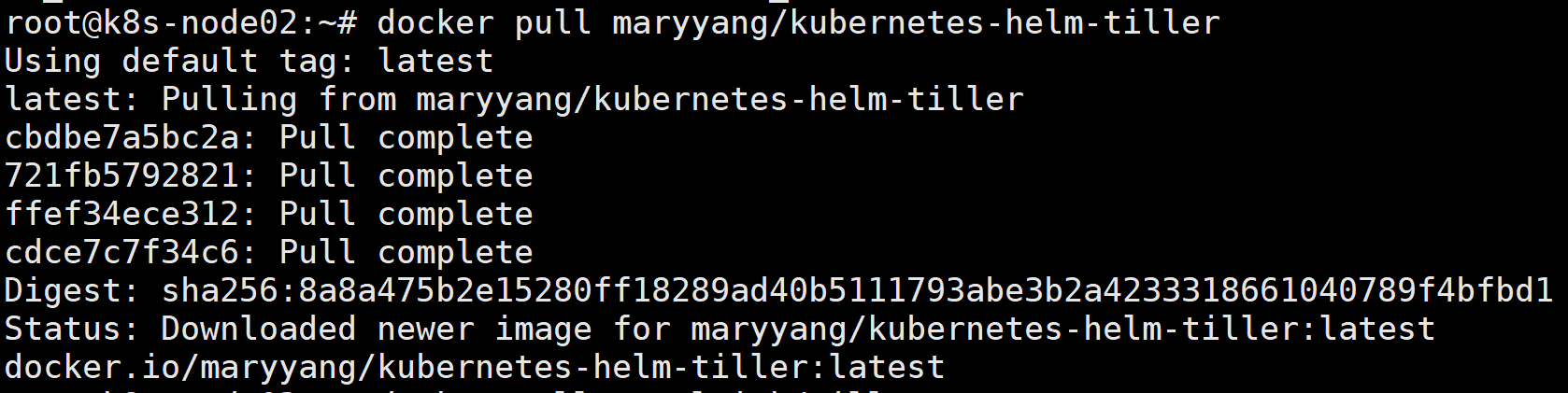

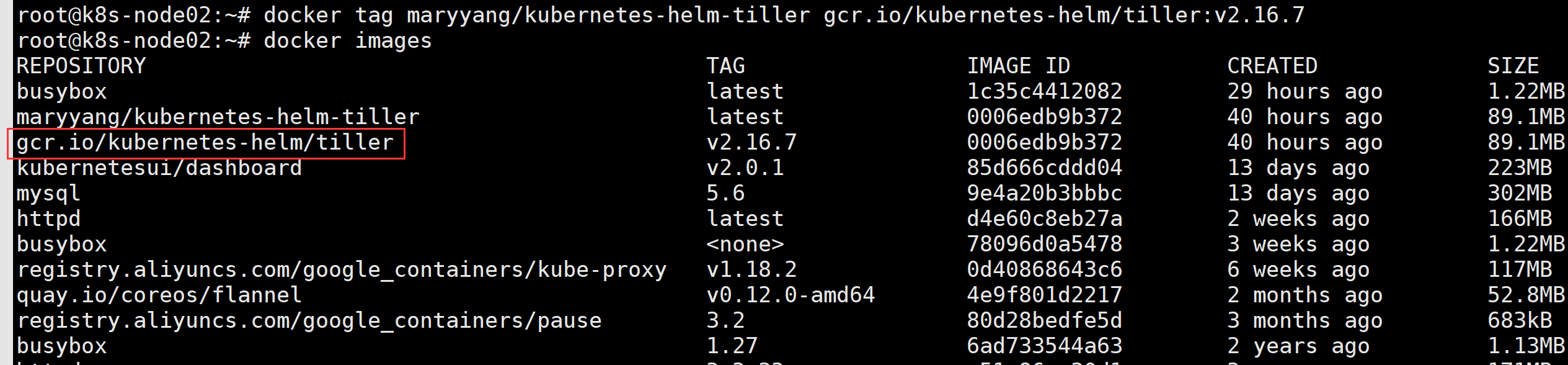

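On the node where the pod was scheduled, search for a mirror of the image (docker search) and pull it; here maryyang/kubernetes-helm-tiller was used.

Retag it with the name the tiller Deployment expects: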

docker tag maryyang/kubernetes-helm-tiller gcr.io/kubernetes-helm/tiller:v2.16.7

The pod is now running normally.

Import the image onto node01 as well

Save the image on node02:

docker save -o tiller.v2.16.7.tar.gz gcr.io/kubernetes-helm/tiller:v2.16.7

Copy the file to node01 and load it there:

docker load < tiller.v2.16.7.tar.gz
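Instead of saving to a file and copying it, the image can be streamed straight from one node to another. A sketch, assuming passwordless SSH from node02 to node01 and that the remote user is allowed to run docker (for example, is in the docker group); copy_image_to_node is a hypothetical name. Alternatively, Helm 2's helm init --tiller-image option can point Tiller at a reachable image from the start.

```shell
# Hypothetical helper: stream an image to another node over SSH without an
# intermediate file; docker load on the remote side reads the tar from stdin.
copy_image_to_node() {
  local image=$1 host=$2
  docker save "$image" | ssh "$host" docker load
}
```

On node02 this would be copy_image_to_node gcr.io/kubernetes-helm/tiller:v2.16.7 node01.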

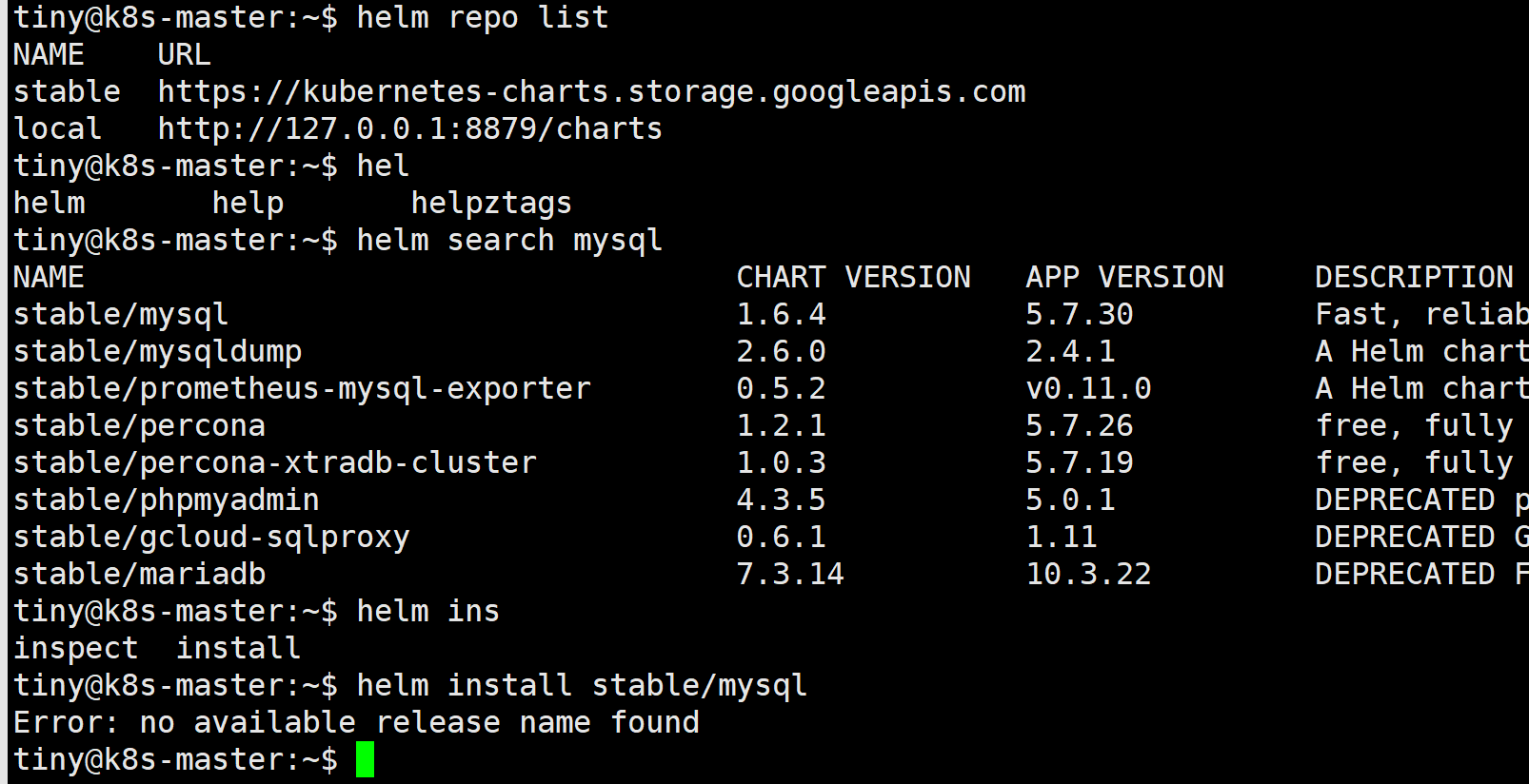

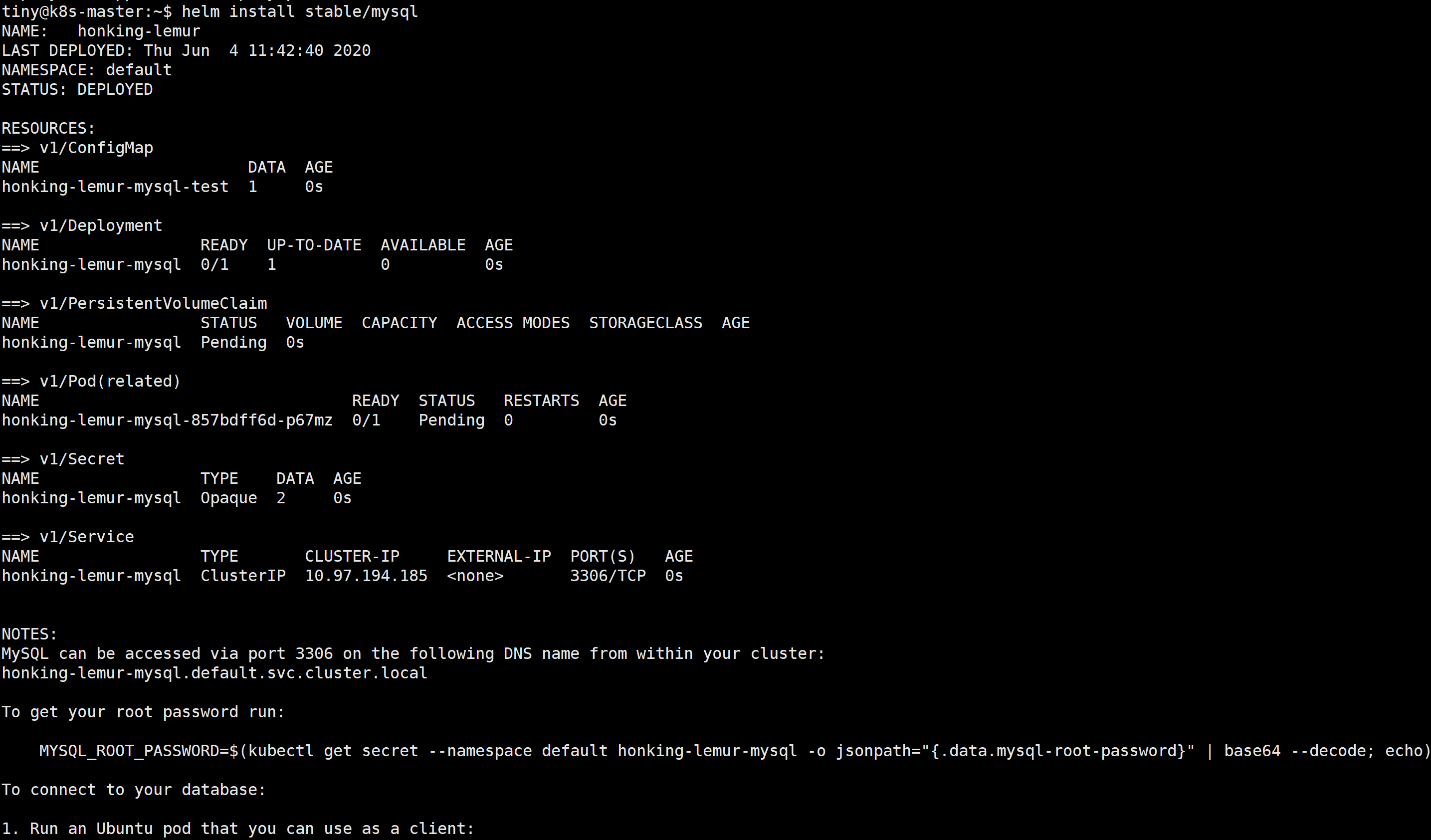

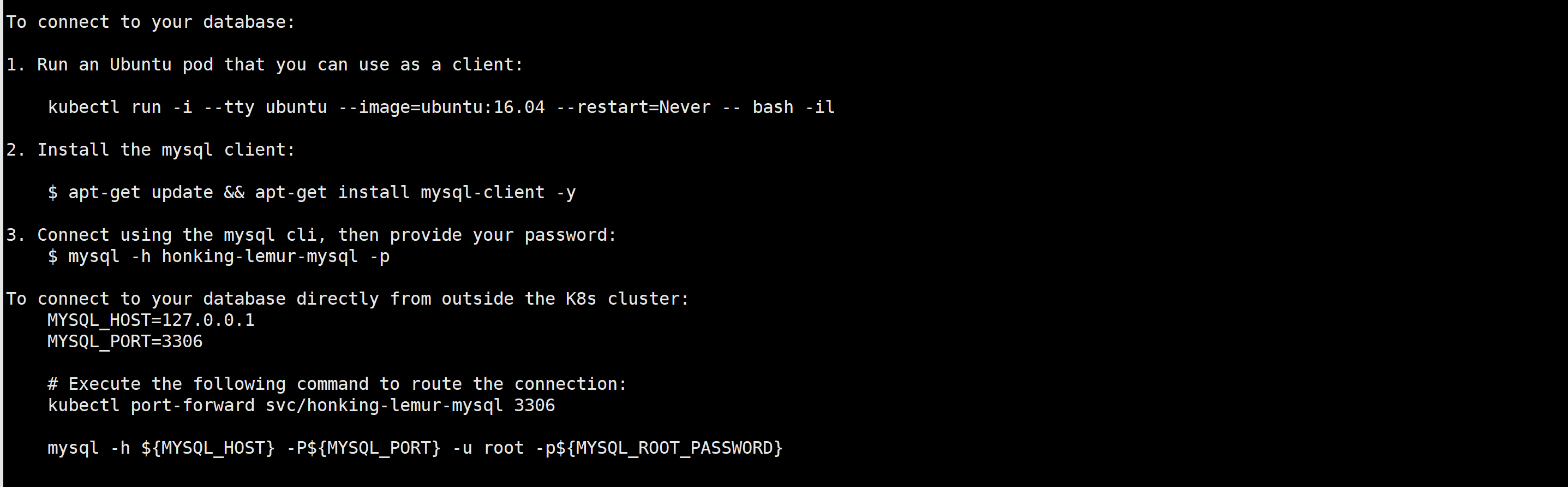

Install the mysql chart with Helm

The error occurs because the Tiller server lacks the necessary permissions

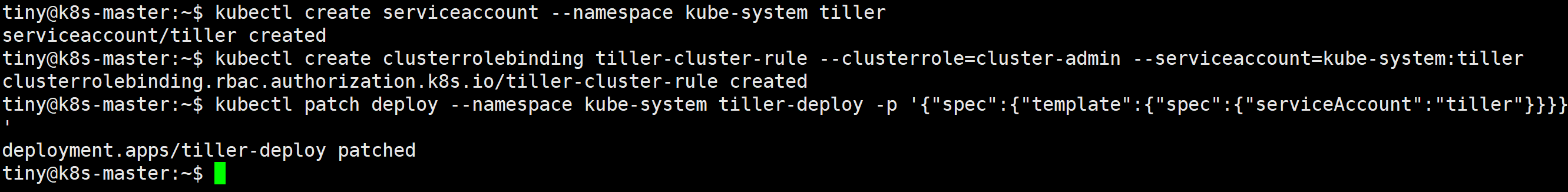

Run the following commands to grant the permissions:

kubectl create serviceaccount --namespace kube-system tiller

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'

Install the chart again

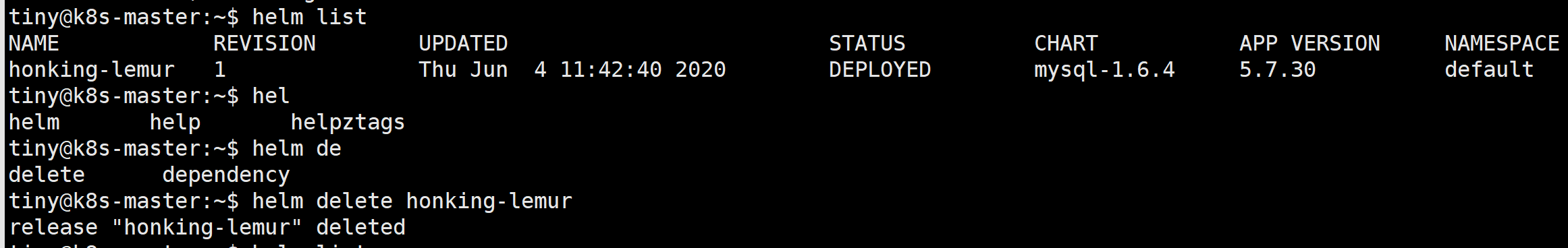

helm list shows the deployed releases, and helm delete removes a release.

k8s exercises

1. Deploy an application

kubectl create deployment httpd-app --image=httpd

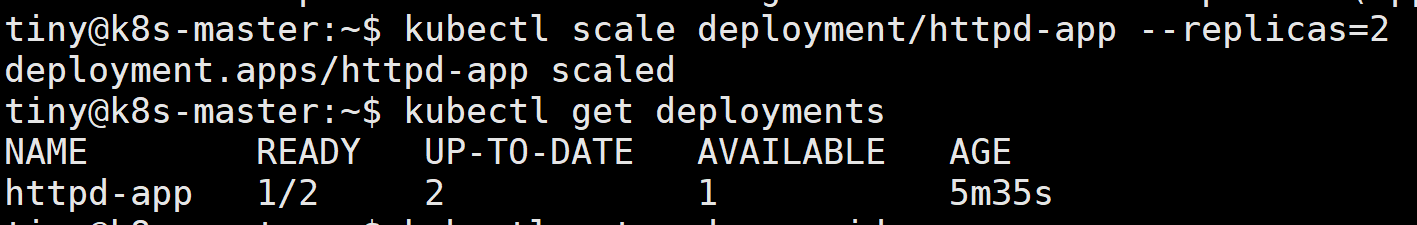

2. Scale the application

kubectl scale deployment/httpd-app --replicas=2
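kubectl scale returns as soon as the new replica count is recorded, before the extra pod is actually ready. kubectl rollout status deployment/httpd-app is the built-in way to wait; the polling sketch below shows the same idea using the Deployment's readyReplicas field (wait_for_ready_replicas and the 2-second interval are assumptions).

```shell
# Hypothetical helper: poll until a Deployment reports at least the wanted
# number of ready replicas, giving up after a number of attempts 2 s apart.
wait_for_ready_replicas() {
  local deploy=$1 want=$2 tries=${3:-30} ready
  while [ "$tries" -gt 0 ]; do
    ready=$(kubectl get deployment "$deploy" -o jsonpath='{.status.readyReplicas}')
    [ "${ready:-0}" -ge "$want" ] && return 0   # field is absent when 0 are ready
    tries=$((tries - 1))
    sleep 2
  done
  return 1
}
```

Usage: wait_for_ready_replicas httpd-app 2 && kubectl get pods -o wide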