Prerequisite: Elasticsearch and Kibana are already installed and running.

Pull the image. The Logstash image version must match the version of Elasticsearch and Kibana.

docker pull docker.elastic.co/logstash/logstash:6.6.2
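Since the image tag has to match the Elasticsearch version, the tag can be derived from what the cluster reports instead of typing it by hand. The following is a minimal sketch, not part of the original setup: `logstash_image_for` is a hypothetical helper name, and it assumes the JSON body returned by `GET /` on Elasticsearch contains a `"number"` field under `version`.

```shell
#!/bin/sh
# Print the Logstash image reference matching an Elasticsearch version.
# Reads the JSON body of "GET /" from stdin and extracts version.number.
# (Hypothetical helper; the sed-based parsing is deliberately simple.)
logstash_image_for() {
    version=$(sed -n 's/.*"number"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1)
    if [ -z "$version" ]; then
        echo "could not find version.number in input" >&2
        return 1
    fi
    echo "docker.elastic.co/logstash/logstash:${version}"
}

# Usage against the Elasticsearch host used in this article:
#   image=$(curl -s http://172.16.90.24:9200 | logstash_image_for) && docker pull "$image"
```

If the cluster runs 6.6.2, the helper prints the same image reference used in the `docker pull` command above.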

Write the pipeline configuration file that collects the logs

# cat /etc/logstash/conf.d/logstash.conf

input{
  stdin{}
}
filter{
}
output{
  elasticsearch{
    hosts => ["172.16.90.24:9200"]
    index => "logstash-%{+YYYY.MM.dd}"
  }
  stdout{
    codec => rubydebug
  }
}

This pipeline reads events from standard input and writes them to both Elasticsearch and standard output.
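The `index => "logstash-%{+YYYY.MM.dd}"` setting produces one index per day, named from each event's `@timestamp` in UTC. As a rough illustration of the resulting names, here is a small sketch; `logstash_index_for` is a hypothetical helper, and it assumes GNU `date` (for the `-d "@SECONDS"` form).

```shell
#!/bin/sh
# Print the daily index name for an epoch timestamp given in seconds.
# The date is formatted in UTC, mirroring how the index pattern is expanded.
# Assumes GNU date; hypothetical helper name.
logstash_index_for() {
    date -u -d "@$1" +"logstash-%Y.%m.%d"
}

# Index that an event arriving right now would be written to:
#   logstash_index_for "$(date +%s)"
# List the daily indices that exist on the Elasticsearch host from this article:
#   curl -s 'http://172.16.90.24:9200/_cat/indices/logstash-*?v'
```

An event stamped just before midnight UTC and one stamped just after therefore land in two different indices.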

Write the Logstash settings file

# cat /etc/logstash/conf.d/logstash.yml
http.host: "0.0.0.0"
xpack.monitoring.elasticsearch.url: http://172.16.90.24:9200

Start Logstash with Docker

docker run --rm -it -v /etc/logstash/conf.d/logstash.conf:/usr/share/logstash/pipeline/logstash.conf -v /etc/logstash/conf.d/logstash.yml:/usr/share/logstash/config/logstash.yml docker.elastic.co/logstash/logstash:6.6.2

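Parameter breakdown

docker run    # run a container
--rm    # remove the container when it exits
-it    # interactive mode with a TTY; the container runs in the foreground (use -d to run in the background)
-v /etc/logstash/conf.d/logstash.conf:/usr/share/logstash/pipeline/logstash.conf    # mount the log collection pipeline configuration
-v /etc/logstash/conf.d/logstash.yml:/usr/share/logstash/config/logstash.yml    # mount the Logstash settings file; the image's default settings use the host name elasticsearch
docker.elastic.co/logstash/logstash:6.6.2    # the image to run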
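Because the same long command is reused later with only an extra option, it can help to assemble it from variables. This is a sketch under assumptions: `CONF_DIR`, `IMAGE`, and `logstash_run_cmd` are hypothetical names introduced here, and the function only prints the command so it can be inspected before it is run.

```shell
#!/bin/sh
# Hypothetical variables holding the paths and image used in this article.
CONF_DIR=/etc/logstash/conf.d
IMAGE=docker.elastic.co/logstash/logstash:6.6.2

# Print the docker run command. Any extra arguments (for example
# "-p 5044:5044") are placed just before the image name.
logstash_run_cmd() {
    echo docker run --rm -it \
        -v "$CONF_DIR/logstash.conf:/usr/share/logstash/pipeline/logstash.conf" \
        -v "$CONF_DIR/logstash.yml:/usr/share/logstash/config/logstash.yml" \
        "$@" "$IMAGE"
}

# Inspect the command:   logstash_run_cmd
# Run it:                sh -c "$(logstash_run_cmd)"
```

Printing first and executing second keeps the mounts visible, which is useful when a wrong path would otherwise make Docker silently create an empty directory in place of the file.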

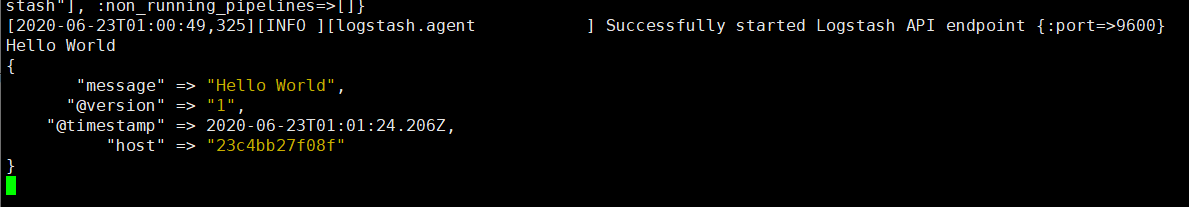

Because the input is stdin, events can be typed directly into the terminal

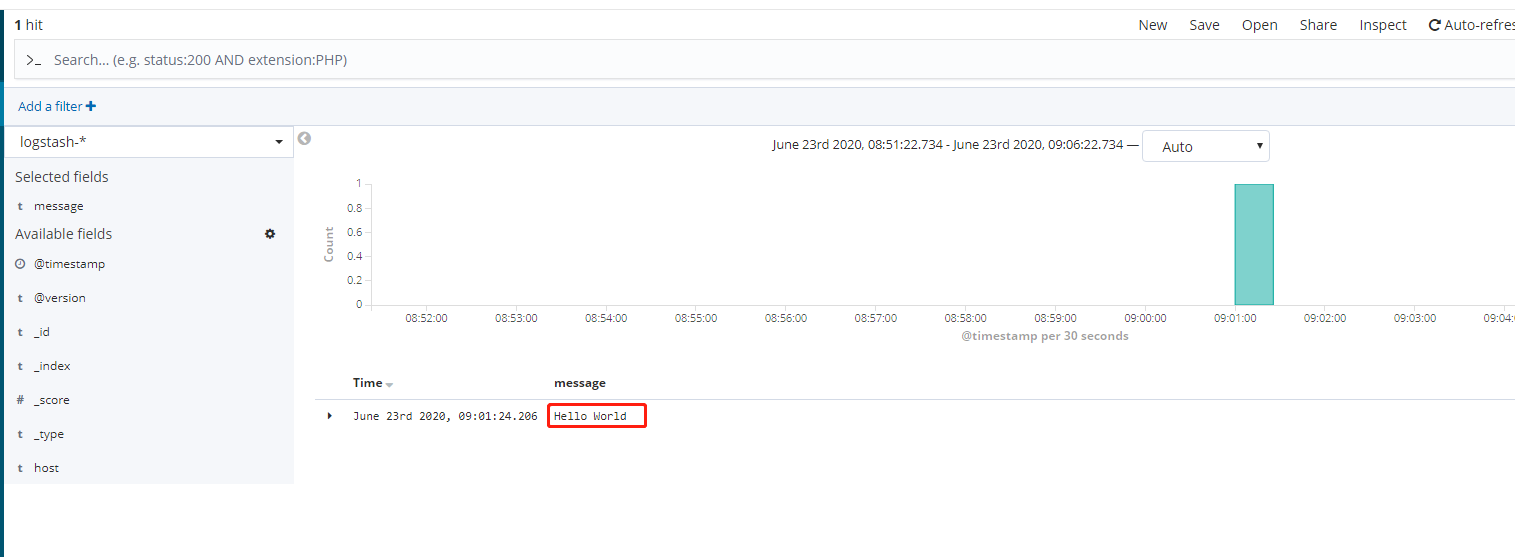

Log in to Kibana and add the log index there; the same entries are visible in Kibana as well

Collect logs with Logstash listening on port 5044

Logstash pipeline configuration

input{
  beats{
    port => 5044
  }
}
filter{
}
output{
  elasticsearch{
    hosts => ["172.16.90.24:9200"]
    index => "logstash-%{+YYYY.MM.dd}"
  }
  stdout{
    codec => rubydebug
  }
}

This pipeline listens on port 5044 and writes incoming logs to both Elasticsearch and standard output.

Start it

docker run --rm -it -v /etc/logstash/conf.d/logstash.conf:/usr/share/logstash/pipeline/logstash.conf -v /etc/logstash/conf.d/logstash.yml:/usr/share/logstash/config/logstash.yml -p 5044:5044 docker.elastic.co/logstash/logstash:6.6.2

The -p 5044:5044 option publishes port 5044 on the host.

Install Filebeat and configure it to collect logs

# sed '/#/d' /etc/filebeat/filebeat.yml |sed '/^$/d'

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/messages
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
setup.template.settings:
  index.number_of_shards: 3
setup.kibana:
output.logstash:
  hosts: ["192.168.1.11:5044"]
processors:
  - add_host_metadata: ~
  - add_cloud_metadata: ~
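The sed pipeline used above to print the effective configuration deletes every line that contains a `#` anywhere, so a setting with a trailing inline comment would be hidden too. A slightly more conservative sketch is shown below; `effective_config` is a hypothetical helper name, and it only drops lines that are blank or whose first non-blank character is `#`.

```shell
#!/bin/sh
# Print a config file without comment-only lines and blank lines.
# Reads the file given as the first argument, or stdin when none is given.
# Lines that carry a setting plus an inline comment are kept as they are.
effective_config() {
    sed -e '/^[[:space:]]*#/d' -e '/^[[:space:]]*$/d' "$@"
}

# Usage:
#   effective_config /etc/filebeat/filebeat.yml
```

For the stock filebeat.yml both approaches should print the same settings; the difference only shows up once inline comments are added.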

Start Filebeat

systemctl start filebeat

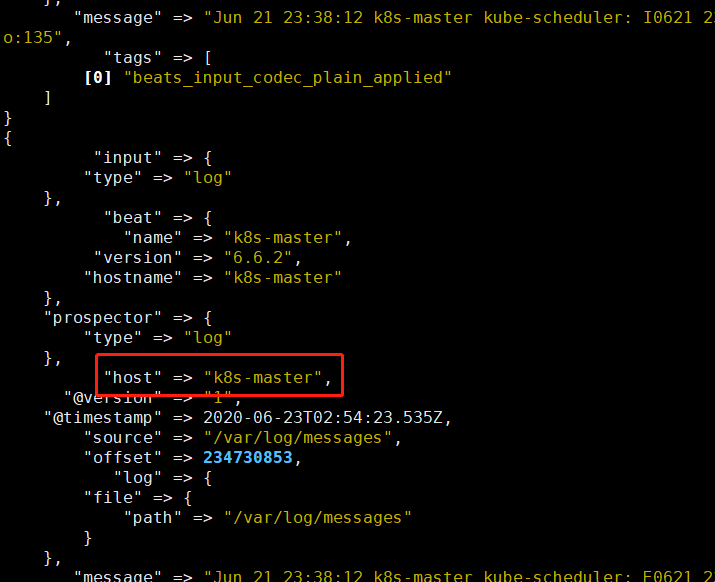

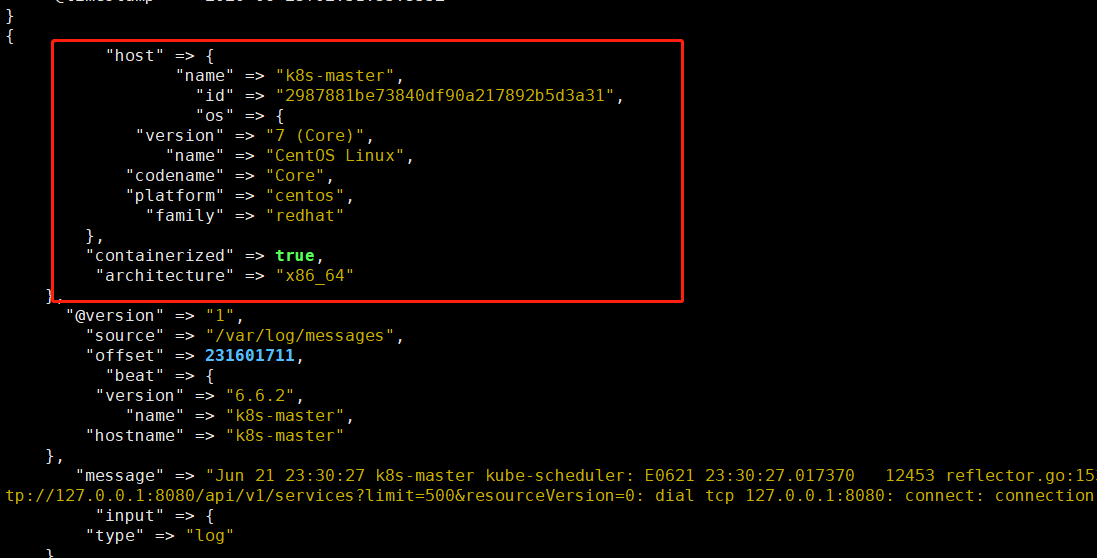

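At this point, however, Logstash reports an error when writing to Elasticsearch: failed to parse field [host] of type [text] in document with id 'E0lsjW4BTdp_eLcgfhbu'. The Elasticsearch log shows that host is now a JSON object, while the index maps host as text, so the field has to be turned into a string.

Change the configuration: add a filter that assigns the value of host.name to host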

input{
  beats{
    port => 5044
  }
  #stdin {}
}
filter{
  mutate {
    rename => { "[host][name]" => "host" }
  }
}
output{
  elasticsearch{
    hosts => ["172.16.90.24:9200"]
    index => "logstash-%{+YYYY.MM.dd}"
  }
  stdout{
    codec => rubydebug
  }
}

Start Logstash with Docker again

docker run --rm -it -v /etc/logstash/conf.d/logstash.conf:/usr/share/logstash/pipeline/logstash.conf -v /etc/logstash/conf.d/logstash.yml:/usr/share/logstash/config/logstash.yml -p 5044:5044 docker.elastic.co/logstash/logstash:6.6.2

The output is now normal: the host field is a string rather than a JSON object, and events pass through Logstash without errors.