Installation reference:

https://www.cnblogs.com/vipsoft/p/13233045.html

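The environment requires ZooKeeper and Kafka.

Installing and configuring Kafka by hand is tedious when the goal is just to learn it, so this setup uses Docker instead.

First, search Docker Hub for Kafka-related images: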

    [root@localhost ~]# docker search kafka
    NAME                                    DESCRIPTION                                     STARS   OFFICIAL   AUTOMATED
    wurstmeister/kafka                      Multi-Broker Apache Kafka Image                 1451               [OK]
    spotify/kafka                           A simple docker image with both Kafka and Zo…   414                [OK]
    sheepkiller/kafka-manager               kafka-manager                                   211                [OK]
    kafkamanager/kafka-manager              Docker image for Kafka manager                  146
    ches/kafka                              Apache Kafka. Tagged versions. JMX. Cluster-…   117                [OK]
    hlebalbau/kafka-manager                 CMAK (previous known as Kafka Manager) As Do…   90                 [OK]
    landoop/kafka-topics-ui                 UI for viewing Kafka Topics config and data …   36                 [OK]
    debezium/kafka                          Kafka image required when running the Debezi…   24                 [OK]
    solsson/kafka                           http://kafka.apache.org/documentation.html#q…   23                 [OK]
    danielqsj/kafka-exporter                Kafka exporter for Prometheus                   23                 [OK]
    johnnypark/kafka-zookeeper              Kafka and Zookeeper combined image              23
    landoop/kafka-lenses-dev                Lenses with Kafka. +Connect +Generators +Con…   21                 [OK]
    landoop/kafka-connect-ui                Web based UI for Kafka Connect.                 17                 [OK]
    digitalwonderland/kafka                 Latest Kafka - clusterable                      15                 [OK]
    tchiotludo/kafkahq                      Kafka GUI to view topics, topics data, consu…   6                  [OK]
    solsson/kafka-manager                   Deprecated in favor of solsson/kafka:cmak       5                  [OK]
    solsson/kafkacat                        https://github.com/edenhill/kafkacat/pull/110   5                  [OK]
    solsson/kafka-prometheus-jmx-exporter   For monitoring of Kubernetes Kafka clusters …   4                  [OK]
    solsson/kafka-consumers-prometheus      https://github.com/cloudworkz/kafka-minion      4
    mesosphere/kafka-client                 Kafka client                                    3                  [OK]
    zenko/kafka-manager                     Kafka Manger https://github.com/yahoo/kafka-…   2                  [OK]
    digitsy/kafka-magic                     Kafka Magic images                              2
    anchorfree/kafka                        Kafka broker and Zookeeper image                2
    zenreach/kafka-connect                  Zenreach's Kafka Connect Docker Image           2
    humio/kafka-dev                         Kafka build for dev.                            0
    [root@localhost ~]#

Then search for a ZooKeeper image:

    [root@localhost ~]# docker search zookeeper
    NAME                                DESCRIPTION                                     STARS   OFFICIAL   AUTOMATED
    zookeeper                           Apache ZooKeeper is an open-source server wh…   1170    [OK]
    jplock/zookeeper                    Builds a docker image for Zookeeper version …   165                [OK]
    wurstmeister/zookeeper                                                              158                [OK]
    mesoscloud/zookeeper                ZooKeeper                                       73                 [OK]
    mbabineau/zookeeper-exhibitor                                                       23                 [OK]
    digitalwonderland/zookeeper         Latest Zookeeper - clusterable                  23                 [OK]
    tobilg/zookeeper-webui              Docker image for using `zk-web` as ZooKeeper…   15                 [OK]
    debezium/zookeeper                  Zookeeper image required when running the De…   14                 [OK]
    confluent/zookeeper                 [deprecated - please use confluentinc/cp-zoo…   13                 [OK]
    31z4/zookeeper                      Dockerized Apache Zookeeper.                    9                  [OK]
    elevy/zookeeper                     ZooKeeper configured to execute an ensemble …   7                  [OK]
    thefactory/zookeeper-exhibitor      Exhibitor-managed ZooKeeper with S3 backups …   6                  [OK]
    engapa/zookeeper                    Zookeeper image optimised for being used int…   3
    emccorp/zookeeper                   Zookeeper                                       2
    josdotso/zookeeper-exporter         ref: https://github.com/carlpett/zookeeper_e…   2                  [OK]
    paulbrown/zookeeper                 Zookeeper on Kubernetes (PetSet)                1                  [OK]
    perrykim/zookeeper                  k8s - zookeeper ( forked k8s contrib )          1                  [OK]
    dabealu/zookeeper-exporter          zookeeper exporter for prometheus               1                  [OK]
    duffqiu/zookeeper-cli                                                               1                  [OK]
    openshift/zookeeper-346-fedora20    ZooKeeper 3.4.6 with replication support        1
    midonet/zookeeper                   Dockerfile for a Zookeeper server.              0                  [OK]
    pravega/zookeeper-operator          Kubernetes operator for Zookeeper               0
    phenompeople/zookeeper              Apache ZooKeeper is an open-source server wh…   0                  [OK]
    avvo/zookeeper                      Apache Zookeeper                                0                  [OK]
    humio/zookeeper-dev                 zookeeper build with zulu jvm.                  0
    [root@localhost ~]#

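Normally the image with the most stars is a fine choice. Here, though, ZooKeeper is paired with Kafka, so both images are taken from the same publisher (wurstmeister).

Pull the images: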

    docker pull wurstmeister/zookeeper
    docker pull wurstmeister/kafka

Then run one container from each image:

    # Replace <LINUX_HOST_IP> with the IP address of the Linux host running Docker.
    docker run -d --name zookeeper -p 2181:2181 -t wurstmeister/zookeeper

    docker run -d --name kafka -p 9092:9092 \
      -e KAFKA_BROKER_ID=0 \
      -e KAFKA_ZOOKEEPER_CONNECT=<LINUX_HOST_IP>:2181 \
      -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://<LINUX_HOST_IP>:9092 \
      -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 \
      -t wurstmeister/kafka

Check that both containers are running:

    [root@localhost ~]# docker ps
    CONTAINER ID   IMAGE                    COMMAND                  CREATED      STATUS      PORTS                                                                   NAMES
    329fb126c6ee   wurstmeister/kafka       "start-kafka.sh"         2 days ago   Up 2 days   0.0.0.0:9092->9092/tcp, :::9092->9092/tcp                               kafka
    6c8c9f12a5f2   wurstmeister/zookeeper   "/bin/sh -c '/usr/sb…"   2 days ago   Up 2 days   22/tcp, 2888/tcp, 3888/tcp, 0.0.0.0:2181->2181/tcp, :::2181->2181/tcp   zookeeper
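`docker ps` only shows that the containers are up. It can also help to confirm that the published ports accept connections from the machine where the Java client will run. The following is a minimal sketch that uses only the JDK; the class name `PortCheck` and the 2-second timeout are assumptions for illustration, and a successful TCP connect does not prove that the broker is fully healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortCheck {
    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host unknown.
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: pass the Linux host IP as the first argument when
        // running from another machine; defaults to localhost otherwise.
        String host = args.length > 0 ? args[0] : "localhost";
        System.out.println("ZooKeeper 2181 reachable: " + isReachable(host, 2181, 2000));
        System.out.println("Kafka 9092 reachable: " + isReachable(host, 9092, 2000));
    }
}
```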

The Kafka container ships with shell scripts for a console producer and a console consumer.

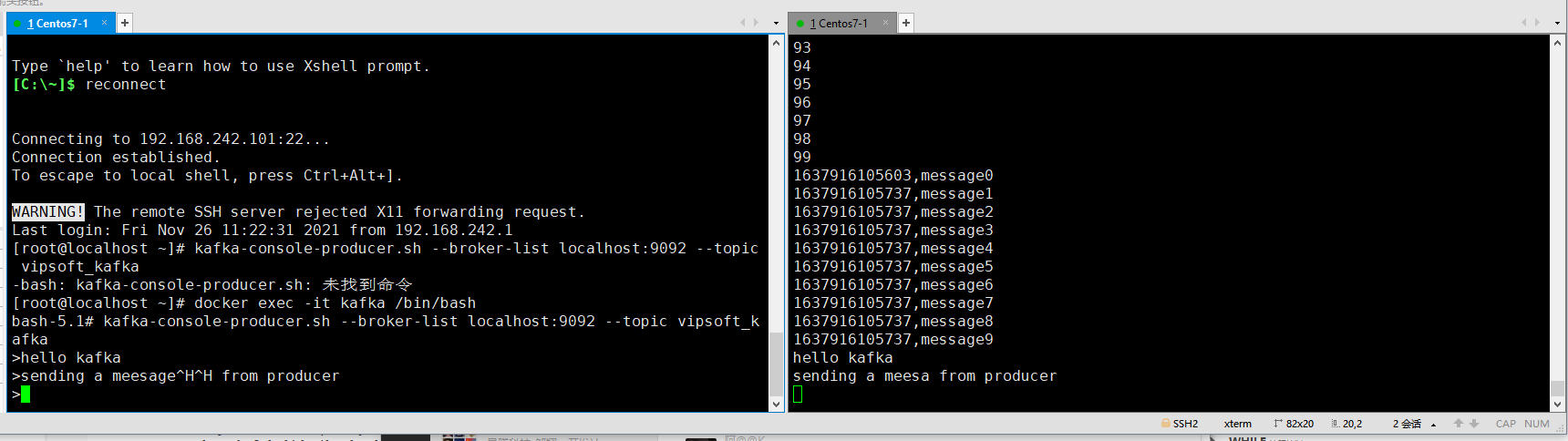

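These scripts can be used to test communication. Note that the producer and the consumer each occupy a terminal window, so open two additional terminals for the test.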

    # Terminal 1: producer
    [root@centos-linux ~]# docker exec -it kafka /bin/bash
    bash-4.4# kafka-console-producer.sh --broker-list localhost:9092 --topic vipsoft_kafka

    # Terminal 2: consumer
    [root@centos-linux ~]# docker exec -it kafka /bin/bash
    bash-4.4# kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic vipsoft_kafka --from-beginning

Java API:

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>0.11.0.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-streams</artifactId>
        <version>0.11.0.0</version>
    </dependency>

Demo code:

Producer: sending messages asynchronously

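    // Imports used by the producer examples (top of the test class file).
    // TOPIC is a String constant defined in the test class.
    import java.util.Properties;
    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.Producer;
    import org.apache.kafka.clients.producer.ProducerRecord;
    import org.junit.Test;

    /**
     * Asynchronous send
     */
    @Test
    public void asyncMessageSend() {
        Properties props = new Properties();
        props.put("bootstrap.servers", "192.168.242.101:9092"); // Kafka cluster (broker list)
        props.put("acks", "all");
        props.put("retries", 1);              // number of retries
        props.put("batch.size", 16384);       // batch size in bytes
        props.put("linger.ms", 1);            // time to wait before sending a batch
        props.put("buffer.memory", 33554432); // RecordAccumulator buffer size
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

        Producer<String, String> producer = new KafkaProducer<>(props);
        for (int i = 0; i < 100; i++) {
            producer.send(new ProducerRecord<>(TOPIC, Integer.toString(i), Integer.toString(i)),
                (metadata, exception) -> {
                    // Callback: invoked asynchronously when the producer receives the ack
                    if (null == exception) {
                        System.out.println("success->" + metadata.offset());
                    } else {
                        exception.printStackTrace();
                    }
                });
        }
        producer.close();
    }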
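The producer examples in this post build the same configuration inline each time. One way to avoid the repetition is a small helper that returns the shared `Properties`. This is only a sketch: the class name `ProducerProps` and the method `forBroker` are hypothetical, and the values are simply the ones used in the demo, not tuned recommendations.

```java
import java.util.Properties;

class ProducerProps {
    /** Builds the producer settings used in the demo for the given broker list. */
    static Properties forBroker(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("acks", "all");             // wait for all in-sync replicas
        props.put("retries", 1);              // number of retries
        props.put("batch.size", 16384);       // batch size in bytes
        props.put("linger.ms", 1);            // time to wait before sending a batch
        props.put("buffer.memory", 33554432); // RecordAccumulator buffer size
        props.put("key.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }

    public static void main(String[] args) {
        // Print the resulting configuration for inspection.
        forBroker("192.168.242.101:9092")
                .forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

A test method could then call `new KafkaProducer<>(ProducerProps.forBroker("192.168.242.101:9092"))` instead of repeating the `props.put` lines.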

    /**
     * Synchronous send: send() returns a Future, and calling get() on it
     * blocks until the broker acknowledges the record.
     */
    @Test
    public void syncMessageSend() {
        try {
            Properties props = new Properties();
            props.put("bootstrap.servers", "192.168.242.101:9092"); // Kafka cluster (broker list)
            props.put("acks", "all");
            props.put("retries", 1);              // number of retries
            props.put("batch.size", 16384);       // batch size in bytes
            props.put("linger.ms", 1);            // time to wait before sending a batch
            props.put("buffer.memory", 33554432); // RecordAccumulator buffer size
            props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

            Producer<String, String> producer = new KafkaProducer<>(props);
            for (int i = 0; i < 100; i++) {
                // get() blocks until this record has been acknowledged
                producer.send(new ProducerRecord<>(TOPIC, Integer.toString(i), Integer.toString(i))).get();
            }
            producer.close();
        } catch (Exception exception) {
            exception.printStackTrace();
        }
    }
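The difference between the two producer examples comes down to how the result of `send()` is handled: registering a callback returns immediately, while calling `get()` on the returned `Future` blocks until the result arrives. The sketch below shows the same contrast without a broker. `fakeSend` is a hypothetical stand-in for `producer.send()`, built on the JDK's `CompletableFuture`; it is not part of the Kafka API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;

class SendModes {
    /** Hypothetical stand-in for producer.send(): completes with a fake offset on another thread. */
    static CompletableFuture<Long> fakeSend(long offset) {
        return CompletableFuture.supplyAsync(() -> offset);
    }

    /** Asynchronous style: attach a callback to each send and return without waiting. */
    static List<CompletableFuture<Void>> sendAsync(int count, List<Long> acked) {
        List<CompletableFuture<Void>> pending = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            pending.add(fakeSend(i).thenAccept(offset -> {
                // Callbacks may run on different threads, so guard the shared list.
                synchronized (acked) {
                    acked.add(offset);
                }
            }));
        }
        return pending;
    }

    /** Synchronous style: block on get() before issuing the next send. */
    static List<Long> sendSync(int count) {
        List<Long> acked = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            try {
                acked.add(fakeSend(i).get());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            } catch (ExecutionException e) {
                throw new IllegalStateException(e);
            }
        }
        return acked;
    }
}
```

In the synchronous variant the acknowledgements arrive strictly in send order, at the cost of one round trip per message. In the asynchronous variant the callbacks may complete in any order.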

Consumer: committing offsets automatically

    // Imports used by the consumer examples (top of the test class file).
    import java.util.Arrays;
    import java.util.Properties;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;
    import org.junit.Test;

    /**
     * Automatic offset commit
     */
    @Test
    public void autoReceiveCommit() {
        Properties props = new Properties();
        props.put("bootstrap.servers", "192.168.242.101:9092");
        props.put("group.id", "test");
        props.put("enable.auto.commit", "true"); // enable automatic offset commit
        props.put("auto.commit.interval.ms", "1000");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
        consumer.subscribe(Arrays.asList(TOPIC));
        while (true) {
            ConsumerRecords<String, String> records = consumer.poll(100);
            records.forEach(record ->
                System.out.printf("offset = %d, key = %s, value = %s%n",
                    record.offset(), record.key(), record.value()));
        }
    }

Manual commit (synchronous):

    /**
     * Manual offset commit (synchronous)
     */
    @Test
    public void manualReceiveCommitWithSync() {
        Properties props = new Properties();
        // Kafka cluster
        props.put("bootstrap.servers", "192.168.242.101:9092");
        // Consumer group: consumers with the same group.id belong to the same group
        props.put("group.id", "test");
        props.put("enable.auto.commit", "false"); // disable automatic offset commit
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
        consumer.subscribe(Arrays.asList(TOPIC)); // subscribe to the topic
        while (true) {
            // Pull a batch of records
            ConsumerRecords<String, String> records = consumer.poll(100);
            records.forEach(record -> {
                System.out.printf("offset = %d, key = %s, value = %s%n",
                    record.offset(), record.key(), record.value());
            });

            /*
             * There are two ways to commit offsets manually:
             * commitSync (synchronous) and commitAsync (asynchronous).
             *
             * Both commit the offsets for the batch returned by the last poll
             * (the highest offset consumed in each partition, plus one).
             * The difference is that commitSync blocks the current thread until the
             * commit succeeds and retries automatically on failure (it can still fail
             * because of unrecoverable errors), whereas commitAsync has no retry
             * mechanism, so a commit may fail.
             */
            // Synchronous commit: the current thread blocks until the offsets are committed
            consumer.commitSync();
        }
    }
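To make the committed value concrete: for each partition, the offset that gets committed is the offset of the next record to read, which is the highest consumed offset plus one. The sketch below computes that per partition for a batch, using plain JDK types. The `Rec` class is a hypothetical, stripped-down stand-in for a consumer record (partition and offset only), not a Kafka class.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class CommitOffsets {
    /** Hypothetical minimal record: only the partition number and the offset. */
    static final class Rec {
        final int partition;
        final long offset;

        Rec(int partition, long offset) {
            this.partition = partition;
            this.offset = offset;
        }
    }

    /** For each partition in the batch, returns the next offset to read (highest offset + 1). */
    static Map<Integer, Long> offsetsToCommit(Iterable<Rec> batch) {
        Map<Integer, Long> next = new HashMap<>();
        for (Rec r : batch) {
            // Keep the largest "offset + 1" seen so far for this partition.
            next.merge(r.partition, r.offset + 1, Long::max);
        }
        return next;
    }

    public static void main(String[] args) {
        List<Rec> batch = Arrays.asList(new Rec(0, 5), new Rec(0, 6), new Rec(1, 2));
        System.out.println(offsetsToCommit(batch));
    }
}
```

If the consumer restarts after such a commit, it resumes at those positions, so any records that were processed but not yet committed are delivered again.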

Manual commit (asynchronous):

    /**
     * Manual offset commit (asynchronous)
     */
    @Test
    public void manualReceiveCommitWithAsync() {
        Properties props = new Properties();
        // Kafka cluster
        props.put("bootstrap.servers", "192.168.242.101:9092");
        // Consumer group: consumers with the same group.id belong to the same group
        props.put("group.id", "test");
        // Disable automatic offset commit
        props.put("enable.auto.commit", "false");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
        consumer.subscribe(Arrays.asList(TOPIC)); // subscribe to the topic
        while (true) {
            ConsumerRecords<String, String> records = consumer.poll(100); // pull a batch of records
            records.forEach(record -> {
                System.out.printf("offset = %d, key = %s, value = %s%n",
                    record.offset(), record.key(), record.value());
            });

            // Asynchronous commit: returns immediately; the callback reports failures
            consumer.commitAsync((offsets, exception) -> {
                if (exception != null) {
                    System.err.println("Commit failed for " + offsets);
                }
            });
        }
    }