Official documentation: https://docs.python.org/3.5/library/http.html

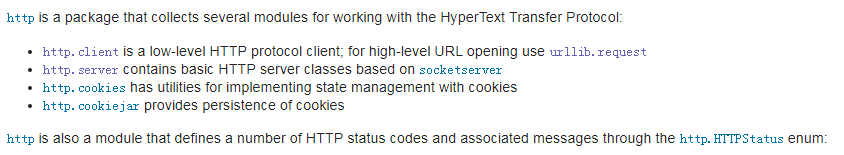

偷个懒,截图如下:

In short, HTTP client programming generally uses the urllib.request library, whose main job is "opening URLs in a complex world": authentication, redirections, cookies and more.

1. urllib.request: Extensible library for opening URLs

The HOWTO explains usage in detail, with code: HOWTO Fetch Internet Resources Using The urllib Package

Functions provided by this module:

urllib.request.urlopen(url, data=None, [timeout, ]*, cafile=None, capath=None, cadefault=False, context=None)

urllib.request.install_opener(opener)

urllib.request.build_opener([handler, ...])

urllib.request.pathname2url(path)

urllib.request.url2pathname(path)

urllib.request.getproxies()

Classes provided by this module:

class urllib.request.Request(url, data=None, headers={}, origin_req_host=None, unverifiable=False, method=None)

class urllib.request.OpenerDirector

class urllib.request.BaseHandler

class urllib.request.HTTPDefaultErrorHandler

class urllib.request.HTTPRedirectHandler

class urllib.request.HTTPCookieProcessor(cookiejar=None)

class urllib.request.ProxyHandler(proxies=None)

class urllib.request.HTTPPasswordMgr

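There are many more, which are not listed here.

1.2 Request Objects

The following methods are the public interface of Request, so all of them may be overridden in subclasses. Request also defines several public attributes that clients can use to inspect the parsed request.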

Attributes:

Request.full_url

Request.type

Request.host

Request.origin_req_host (does not include the port)

Request.selector

Request.data

Request.unverifiable

Request.method

Methods:

Request.get_method()

Request.add_header(key, val)

Request.add_unredirected_header(key, header)

Request.has_header(header)

Request.remove_header(header)

Request.get_full_url()

Request.set_proxy(host, type)

Request.get_header(header_name, default=None)

Request.header_items()
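To make these attributes concrete, here is a minimal sketch that builds a Request without sending it and inspects the parsed fields. The URL, port, and header value are made up for illustration.

```python
import urllib.request

# Hypothetical URL; constructing a Request sends nothing over the network.
req = urllib.request.Request('http://www.example.com:8080/path?q=1')

parsed = {
    'full_url': req.full_url,
    'type': req.type,                        # URL scheme
    'host': req.host,                        # includes the port
    'origin_req_host': req.origin_req_host,  # port stripped
    'selector': req.selector,                # path plus query string
    'method': req.get_method(),              # GET because data is None
}

# Headers can be added, queried, and removed before the request is opened.
req.add_header('Referer', 'http://www.python.org/')
has_referer = req.has_header('Referer')
referer = req.get_header('Referer')
req.remove_header('Referer')
still_has_referer = req.has_header('Referer')

for name, value in parsed.items():
    print(name, '=', value)
```

Note that host keeps the port while origin_req_host drops it, which matches the remark on origin_req_host above.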

1.3 OpenerDirector Objects

It has the following methods:

OpenerDirector.add_handler(handler)

OpenerDirector.open(url, data=None[, timeout])

OpenerDirector.error(proto, *args)
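As a rough sketch of how add_handler and open fit together: an OpenerDirector dispatches a request to a handler method named after the URL scheme, `<scheme>_open`. The `echo` scheme and the `EchoHandler` class below are hypothetical, invented only so the example runs without network access.

```python
import io
import urllib.request
import urllib.response
from email.message import Message


class EchoHandler(urllib.request.BaseHandler):
    """Hypothetical handler for a made-up 'echo' scheme."""

    def echo_open(self, req):
        # Return the request's selector (path) as the response body.
        body = req.selector.encode('utf-8')
        return urllib.response.addinfourl(
            io.BytesIO(body), Message(), req.full_url, 200)


opener = urllib.request.OpenerDirector()
opener.add_handler(EchoHandler())  # registered because it defines echo_open

with opener.open('echo://host/hello') as resp:
    result = resp.read()

print(result)
```

The built-in handlers follow the same naming pattern (for example http_open), which is why build_opener can combine them freely.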

1.4 BaseHandler Objects

1.5 HTTPRedirectHandler Objects

1.6 HTTPCookieProcessor Objects

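It has a single attribute, HTTPCookieProcessor.cookiejar, which is the http.cookiejar.CookieJar in which all cookies are stored.

1.x There are many more classes; consult the official documentation when you need them.

Examples

Open a URL and read data: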
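A minimal sketch of wiring a cookie jar into an opener, assuming you want to keep a reference to the jar so you can inspect it later. No request is made here, so the jar stays empty.

```python
import http.cookiejar
import urllib.request

jar = http.cookiejar.CookieJar()
processor = urllib.request.HTTPCookieProcessor(jar)
opener = urllib.request.build_opener(processor)

# Cookies set by servers during opener.open(...) calls would accumulate in
# `jar`; since nothing has been opened yet, this list is empty.
cookie_names = [cookie.name for cookie in jar]

print(processor.cookiejar is jar, cookie_names)
```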

>>> import urllib.request

>>> with urllib.request.urlopen('http://www.python.org/') as f:

...     print(f.read(300))

...

b'<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN"

"http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html

xmlns="http://www.w3.org/1999/xhtml" xml:lang="en" lang="en">

<head>

<meta http-equiv="content-type" content="text/html; charset=utf-8" />

<title>Python Programming '

Note: the data read from the object returned by urlopen is a bytes object, so decode it when you need text:

>>> with urllib.request.urlopen('http://www.python.org/') as f:

...     print(f.read(100).decode('utf-8'))

...

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN"

"http://www.w3.org/TR/xhtml1/DTD/xhtm

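The bytes-versus-text distinction can be tried offline with a data: URL, which urlopen has handled since Python 3.4. This is only a sketch: the payload is made up, and falling back to UTF-8 when no charset is declared is an assumption, not a rule.

```python
import urllib.request

# A data: URL keeps the sketch offline; the payload is hypothetical.
url = 'data:text/plain;charset=utf-8,Hello%20world'

with urllib.request.urlopen(url) as resp:
    # Use the charset declared in the Content-Type header when there is one.
    charset = resp.headers.get_content_charset() or 'utf-8'
    raw = resp.read()          # bytes

text = raw.decode(charset)     # str

print(type(raw).__name__, type(text).__name__, text)
```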
Send a data stream to the stdin of a CGI:

>>> import urllib.request

>>> req = urllib.request.Request(url='https://localhost/cgi-bin/test.cgi',

... data=b'This data is passed to stdin of the CGI')

>>> with urllib.request.urlopen(req) as f:

...     print(f.read().decode('utf-8'))

...

Got Data: "This data is passed to stdin of the CGI"

The CGI on the other end receives the data via stdin:

#!/usr/bin/env python

import sys

data = sys.stdin.read()

print('Content-type: text/plain\n\nGot Data: "%s"' % data)

Use of Basic HTTP Authentication:

import urllib.request

# Create an OpenerDirector with support for Basic HTTP Authentication...

auth_handler = urllib.request.HTTPBasicAuthHandler()

auth_handler.add_password(realm='PDQ Application',

uri='https://mahler:8092/site-updates.py',

user='klem',

passwd='kadidd!ehopper')

opener = urllib.request.build_opener(auth_handler)

# ...and install it globally so it can be used with urlopen.

urllib.request.install_opener(opener)

urllib.request.urlopen('http://www.example.com/login.html')
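Credentials registered with add_password are only offered for URLs under the registered URI. The sketch below checks that lookup offline, using HTTPPasswordMgrWithDefaultRealm so that no realm has to be known in advance. The site, user name, and password are hypothetical.

```python
import urllib.request

# Hypothetical site and credentials, for illustration only.
password_mgr = urllib.request.HTTPPasswordMgrWithDefaultRealm()
password_mgr.add_password(None, 'https://www.example.com/private/',
                          'klem', 'secret')

auth_handler = urllib.request.HTTPBasicAuthHandler(password_mgr)
opener = urllib.request.build_opener(auth_handler)

# A URL below the registered URI finds the credentials...
match = password_mgr.find_user_password(
    'Any Realm', 'https://www.example.com/private/page.html')
# ...while a URL outside it does not.
no_match = password_mgr.find_user_password(
    'Any Realm', 'https://www.example.com/public/')

print(match, no_match)
```

Calling opener.open(...) directly, instead of install_opener, keeps the credentials scoped to this opener rather than to every urlopen call in the process.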

Adding HTTP headers:

import urllib.request

req = urllib.request.Request('http://www.example.com/')

req.add_header('Referer', 'http://www.python.org/')

# Customize the default User-Agent header value:

req.add_header('User-Agent', 'urllib-example/0.1 (Contact: . . .)')

r = urllib.request.urlopen(req)

OpenerDirector automatically adds a User-Agent header to every Request. To change this:

import urllib.request

opener = urllib.request.build_opener()

opener.addheaders = [('User-agent', 'Mozilla/5.0')]

opener.open('http://www.example.com/')

Also, remember that a few standard headers (Content-Length, Content-Type and Host) are added when the Request is passed to urlopen() (or OpenerDirector.open()).
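The default User-Agent and the way header names are stored can be inspected without opening anything. This sketch relies on CPython's behaviour of normalising header names with str.capitalize(); the agent string is made up.

```python
import urllib.request

opener = urllib.request.build_opener()
default_headers = list(opener.addheaders)   # one ('User-agent', ...) pair

# Replace the default User-Agent for everything this opener sends.
opener.addheaders = [('User-agent', 'urllib-example/0.1')]

# Per-request headers: the name is stored capitalised, so later lookups
# must use 'User-agent', not 'User-Agent'.
req = urllib.request.Request('http://www.example.com/')
req.add_header('User-Agent', 'urllib-example/0.1')
stored_names = [name for name, _ in req.header_items()]

print(default_headers, stored_names)
```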

GET:

>>> import urllib.request

>>> import urllib.parse

>>> params = urllib.parse.urlencode({'spam': 1, 'eggs': 2, 'bacon': 0})

>>> url = "http://www.musi-cal.com/cgi-bin/query?%s" % params

>>> with urllib.request.urlopen(url) as f:

...     print(f.read().decode('utf-8'))

POST:

>>> import urllib.request

>>> import urllib.parse

>>> data = urllib.parse.urlencode({'spam': 1, 'eggs': 2, 'bacon': 0})

>>> data = data.encode('ascii')

>>> with urllib.request.urlopen("http://requestb.in/xrbl82xr", data) as f:

...     print(f.read().decode('utf-8'))
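The only difference between the GET and POST sessions above is whether the encoded parameters go into the URL or into the data argument. A small offline sketch, assuming a placeholder endpoint on www.example.com:

```python
import urllib.parse
import urllib.request

# A list of pairs keeps the parameter order fixed.
params = urllib.parse.urlencode([('spam', 1), ('eggs', 2), ('bacon', 0)])

# GET: parameters are appended to the URL; data stays None.
get_req = urllib.request.Request('http://www.example.com/query?' + params)

# POST: parameters are passed as bytes through the data argument.
post_req = urllib.request.Request('http://www.example.com/query',
                                  data=params.encode('ascii'))

print(params)
print(get_req.get_method(), get_req.full_url)
print(post_req.get_method(), post_req.data)
```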

The following example uses an explicitly specified HTTP proxy, overriding environment settings:

>>> import urllib.request

>>> proxies = {'http': 'http://proxy.example.com:8080/'}

>>> opener = urllib.request.FancyURLopener(proxies)

>>> with opener.open("http://www.python.org") as f:

...     f.read().decode('utf-8')

The following example uses no proxies at all, overriding environment settings:

>>> import urllib.request

>>> opener = urllib.request.FancyURLopener({})

>>> with opener.open("http://www.python.org/") as f:

...     f.read().decode('utf-8')
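FancyURLopener has been deprecated since Python 3.3, so the same two setups can also be sketched with ProxyHandler and build_opener, both listed earlier in these notes. The proxy address is the placeholder from the example above, and the `proxy_handlers` helper is hypothetical, added only to inspect the result.

```python
import urllib.request

# Explicit HTTP proxy, overriding environment settings.
proxy_handler = urllib.request.ProxyHandler(
    {'http': 'http://proxy.example.com:8080/'})
proxy_opener = urllib.request.build_opener(proxy_handler)

# An empty mapping means "use no proxies at all".
direct_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def proxy_handlers(opener):
    """Return the ProxyHandler instances registered on an opener."""
    return [h for h in opener.handlers
            if isinstance(h, urllib.request.ProxyHandler)]


print(proxy_handlers(proxy_opener), proxy_handlers(direct_opener))
```

Either opener is then used as before, for example `proxy_opener.open("http://www.python.org/")`.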