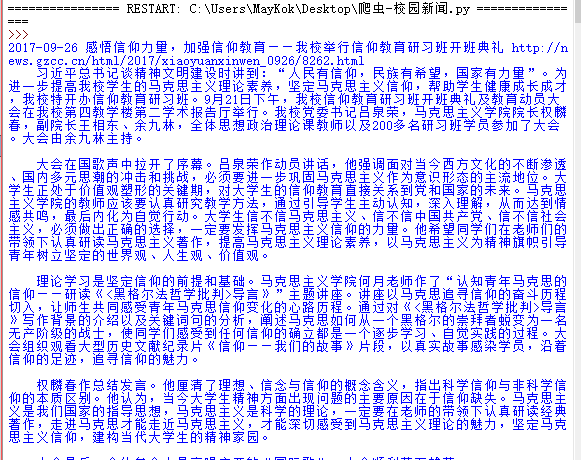

1. Use the requests and BeautifulSoup4 libraries to scrape the time, title, link, and source of each item in the campus news list.

```python
import requests                    # HTTP library for sending requests
from bs4 import BeautifulSoup      # BeautifulSoup4: extracts data from HTML or XML
from urllib.parse import urljoin   # turns relative links into absolute URLs

base_url = "http://news.gzcc.cn/html/xiaoyuanxinwen/"
resp = requests.get(base_url)
resp.encoding = "utf-8"
soup = BeautifulSoup(resp.text, 'html.parser')
# print(soup.head.title.text)

# Select elements with CSS selectors: prefix '#' for an id, '.' for a class,
# plain tag names otherwise, e.g. soup.select('p')
for news in soup.select('li'):
    if len(news.select('.news-list-title')) > 0:
        # news.select('.news-list-title')         -> a list of all matching elements
        # news.select('.news-list-title')[0]      -> the first element of that list
        # news.select('.news-list-title')[0].text -> its text (.text is an attribute in bs4)

        pub_time = news.select('.news-list-info')[0].contents[0].text  # time
        title = news.select('.news-list-title')[0].text               # title
        href = urljoin(base_url, news.select('a')[0]['href'])         # link

        detail = requests.get(href)                                   # article page
        detail.encoding = "utf-8"
        detail_soup = BeautifulSoup(detail.text, 'html.parser')
        body = detail_soup.select('.show-content')[0].text           # article body
        print(pub_time, title, href, body)
```
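To see what each selector step returns without hitting the live site, here is a minimal offline sketch. Task 1 also asks for the source, which the scraper above does not print, so the sketch collects it too. The HTML snippet is hypothetical and only modeled on the class names used above (`news-list-title`, `news-list-info`); in particular, the assumption that the source sits in a second `<span>` of `.news-list-info` is for illustration and has not been checked against news.gzcc.cn. `parse_news_list` is a hypothetical helper name.

```python
from bs4 import BeautifulSoup

# Hypothetical markup modeled on the class names used in the scraper above;
# the real page structure may differ (the second <span> as "source" is assumed).
SAMPLE_HTML = """
<ul>
  <li>
    <a href="https://example.com/news/1.html">
      <div class="news-list-title">Sample headline</div>
      <div class="news-list-info"><span>2018-01-01</span><span>Sample office</span></div>
    </a>
  </li>
  <li>Not a news item</li>
</ul>
"""


def parse_news_list(html):
    """Return one dict per <li> that contains a .news-list-title element."""
    soup = BeautifulSoup(html, "html.parser")
    items = []
    for li in soup.select("li"):
        title_tag = li.select_one(".news-list-title")
        if title_tag is None:  # skip <li> elements that are not news items
            continue
        spans = li.select(".news-list-info span")  # assumed order: [time, source]
        link_tag = li.select_one("a")
        items.append({
            "time": spans[0].get_text(strip=True) if spans else "",
            "title": title_tag.get_text(strip=True),
            "link": link_tag["href"] if link_tag else "",
            "source": spans[1].get_text(strip=True) if len(spans) > 1 else "",
        })
    return items


for item in parse_news_list(SAMPLE_HTML):
    print(item)
```

Using `select_one` and checking for `None` avoids the `IndexError` that `select(...)[0]` raises when an `<li>` lacks the expected element.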

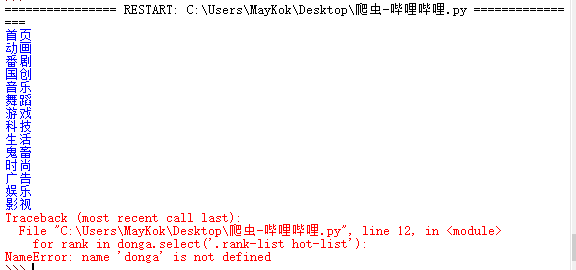

2. Pick a topic that interests you and do something similar, as preparation for "scraping web data and performing text analysis."

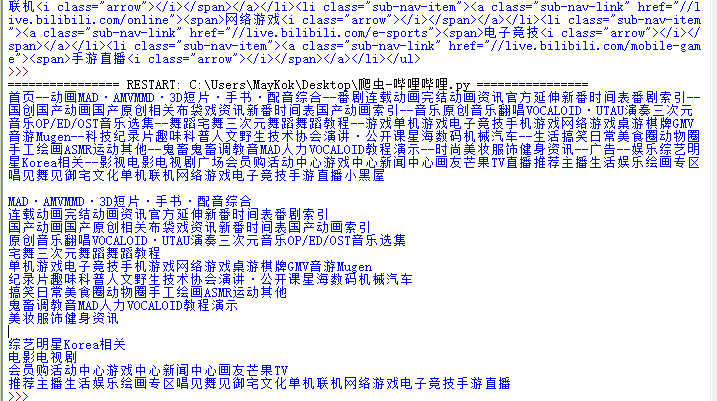

```python
import requests
from bs4 import BeautifulSoup

resp = requests.get('https://www.bilibili.com')
resp.encoding = "utf-8"
soup = BeautifulSoup(resp.text, 'html.parser')  # 'html.parser' is Python's built-in HTML parser
# print(soup)

# Print the name of each navigation category
for nav in soup.select('.nav-name'):
    print(nav.text)

# The "Animation" (douga) section; select_one returns None if the id is absent
douga = soup.select_one('#bili_douga')
if douga is None:
    print('#bili_douga not found in the downloaded HTML')
else:
    # An element with several classes is matched by chaining them without spaces
    for rank_list in douga.select('.rank-list.hot-list'):
        for item in rank_list.select('.rank-item.highlight'):
            print(item.text)
```
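In CSS selector syntax a space means "descendant", so `.rank-list hot-list` looks for a `<hot-list>` tag inside `.rank-list`, which matches nothing. An element carrying both classes is matched by chaining them: `.rank-list.hot-list`. The sketch below checks this on a small snippet; the markup is invented for illustration, not copied from bilibili.com.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: a list with two classes, items with one or two classes
html = """
<ul class="rank-list hot-list">
  <li class="rank-item highlight">First</li>
  <li class="rank-item">Second</li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

descendant = soup.select(".rank-list hot-list")    # space: a <hot-list> tag inside .rank-list
compound = soup.select(".rank-list.hot-list")      # no space: one element with both classes
highlighted = soup.select(".rank-item.highlight")  # only items that have both classes

print(len(descendant), len(compound), [li.text for li in highlighted])
# prints: 0 1 ['First']
```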

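However, this did not manage to scrape the URLs in the "Ranking" list of the "Animation" section. One possible reason is that this part of the page is filled in by JavaScript after loading, while `requests` only downloads the initial HTML.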
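One way to tell whether an element is missing because of a wrong selector or because it is rendered later by scripts is to count matches in the raw HTML string that `requests.get` returned. The helper below is a hypothetical sketch (`diagnose` is not from the original, and the sample HTML is invented); it works on a plain string, so it can be tried offline. If a selector that matches in the browser's developer tools finds nothing in the downloaded HTML, that content was probably added after page load, and requests alone will not see it.

```python
from bs4 import BeautifulSoup


def diagnose(html, selectors):
    """Map each CSS selector to how many elements it matches in the static HTML."""
    soup = BeautifulSoup(html, "html.parser")
    return {sel: len(soup.select(sel)) for sel in selectors}


# Hypothetical static page: the container exists but its list is empty,
# as would happen if a script fills it in after the page loads.
static_html = '<div id="bili_douga"><div class="rank-list hot-list"></div></div>'

print(diagnose(static_html, ["#bili_douga", ".rank-list.hot-list", ".rank-item"]))
# prints: {'#bili_douga': 1, '.rank-list.hot-list': 1, '.rank-item': 0}
```

With the live site, pass the `.text` of the response from `requests.get('https://www.bilibili.com')` instead of `static_html`.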