Reposted from: https://www.cnblogs.com/jsonhc/p/7862518.html

Load balancing of services on a swarm installed with docker-machine kept failing on CentOS 7, so I moved the environment to Ubuntu 16.04.2 LTS.

There are two nodes: manager1 at 192.168.101.17 (docker and docker-machine installed) and work1 at 192.168.101.18 (docker only).

1. Install docker on Ubuntu, here by installing the deb package with dpkg

Download the deb for your system architecture: https://download.docker.com/linux/ubuntu/dists/xenial/pool/stable/amd64/docker-ce_17.09.0~ce-0~ubuntu_amd64.deb

$ sudo dpkg -i docker-ce_17.09.0_ce-0_ubuntu_amd64.deb
$ sudo systemctl start docker
$ sudo systemctl status docker

Configure a registry mirror on both nodes:

root@manager1:~# cat /etc/docker/daemon.json
{
    "dns": ["192.168.101.2","8.8.8.8"],
    "registry-mirrors": ["https://cbd49ltj.mirror.aliyuncs.com"]
}

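To run docker as a regular user, add that user to the docker group:

wadeson@wadeson:~$ groupadd docker
groupadd: group 'docker' already exists
wadeson@wadeson:~$ sudo usermod -aG docker wadeson

After this, exit the terminal session and open a new one for the group change to take effect.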

2. Install docker-machine on the manager1 node only. All of the following operations are run as root.

# cp docker-machine /usr/local/bin/
# cd /usr/local/bin/
# chmod +x docker-machine
# docker-machine version
docker-machine version 0.13.0, build 9ba6da9

With the base environment ready on both nodes, start creating the machines:

root@wadeson:~# ssh-keygen
root@wadeson:~# ssh-copy-id root@192.168.101.17
root@wadeson:~# docker-machine create -d generic --generic-ip-address=192.168.101.17 --generic-ssh-key ~/.ssh/id_rsa --generic-ssh-user=root manager1

The above creates a machine for the manager node itself. Next, create a machine for the remote host work1:

root@wadeson:~# ssh-copy-id root@192.168.101.18
root@wadeson:~# docker-machine create -d generic --generic-ip-address=192.168.101.18 --generic-ssh-key ~/.ssh/id_rsa --generic-ssh-user=root work1

root@manager1:~# docker-machine ls
NAME       ACTIVE   DRIVER    STATE     URL                         SWARM   DOCKER        ERRORS
manager1   -        generic   Running   tcp://192.168.101.17:2376           v17.09.0-ce
work1      -        generic   Running   tcp://192.168.101.18:2376           v17.09.0-ce
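The two docker-machine create calls differ only in the IP address and the machine name, so they can be folded into a loop. The following is a minimal sketch rather than the author's own script: create_cmd and DRY_RUN are hypothetical names introduced here, and with DRY_RUN left at its default of 1 the commands are only printed, not executed.

```shell
#!/bin/sh
# Sketch: create one generic-driver machine per node.
# create_cmd and DRY_RUN are illustrative names, not part of docker-machine.
DRY_RUN="${DRY_RUN:-1}"

# Print the docker-machine command for a given IP ($1) and machine name ($2).
create_cmd() {
    printf 'docker-machine create -d generic --generic-ip-address=%s --generic-ssh-key ~/.ssh/id_rsa --generic-ssh-user=root %s\n' "$1" "$2"
}

# name=ip pairs taken from the two nodes used in this article
for pair in manager1=192.168.101.17 work1=192.168.101.18; do
    name="${pair%%=*}"
    ip="${pair#*=}"
    cmd="$(create_cmd "$ip" "$name")"
    if [ "$DRY_RUN" = "1" ]; then
        echo "$cmd"
    else
        # Copy the SSH key first, then let docker-machine provision the host.
        ssh-copy-id "root@$ip" && sh -c "$cmd"
    fi
done
```

Running it with DRY_RUN=0 on the host that holds the SSH key would perform the same steps as the manual session.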

With the machines created, build the swarm cluster, starting on the manager node manager1:

root@wadeson:~# docker-machine ssh manager1 "docker swarm init --advertise-addr 192.168.101.17"

Then join the work1 node to the swarm cluster:

root@wadeson:~# docker-machine ssh work1 "docker swarm join --token SWMTKN-1-3pb7f9xynb3m8kiejf63w6klcwcbfg0r4dcalnxzeuennfwccc-7ubvqutczmn1ag2qpev2u31wd 192.168.101.17:2377"
This node joined a swarm as a worker.
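The join token does not have to be copied by hand from the output of docker swarm init; it can be read back from the manager with docker swarm join-token -q worker. A minimal sketch, assuming the machine names manager1 and work1 from this article. join_cmd and DRY_RUN are hypothetical names, and the default DRY_RUN=1 only prints the command with a placeholder token.

```shell
#!/bin/sh
# Sketch: fetch the worker token from the manager and join work1.
# join_cmd and DRY_RUN are illustrative names, not docker commands.
DRY_RUN="${DRY_RUN:-1}"
MANAGER_IP="192.168.101.17"

# Build the join command from a token ($1) and the manager address ($2).
join_cmd() {
    printf 'docker swarm join --token %s %s:2377\n' "$1" "$2"
}

if [ "$DRY_RUN" = "1" ]; then
    # Placeholder token so the sketch prints something without a cluster.
    join_cmd '<worker-token>' "$MANAGER_IP"
else
    # -q makes join-token print only the token itself.
    token="$(docker-machine ssh manager1 "docker swarm join-token -q worker")"
    docker-machine ssh work1 "$(join_cmd "$token" "$MANAGER_IP")"
fi
```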

Once that completes, the swarm cluster is set up. Check the node information:

root@wadeson:~# docker-machine ssh manager1 "docker node ls"
ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS
izidcyk70x8jbhm20emeblymz *   manager1   Ready    Active         Leader
gt0onqyz71f2fnzmwqqgtv83z     work1      Ready    Active

3. Create a service

Create a web service whose port is published on the host:

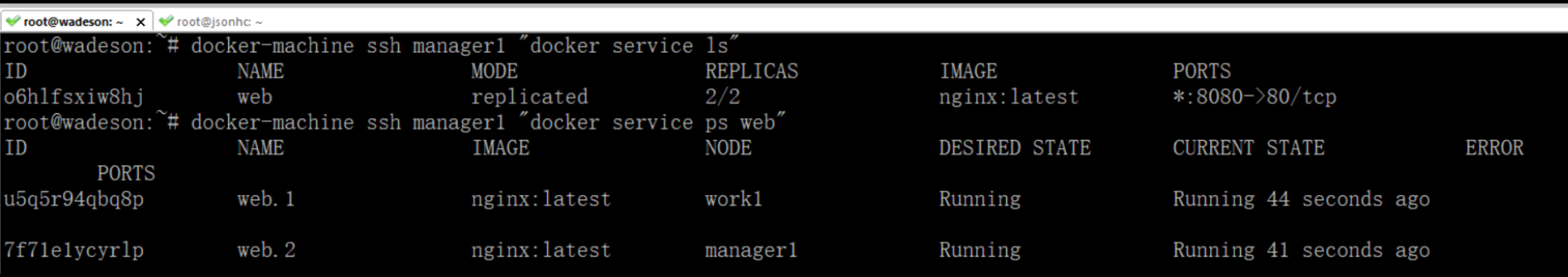

root@wadeson:~# docker-machine ssh manager1 "docker service create --name web --replicas 2 --publish 8080:80 nginx"
o6hlfsxiw8hjrh0uigmsg46cm

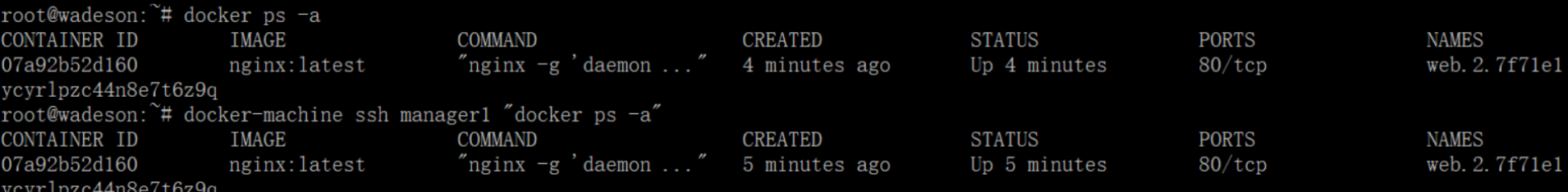

root@wadeson:~# docker-machine ssh manager1 "docker service ls"
ID             NAME   MODE         REPLICAS   IMAGE          PORTS
o6hlfsxiw8hj   web    replicated   0/2        nginx:latest   *:8080->80/tcp
root@wadeson:~# docker-machine ssh manager1 "docker service ps web"
ID             NAME    IMAGE          NODE       DESIRED STATE   CURRENT STATE              ERROR   PORTS
u5q5r94qbq8p   web.1   nginx:latest   work1      Running         Preparing 13 seconds ago
7f71e1ycyrlp   web.2   nginx:latest   manager1   Running         Preparing 13 seconds ago

The image is still being downloaded, so the tasks do not reach running right away.
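Because the REPLICAS column starts at 0/2 while the image is pulled, a script that depends on the service may want to wait until the count is complete. A minimal sketch, assuming it runs on a manager node: replicas_ready and wait_for_service are hypothetical helpers, while docker service ls with --filter and --format '{{.Replicas}}' is the real CLI call they rely on.

```shell
#!/bin/sh
# replicas_ready "current/desired": succeeds only when both numbers are
# present, equal and non-zero. Illustrative helper, not a docker command.
replicas_ready() {
    current="${1%%/*}"
    desired="${1##*/}"
    [ -n "$current" ] && [ "$current" = "$desired" ] && [ "$desired" != "0" ]
}

# Poll a service ($1) until all replicas are up. Needs docker on a manager.
# Note: the name filter matches by prefix, so use a unique service name.
wait_for_service() {
    while :; do
        state="$(docker service ls --filter "name=$1" --format '{{.Replicas}}')"
        replicas_ready "$state" && break
        echo "waiting: $state"
        sleep 2
    done
    echo "$1 is ready: $state"
}

# The parsing can be tried without a cluster:
replicas_ready "0/2" || echo "0/2 -> not ready"
replicas_ready "2/2" && echo "2/2 -> ready"
```

On manager1, wait_for_service web would block until both nginx tasks are running.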

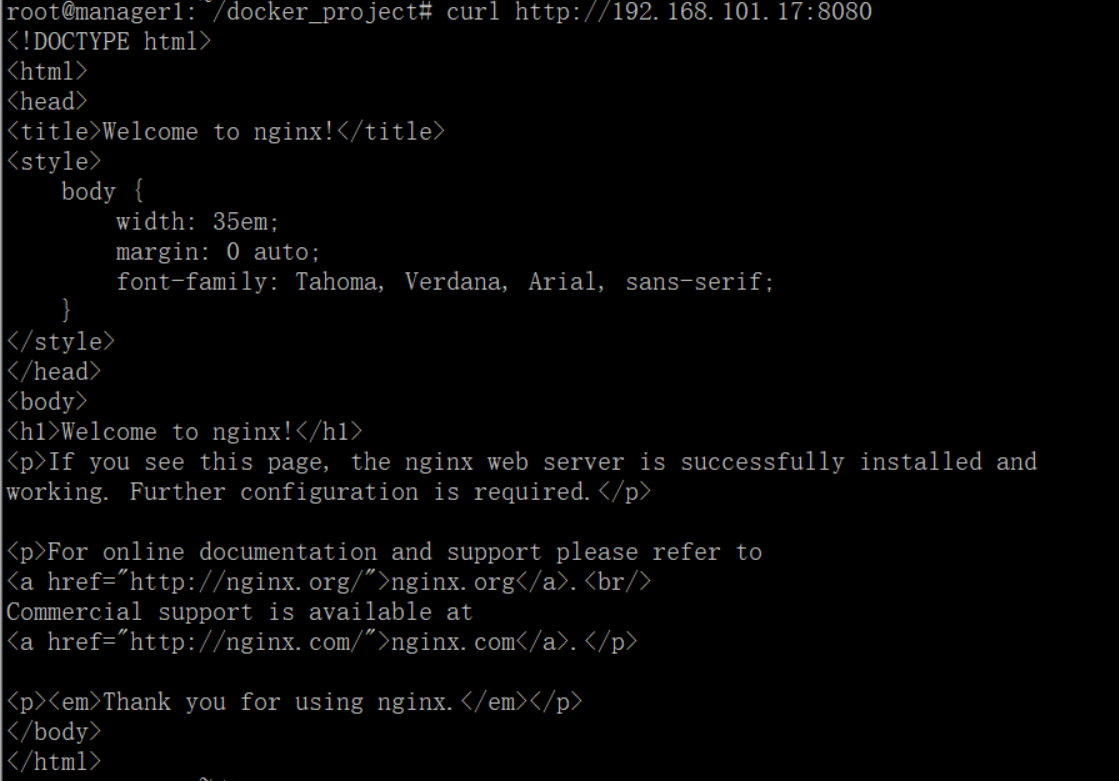

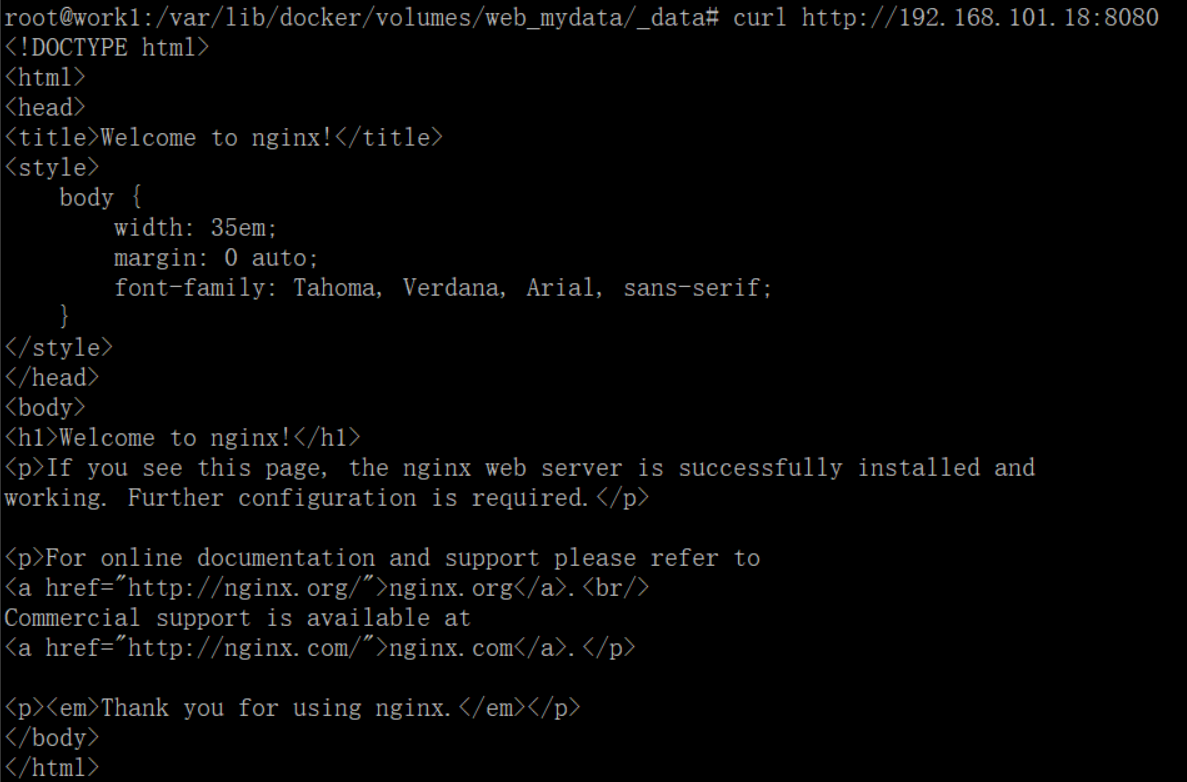

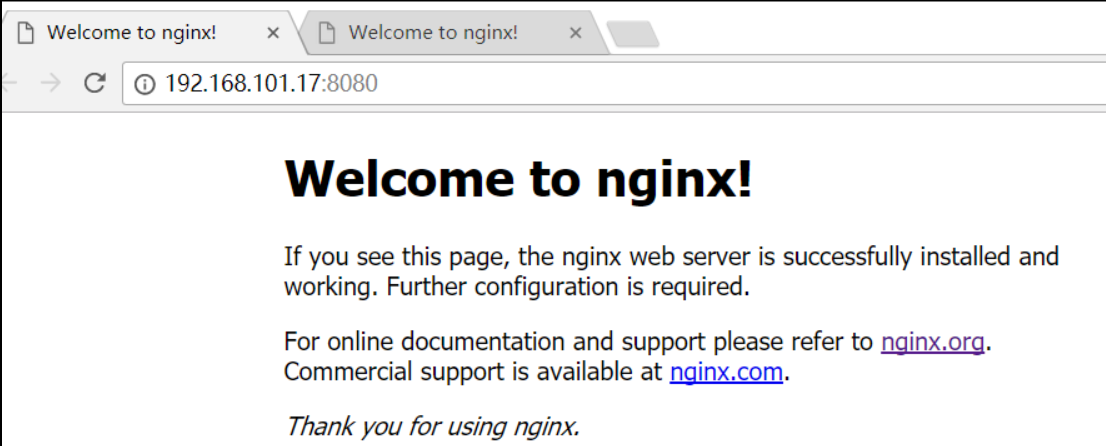

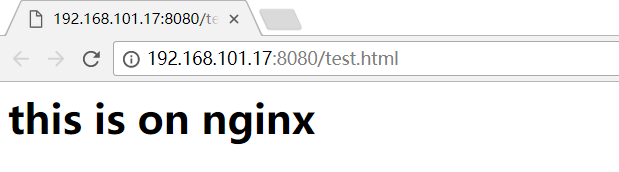

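Once the tasks on every node are in the running state, the service has been created successfully. When I created this kind of service on CentOS, only the manager node could be accessed. Now with the service created on Ubuntu:

The same service created on Ubuntu does achieve load balancing: the tasks assigned to both nodes can be accessed successfully.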
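The check that both nodes answer on the published port can be scripted with curl instead of a browser. This is a rough sketch under the assumption that curl is installed and the two node IPs from this article are reachable; node_url and probe_nodes are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: request the published port on every node and print the HTTP status.
NODES="192.168.101.17 192.168.101.18"
PORT=8080

# Build the URL for a node IP ($1) and port ($2).
node_url() {
    printf 'http://%s:%s/\n' "$1" "$2"
}

probe_nodes() {
    for ip in $NODES; do
        # -s silent, -o discard the body, -w print only the status code,
        # --max-time bounds the wait for an unreachable node.
        code="$(curl -s -o /dev/null --max-time 3 -w '%{http_code}' "$(node_url "$ip" "$PORT")")" \
            || code="unreachable"
        echo "$ip -> $code"
    done
}

# Call probe_nodes from a host that can reach both nodes.
```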

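When the service is a website, its data directory needs to be mounted out of the container. This can also be specified when creating a service, but the parameter becomes --mount.

root@wadeson:~/docker_project# docker-machine ssh manager1 "docker service create --name web --replicas 2 --publish 8080:80 --mount source=,destination=/usr/share/nginx/html nginx"
0kuxwkaoophassmzhzt7yuk6p

The command above leaves the source empty, so a default volume is used and mounted at the nginx document root. Check the exact path it maps to on the host: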

root@manager1:/www# docker inspect web.2.rpva7n1100w8tbh55lfi8l9w2

(inspected by container name)

"Mounts": [
    {
        "Type": "volume",
        "Name": "1e2bcfdf684f1e8ee6bc9f110cbf455b2efe4d4551b940a80991948cbeaca589",
        "Source": "/var/lib/docker/volumes/1e2bcfdf684f1e8ee6bc9f110cbf455b2efe4d4551b940a80991948cbeaca589/_data",
        "Destination": "/usr/share/nginx/html",
        "Driver": "local",
        "Mode": "z",
        "RW": true,
        "Propagation": ""
    }

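This shows exactly which directory on the host corresponds to the nginx document root.

Check the volume information:

root@manager1:/www# docker volume ls
DRIVER    VOLUME NAME
local     1e2bcfdf684f1e8ee6bc9f110cbf455b2efe4d4551b940a80991948cbeaca589

As you can see, creating the service web also created a randomly named volume (using the local driver by default).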

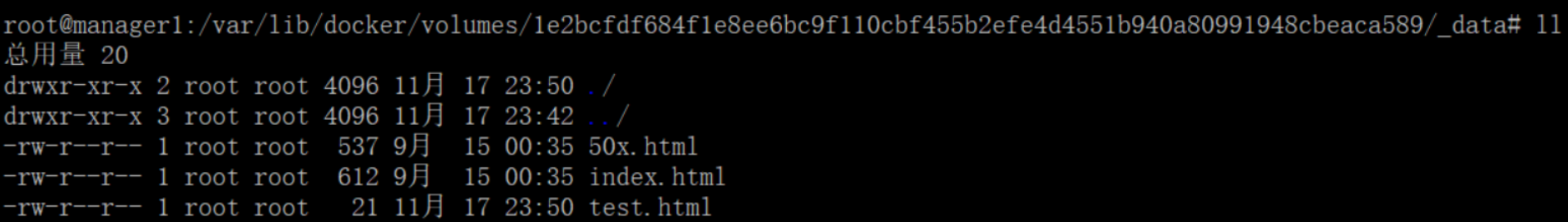

现在在管理节点manager1上的挂载目录创建一个html:

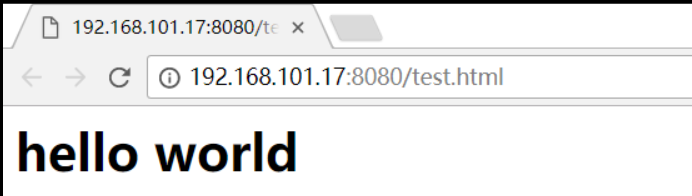

然后进行访问:

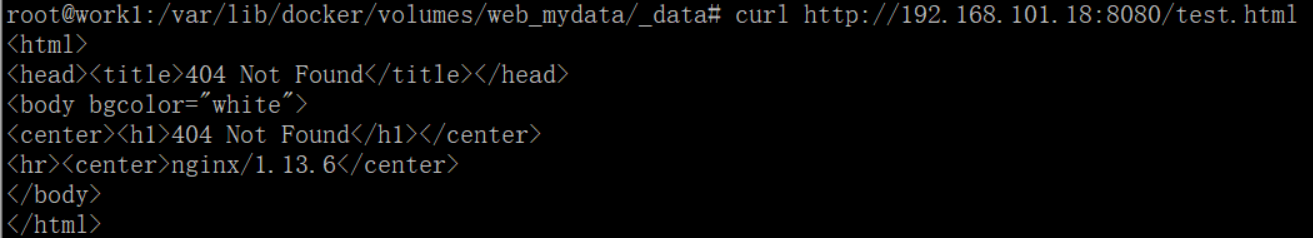

但是查看并访问work1节点时:

在work1节点上创建的随机名是和manager1节点不一样的,所以相应的在manager1节点上增加一个html,而在work1节点上并没有该html的创建

Now create a local volume on the manager1 node:

root@wadeson:~/docker_project# docker volume create test
test
root@wadeson:~/docker_project# docker volume ls
DRIVER    VOLUME NAME
local     test

Then create a service that uses this test volume:

root@wadeson:~/docker_project# docker-machine ssh manager1 "docker service create --name web --replicas 2 --publish 8080:80 --mount source=test,destination=/usr/share/nginx/html nginx"
39bphuu4248u8cvwv57q5880s

(the test volume is mounted as the nginx site directory)

With the service created, check which absolute path the volume was given on manager1:

root@manager1:/www# docker inspect web.1.znwxgy5k5133xbt4gmys5iho4

"Mounts": [
    {
        "Type": "volume",
        "Name": "test",
        "Source": "/var/lib/docker/volumes/test/_data",
        "Destination": "/usr/share/nginx/html",
        "Driver": "local",
        "Mode": "z",
        "RW": true,
        "Propagation": ""
    }

root@manager1:~# docker volume inspect test
[
    {
        "CreatedAt": "2017-11-18T00:01:43+08:00",
        "Driver": "local",
        "Labels": {},
        "Mountpoint": "/var/lib/docker/volumes/test/_data",
        "Name": "test",
        "Options": {},
        "Scope": "local"
    }
]

现在在manager1节点的挂载点上创建一个新的html

root@manager1:/var/lib/docker/volumes/test/_data# ll 总用量 20 drwxr-xr-x 2 root root 4096 11月 18 00:01 ./ drwxr-xr-x 3 root root 4096 11月 17 23:57 ../ -rw-r--r-- 1 root root 537 9月 15 00:35 50x.html -rw-r--r-- 1 root root 612 9月 15 00:35 index.html -rw-r--r-- 1 root root 26 11月 18 00:01 test.html

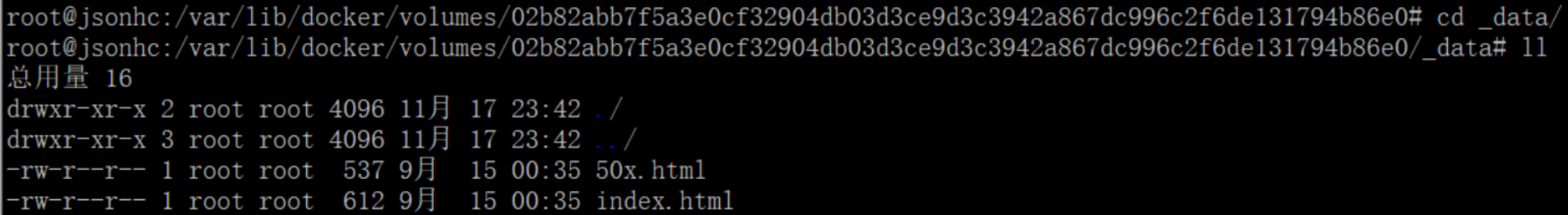

然后在节点work1上查看挂载的点目录:

root@work1:/var/lib/docker/volumes/test/_data# ll 总用量 16 drwxr-xr-x 2 root root 4096 11月 17 23:58 ./ drwxr-xr-x 3 root root 4096 11月 17 23:58 ../ -rw-r--r-- 1 root root 537 9月 15 00:35 50x.html -rw-r--r-- 1 root root 612 9月 15 00:35 index.html

可以看见work1和manager1上并不一致,然后我们访问两个ip:

可以发现即使使用相同的挂载点,如果仅仅在其中一个节点上创建了html,另一个节点依然不能够进行访问到,这种模式就需要进行共享存储或者分布式存储解决了
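One common way to get shared storage is to back the volume with NFS through the local driver's volume-opt settings on --mount. The article itself does not set this up, so everything specific below is an assumption: the NFS server address, the export path /srv/www, the volume name webdata and the helper name nfs_mount_spec are all hypothetical. The sketch only prints the resulting command.

```shell
#!/bin/sh
# Sketch: build a --mount spec for an NFS-backed volume so that every node
# mounts the same export. The server, export path and volume name used at
# the bottom are assumptions for illustration; no NFS server is described
# in this article.

# $1 volume name, $2 NFS server address, $3 exported path
nfs_mount_spec() {
    # The o= value contains a comma, so that one field is wrapped in
    # double quotes (the --mount value is parsed as CSV).
    printf 'type=volume,source=%s,destination=/usr/share/nginx/html,volume-driver=local,volume-opt=type=nfs,volume-opt=device=:%s,"volume-opt=o=addr=%s,rw"\n' "$1" "$3" "$2"
}

# Print the full service command instead of running it.
echo "docker service create --name web --replicas 2 --publish 8080:80 --mount '$(nfs_mount_spec webdata 192.168.101.17 /srv/www)' nginx"
```

Each node would then create its own volume object named webdata, but all of them would point at the same NFS export, so a file written once is visible to every task.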

docker supports many kinds of volumes.

Create a tmpfs volume:

root@wadeson:~/docker_project# docker volume create -o type=tmpfs -o device=tmpfs -o o=size=100m,uid=1000 html
html

Then check the volume information:

root@manager1:~# docker volume inspect html
[
    {
        "CreatedAt": "2017-11-18T00:33:18+08:00",
        "Driver": "local",
        "Labels": {},
        "Mountpoint": "/var/lib/docker/volumes/html/_data",
        "Name": "html",
        "Options": {
            "device": "tmpfs",
            "o": "size=100m,uid=1000",
            "type": "tmpfs"
        },
        "Scope": "local"
    }
]

root@manager1:~# docker volume ls
DRIVER    VOLUME NAME
local     html
local     test

The volume was created only on manager1; work1 does not have it:

root@work1:~# docker volume ls
DRIVER    VOLUME NAME
local     test

Now mount this html volume in a service. work1 will create its own volume named html as the mount point, but its options are completely different from those on manager1.

root@manager1:~# docker service create --name web --replicas 2 --publish 8080:80 --mount source=html,destination=/usr/share/nginx/html nginx
a410dj6ob3v7dc7dge2os3h28

root@work1:~# docker volume inspect html
[
    {
        "CreatedAt": "2017-11-19T21:08:10+08:00",
        "Driver": "local",
        "Labels": null,
        "Mountpoint": "/var/lib/docker/volumes/html/_data",
        "Name": "html",
        "Options": {},
        "Scope": "local"
    }
]

On the manager1 node, the html volume looks like this:

root@manager1:~# docker volume inspect html
[
    {
        "CreatedAt": "2017-11-19T21:08:10+08:00",
        "Driver": "local",
        "Labels": {},
        "Mountpoint": "/var/lib/docker/volumes/html/_data",
        "Name": "html",
        "Options": {
            "device": "tmpfs",
            "o": "size=100m,uid=1000",
            "type": "tmpfs"
        },
        "Scope": "local"
    }
]

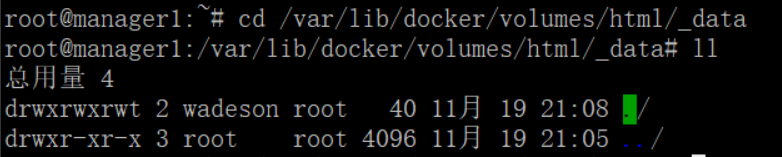

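In the mount directory on manager1, nginx's original html files are not visible:

For more on using volumes, see the official documentation.

4. The services above were created with docker service. Here the stack command is used with a compose file to create a stack that manages the services: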

root@manager1:~/docker_project# cat docker-compose.yml
version: "3"
services:
  web:
    image: nginx
    deploy:
      replicas: 2
      restart_policy:
        condition: on-failure
    ports:
      - "8080:80"
    networks:
      - "net"
networks:
  net:

After writing the compose file, create the stack from it:

root@manager1:~/docker_project# docker stack deploy -c docker-compose.yml web
Creating network web_net
Creating service web_web

This created the service web_web and the network web_net. The driver of this network differs from that of the networks created for services when compose is used on its own:

root@manager1:~/docker_project# docker network ls
NETWORK ID     NAME              DRIVER    SCOPE
535f88afa37e   bridge            bridge    local
de07db93a69a   docker_gwbridge   bridge    local
c496484033f7   host              host      local
q8u4pz1f00sq   ingress           overlay   swarm
4e36c8cfed74   none              null      local
rny61337fv5k   web_net           overlay   swarm

Networks created by a stack in a swarm cluster use the overlay driver by default, not bridge.

View the created stack:

root@manager1:~/docker_project# docker stack ls
NAME   SERVICES
web    1
root@manager1:~/docker_project# docker stack ps web
ID             NAME        IMAGE          NODE       DESIRED STATE   CURRENT STATE                ERROR   PORTS
j8eljggx5u0g   web_web.1   nginx:latest   work1      Running         Running about a minute ago
1ogxqm87cmsu   web_web.2   nginx:latest   manager1   Running         Running about a minute ago

Since a stack manages services, the service command can also be used to view them:

root@manager1:~/docker_project# docker service ls
ID             NAME      MODE         REPLICAS   IMAGE          PORTS
ds0z266ttqrf   web_web   replicated   2/2        nginx:latest   *:8080->80/tcp
root@manager1:~/docker_project# docker service ps web_web
ID             NAME        IMAGE          NODE       DESIRED STATE   CURRENT STATE           ERROR   PORTS
j8eljggx5u0g   web_web.1   nginx:latest   work1      Running         Running 2 minutes ago
1ogxqm87cmsu   web_web.2   nginx:latest   manager1   Running         Running 2 minutes ago

Note that the service name differs from the stack name: the stack is web, while its service is web_web.
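The naming seen in the output follows one pattern: the stack name, an underscore, then the name from the compose file (web_web for the service, web_net for the network). A tiny sketch of that rule, where stack_object_name is a hypothetical helper used only to illustrate it:

```shell
#!/bin/sh
# stack_object_name STACK NAME: how objects created by "docker stack deploy"
# are named in the output above. Illustrative helper, not a docker command.
stack_object_name() {
    printf '%s_%s\n' "$1" "$2"
}

stack_object_name web web   # service "web" in stack "web" -> web_web
stack_object_name web net   # network "net" in stack "web" -> web_net

# On a manager node, the services of a stack can also be selected by the
# label that stack deploy attaches:
#   docker service ls --filter label=com.docker.stack.namespace=web
```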

List the services in the stack:

root@manager1:~/docker_project# docker stack services web
ID             NAME      MODE         REPLICAS   IMAGE          PORTS
ds0z266ttqrf   web_web   replicated   2/2        nginx:latest   *:8080->80/tcp

Then access the two nodes the tasks were assigned to:

Some docker stack commands:

Commands:
  deploy      Deploy a new stack or update an existing stack
  ls          List stacks
  ps          List the tasks in the stack
  rm          Remove one or more stacks
  services    List the services in the stack

These commands are straightforward, so they are not covered further here.

Add a volume to the compose file:

root@manager1:~/docker_project# cat docker-compose.yml
version: "3"
services:
  web:
    image: nginx
    deploy:
      replicas: 2
      restart_policy:
        condition: on-failure
    ports:
      - "8080:80"
    networks:
      - "net"
    volumes:
      - "/www:/usr/share/nginx/html"
networks:
  net:

For the volumes directive here, the /www directory exists only on manager1, not on work1:

root@manager1:~/docker_project# docker stack ps web
ID             NAME            IMAGE          NODE       DESIRED STATE   CURRENT STATE             ERROR                              PORTS
yeoo33w9gtyg   web_web.1       nginx:latest   manager1   Running         Running 26 seconds ago
h42wivwjeyyg   web_web.2       nginx:latest   manager1   Running         Running 1 second ago
k9cwzgiwqfkw    \_ web_web.2   nginx:latest   work1      Shutdown        Rejected 16 seconds ago   "invalid mount config for type…"
k1z0euovj5ot    \_ web_web.2   nginx:latest   work1      Shutdown        Rejected 21 seconds ago   "invalid mount config for type…"
xqchylah6ovp    \_ web_web.2   nginx:latest   work1      Shutdown        Rejected 26 seconds ago   "invalid mount config for type…"
h7cc0rl07vz7    \_ web_web.2   nginx:latest   work1      Shutdown        Rejected 26 seconds ago   "invalid mount config for type…"

Swarm's attempts to place a task on work1 failed because the mount was rejected there, so all tasks ended up on manager1. For the mount to succeed on work1, the /www directory has to be created on that node too, but keeping the data consistent afterwards still requires shared storage.
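Creating the bind-mount source directory on every node before deploying avoids the rejected tasks. A minimal sketch that does this over docker-machine ssh, assuming the machine names from this article; on_node and DRY_RUN are hypothetical names, and the default DRY_RUN=1 only prints the commands.

```shell
#!/bin/sh
# Sketch: make sure /www exists on every node before deploying the stack.
# on_node and DRY_RUN are illustrative names, not docker-machine features.
DRY_RUN="${DRY_RUN:-1}"
NODES="manager1 work1"

# Print (dry run) or execute a command on a machine over docker-machine ssh.
on_node() {
    node="$1"
    shift
    if [ "$DRY_RUN" = "1" ]; then
        echo "docker-machine ssh $node \"$*\""
    else
        docker-machine ssh "$node" "$*"
    fi
}

for n in $NODES; do
    # -p makes the call harmless when the directory already exists.
    on_node "$n" "mkdir -p /www"
done
```

This only makes the mount valid on each node. The directories are still independent, so their contents have to be kept in sync separately.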

Change the volumes entry above to a named volume that does not exist yet (on neither node):

root@manager1:~/docker_project# cat docker-compose.yml
version: "3"
services:
  web:
    image: nginx
    deploy:
      replicas: 2
      restart_policy:
        condition: on-failure
    ports:
      - "8080:80"
    networks:
      - "net"
    volumes:
      - "mydata:/usr/share/nginx/html"
networks:
  net:
volumes:
  mydata:

Create the stack from the compose file above:

root@manager1:~/docker_project# vim docker-compose.yml
root@manager1:~/docker_project# docker stack deploy -c docker-compose.yml web
Creating network web_net
Creating service web_web

root@manager1:~/docker_project# docker stack ps web
ID             NAME        IMAGE          NODE       DESIRED STATE   CURRENT STATE           ERROR   PORTS
qgph48g6devs   web_web.1   nginx:latest   manager1   Running         Running 4 seconds ago
ukualp6c6rwn   web_web.2   nginx:latest   work1      Running         Running 3 seconds ago

Both nodes now run their tasks successfully. Check the volumes on each node:

root@manager1:~/docker_project# docker volume ls
DRIVER    VOLUME NAME
local     html
local     test
local     web_mydata

root@work1:~# docker volume ls
DRIVER    VOLUME NAME
local     html
local     test
local     web_mydata

Then look at the mount directory of the volume web_mydata:

root@manager1:~/docker_project# docker volume inspect web_mydata
[
    {
        "CreatedAt": "2017-11-19T21:39:16+08:00",
        "Driver": "local",
        "Labels": {
            "com.docker.stack.namespace": "web"
        },
        "Mountpoint": "/var/lib/docker/volumes/web_mydata/_data",
        "Name": "web_mydata",
        "Options": {},
        "Scope": "local"
    }
]

root@manager1:~/docker_project# cd /var/lib/docker/volumes/web_mydata/_data
root@manager1:/var/lib/docker/volumes/web_mydata/_data# ll
total 16
drwxr-xr-x 2 root root 4096 Nov 19 21:39 ./
drwxr-xr-x 3 root root 4096 Nov 19 21:39 ../
-rw-r--r-- 1 root root  537 Sep 15 00:35 50x.html
-rw-r--r-- 1 root root  612 Sep 15 00:35 index.html

root@work1:/var/lib/docker/volumes/web_mydata/_data# ll
total 16
drwxr-xr-x 2 root root 4096 Nov 19 21:39 ./
drwxr-xr-x 3 root root 4096 Nov 19 21:39 ../
-rw-r--r-- 1 root root  537 Sep 15 00:35 50x.html
-rw-r--r-- 1 root root  612 Sep 15 00:35 index.html

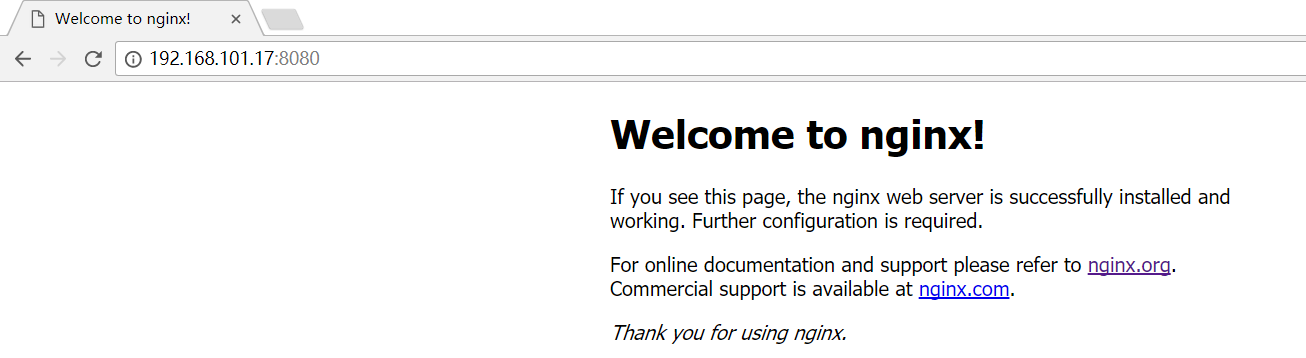

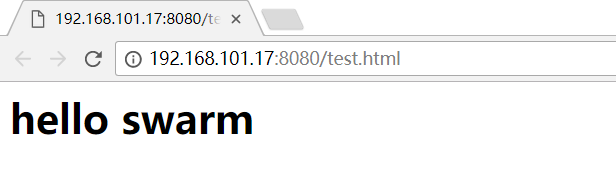

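Both nodes start with the same content mounted. Now create an html file on manager1:

root@manager1:/var/lib/docker/volumes/web_mydata/_data# echo "<h1>hello swarm</h1>" >> test.html

Access the two nodes:

This shows that shared or distributed storage is needed here as well.

To constrain where tasks are placed, for example to create all replicas on node work1: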

root@manager1:~/docker_project# cat docker-compose.yml
version: "3"
services:
  web:
    image: nginx
    deploy:
      replicas: 2
      restart_policy:
        condition: on-failure
      placement:
        constraints: [ node.hostname == work1 ]
    ports:
      - "8080:80"
    networks:
      - "net"
    volumes:
      - "mydata:/usr/share/nginx/html"
networks:
  net:
volumes:
  mydata:

root@manager1:~/docker_project# docker stack ps web
ID             NAME        IMAGE          NODE    DESIRED STATE   CURRENT STATE           ERROR   PORTS
waqycm6yzq7n   web_web.1   nginx:latest   work1   Running         Running 5 seconds ago
c95s2ts8nnn9   web_web.2   nginx:latest   work1   Running         Running 5 seconds ago

As shown above, all tasks were assigned to node work1, which is the effect of the constraints added under deploy.
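Whether a constraint took effect can also be checked by script rather than by reading the table. A minimal sketch: all_on_node is a hypothetical helper that reads "task node" pairs on stdin, and the commented docker stack ps call with --filter and --format is the real command that would produce those pairs on a manager node. The sample input below repeats the task list from the output above.

```shell
#!/bin/sh
# all_on_node NODE: reads "task node" lines on stdin and succeeds only if
# every task runs on NODE. Illustrative helper, not a docker command.
all_on_node() {
    want="$1"
    status=0
    while read -r task node; do
        [ -z "$task" ] && continue
        if [ "$node" != "$want" ]; then
            echo "$task is on $node, expected $want"
            status=1
        fi
    done
    return "$status"
}

# Sample input copied from the stack ps output above.
printf '%s\n' 'web_web.1 work1' 'web_web.2 work1' | all_on_node work1 \
    && echo "all tasks are on work1"

# On a manager node the same check against the live stack would be:
#   docker stack ps web --filter desired-state=running \
#       --format '{{.Name}} {{.Node}}' | all_on_node work1
```

The same placement rule is available outside compose as docker service create --constraint 'node.hostname == work1'.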

deploy also has a mode option, which is either global or replicated (the default). Examples of both follow.

root@manager1:~/docker_project# cat docker-compose.yml
version: "3"
services:
  web:
    image: nginx
    deploy:
      mode: replicated
      replicas: 2
      restart_policy:
        condition: on-failure
      placement:
        constraints: [ node.hostname == work1 ]
    ports:
      - "8080:80"
    networks:
      - "net"
    volumes:
      - "mydata:/usr/share/nginx/html"
networks:
  net:
volumes:
  mydata:

When mode is replicated, it is used together with replicas, which sets the number of task copies to create. global is not combined with replicas; it creates one task on every node:

root@manager1:~/docker_project# cat docker-compose.yml
version: "3"
services:
  web:
    image: nginx
    deploy:
      mode: global
      restart_policy:
        condition: on-failure
    ports:
      - "8080:80"
    networks:
      - "net"
    volumes:
      - "mydata:/usr/share/nginx/html"
networks:
  net:
volumes:
  mydata:

Then check which nodes the tasks were created on:

root@manager1:~/docker_project# docker stack ps web
ID             NAME                                IMAGE          NODE       DESIRED STATE   CURRENT STATE          ERROR   PORTS
rc1t2hv5amhy   web_web.izidcyk70x8jbhm20emeblymz   nginx:latest   manager1   Running         Running 1 second ago
qbianufy60p4   web_web.gt0onqyz71f2fnzmwqqgtv83z   nginx:latest   work1      Running         Running 1 second ago
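In global mode the task names end in a node ID instead of a replica number, and the two suffixes above are the IDs that docker node ls printed for manager1 and work1 earlier. A small sketch of pulling that ID out of a task name: task_node_id and task_hostname are hypothetical helpers, and the split assumes the service name itself contains no dot.

```shell
#!/bin/sh
# task_node_id TASK: strip everything up to the first dot, leaving the
# node ID suffix of a global-mode task name. Illustrative helper only.
task_node_id() {
    printf '%s\n' "${1#*.}"
}

# Resolve the suffix to a hostname (needs docker on a manager node).
task_hostname() {
    docker node inspect -f '{{.Description.Hostname}}' "$(task_node_id "$1")"
}

task_node_id web_web.izidcyk70x8jbhm20emeblymz   # prints izidcyk70x8jbhm20emeblymz
task_node_id web_web.gt0onqyz71f2fnzmwqqgtv83z   # prints gt0onqyz71f2fnzmwqqgtv83z
```

Outside compose, the equivalent of mode: global is docker service create --mode global.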

Global mode can of course be combined with constraints as well, so that a task is created on only one particular node:

root@manager1:~/docker_project# cat docker-compose.yml
version: "3"
services:
  web:
    image: nginx
    deploy:
      mode: global
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname == work1
    ports:
      - "8080:80"
    networks:
      - "net"
    volumes:
      - "mydata:/usr/share/nginx/html"
networks:
  net:
volumes:
  mydata:

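root@manager1:~/docker_project# docker stack ps web
ID             NAME                                IMAGE          NODE    DESIRED STATE   CURRENT STATE           ERROR   PORTS
oof47jartobw   web_web.gt0onqyz71f2fnzmwqqgtv83z   nginx:latest   work1   Running         Running 3 seconds ago

Yet requests to both nodes still succeed, because the published port is handled by swarm's ingress routing mesh, which accepts it on every node and forwards it to the task on work1: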