In this tutorial we're going to use RabbitMQ to build an RPC system: a client and a scalable RPC server.

As we don't have any time-consuming tasks that are worth distributing, we're going to create a dummy RPC service that returns Fibonacci numbers.

Client interface

To illustrate how an RPC service could be used we're going to create a simple client class.

It's going to expose a method named call which sends an RPC request and blocks until the answer is received:

    fibonacci_rpc = FibonacciRpcClient()
    result = fibonacci_rpc.call(4)
    print("fib(4) is %r" % result)

A note on RPC

Although RPC is a pretty common pattern in computing, it's often criticised. The problems arise when a programmer is not aware whether a function call is local or a slow RPC. Confusion like that results in an unpredictable system and adds unnecessary complexity to debugging. Instead of simplifying software, misused RPC can result in unmaintainable spaghetti code.

Bearing that in mind, consider the following advice:

- Make sure it's obvious which function call is local and which is remote.

- Document your system. Make the dependencies between components clear.

- Handle error cases. How should the client react when the RPC server is down for a long time?

When in doubt, avoid RPC. If you can, you should use an asynchronous pipeline: instead of RPC-like blocking, results are asynchronously pushed to the next computation stage.
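As a rough illustration of the asynchronous pipeline idea, the sketch below uses only the Python standard library. It is not RabbitMQ code: `queue.Queue` objects and threads stand in for broker queues and workers, and the names `stage`, `run_pipeline` and `STOP` are hypothetical. Each stage pushes its result to the next queue instead of blocking for a reply.

```python
import queue
import threading

STOP = object()  # sentinel telling a stage to shut down (sketch-only convention)


def stage(inbox, outbox, func):
    """Read items from inbox, apply func, and push each result to outbox."""
    while True:
        item = inbox.get()
        if item is STOP:
            outbox.put(STOP)  # propagate shutdown to the next stage
            break
        outbox.put(func(item))


def run_pipeline(items):
    """Push items through two hypothetical stages and collect the results."""
    q_in, q_mid, q_out = queue.Queue(), queue.Queue(), queue.Queue()
    workers = [
        threading.Thread(target=stage, args=(q_in, q_mid, lambda n: n * n)),
        threading.Thread(target=stage, args=(q_mid, q_out, lambda n: n + 1)),
    ]
    for worker in workers:
        worker.start()

    for item in items:
        q_in.put(item)  # the producer never waits for a reply
    q_in.put(STOP)

    results = []
    while True:
        out = q_out.get()
        if out is STOP:
            break
        results.append(out)

    for worker in workers:
        worker.join()
    return results
```

The producer only ever writes to the first queue; nothing in it waits on a specific answer, which is the difference from the blocking call shown earlier.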

Callback queue

In general doing RPC over RabbitMQ is easy.

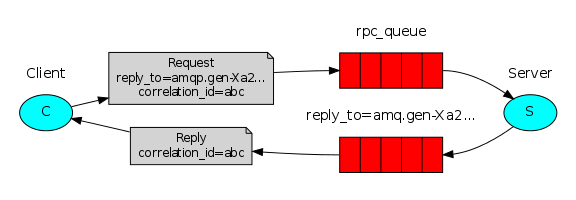

A client sends a request message and a server replies with a response message. In order to receive a response the client needs to send a 'callback' queue address with the request. Let's try it:

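    # Let the broker generate a unique name for an exclusive callback queue.
    # (pika 1.x requires the queue argument; '' asks for a generated name.)
    result = channel.queue_declare(queue='', exclusive=True)
    callback_queue = result.method.queue

    channel.basic_publish(exchange='',
                          routing_key='rpc_queue',
                          properties=pika.BasicProperties(
                              reply_to=callback_queue,
                          ),
                          body=request)

    # ... and some code to read a response message from the callback_queue ...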

Message properties

The AMQP 0-9-1 protocol predefines a set of 14 properties that go with a message. Most of the properties are rarely used, with the exception of the following:

- delivery_mode: Marks a message as persistent (with a value of 2) or transient (any other value). You may remember this property from the second tutorial.

- content_type: Used to describe the MIME type of the encoding. For example, for the often used JSON encoding it is good practice to set this property to application/json.

- reply_to: Commonly used to name a callback queue.

- correlation_id: Useful to correlate RPC responses with requests.

Correlation id

In the method presented above we suggest creating a callback queue for every RPC request. That's pretty inefficient, but fortunately there is a better way - let's create a single callback queue per client.

That raises a new issue: having received a response in that queue, it's not clear to which request the response belongs. That's when the correlation_id property is used. We're going to set it to a unique value for every request. Later, when we receive a message in the callback queue, we'll look at this property, and based on that we'll be able to match a response with a request. If we see an unknown correlation_id value, we may safely discard the message: it doesn't belong to our requests.

You may ask why we should ignore unknown messages in the callback queue rather than failing with an error. It's due to the possibility of a race condition on the server side. Although unlikely, it is possible that the RPC server will die just after sending us the answer, but before sending an acknowledgment message for the request. If that happens, the restarted RPC server will process the request again. That's why on the client we must handle duplicate responses gracefully, and the RPC should ideally be idempotent.
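The matching and discarding rule described above can be sketched without a broker. The `PendingRequests` class below is a hypothetical helper, not part of pika: it remembers the correlation id of each request in flight, returns the match when a response arrives, and quietly drops unknown or duplicate responses.

```python
import uuid


class PendingRequests:
    """Tracks in-flight requests by correlation id (hypothetical helper)."""

    def __init__(self):
        self._pending = {}  # correlation_id -> request payload

    def register(self, payload):
        """Remember a new request and return its unique correlation id."""
        corr_id = str(uuid.uuid4())
        self._pending[corr_id] = payload
        return corr_id

    def resolve(self, corr_id, body):
        """Return (payload, body) for a known id, or None to discard."""
        if corr_id not in self._pending:
            return None  # unknown or duplicate response: ignore it
        payload = self._pending.pop(corr_id)
        return payload, body
```

Because a resolved id is removed from the table, a second copy of the same response (for example after a server restart) falls into the "unknown" branch and is ignored rather than raising an error.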

Summary

Our RPC will work like this:

- When the Client starts up, it creates an anonymous exclusive callback queue.

- For an RPC request, the Client sends a message with two properties: reply_to, which is set to the callback queue, and correlation_id, which is set to a unique value for every request. (reply_to tells the server which queue to send the result to; correlation_id lets the client pick out the result of a specific request from that queue.)

- The request is sent to an rpc_queue queue.

- The RPC worker (aka: server) is waiting for requests on that queue. When a request appears, it does the job and sends a message with the result back to the Client, using the queue from the reply_to field.

- The client waits for data on the callback queue. When a message appears, it checks the correlation_id property. If it matches the value from the request, it returns the response to the application.
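The steps above can be walked through in memory before touching a broker. This is a simulation under stated assumptions, not pika code: `queue.Queue` objects stand in for rpc_queue and the callback queue, messages are plain dicts, and the names `server`, `call`, `demo` and "callback-1" are hypothetical. The recursive fib mirrors the definition used by the tutorial's server.

```python
import queue
import threading
import uuid


def fib(n):
    if n == 0:
        return 0
    elif n == 1:
        return 1
    return fib(n - 1) + fib(n - 2)


def server(rpc_queue, queues):
    """Worker: take a request, compute, reply to the queue named in reply_to."""
    while True:
        msg = rpc_queue.get()
        if msg is None:  # shutdown signal used only by this sketch
            break
        reply = {"correlation_id": msg["correlation_id"],
                 "body": str(fib(int(msg["body"])))}
        queues[msg["reply_to"]].put(reply)


def call(rpc_queue, queues, callback_name, n):
    """Client: send a request and block until the matching reply arrives."""
    corr_id = str(uuid.uuid4())
    rpc_queue.put({"reply_to": callback_name,
                   "correlation_id": corr_id,
                   "body": str(n)})
    while True:
        reply = queues[callback_name].get(timeout=5)
        if reply["correlation_id"] == corr_id:
            return int(reply["body"])
        # Otherwise the reply belongs to some other request: discard it.


def demo(n):
    rpc_queue = queue.Queue()
    queues = {"callback-1": queue.Queue()}  # the client's callback queue
    worker = threading.Thread(target=server, args=(rpc_queue, queues))
    worker.start()
    try:
        return call(rpc_queue, queues, "callback-1", n)
    finally:
        rpc_queue.put(None)
        worker.join()
```

Calling `demo(4)` sends one request through the simulated rpc_queue and returns the integer taken from the matching reply.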

rpc_server.py:

    import pika

    connection = pika.BlockingConnection(
        pika.ConnectionParameters(host='localhost'))
    channel = connection.channel()

    channel.queue_declare(queue='rpc_queue')


    def fib(n):
        if n == 0:
            return 0
        elif n == 1:
            return 1
        else:
            return fib(n - 1) + fib(n - 2)


    def on_request(ch, method, props, body):
        n = int(body)

        print(" [.] fib(%s)" % n)
        response = fib(n)

        # Reply to the queue named by the client, echoing its correlation_id.
        ch.basic_publish(exchange='',
                         routing_key=props.reply_to,
                         properties=pika.BasicProperties(
                             correlation_id=props.correlation_id),
                         body=str(response))
        ch.basic_ack(delivery_tag=method.delivery_tag)


    # Hand each worker at most one unacknowledged request at a time.
    channel.basic_qos(prefetch_count=1)
    # pika 1.x signature; pika 0.x used basic_consume(on_request, queue='rpc_queue').
    channel.basic_consume(queue='rpc_queue', on_message_callback=on_request)

    print(" [x] Awaiting RPC requests")
    channel.start_consuming()

The server code is rather straightforward:

- As usual, we start by establishing the connection and declaring the queue.

- We declare our fib function. It assumes only valid positive integer input. (Don't expect this one to work for big numbers; it's probably the slowest recursive implementation possible.)

- We declare on_request, the callback for basic_consume and the core of the RPC server. It's executed when a request is received. It does the work and sends the response back.

- We might want to run more than one server process. In order to spread the load equally over multiple servers, we need to set the prefetch_count setting, which is done just before we start consuming.
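Since the server's fib assumes valid input and is slow for larger numbers, here is a minimal sketch of two helpers that could sit in front of it. Both `fib_iterative` and `parse_request` are hypothetical names that do not appear in the tutorial; they use only built-in Python.

```python
def fib_iterative(n):
    """Return the n-th Fibonacci number using a simple loop."""
    if n < 0:
        raise ValueError("n must be non-negative")
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


def parse_request(body):
    """Convert a message body (bytes or str) to a non-negative int.

    Returns None for malformed input instead of raising, so the caller
    can decide how to reply to a bad request.
    """
    try:
        n = int(body)
    except (TypeError, ValueError):
        return None
    return n if n >= 0 else None
```

In on_request, one possible design is to call `parse_request(body)` first and reply with an error marker when it returns None. What that marker looks like is left open here, because the tutorial does not define an error format.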

rpc_client.py:

    import uuid

    import pika


    class FibonacciRpcClient(object):

        def __init__(self):
            self.connection = pika.BlockingConnection(
                pika.ConnectionParameters(host='localhost'))
            self.channel = self.connection.channel()

            # Exclusive, broker-named queue that receives the replies.
            result = self.channel.queue_declare(queue='', exclusive=True)
            self.callback_queue = result.method.queue

            # pika 1.x signature; pika 0.x used
            # basic_consume(self.on_response, no_ack=True, queue=...).
            self.channel.basic_consume(queue=self.callback_queue,
                                       on_message_callback=self.on_response,
                                       auto_ack=True)

            # Defined up front so on_response never sees missing attributes.
            self.response = None
            self.corr_id = None

        def on_response(self, ch, method, props, body):
            # Keep only the reply that matches the request in flight.
            if self.corr_id == props.correlation_id:
                self.response = body

        def call(self, n):
            self.response = None
            self.corr_id = str(uuid.uuid4())
            self.channel.basic_publish(exchange='',
                                       routing_key='rpc_queue',
                                       properties=pika.BasicProperties(
                                           reply_to=self.callback_queue,
                                           correlation_id=self.corr_id,
                                       ),
                                       body=str(n))
            # Pump network events until on_response stores the reply.
            while self.response is None:
                self.connection.process_data_events()
            return int(self.response)


    fibonacci_rpc = FibonacciRpcClient()

    print(" [x] Requesting fib(30)")
    response = fibonacci_rpc.call(30)
    print(" [.] Got %r" % response)

The client code is slightly more involved:

- In __init__ we establish a connection and a channel, and declare an exclusive 'callback' queue for replies.

- We subscribe to the 'callback' queue, so that we can receive RPC responses.

- The on_response callback, executed on every response, does a very simple job: for every response message it checks if the correlation_id is the one we're looking for. If so, it saves the response in self.response, which ends the waiting loop in call.

- Next, we define our main call method, which does the actual RPC request.

- In this method, we first generate a unique correlation_id value and save it. The on_response callback will use this value to catch the appropriate response.

- Next, we publish the request message with two properties: reply_to and correlation_id.

- At this point we can sit back and wait until the proper response arrives.

- Finally, we return the response to the user.
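The waiting loop in call never gives up, which leaves open the earlier question of what the client should do when the server is down. Below is a minimal sketch of a deadline-based wait. `wait_for_response` and `RpcTimeout` are hypothetical names, and the helper is deliberately independent of pika: it takes two callables, one that returns the current response (or None) and one that pumps events.

```python
import time


class RpcTimeout(Exception):
    """Raised when no response arrives before the deadline."""


def wait_for_response(get_response, pump, timeout):
    """Call pump() until get_response() is not None or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        response = get_response()
        if response is not None:
            return response
        if time.monotonic() >= deadline:
            raise RpcTimeout("no response within %.1f s" % timeout)
        pump()
```

Inside the client, get_response could be `lambda: self.response` and pump could wrap `self.connection.process_data_events(time_limit=0.1)`; this wiring is an assumption, not part of the original client. The caller then decides whether to retry, fall back, or report the failure.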