I. PG issues

1. xx objects unfound

- Problem description:

dmesg showed read/write errors on a disk. Some objects were damaged (in the unfound state), and the cluster was in HEALTH_ERR.

root@node1101:~# ceph health detail

HEALTH_ERR noscrub,nodeep-scrub flag(s) set; 13/409798 objects unfound(0.003%);17 stuck requests are blocked > 4096 sec. Implicated osds 38

OSDMAP_FLAGS noscrub,nodeep-scrub flag(s) set

OBJECT_UNFOUND 13/409798 objects unfound (0.003%)

pg 5.309 has 1 unfound objects

pg 5.2da has 1 unfound objects

pg 5.2c9 has 1 unfound objects

pg 5.1e2 has 1 unfound objects

pg 5.6a has 1 unfound objects

pg 5.120 has 1 unfound objects

pg 5.148 has 1 unfound objects

pg 5.14b has 1 unfound objects

pg 5.160 has 1 unfound objects

pg 5.35b has 1 unfound objects

pg 5.39c has 1 unfound objects

pg 5.3ad has 1 unfound objects

REQUEST_STUCK 17 stuck requests are blocked > 4096 sec. Implicated osds 38

17 ops are blocked > 67108.9 sec

osd.38 has stuck requests > 67108.9 sec

- Resolution:

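Mark the unfound objects in each affected PG as lost and delete them (those objects are permanently discarded). Reference command: ceph pg {pgid} mark_unfound_lost delete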
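Before discarding anything, it may help to list exactly which PGs report unfound objects and inspect each one with `ceph pg {pgid} list_unfound`. The sketch below assumes the `ceph health detail` line format shown above (`pg 5.309 has 1 unfound objects`); `unfound_pgs_from_health` and `review_unfound` are hypothetical helper names, not part of Ceph.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ceph health detail` output on stdin and print the
# ID of every PG reported with unfound objects, one per line.
# Assumes lines of the form "pg <pgid> has <n> unfound objects".
unfound_pgs_from_health() {
  awk '$1 == "pg" && $3 == "has" && /unfound/ { print $2 }'
}

# Hypothetical helper: print the unfound objects of each affected PG so they
# can be reviewed before running mark_unfound_lost. Needs a live cluster.
review_unfound() {
  local pgid
  for pgid in $(ceph health detail | unfound_pgs_from_health); do
    echo "== pg ${pgid} =="
    ceph pg "${pgid}" list_unfound
  done
}
```

For example, `review_unfound | less` pages through the affected objects; the same PG list can then be fed to mark_unfound_lost.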

Note: to process all PGs with unfound objects at once, use a command such as:

for i in `ceph health detail | awk '$1 == "pg" && /unfound/ {print $2}'`; do ceph pg $i mark_unfound_lost delete; done

2. Reduced data availability: xx pgs inactive

- Problem description:

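A disk developed read/write errors and its OSD could not start. After the failed disk was forcibly replaced with a new disk and added back to the cluster, some PGs became inactive (state unknown).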

root@node1106:~# ceph -s

cluster:

id: 7f1aa879-afbb-4b19-9bc3-8f55c8ecbbb4

health: HEALTH_WARN

4 clients failing to respond to capability release

3 MDSs report slow metadata IOs

1 MDSs report slow requests

3 MDSs behind on trimming

noscrub,nodeep-scrub flag(s) set

Reduced data availability: 25 pgs inactive

6187 slow requests are blocked > 32 sec. Implicated osds 41

services:

mon: 3 daemons, quorum node1101,node1102,node1103

mgr: node1103(active), standbys: node1102, node1101

mds: ceph-3/3/3 up {0=node1103=up:active,1=node1102=up:active,2=node1104=up:active}, 2 up:standby

osd: 48 osds: 48 up, 48 in

flags noscrub,nodeep-scrub

data:

pools: 6 pools, 2888 pgs

objects: 474.95k objects, 94.5GiB

usage: 267GiB used, 202TiB / 202TiB avail

pgs: 0.866% pgs unknown

2863 active+clean

25 unknown

root@node1101:~# ceph pg dump_stuck inactive

ok

PG_STAT STATE UP UP_PRIMARY ACTING ACTING_PRIMARY

1.166 unknown [] -1 [] -1

1.163 unknown [] -1 [] -1

1.26f unknown [] -1 [] -1

1.228 unknown [] -1 [] -1

1.213 unknown [] -1 [] -1

1.12f unknown [] -1 [] -1

1.276 unknown [] -1 [] -1

1.264 unknown [] -1 [] -1

1.32a unknown [] -1 [] -1

1.151 unknown [] -1 [] -1

1.20d unknown [] -1 [] -1

1.298 unknown [] -1 [] -1

1.306 unknown [] -1 [] -1

1.2f7 unknown [] -1 [] -1

1.2c8 unknown [] -1 [] -1

1.223 unknown [] -1 [] -1

1.204 unknown [] -1 [] -1

1.374 unknown [] -1 [] -1

1.b5 unknown [] -1 [] -1

1.b6 unknown [] -1 [] -1

1.2b unknown [] -1 [] -1

1.9f unknown [] -1 [] -1

1.2ac unknown [] -1 [] -1

1.78 unknown [] -1 [] -1

1.1c3 unknown [] -1 [] -1

1.1a unknown [] -1 [] -1

1.d9 unknown [] -1 [] -1

- Resolution:

Force-create each unknown PG. The PG is recreated empty, so any data it held is lost. Reference command: ceph osd force-create-pg {pgid}
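Because force-create-pg recreates the PG empty, it is worth making sure that only PGs in the `unknown` state are selected. A sketch, assuming the column layout of `ceph pg dump_stuck inactive` shown above (PG ID in column 1, state in column 2); `unknown_pgs` and `plan_force_create` are hypothetical helper names. The second one prints the commands instead of running them, so the list can be reviewed first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ceph pg dump_stuck inactive` output on stdin and
# print the IDs of PGs whose STATE column is exactly "unknown".
# The header line and an "ok" status line are skipped because their second
# column is not "unknown".
unknown_pgs() {
  awk '$2 == "unknown" { print $1 }'
}

# Dry run (hypothetical): print one force-create-pg command per unknown PG.
# Pipe the output to `sh` only after reviewing it. Needs a live cluster.
plan_force_create() {
  local pgid
  ceph pg dump_stuck inactive 2>/dev/null | unknown_pgs | while read -r pgid; do
    echo "ceph osd force-create-pg ${pgid}"
  done
}
```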

Note: to recreate all unknown PGs at once, use a command such as:

for i in `ceph pg dump_stuck inactive 2>/dev/null | awk '$2 == "unknown" {print $1}'`; do ceph osd force-create-pg $i; done

II. OSD issues

1. OSD ports conflict with fixed ports of other services

- Problem description:

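The OSDs started first and took ports that other services bind to at fixed numbers, so those services failed to bind their ports and could not start.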

- Resolution:

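The other services involve many components, and changing all of their ports risked missing one and causing new problems. Instead, the OSD port range was moved outside the ports those services use.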

- Change the port range the OSD listens on (OSD as server)

Ceph restricts the ports that OSD and MDS daemons bind to through the ms_bind_port_min and ms_bind_port_max parameters. The default range is 6800:7300.
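Before picking a new range, it is worth confirming that none of the other services' fixed ports falls inside it. A minimal sketch; `ports_in_range` is a hypothetical helper, and the ports you pass it are whatever the conflicting services actually use (none are listed in this note).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every port from the argument list that falls
# inside the inclusive range [MIN, MAX]. Empty output means no overlap.
# Usage: ports_in_range MIN MAX PORT...
ports_in_range() {
  local min=$1 max=$2 port
  shift 2
  for port in "$@"; do
    if [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]; then
      echo "$port"
    fi
  done
}
```

For example, `ports_in_range 9600 10000 <port> <port> ...` printing nothing means the candidate range 9600:10000 avoids all of the listed ports.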

Set the range to 9600:10000 by appending the parameters to the [global] section of /etc/ceph/ceph.conf:

[root@node111 ~]# cat /etc/ceph/ceph.conf | grep ms_bind_port

ms_bind_port_min = 9600

ms_bind_port_max = 10000

[root@node111 ~]#

[root@node111 ~]# ceph --show-config | grep ms_bind_port

ms_bind_port_max = 10000

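ms_bind_port_min = 9600

The new range only applies after the OSD (and MDS) daemons are restarted.

- Change the local port range the OSD uses as a client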

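When the OSD acts as a client, its local ports are assigned randomly, so they are limited by the kernel's ephemeral port range (net.ipv4.ip_local_port_range).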

On this node the range was 1024:65000; change it to 7500:65000.
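The sed command used below only changes /etc/sysctl.conf if the file already contains the line written exactly as `net.ipv4.ip_local_port_range=1024 65000`; with spaces around `=`, or with no such line at all, it silently changes nothing. A sketch of a more tolerant update, assuming a sysctl-style `key = value` file; `set_port_range` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set net.ipv4.ip_local_port_range in a sysctl-style
# file, replacing an existing entry (however it is spaced) or appending one
# if the file has none.
# Usage: set_port_range FILE "LOW HIGH"
set_port_range() {
  local file=$1 range=$2
  local key='net\.ipv4\.ip_local_port_range'   # dots escaped for grep/sed
  if grep -q "^[[:space:]]*${key}[[:space:]]*=" "$file"; then
    sed -i "s/^[[:space:]]*${key}[[:space:]]*=.*/net.ipv4.ip_local_port_range = ${range}/" "$file"
  else
    echo "net.ipv4.ip_local_port_range = ${range}" >> "$file"
  fi
}
```

Usage: `set_port_range /etc/sysctl.conf "7500 65000" && sysctl -p`.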

--range on this node: 1024 65000

[root@node111 ~]# cat /proc/sys/net/ipv4/ip_local_port_range

1024 65000

--change the range to 7500:65000

[root@node111 ~]# sed -i 's/net.ipv4.ip_local_port_range=1024 65000/net.ipv4.ip_local_port_range=7500 65000/g' /etc/sysctl.conf

[root@node111 ~]# sysctl -p

2. After a disk hot-swap, the OSD cannot come back online

- Problem description:

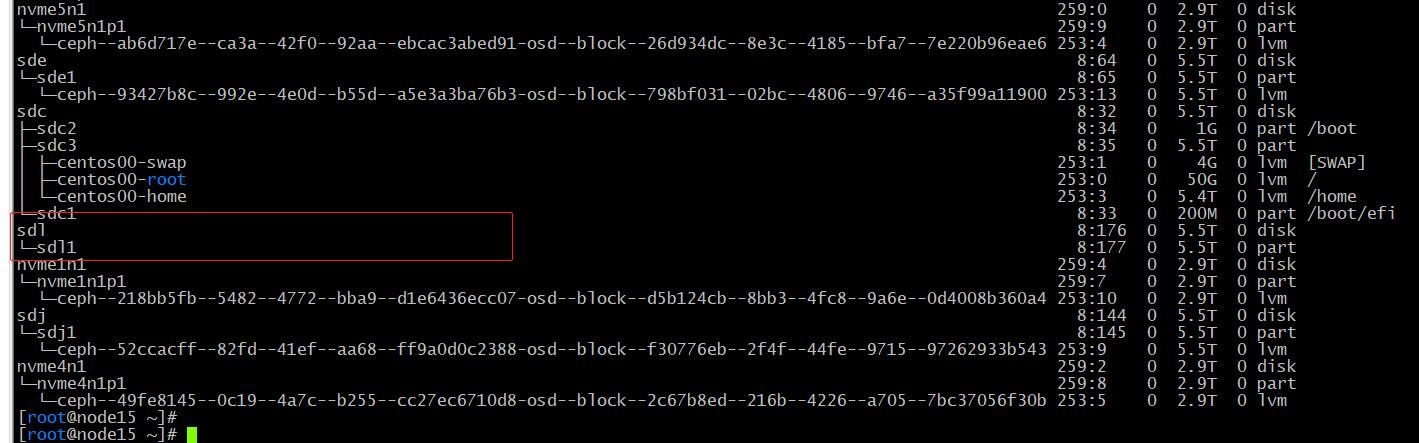

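In a Ceph cluster deployed with BlueStore, an OSD's disk was hot-unplugged and plugged back in. The LVM volume backing the OSD was not restored automatically, so the OSD could not come back online.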

- Resolution:

- Find the UUID of the failed OSD:

ceph osd dump | awk '$1 == "osd.{osd-id}" {print $NF}'

Example: find the UUID of osd.0

[root@node127 ~]# ceph osd dump | grep osd.0 | awk '{print $NF}'

57377809-fba4-4389-8703-f9603f16e60d

- Find the device-mapper (LVM) path of the failed OSD:

ls /dev/mapper/ | grep `ceph osd dump | awk '$1 == "osd.{osd-id}" {print $NF}' | sed 's/-/--/g'`

Example: find the LVM path from the UUID

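Note: device-mapper names escape every hyphen inside the VG and LV names by doubling it, so sed 's/-/--/g' is needed to turn each - in the UUID into -- before matching.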
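The escaping can also be checked in isolation. A sketch, assuming the device-mapper naming seen in the example output (each hyphen inside the VG and LV names doubled); `dm_escape` and `find_dm_entry` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: escape a name the way /dev/mapper entries do,
# i.e. double every hyphen.
dm_escape() {
  printf '%s\n' "$1" | sed 's/-/--/g'
}

# Hypothetical helper: list entries of /dev/mapper (or of the directory
# given as $2) whose name contains the escaped OSD UUID.
find_dm_entry() {
  local uuid=$1 dir=${2:-/dev/mapper}
  ls "$dir" | grep -F -- "$(dm_escape "$uuid")"
}
```

For osd.0 above, `dm_escape 57377809-fba4-4389-8703-f9603f16e60d` yields `57377809--fba4--4389--8703--f9603f16e60d`, the form that appears in the LV path.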

[root@node127 ~]# ls /dev/mapper/ | grep `ceph osd dump | grep osd.0 | awk '{print $NF}' | sed 's/-/--/g'`

ceph--3182c42e--f8d8--4c13--ad92--987463d626c8-osd--block--57377809--fba4--4389--8703--f9603f16e60d

- Remove the stale device-mapper entry of the failed OSD:

dmsetup remove /dev/mapper/{lvm-path}

Example: remove the stale entry of the failed OSD

[root@node127 ~]# dmsetup remove /dev/mapper/ceph--3182c42e--f8d8--4c13--ad92--987463d626c8-osd--block--57377809--fba4--4389--8703--f9603f16e60d

- Reactivate all LVM volume groups:

vgchange -ay

Note: after this, the LVM information of the failed OSD is visible again.

- Restart the OSD to bring it back online:

systemctl start ceph-volume@lvm-{osd-id}-{osd-uuid}

III. Cluster issues

1. clock skew detected on mon.node2

- Problem description:

The clock offset between the cluster's mon nodes was too large, raising the warning "clock skew detected on mon.node2".

- Resolution:

1. Check the clock offset between the mon nodes and synchronize the cluster's time with chronyd.

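2. Raise the cluster's thresholds: set mon_clock_drift_allowed to 2 and mon_clock_drift_warn_backoff to 30. The sed commands insert the settings after lines 2 and 3 of ceph.conf (this assumes those lines fall inside the [global] section), and injectargs applies them to the running mons: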

sed -i "2 amon_clock_drift_allowed = 2" /etc/ceph/ceph.conf

sed -i "3 amon_clock_drift_warn_backoff = 30" /etc/ceph/ceph.conf

ceph tell mon.* injectargs '--mon_clock_drift_allowed 2'

ceph tell mon.* injectargs '--mon_clock_drift_warn_backoff 30'

Note: the parameters are explained below:

[root@node147 ~]# ceph --show-config | grep mon_clock_drift

mon_clock_drift_allowed = 0.050000

--mon clocks are considered out of sync when they differ by more than 0.05 s

mon_clock_drift_warn_backoff = 5.000000

--exponential backoff factor for repeated clock drift warnings; a larger value makes the warning repeat less often
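To see how raising the threshold changes the outcome, the core comparison (absolute offset against mon_clock_drift_allowed) can be sketched as below. This is a simplification that ignores the warning backoff; `skew_exceeds` is a hypothetical helper, and the offsets in the usage note are illustrative values, not measurements from this cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if |OFFSET| (seconds) is greater than ALLOWED
# (seconds), i.e. the simplified condition for a clock skew warning;
# exit 1 otherwise.
# Usage: skew_exceeds ALLOWED OFFSET
skew_exceeds() {
  awk -v allowed="$1" -v offset="$2" 'BEGIN {
    allowed += 0; offset += 0            # force numeric comparison
    if (offset < 0) offset = -offset     # compare the absolute offset
    exit !(offset > allowed)
  }'
}
```

With the default 0.05 s, an offset of 0.3 s is flagged (`skew_exceeds 0.05 0.3` succeeds); with the raised value of 2 s, the same offset is not (`skew_exceeds 2 0.3` fails).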