郑重声明:原文参见标题,如有侵权,请联系作者,将会撤销发布!

Neural Computation, (2007): 2245-2279

Abstract

学习智能体,无论是自然的还是人工的,都必须更新它们的内部参数,以便随着时间的推移改进它们的行为。在强化学习中,这种可塑性受到环境信号(称为奖励)的影响,该信号将变化引导到适当的方向。我们将最近引入的从机器学习中引入的策略学习算法应用于脉冲神经网络,并推导出一个脉冲时序依赖可塑性规则,以确保收敛到期望平均奖励的局部最优值。该方法适用于广泛的神经元模型,包括Hodgkin-Huxley模型。我们证明了派生规则在几个toy问题中的有效性。最后,通过统计分析,我们表明所建立的突触可塑性规则与广泛使用的BCM规则密切相关,具有良好的生物学证据。

1 Policy Learning and Neuronal Dynamics

2 Derivation of the Weight Update

2.1 Two Explicit Choices for α.

3 Extensions to General Neuronal Models

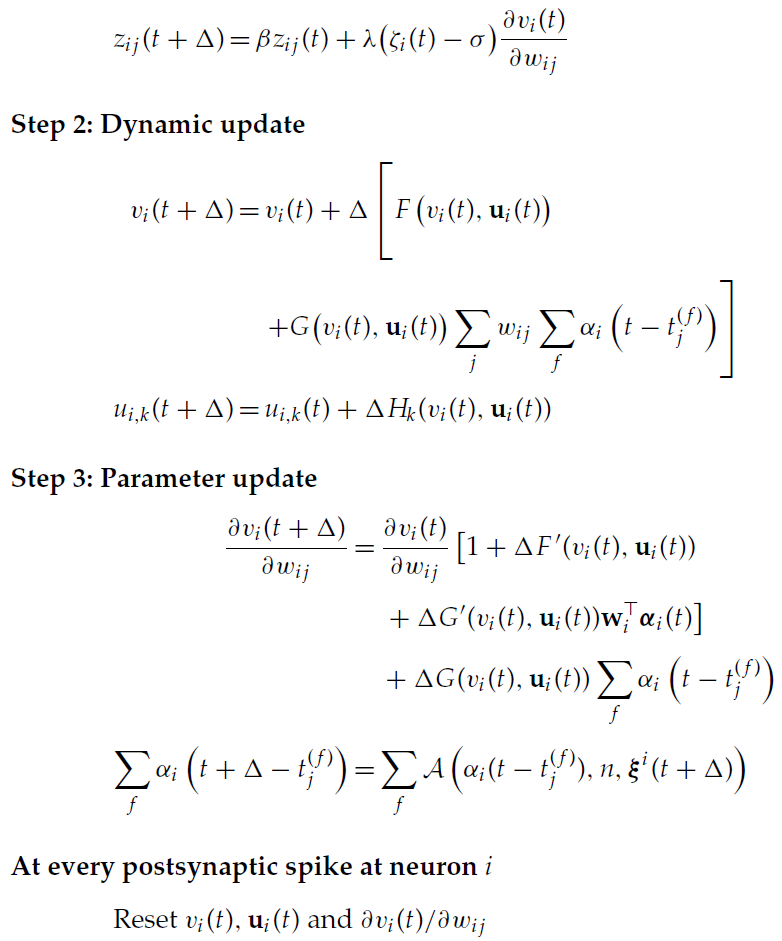

Algorithm 1: Synaptic Update Rule for a Generalized Neuronal Model

3.1 Explicit Calculation of the Update Rules for Different α Functions.

3.1.1 Demonstration for α(s) = qδ(s).

3.1.2 Demonstration for α(s) = ![]() .

.

3.2 Depressing Synapses.

4 Simulation Results

5 Relation to the BCM Rule

6 Discussion

Appendix A: Computing Expectations

A.1 Expectation with Respect to the Postsynaptic Spike Train.

A.2 Expectation with Respect to the Presynaptic Spike Train.

Appendix B: Simulation Details

Appendix C: Technical Derivations

C.1 Decaying Exponential α Function.

C.2 Depressing Synapses.

Case 1: No Presynaptic Spike Occurred Since the Last Postsynaptic Spike.

Case 2: At Least One Presynaptic Spike Occurred Since the Last Postsynaptic Spike.

C.3 MDPs, POMDPs

C.3.1 MDPs.