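My last attempt at image stitching still was not good enough: the result had ghosting. So I have been thinking about how to fix it.

I then found that image blending based on the Laplacian pyramid can handle this. After that I came across the idea of an optimal seam line, and found that newer versions of OpenCV ship with it. But how do I actually use it?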

http://blog.csdn.net/wd1603926823/article/details/49536691

http://blog.csdn.net/hanshuning/article/details/41960401

http://blog.csdn.net/manji_lee/article/details/9002228

Still not solved. The plan:

1 Find the optimal seam-line mask

2 Apply Laplacian pyramid (multi-resolution) blending
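To make step 2 concrete, here is a minimal one-dimensional sketch of Laplacian pyramid blending. It is not OpenCV code: `downsample`, `upsample` and `blendLaplacian` are hypothetical helper names, the low-pass filter is a crude pairwise average, and the mask plays the role of the seam mask from step 1 (1 keeps the first signal, 0 keeps the second). A real image blender would do the same thing in 2D with proper Gaussian kernels.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Signal = std::vector<double>;

// Halve the length by averaging neighbouring pairs (crude low-pass + decimate).
Signal downsample(const Signal& s) {
    Signal out((s.size() + 1) / 2);
    for (std::size_t i = 0; i < out.size(); ++i) {
        std::size_t a = 2 * i;
        std::size_t b = (a + 1 < s.size()) ? a + 1 : a;
        out[i] = 0.5 * (s[a] + s[b]);
    }
    return out;
}

// Expand back to n samples by repeating each coarse sample.
Signal upsample(const Signal& s, std::size_t n) {
    Signal out(n);
    for (std::size_t i = 0; i < n; ++i) out[i] = s[i / 2];
    return out;
}

// Gaussian pyramid: level 0 is the input, each further level is a downsampled copy.
std::vector<Signal> gaussianPyramid(const Signal& s, int levels) {
    std::vector<Signal> pyr{s};
    for (int k = 1; k < levels; ++k) pyr.push_back(downsample(pyr.back()));
    return pyr;
}

// Laplacian pyramid: each level stores the detail lost by downsampling;
// the last level keeps the coarsest Gaussian level unchanged.
std::vector<Signal> laplacianPyramid(const Signal& s, int levels) {
    std::vector<Signal> g = gaussianPyramid(s, levels);
    std::vector<Signal> lap(levels);
    for (int k = 0; k + 1 < levels; ++k) {
        Signal up = upsample(g[k + 1], g[k].size());
        lap[k].resize(g[k].size());
        for (std::size_t i = 0; i < g[k].size(); ++i) lap[k][i] = g[k][i] - up[i];
    }
    lap[levels - 1] = g[levels - 1];
    return lap;
}

// Blend a and b band by band: mask = 1 keeps a, mask = 0 keeps b.
Signal blendLaplacian(const Signal& a, const Signal& b, const Signal& mask, int levels) {
    assert(levels >= 1 && a.size() == b.size() && a.size() == mask.size());
    std::vector<Signal> la = laplacianPyramid(a, levels);
    std::vector<Signal> lb = laplacianPyramid(b, levels);
    std::vector<Signal> gm = gaussianPyramid(mask, levels);  // smoothed mask per level

    Signal acc;  // running reconstruction, built from the coarsest level up
    for (int k = levels - 1; k >= 0; --k) {
        Signal band(la[k].size());
        for (std::size_t i = 0; i < band.size(); ++i)
            band[i] = gm[k][i] * la[k][i] + (1.0 - gm[k][i]) * lb[k][i];
        if (k < levels - 1) {
            Signal up = upsample(acc, band.size());
            for (std::size_t i = 0; i < band.size(); ++i) band[i] += up[i];
        }
        acc = band;
    }
    return acc;
}
```

The point of the design is that the hard seam mask is smoothed more and more at coarser levels, so low frequencies (overall brightness) are mixed over a wide region while fine detail is switched close to the seam. That is what suppresses both visible seams and ghosting.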

OpenCV's built-in Stitcher seems to require version 2.4 or later. The code:

```cpp
#include "stdafx.h"
#include <cstdlib>
#include <iostream>
#include <fstream>
#include <string>
#include "opencv2/opencv_modules.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/stitching/detail/autocalib.hpp"
#include "opencv2/stitching/detail/blenders.hpp"
#include "opencv2/stitching/detail/camera.hpp"
#include "opencv2/stitching/detail/exposure_compensate.hpp"
#include "opencv2/stitching/detail/matchers.hpp"
#include "opencv2/stitching/detail/motion_estimators.hpp"
#include "opencv2/stitching/detail/seam_finders.hpp"
#include "opencv2/stitching/detail/util.hpp"
#include "opencv2/stitching/detail/warpers.hpp"
#include "opencv2/stitching/warpers.hpp"
#include <opencv2/stitching/stitcher.hpp>
#include <time.h>
using namespace std;
using namespace cv;
using namespace cv::detail;

// Parameters
bool try_use_gpu = false;
vector<Mat> imgs;
string result_name = "result.jpg";

int main()
{
    Mat img = imread("1.jpg");
    imgs.push_back(img);
    img = imread("2.jpg");
    imgs.push_back(img);

    Mat pano;
    Stitcher stitcher = Stitcher::createDefault(try_use_gpu);
    Stitcher::Status status = stitcher.stitch(imgs, pano);

    if (status != Stitcher::OK)
    {
        cout << "Can't stitch images, error code = " << int(status) << endl;
        return -1;
    }

    imwrite(result_name, pano);
    system("pause");  // Windows only: keep the console window open
    return 0;
}
```

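This is the point-and-shoot version: none of the settings can be changed.

So I switched to another version whose settings can be changed: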

```cpp
#include "stdafx.h"
#include <cstdlib>
#include <iostream>
#include <fstream>
#include <string>
#include "opencv2/opencv_modules.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/stitching/detail/autocalib.hpp"
#include "opencv2/stitching/detail/blenders.hpp"
#include "opencv2/stitching/detail/camera.hpp"
#include "opencv2/stitching/detail/exposure_compensate.hpp"
#include "opencv2/stitching/detail/matchers.hpp"
#include "opencv2/stitching/detail/motion_estimators.hpp"
#include "opencv2/stitching/detail/seam_finders.hpp"
#include "opencv2/stitching/detail/util.hpp"
#include <opencv2/stitching/warpers.hpp>
#include <opencv2/stitching/stitcher.hpp>
#include <time.h>
using namespace std;
using namespace cv;
using namespace cv::detail;

// Parameters
bool try_use_gpu = false;
vector<Mat> imgs;
string result_name = "result.jpg";

int main()
{
    cout << "Program started" << endl;
    clock_t start, finish;
    double totaltime;
    start = clock();
    int filenum = 2;  // number of images actually used from the list below
    const char* fdir[] = { "1.jpg", "2.jpg", "3.jpg", "4.jpg", "5.jpg" };
    Mat img, pano;
    for (int i = 0; i < filenum; i++) {
        img = imread(fdir[i]);
        imgs.push_back(img);
    }
    Stitcher stitcher = Stitcher::createDefault(try_use_gpu);
    // Registration resolution, used in the feature detection stage. The default is 0.6
    // and 1 is the maximum (slowest). I tried 0.1 for speed; raise it if no features are found.
    stitcher.setRegistrationResol(0.6);
    //stitcher.setSeamEstimationResol(0.1);  // default is 0.1
    //stitcher.setCompositingResol(-1);      // default is -1 (Stitcher::ORIG_RESOL): composite at the original resolution
    stitcher.setPanoConfidenceThresh(1);     // default is 1; I have also seen 0.6 and 0.4 used
    stitcher.setWaveCorrection(false);       // default is true; false skips the wave correction step to save time
    // Wave correction kind (horizontal or vertical); detail::WAVE_CORRECT_VERT is the other option.
    // This call has no effect here because setWaveCorrection(false) was used above.
    //stitcher.setWaveCorrectKind(detail::WAVE_CORRECT_HORIZ);

    // SURF feature finder: computationally heavy, but fairly stable under rigid motion,
    // scaling and changes in the environment
    detail::SurfFeaturesFinder *featureFinder = new detail::SurfFeaturesFinder();
    stitcher.setFeaturesFinder(featureFinder);

    // ORB feature finder: on my set of grass images this one could not complete the stitch
    //detail::OrbFeaturesFinder *featureFinder = new detail::OrbFeaturesFinder();
    //stitcher.setFeaturesFinder(featureFinder);

    // Features matcher which finds two best matches for each feature and leaves the best one
    // only if the ratio between descriptor distances is greater than the threshold match_conf.
    // match_conf: the default is 0.65; I tried 0.8, but too large a value leaves no matches
    // (0.8 already failed), so 0.5 is used here.
    detail::BestOf2NearestMatcher *matcher = new detail::BestOf2NearestMatcher(false, 0.5f);
    stitcher.setFeaturesMatcher(matcher);

    // Rotation estimation: takes the features of all images and the pairwise matches
    // between all images, and estimates the rotations of all cameras.
    // BundleAdjusterRay minimizes the sum of distances between the rays passing through
    // the camera center and a feature. This one is faster.
    stitcher.setBundleAdjuster(new detail::BundleAdjusterRay());
    // BundleAdjusterReproj minimizes the sum of squared reprojection errors.
    //stitcher.setBundleAdjuster(new detail::BundleAdjusterReproj());

    // Seam estimation
    // Minimum graph-cut based seam estimator (this is the default)
    //stitcher.setSeamFinder(new detail::GraphCutSeamFinder(detail::GraphCutSeamFinderBase::COST_COLOR));
    // Second variant of GraphCutSeamFinder
    //stitcher.setSeamFinder(new detail::GraphCutSeamFinder(detail::GraphCutSeamFinderBase::COST_COLOR_GRAD));
    // No seam finder at all: stub seam estimator which does nothing
    stitcher.setSeamFinder(new detail::NoSeamFinder);
    // Voronoi diagram-based seam estimator
    //stitcher.setSeamFinder(new detail::VoronoiSeamFinder);

    // Exposure compensators
    //stitcher.setExposureCompensator(new detail::BlocksGainCompensator());  // this is the default
    // No exposure compensation
    stitcher.setExposureCompensator(new detail::NoExposureCompensator());
    // Removes exposure-related artifacts by adjusting image intensities
    //stitcher.setExposureCompensator(new detail::GainCompensator());
    // Removes exposure-related artifacts by adjusting image block intensities
    //stitcher.setExposureCompensator(new detail::BlocksGainCompensator());

    // Image blenders
    // Multi-band blending (this is the default)
    stitcher.setBlender(new detail::MultiBandBlender(try_use_gpu));
    // Simple blender which mixes images at their borders; simpler and faster
    //stitcher.setBlender(new detail::FeatherBlender());

    // Cylindrical, spherical or planar projection? The default is spherical.
    //PlaneWarper* cw = new PlaneWarper();
    //SphericalWarper* cw = new SphericalWarper();
    //CylindricalWarper* cw = new CylindricalWarper();
    //stitcher.setWarper(cw);

    Stitcher::Status status = stitcher.estimateTransform(imgs);
    if (status != Stitcher::OK)
    {
        cout << "Can't stitch images, error code = " << int(status) << endl;
        return -1;
    }
    status = stitcher.composePanorama(pano);
    if (status != Stitcher::OK)
    {
        cout << "Can't stitch images, error code = " << int(status) << endl;
        return -1;
    }
    imwrite(result_name, pano);
    finish = clock();
    totaltime = (double)(finish - start) / CLOCKS_PER_SEC;
    cout << "Total running time: " << totaltime << " s" << endl;
    system("pause");  // Windows only: keep the console window open
    return 0;
}
```

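This version lets you configure some things, such as the blending method.

My problem now, though, is this: given that I already know the stitching offset between the images, how do I apply illumination/exposure compensation and multi-resolution blending?

Another approach is to break the OpenCV stitching pipeline into its individual steps: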
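As a rough illustration of what exposure compensation does once the offset is known, the sketch below matches the mean intensity of two grayscale images over their overlap. This is a deliberately simplified stand-in, not OpenCV's compensators, which handle any number of images (and per-block gains in the blocks variant). The `Gray` struct, `overlapGain`, `applyGain` and the offset parameter `dx` are all hypothetical names, and only a purely horizontal offset is assumed.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal grayscale image: row-major, values in 0..255.
struct Gray {
    int w, h;
    std::vector<double> px;
    double at(int x, int y) const { return px[static_cast<std::size_t>(y) * w + x]; }
};

// Gain g such that g * mean(b over overlap) equals mean(a over overlap).
// Assumption: column 0 of b coincides with column dx of a (known horizontal offset).
double overlapGain(const Gray& a, const Gray& b, int dx) {
    assert(a.h == b.h && dx >= 0 && dx < a.w);
    int overlapWidth = std::min(a.w - dx, b.w);
    double sumA = 0.0, sumB = 0.0;
    for (int y = 0; y < a.h; ++y) {
        for (int x = 0; x < overlapWidth; ++x) {
            sumA += a.at(dx + x, y);
            sumB += b.at(x, y);
        }
    }
    return sumB > 0.0 ? sumA / sumB : 1.0;  // leave b unchanged if the overlap is black
}

// Scale every pixel by the gain, clamping to the 8-bit range.
void applyGain(Gray& img, double gain) {
    for (double& p : img.px) p = std::min(255.0, p * gain);
}
```

After `applyGain(b, overlapGain(a, b, dx))` the two images have the same average brightness in the overlap, so the blending step only has to hide the seam rather than a brightness jump.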

```cpp
#include "stdafx.h"
#include <algorithm>
#include <cmath>
#include <cstdlib>
#include <iostream>
#include <fstream>
#include <string>
#include <vector>
#include "opencv2/opencv_modules.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc.hpp"
#include "opencv2/stitching/detail/autocalib.hpp"
#include "opencv2/stitching/detail/blenders.hpp"
#include "opencv2/stitching/detail/camera.hpp"
#include "opencv2/stitching/detail/exposure_compensate.hpp"
#include "opencv2/stitching/detail/matchers.hpp"
#include "opencv2/stitching/detail/motion_estimators.hpp"
#include "opencv2/stitching/detail/seam_finders.hpp"
#include "opencv2/stitching/detail/util.hpp"
#include "opencv2/stitching/detail/warpers.hpp"
#include "opencv2/stitching/warpers.hpp"
#include <time.h>
using namespace std;
using namespace cv;
using namespace cv::detail;

// Parameters
vector<string> img_names;        // input image file names
bool try_gpu = false;
double work_megapix = 1;         // registration resolution: images are shrunk to at most work_megapix * 10^6 pixels
double seam_megapix = 0.1;       // resolution used for seam estimation, in megapixels
double compose_megapix = 0.6;    // resolution used for compositing, in megapixels
float conf_thresh = 1.f;         // confidence threshold that two images belong to the same panorama
WaveCorrectKind wave_correct = detail::WAVE_CORRECT_HORIZ;  // wave correction, horizontal
int expos_comp_type = ExposureCompensator::GAIN_BLOCKS;     // exposure compensation method, gain_blocks by default
float match_conf = 0.65f;        // match confidence: ratio of nearest to second-nearest match distance; 0.65 is the default for SURF
int blend_type = Blender::MULTI_BAND;  // blending method, multi-band by default
float blend_strength = 5;        // blending strength, 0 - 100, default 5
string result_name = "result.jpg";     // output file name

int main()
{
    clock_t start, finish;
    double totaltime;
    start = clock();

    int argc = 2;  // number of images actually used from the list below
    const char* argv[] = { "1.jpg", "2.jpg", "3.jpg", "4.jpg", "5.jpg", "6.jpg", "7.jpg" };
    for (int i = 0; i < argc; ++i)
        img_names.push_back(argv[i]);
    int num_images = static_cast<int>(img_names.size());
    double work_scale = 1, seam_scale = 1, compose_scale = 1;
    // Each scale is computed once, from the first image, as in OpenCV's stitching_detailed sample
    bool is_work_scale_set = false, is_seam_scale_set = false, is_compose_scale_set = false;

    // Feature detection: rescale each image, then compute its keypoints and descriptors
    cout << "Finding features..." << endl;
    Ptr<FeaturesFinder> finder;
    finder = new SurfFeaturesFinder();  // SURF feature detection
    Mat full_img1, full_img, img;
    vector<ImageFeatures> features(num_images);
    vector<Mat> images(num_images);
    vector<Size> full_img_sizes(num_images);
    double seam_work_aspect = 1;

    for (int i = 0; i < num_images; ++i)
    {
        full_img1 = imread(img_names[i]);
        if (full_img1.empty())
        {
            cout << "Can't open image " << img_names[i] << endl;
            return -1;
        }
        resize(full_img1, full_img, Size(full_img1.cols, full_img1.rows));  // same size, effectively a copy
        full_img_sizes[i] = full_img.size();

        // Compute work_scale so that the image area is at most work_megapix * 10^6 pixels
        if (!is_work_scale_set)
        {
            work_scale = min(1.0, sqrt(work_megapix * 1e6 / full_img.size().area()));
            is_work_scale_set = true;
        }
        resize(full_img, img, Size(), work_scale, work_scale);

        // Compute seam_scale so that the image area is at most seam_megapix * 10^6 pixels
        if (!is_seam_scale_set)
        {
            seam_scale = min(1.0, sqrt(seam_megapix * 1e6 / full_img.size().area()));
            seam_work_aspect = seam_scale / work_scale;
            is_seam_scale_set = true;
        }

        // Compute keypoints and descriptors, and set img_idx to i
        (*finder)(img, features[i]);
        features[i].img_idx = i;
        cout << "Features in image #" << i + 1 << ": " << features[i].keypoints.size() << endl;

        // Resize the source image to seam_megapix * 10^6 pixels and store it in images[]
        resize(full_img, img, Size(), seam_scale, seam_scale);
        images[i] = img.clone();
    }

    finder->collectGarbage();
    full_img.release();
    img.release();

    // Pairwise matching
    cout << "Pairwise matching" << endl;
    // Match features between every pair of images using the nearest and second-nearest neighbours
    vector<MatchesInfo> pairwise_matches;
    BestOf2NearestMatcher matcher(try_gpu, match_conf);
    matcher(features, pairwise_matches);
    matcher.collectGarbage();

    // Keep only the images whose match confidence is above the threshold,
    // i.e. those we are sure belong to the same panorama
    vector<int> indices = leaveBiggestComponent(features, pairwise_matches, conf_thresh);
    vector<Mat> img_subset;
    vector<string> img_names_subset;
    vector<Size> full_img_sizes_subset;
    for (size_t i = 0; i < indices.size(); ++i)
    {
        img_names_subset.push_back(img_names[indices[i]]);
        img_subset.push_back(images[indices[i]]);
        full_img_sizes_subset.push_back(full_img_sizes[indices[i]]);
    }
    images = img_subset;
    img_names = img_names_subset;
    full_img_sizes = full_img_sizes_subset;

    // Check that we still have enough images
    num_images = static_cast<int>(img_names.size());
    if (num_images < 2)
    {
        cout << "Need more images" << endl;
        return -1;
    }

    HomographyBasedEstimator estimator;  // homography-based estimator
    vector<CameraParams> cameras;        // camera parameters
    estimator(features, pairwise_matches, cameras);

    for (size_t i = 0; i < cameras.size(); ++i)
    {
        Mat R;
        cameras[i].R.convertTo(R, CV_32F);
        cameras[i].R = R;
        cout << "Initial intrinsics #" << indices[i] + 1 << ":\n" << cameras[i].K() << endl;
    }

    // Refine the camera parameters of all images with bundle adjustment
    Ptr<detail::BundleAdjusterBase> adjuster;
    adjuster = new detail::BundleAdjusterRay();
    adjuster->setConfThresh(conf_thresh);  // confidence threshold
    Mat_<uchar> refine_mask = Mat::zeros(3, 3, CV_8U);
    refine_mask(0, 0) = 1;
    refine_mask(0, 1) = 1;
    refine_mask(0, 2) = 1;
    refine_mask(1, 1) = 1;
    refine_mask(1, 2) = 1;
    adjuster->setRefinementMask(refine_mask);
    (*adjuster)(features, pairwise_matches, cameras);  // run the refinement

    // Collect the estimated focal lengths and use their median as the warping scale
    vector<double> focals;
    for (size_t i = 0; i < cameras.size(); ++i)
    {
        cout << "Camera #" << indices[i] + 1 << ":\n" << cameras[i].K() << endl;
        focals.push_back(cameras[i].focal);
    }
    sort(focals.begin(), focals.end());
    float warped_image_scale;
    if (focals.size() % 2 == 1)
        warped_image_scale = static_cast<float>(focals[focals.size() / 2]);
    else
        warped_image_scale = static_cast<float>(focals[focals.size() / 2 - 1] + focals[focals.size() / 2]) * 0.5f;

    // Wave correction
    vector<Mat> rmats;
    for (size_t i = 0; i < cameras.size(); ++i)
        rmats.push_back(cameras[i].R);
    waveCorrect(rmats, wave_correct);
    for (size_t i = 0; i < cameras.size(); ++i)
        cameras[i].R = rmats[i];

    cout << "Warping images ... " << endl;
    vector<Point> corners(num_images);  // top-left corners in the common coordinate frame
    vector<Mat> masks_warped(num_images);
    vector<Mat> images_warped(num_images);
    vector<Size> sizes(num_images);
    vector<Mat> masks(num_images);      // blending masks

    // Prepare the blending masks
    for (int i = 0; i < num_images; ++i)
    {
        masks[i].create(images[i].size(), CV_8U);
        masks[i].setTo(Scalar::all(255));
    }

    // Warp the images and their masks
    Ptr<WarperCreator> warper_creator;
    warper_creator = new cv::SphericalWarper();
    Ptr<RotationWarper> warper = warper_creator->create(static_cast<float>(warped_image_scale * seam_work_aspect));
    for (int i = 0; i < num_images; ++i)
    {
        Mat_<float> K;
        cameras[i].K().convertTo(K, CV_32F);
        float swa = (float)seam_work_aspect;
        K(0, 0) *= swa; K(0, 2) *= swa;
        K(1, 1) *= swa; K(1, 2) *= swa;
        // Warp the image; the return value is its top-left corner in the common frame
        corners[i] = warper->warp(images[i], K, cameras[i].R, INTER_LINEAR, BORDER_REFLECT, images_warped[i]);
        sizes[i] = images_warped[i].size();
        // Warp the mask of the current image
        warper->warp(masks[i], K, cameras[i].R, INTER_NEAREST, BORDER_CONSTANT, masks_warped[i]);
    }

    vector<Mat> images_warped_f(num_images);
    for (int i = 0; i < num_images; ++i)
        images_warped[i].convertTo(images_warped_f[i], CV_32F);

    // Create the exposure compensator (gain_blocks) and feed it the warped images
    Ptr<ExposureCompensator> compensator = ExposureCompensator::createDefault(expos_comp_type);
    compensator->feed(corners, images_warped, masks_warped);

    // Find the seams
    Ptr<SeamFinder> seam_finder;
    seam_finder = new detail::GraphCutSeamFinder(GraphCutSeamFinderBase::COST_COLOR);
    seam_finder->find(images_warped_f, corners, masks_warped);

    //namedWindow("images_warped", 0);
    //imshow("images_warped", images_warped[0]);
    //namedWindow("masks_warped", 0);
    //imshow("masks_warped", masks_warped[0]);

    // Release memory that is no longer needed
    images.clear();
    images_warped.clear();
    images_warped_f.clear();
    masks.clear();

    // Compositing (blending)
    cout << "Compositing..." << endl;
    Mat img_warped, img_warped_s;
    Mat dilated_mask, seam_mask, mask, mask_warped;
    Ptr<Blender> blender;
    double compose_work_aspect = 1;

    for (int img_idx = 0; img_idx < num_images; ++img_idx)
    {
        cout << "Compositing image #" << indices[img_idx] + 1 << endl;

        // Everything so far was computed at work_scale, so the camera intrinsics,
        // the corners and the masks all have to be recomputed for the compose scale.
        // Read the image and resize it if necessary
        full_img1 = imread(img_names[img_idx]);
        resize(full_img1, full_img, Size(full_img1.cols, full_img1.rows));

        // Do this only once; repeating it would rescale the cameras on every iteration
        if (!is_compose_scale_set)
        {
            compose_scale = min(1.0, sqrt(compose_megapix * 1e6 / full_img.size().area()));
            is_compose_scale_set = true;
            compose_work_aspect = compose_scale / work_scale;

            // Update the warped image scale
            warped_image_scale *= static_cast<float>(compose_work_aspect);
            warper = warper_creator->create(warped_image_scale);

            // Update corners and sizes
            for (int i = 0; i < num_images; ++i)
            {
                // Update the camera intrinsics
                cameras[i].focal *= compose_work_aspect;
                cameras[i].ppx *= compose_work_aspect;
                cameras[i].ppy *= compose_work_aspect;

                Size sz = full_img_sizes[i];
                if (std::abs(compose_scale - 1) > 1e-1)
                {
                    sz.width = cvRound(full_img_sizes[i].width * compose_scale);
                    sz.height = cvRound(full_img_sizes[i].height * compose_scale);
                }
                Mat K;
                cameras[i].K().convertTo(K, CV_32F);
                Rect roi = warper->warpRoi(sz, K, cameras[i].R);
                corners[i] = roi.tl();
                sizes[i] = roi.size();
            }
        }

        if (std::abs(compose_scale - 1) > 1e-1)
            resize(full_img, img, Size(), compose_scale, compose_scale);
        else
            img = full_img;
        full_img.release();
        Size img_size = img.size();

        Mat K;
        cameras[img_idx].K().convertTo(K, CV_32F);

        // Warp the current image
        warper->warp(img, K, cameras[img_idx].R, INTER_LINEAR, BORDER_REFLECT, img_warped);

        // Warp the mask of the current image
        mask.create(img_size, CV_8U);
        mask.setTo(Scalar::all(255));
        warper->warp(mask, K, cameras[img_idx].R, INTER_NEAREST, BORDER_CONSTANT, mask_warped);

        // Exposure compensation
        compensator->apply(img_idx, corners[img_idx], img_warped, mask_warped);
        img_warped.convertTo(img_warped_s, CV_16S);
        img_warped.release();
        img.release();
        mask.release();

        // Scale the seam mask up to the compose resolution and combine it with the warped mask
        dilate(masks_warped[img_idx], dilated_mask, Mat());
        resize(dilated_mask, seam_mask, mask_warped.size());
        mask_warped = seam_mask & mask_warped;

        // Initialize the blender on first use
        if (blender.empty())
        {
            blender = Blender::createDefault(blend_type, try_gpu);
            Size dst_sz = resultRoi(corners, sizes).size();
            float blend_width = sqrt(static_cast<float>(dst_sz.area())) * blend_strength / 100.f;
            if (blend_width < 1.f)
                blender = Blender::createDefault(Blender::NO, try_gpu);
            else
            {
                MultiBandBlender* mb = dynamic_cast<MultiBandBlender*>(static_cast<Blender*>(blender));
                mb->setNumBands(static_cast<int>(ceil(log(blend_width) / log(2.)) - 1.));
                cout << "Multi-band blender, number of bands: " << mb->numBands() << endl;
            }
            // The final panorama size is determined from the corners and the image sizes
            blender->prepare(corners, sizes);
        }

        // Blend the current image
        blender->feed(img_warped_s, mask_warped, corners[img_idx]);
        //blender->feed(img, mask, corners[img_idx]);
    }

    Mat result, result_mask;
    blender->blend(result, result_mask);
    imwrite(result_name, result);

    // The blender output is typically 16-bit signed, so convert it for display
    Mat result_8u;
    result.convertTo(result_8u, CV_8U);
    namedWindow("result", 0);
    imshow("result", result_8u);

    finish = clock();
    totaltime = (double)(finish - start) / CLOCKS_PER_SEC;
    cout << "\nTotal running time: " << totaltime << " s" << endl;

    waitKey(0);
    system("pause");  // Windows only: keep the console window open
    return 0;
}
```
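Three small computations in the program above are easy to lose among the OpenCV calls, so here they are pulled out as plain functions: the megapixel-based scale factor, the median focal length used as the warping scale, and the number of blending bands. This is only a sketch using the standard library; the function names `scaleForMegapix`, `medianFocal` and `numBands` are hypothetical and do not exist in OpenCV.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Scale factor that shrinks a width x height image to at most `megapix`
// megapixels. It never upscales, so the result is capped at 1.0.
double scaleForMegapix(double megapix, int width, int height) {
    assert(megapix > 0 && width > 0 && height > 0);
    double area = static_cast<double>(width) * height;
    return std::min(1.0, std::sqrt(megapix * 1e6 / area));
}

// Median of the estimated focal lengths; for an even count this is the
// mean of the two middle values, as in the program above.
double medianFocal(std::vector<double> focals) {
    assert(!focals.empty());
    std::sort(focals.begin(), focals.end());
    std::size_t n = focals.size();
    return n % 2 == 1 ? focals[n / 2] : 0.5 * (focals[n / 2 - 1] + focals[n / 2]);
}

// Number of pyramid bands derived from the panorama size and the blend
// strength (0..100). Returns 0 when the blend width is below one pixel,
// which is the case where the program falls back to Blender::NO.
int numBands(int panoWidth, int panoHeight, double blendStrength) {
    double side = std::sqrt(static_cast<double>(panoWidth) * panoHeight);
    double blendWidth = side * blendStrength / 100.0;
    if (blendWidth < 1.0) return 0;
    return static_cast<int>(std::ceil(std::log(blendWidth) / std::log(2.0)) - 1.0);
}
```

For example, a 2000 x 2000 input with `work_megapix = 1` gives a scale of 0.5, and a 2000 x 2000 panorama with `blend_strength = 5` gives a blend width of 100 pixels and therefore 6 bands.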

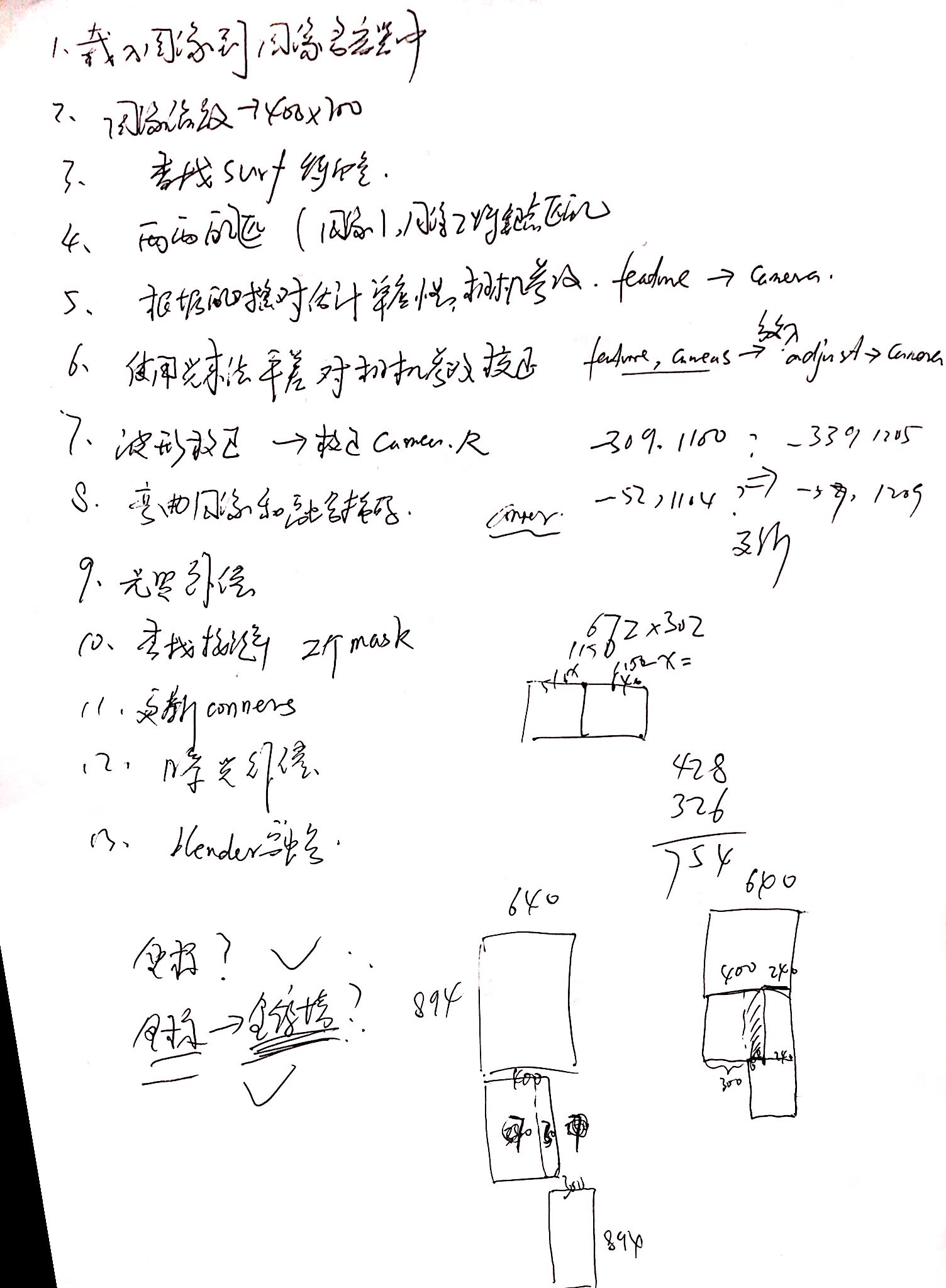

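The approach above still leaves me with two open questions:

1 How is the top-left point in corners obtained?

2 Given the top-left points, if I find the optimal seam with graph cut and then apply Laplacian pyramid blending, can that produce a seamless result?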
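On the first question: in the program above, `corners[i]` is the value returned by `warper->warp(...)`, and later `roi.tl()` from `warper->warpRoi(...)`. In other words, it is the top-left corner of the bounding box of the warped image, expressed in the coordinate frame shared by all images. The sketch below shows the idea for the simplest case, a planar warp described by a 3x3 homography. It is an assumption-laden illustration: `Mat3`, `project` and `warpedRoi` are hypothetical names, and the spherical warper used above projects through K and R onto a sphere instead, although the bounding-box idea is the same.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

struct Pt { int x, y; };
struct RectI { Pt tl; int width, height; };  // hypothetical integer rectangle

using Mat3 = std::array<double, 9>;  // 3x3 homography, row-major

// Map pixel (x, y) through the homography H.
void project(const Mat3& H, double x, double y, double& u, double& v) {
    double w = H[6] * x + H[7] * y + H[8];
    assert(std::abs(w) > 1e-12);  // assumes the point does not map to infinity
    u = (H[0] * x + H[1] * y + H[2]) / w;
    v = (H[3] * x + H[4] * y + H[5]) / w;
}

// Bounding box of a width x height image after warping by H. For a planar
// warp the four image corners are enough, provided w keeps the same sign
// over the whole image.
RectI warpedRoi(const Mat3& H, int width, int height) {
    const double xs[4] = {0.0, width - 1.0, 0.0, width - 1.0};
    const double ys[4] = {0.0, 0.0, height - 1.0, height - 1.0};
    double minU = 1e300, minV = 1e300, maxU = -1e300, maxV = -1e300;
    for (int i = 0; i < 4; ++i) {
        double u, v;
        project(H, xs[i], ys[i], u, v);
        minU = std::min(minU, u); maxU = std::max(maxU, u);
        minV = std::min(minV, v); maxV = std::max(maxV, v);
    }
    Pt tl{static_cast<int>(std::floor(minU)), static_cast<int>(std::floor(minV))};
    int brX = static_cast<int>(std::ceil(maxU));
    int brY = static_cast<int>(std::ceil(maxV));
    return RectI{tl, brX - tl.x + 1, brY - tl.y + 1};
}
```

With a pure translation by (100, -20), a 640 x 480 image gets the top-left corner (100, -20) and keeps its size. This is why the seam finder and the blender in the program both take `corners`: each warped image is placed on the panorama canvas at its own top-left point.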