6-1 Three ways to build a model

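A model can be built in three ways:

- stacking layers in order with Sequential;

- building a model of arbitrary structure with the functional API;

- subclassing the Model base class to define a custom model.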

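For models with a purely sequential structure, prefer Sequential.

If the model has multiple inputs or outputs, shares weights between layers, or has a non-sequential structure such as residual connections, use the functional API.

Unless there is a specific need, avoid building models by subclassing Model. This approach offers the most flexibility, but it is also the most likely to introduce errors.

The IMDB movie-review classification problem is used below to demonstrate all three approaches.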

```python
import tensorflow as tf
from tensorflow.keras import callbacks, layers, models

train_token_path = "../一、TensorFlow的建模流程/data/imdb/train_token.csv"
test_token_path = "../一、TensorFlow的建模流程/data/imdb/test_token.csv"

MAX_WORDS = 10000  # We will only consider the top 10000 words in the dataset
MAX_LEN = 200  # We will cut reviews after 200 words
BATCH_SIZE = 20

# Build the input pipeline
def parse_line(line):
    # Each line holds "<label>\t<space-separated token ids>"
    t = tf.strings.split(line, "\t")
    label = tf.reshape(tf.cast(tf.strings.to_number(t[0]), tf.int32), (-1,))
    features = tf.cast(tf.strings.to_number(tf.strings.split(t[1], " ")), tf.int32)
    return features, label

ds_train = tf.data.TextLineDataset(filenames=[train_token_path]) \
    .map(parse_line, num_parallel_calls=tf.data.experimental.AUTOTUNE) \
    .shuffle(buffer_size=1000).batch(BATCH_SIZE) \
    .prefetch(tf.data.experimental.AUTOTUNE)

ds_test = tf.data.TextLineDataset(filenames=[test_token_path]) \
    .map(parse_line, num_parallel_calls=tf.data.experimental.AUTOTUNE) \
    .shuffle(buffer_size=1000).batch(BATCH_SIZE) \
    .prefetch(tf.data.experimental.AUTOTUNE)
```

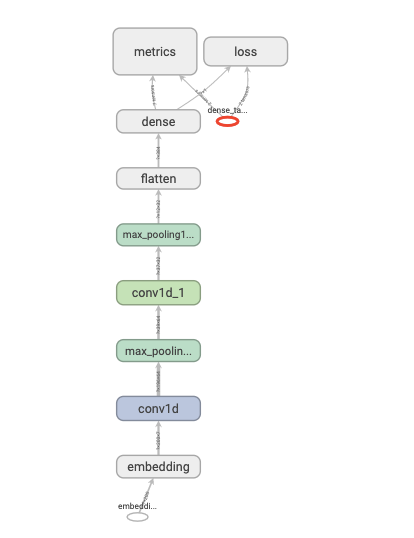
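`parse_line` expects each line of the token files to contain a label, a tab, and then the review's token ids separated by spaces. The pipeline calls `batch` and not `padded_batch`, which only works if all elements have the same shape. Every line therefore presumably already holds exactly `MAX_LEN` ids, padded or truncated in an earlier preprocessing step. The NumPy-only sketch below reproduces what `parse_line` does to one line. `parse_line_np` and the sample line are hypothetical and exist only for illustration.

```python
import numpy as np

MAX_LEN = 200

def parse_line_np(line: str):
    """Hypothetical NumPy mirror of parse_line: 'label<TAB>id id id ...' -> (features, label)."""
    label_str, tokens_str = line.split("\t")
    # tf.strings.to_number parses to float32 by default; parse_line then casts to int32
    label = np.array([int(float(label_str))], dtype=np.int32)  # shape (1,), like tf.reshape(..., (-1,))
    features = np.array([float(s) for s in tokens_str.split(" ")]).astype(np.int32)
    return features, label

# Hypothetical sample line: label 1, then 200 token ids (left-padded with zeros)
sample = "1\t" + " ".join(["0"] * 195 + ["12", "7", "431", "9", "2"])
features, label = parse_line_np(sample)
print(features.shape, label)  # (200,) [1]
```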

Building a model layer by layer with Sequential

```python
tf.keras.backend.clear_session()

model = models.Sequential()
model.add(layers.Embedding(MAX_WORDS, 7, input_length=MAX_LEN))
model.add(layers.Conv1D(filters=64, kernel_size=5, activation="relu"))  # kernel_size: length of the 1D convolution window
model.add(layers.MaxPool1D(2))
model.add(layers.Conv1D(filters=32, kernel_size=3, activation="relu"))
model.add(layers.MaxPool1D(2))
model.add(layers.Flatten())
model.add(layers.Dense(1, activation="sigmoid"))

model.compile(optimizer='Nadam', loss="binary_crossentropy", metrics=['accuracy', 'AUC'])
model.summary()
```

```
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding (Embedding)        (None, 200, 7)            70000
_________________________________________________________________
conv1d (Conv1D)              (None, 196, 64)           2304
_________________________________________________________________
max_pooling1d (MaxPooling1D) (None, 98, 64)            0
_________________________________________________________________
conv1d_1 (Conv1D)            (None, 96, 32)            6176
_________________________________________________________________
max_pooling1d_1 (MaxPooling1 (None, 48, 32)            0
_________________________________________________________________
flatten (Flatten)            (None, 1536)              0
_________________________________________________________________
dense (Dense)                (None, 1)                 1537
=================================================================
Total params: 80,017
Trainable params: 80,017
Non-trainable params: 0
_________________________________________________________________
```
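A few formulas account for every shape and parameter count in this summary:

- An `Embedding` layer stores `vocab_size * dim` weights.
- A `Conv1D` layer with the default `valid` padding has `kernel_size * in_channels * filters + filters` parameters, and it shortens the sequence from `L` to `L - kernel_size + 1`.
- `MaxPool1D(p)` divides the length by `p`, rounding down.
- A `Dense` layer has `in_features * units + units` parameters.

The plain-Python check below rebuilds the table. The helper names `conv1d_params` and `dense_params` are our own and are not Keras functions.

```python
def conv1d_params(kernel_size, in_channels, filters):
    # one kernel_size x in_channels kernel per filter, plus one bias per filter
    return kernel_size * in_channels * filters + filters

def dense_params(in_features, units):
    # weight matrix plus one bias per unit
    return in_features * units + units

MAX_WORDS, EMB_DIM, MAX_LEN = 10000, 7, 200

params = {"embedding": MAX_WORDS * EMB_DIM}  # one 7-dim vector per word id

length = MAX_LEN
params["conv1d"] = conv1d_params(5, EMB_DIM, 64)
length = length - 5 + 1   # 'valid' Conv1D(kernel_size=5): 200 -> 196
length = length // 2      # MaxPool1D(2): 196 -> 98
params["conv1d_1"] = conv1d_params(3, 64, 32)
length = length - 3 + 1   # 98 -> 96
length = length // 2      # 96 -> 48
flat = length * 32        # Flatten: 48 steps * 32 channels = 1536
params["dense"] = dense_params(flat, 1)

print(params)
print("Total params:", sum(params.values()))  # Total params: 80017
```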

```python
import datetime

# BaseLogger is not used below; to try it, pass callbacks=[baselogger, tensorboard_callback]
baselogger = callbacks.BaseLogger(stateful_metrics=['AUC'])

logdir = "./data/keras_model/" + datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
tensorboard_callback = tf.keras.callbacks.TensorBoard(logdir, histogram_freq=1)

history = model.fit(ds_train, validation_data=ds_test, epochs=6, callbacks=[tensorboard_callback])
```

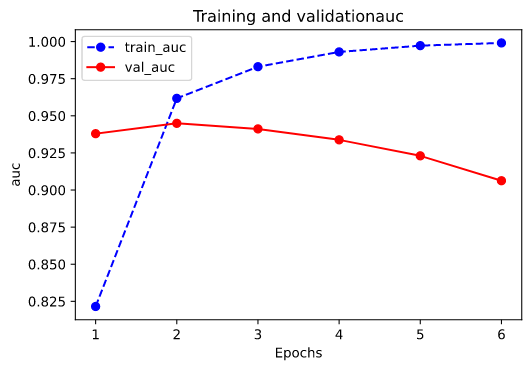

```
Epoch 1/6
1000/1000 [==============================] - 5s 4ms/step - loss: 0.4746 - accuracy: 0.7350 - auc: 0.8418 - val_loss: 0.3161 - val_accuracy: 0.8632 - val_auc: 0.9411
Epoch 2/6
1000/1000 [==============================] - 4s 4ms/step - loss: 0.2400 - accuracy: 0.9049 - auc: 0.9650 - val_loss: 0.3084 - val_accuracy: 0.8768 - val_auc: 0.9466
Epoch 3/6
1000/1000 [==============================] - 4s 4ms/step - loss: 0.1628 - accuracy: 0.9392 - auc: 0.9835 - val_loss: 0.3503 - val_accuracy: 0.8702 - val_auc: 0.9412
Epoch 4/6
1000/1000 [==============================] - 5s 5ms/step - loss: 0.1069 - accuracy: 0.9618 - auc: 0.9924 - val_loss: 0.4766 - val_accuracy: 0.8596 - val_auc: 0.9296
Epoch 5/6
1000/1000 [==============================] - 8s 8ms/step - loss: 0.0645 - accuracy: 0.9779 - auc: 0.9971 - val_loss: 0.6010 - val_accuracy: 0.8600 - val_auc: 0.9221
Epoch 6/6
1000/1000 [==============================] - 7s 7ms/step - loss: 0.0354 - accuracy: 0.9877 - auc: 0.9990 - val_loss: 0.7835 - val_accuracy: 0.8556 - val_auc: 0.9111
```

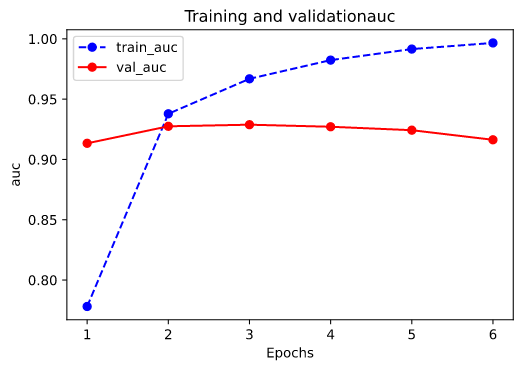

```python
# IPython/Jupyter magics: render plots inline as SVG
%matplotlib inline
%config InlineBackend.figure_format = 'svg'

import matplotlib.pyplot as plt

def plot_metric(history, metric):
    train_metrics = history.history[metric]
    val_metrics = history.history['val_' + metric]
    epochs = range(1, len(train_metrics) + 1)
    plt.plot(epochs, train_metrics, 'bo--')
    plt.plot(epochs, val_metrics, 'ro-')
    plt.title('Training and validation ' + metric)
    plt.xlabel("Epochs")
    plt.ylabel(metric)
    plt.legend(['train_' + metric, 'val_' + metric])
    plt.show()

plot_metric(history, "auc")
```
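`plot_metric` reads from `history.history`, a plain dict. It maps each metric name, plus its `val_`-prefixed counterpart, to a list holding one value per epoch. The sketch below copies the AUC values from the Sequential training log above into a dict laid out the same way and finds the epoch with the best validation AUC. `best_epoch` is a hypothetical helper, not part of Keras.

```python
# Values copied from the Sequential training log above; history.history has the same layout
history_dict = {
    "auc":     [0.8418, 0.9650, 0.9835, 0.9924, 0.9971, 0.9990],
    "val_auc": [0.9411, 0.9466, 0.9412, 0.9296, 0.9221, 0.9111],
}

def best_epoch(hist, metric):
    """Return (1-based epoch, value) at which the validation metric is highest."""
    vals = hist["val_" + metric]
    i = max(range(len(vals)), key=vals.__getitem__)
    return i + 1, vals[i]

print(best_epoch(history_dict, "auc"))  # (2, 0.9466)
```

Validation AUC peaks at epoch 2, while training AUC keeps rising. With a real run, the same helper can be called as `best_epoch(history.history, "auc")`.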

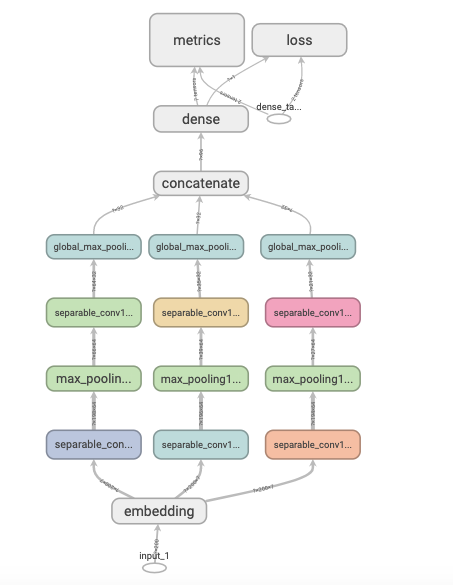

Building a model of arbitrary structure with the functional API

```python
tf.keras.backend.clear_session()

inputs = layers.Input(shape=[MAX_LEN])
x = layers.Embedding(MAX_WORDS, 7)(inputs)

# Three parallel branches with kernel sizes 3, 5 and 7.
# Separable convolution: a depthwise step convolves each input channel with its own kernel,
# then a pointwise (1x1) convolution combines the channels.
branch1 = layers.SeparableConv1D(64, 3, activation="relu")(x)
branch1 = layers.MaxPool1D(3)(branch1)
branch1 = layers.SeparableConv1D(32, 3, activation="relu")(branch1)
branch1 = layers.GlobalMaxPool1D()(branch1)

branch2 = layers.SeparableConv1D(64, 5, activation="relu")(x)
branch2 = layers.MaxPool1D(3)(branch2)
branch2 = layers.SeparableConv1D(32, 5, activation="relu")(branch2)
branch2 = layers.GlobalMaxPool1D()(branch2)

branch3 = layers.SeparableConv1D(64, 7, activation="relu")(x)
branch3 = layers.MaxPool1D(3)(branch3)
branch3 = layers.SeparableConv1D(32, 7, activation="relu")(branch3)
branch3 = layers.GlobalMaxPool1D()(branch3)

concat = layers.Concatenate()([branch1, branch2, branch3])
outputs = layers.Dense(1, activation="sigmoid")(concat)

model = models.Model(inputs=inputs, outputs=outputs)
model.compile(optimizer="Nadam", loss="binary_crossentropy", metrics=["accuracy", "AUC"])
model.summary()
```

```
Model: "model"
__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_1 (InputLayer)            [(None, 200)]        0
__________________________________________________________________________________________________
embedding (Embedding)           (None, 200, 7)       70000       input_1[0][0]
__________________________________________________________________________________________________
separable_conv1d (SeparableConv (None, 198, 64)      533         embedding[0][0]
__________________________________________________________________________________________________
separable_conv1d_2 (SeparableCo (None, 196, 64)      547         embedding[0][0]
__________________________________________________________________________________________________
separable_conv1d_4 (SeparableCo (None, 194, 64)      561         embedding[0][0]
__________________________________________________________________________________________________
max_pooling1d (MaxPooling1D)    (None, 66, 64)       0           separable_conv1d[0][0]
__________________________________________________________________________________________________
max_pooling1d_1 (MaxPooling1D)  (None, 65, 64)       0           separable_conv1d_2[0][0]
__________________________________________________________________________________________________
max_pooling1d_2 (MaxPooling1D)  (None, 64, 64)       0           separable_conv1d_4[0][0]
__________________________________________________________________________________________________
separable_conv1d_1 (SeparableCo (None, 64, 32)       2272        max_pooling1d[0][0]
__________________________________________________________________________________________________
separable_conv1d_3 (SeparableCo (None, 61, 32)       2400        max_pooling1d_1[0][0]
__________________________________________________________________________________________________
separable_conv1d_5 (SeparableCo (None, 58, 32)       2528        max_pooling1d_2[0][0]
__________________________________________________________________________________________________
global_max_pooling1d (GlobalMax (None, 32)           0           separable_conv1d_1[0][0]
__________________________________________________________________________________________________
global_max_pooling1d_1 (GlobalM (None, 32)           0           separable_conv1d_3[0][0]
__________________________________________________________________________________________________
global_max_pooling1d_2 (GlobalM (None, 32)           0           separable_conv1d_5[0][0]
__________________________________________________________________________________________________
concatenate (Concatenate)       (None, 96)           0           global_max_pooling1d[0][0]
                                                                 global_max_pooling1d_1[0][0]
                                                                 global_max_pooling1d_2[0][0]
__________________________________________________________________________________________________
dense (Dense)                   (None, 1)            97          concatenate[0][0]
==================================================================================================
Total params: 78,938
Trainable params: 78,938
Non-trainable params: 0
__________________________________________________________________________________________________
```
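The separable-convolution comment in the code can be made concrete. With the default `depth_multiplier=1`, `SeparableConv1D` works in two steps. First it convolves each input channel with its own kernel (the depthwise step). Then it mixes the channels with a 1x1 convolution (the pointwise step) and adds one bias per output filter. This explains the counts for the first separable layers in the summary. Each has `kernel_size*7 + 7*64 + 64` parameters (533, 547 and 561), where an ordinary `Conv1D` would need `kernel_size*7*64 + 64`.

The NumPy sketch below illustrates the idea with random weights; it is not Keras's implementation. It also checks that the result equals an ordinary convolution whose kernel factorizes into the depthwise and pointwise parts. All function names are hypothetical.

```python
import numpy as np

def separable_conv1d(x, depthwise, pointwise, bias):
    """'valid' separable 1D convolution (depth_multiplier=1), no activation.

    x: (length, in_ch); depthwise: (k, in_ch); pointwise: (in_ch, out_ch); bias: (out_ch,)
    """
    k = depthwise.shape[0]
    out_len = x.shape[0] - k + 1
    # Depthwise step: each channel is convolved only with its own kernel -> (out_len, in_ch)
    dw = np.stack([(x[t:t + k] * depthwise).sum(axis=0) for t in range(out_len)])
    # Pointwise step: a 1x1 convolution is a matrix product over the channel axis
    return dw @ pointwise + bias

def conv1d(x, kernel, bias):
    """Ordinary 'valid' 1D convolution; kernel: (k, in_ch, out_ch)."""
    k = kernel.shape[0]
    out_len = x.shape[0] - k + 1
    return np.stack([np.einsum("ki,kio->o", x[t:t + k], kernel) for t in range(out_len)]) + bias

def separable_conv1d_params(kernel_size, in_ch, out_ch):
    # depthwise kernels + pointwise kernel + one bias per output channel
    return kernel_size * in_ch + in_ch * out_ch + out_ch

rng = np.random.default_rng(0)
x = rng.normal(size=(200, 7))  # one embedded review: 200 steps, 7 channels
depthwise = rng.normal(size=(3, 7))
pointwise = rng.normal(size=(7, 64))
bias = rng.normal(size=(64,))

y = separable_conv1d(x, depthwise, pointwise, bias)
# Equivalent full kernel: W[k, i, o] = depthwise[k, i] * pointwise[i, o]
y_full = conv1d(x, depthwise[:, :, None] * pointwise[None, :, :], bias)

print(y.shape, np.allclose(y, y_full))                            # (198, 64) True
print([separable_conv1d_params(k, 7, 64) for k in (3, 5, 7)])     # [533, 547, 561]
```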

```python
import datetime

logdir = "./data/keras_model/" + datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
tensorboard_callback = tf.keras.callbacks.TensorBoard(logdir, histogram_freq=1)

history = model.fit(ds_train, validation_data=ds_test, epochs=6, callbacks=[tensorboard_callback])
```

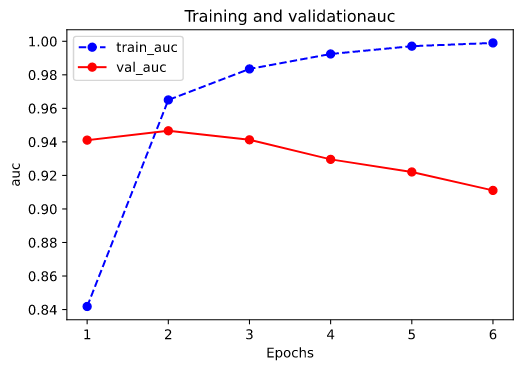

```
Epoch 1/6
1000/1000 [==============================] - 18s 17ms/step - loss: 0.5480 - accuracy: 0.6829 - auc: 0.7781 - val_loss: 0.3778 - val_accuracy: 0.8328 - val_auc: 0.9134
Epoch 2/6
1000/1000 [==============================] - 17s 17ms/step - loss: 0.3190 - accuracy: 0.8637 - auc: 0.9379 - val_loss: 0.3464 - val_accuracy: 0.8462 - val_auc: 0.9275
Epoch 3/6
1000/1000 [==============================] - 17s 17ms/step - loss: 0.2331 - accuracy: 0.9089 - auc: 0.9669 - val_loss: 0.3582 - val_accuracy: 0.8510 - val_auc: 0.9288
Epoch 4/6
1000/1000 [==============================] - 14s 14ms/step - loss: 0.1680 - accuracy: 0.9391 - auc: 0.9824 - val_loss: 0.4038 - val_accuracy: 0.8484 - val_auc: 0.9271
Epoch 5/6
1000/1000 [==============================] - 8s 8ms/step - loss: 0.1134 - accuracy: 0.9617 - auc: 0.9915 - val_loss: 0.4620 - val_accuracy: 0.8484 - val_auc: 0.9243
Epoch 6/6
1000/1000 [==============================] - 12s 12ms/step - loss: 0.0678 - accuracy: 0.9793 - auc: 0.9966 - val_loss: 0.5725 - val_accuracy: 0.8434 - val_auc: 0.9163
```

```python
plot_metric(history, "auc")
```

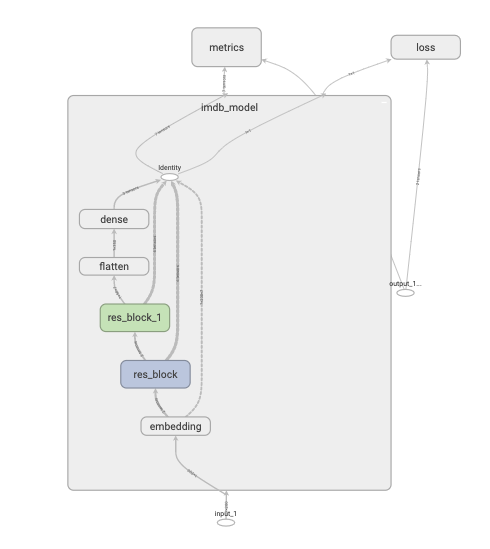
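The three branches finish with different sequence lengths: 64, 61 and 58 steps in the summary. Their outputs therefore could not be concatenated directly along the feature axis. `GlobalMaxPool1D` takes the maximum over the time axis, which reduces each branch to a fixed 32-dimensional vector whatever its length. `Concatenate` then joins the three vectors into the 96 features fed to the final `Dense` layer, which has 96 weights plus 1 bias, or 97 parameters. The NumPy sketch below uses random arrays as hypothetical stand-ins for the branch outputs.

```python
import numpy as np

rng = np.random.default_rng(0)
batch = 4
# Hypothetical stand-ins for the three branch outputs: (batch, length, channels)
branches = [rng.normal(size=(batch, n, 32)) for n in (64, 61, 58)]

# GlobalMaxPool1D: maximum over the time axis -> (batch, 32) for every branch
pooled = [b.max(axis=1) for b in branches]

# Concatenate along the last (feature) axis -> (batch, 96)
concat = np.concatenate(pooled, axis=-1)
print(concat.shape)  # (4, 96)

dense_params = concat.shape[-1] * 1 + 1  # Dense(1): 96 weights + 1 bias
print(dense_params)  # 97
```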

Building a custom model by subclassing Model

```python
# First define a residual block as a custom Layer
class ResBlock(layers.Layer):
    def __init__(self, kernel_size, **kwargs):
        super().__init__(**kwargs)
        self.kernel_size = kernel_size

    def build(self, input_shape):
        self.conv1 = layers.Conv1D(filters=64, kernel_size=self.kernel_size, activation="relu", padding="same")
        self.conv2 = layers.Conv1D(filters=32, kernel_size=self.kernel_size, activation="relu", padding="same")
        # conv3 restores the input's channel count so that the residual Add is shape-compatible
        self.conv3 = layers.Conv1D(filters=input_shape[-1], kernel_size=self.kernel_size, activation="relu", padding="same")
        self.maxpool = layers.MaxPool1D(2)
        super().build(input_shape)  # equivalent to setting self.built = True

    def call(self, inputs):
        x = self.conv1(inputs)
        x = self.conv2(x)
        x = self.conv3(x)
        x = layers.Add()([inputs, x])  # residual connection
        x = self.maxpool(x)
        return x

    # For a model that combines this custom layer through the Functional API to be
    # serializable, the layer must implement get_config
    def get_config(self):
        config = super().get_config()
        config.update({"kernel_size": self.kernel_size})
        return config

# Test ResBlock
resblock = ResBlock(kernel_size=3)
resblock.build(input_shape=(None, 200, 7))
resblock.compute_output_shape(input_shape=(None, 200, 7))
```

```
TensorShape([None, 100, 7])
```
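The output shape above follows from two properties of `ResBlock`. All three convolutions use `padding="same"`, so the sequence length does not change. `conv3` uses `filters=input_shape[-1]`, so its output has as many channels as the input, which the element-wise `Add` requires. The final `MaxPool1D(2)` then halves the length from 200 to 100. The NumPy sketch below traces these shapes for `kernel_size=3` with random weights. `conv1d_same_relu`, `max_pool1d` and `res_block_forward` are hypothetical helpers, not Keras functions. They assume stride 1 and an odd kernel size, so the zero padding splits evenly on both sides.

```python
import numpy as np

def conv1d_same_relu(x, kernel, bias):
    """Stride-1 1D convolution with 'same' padding, followed by ReLU.

    x: (length, in_ch); kernel: (k, in_ch, out_ch) with k odd; bias: (out_ch,)
    """
    k = kernel.shape[0]
    pad = (k - 1) // 2
    xp = np.pad(x, ((pad, pad), (0, 0)))  # zero-pad the time axis only
    out = np.stack([np.einsum("ki,kio->o", xp[t:t + k], kernel) for t in range(x.shape[0])])
    return np.maximum(out + bias, 0.0)

def max_pool1d(x, pool):
    n = x.shape[0] // pool  # steps that do not fill a whole window are dropped
    return x[: n * pool].reshape(n, pool, x.shape[1]).max(axis=1)

def res_block_forward(x, kernel_size, rng):
    """Hypothetical NumPy mirror of ResBlock.call with random weights."""
    in_ch = x.shape[-1]
    h = x
    for in_c, out_c in [(in_ch, 64), (64, 32), (32, in_ch)]:  # conv1, conv2, conv3
        kernel = rng.normal(scale=0.1, size=(kernel_size, in_c, out_c))
        h = conv1d_same_relu(h, kernel, np.zeros(out_c))
    assert h.shape == x.shape  # 'same' padding + filters=in_ch make the Add possible
    return max_pool1d(x + h, 2)  # residual Add, then MaxPool1D(2)

rng = np.random.default_rng(0)
x = rng.normal(size=(200, 7))  # one embedded review (batch dimension omitted)
y = res_block_forward(x, kernel_size=3, rng=rng)
print(y.shape)  # (100, 7)
```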

```python
# Custom model (the same architecture could also be built with Sequential or the Functional API)
class ImdbModel(models.Model):
    def __init__(self):
        super().__init__()

    def build(self, input_shape):
        self.embedding = layers.Embedding(MAX_WORDS, 7)
        self.block1 = ResBlock(7)
        self.block2 = ResBlock(5)
        self.dense = layers.Dense(1, activation="sigmoid")
        super().build(input_shape)

    def call(self, x):
        x = self.embedding(x)
        x = self.block1(x)
        x = self.block2(x)
        x = layers.Flatten()(x)
        x = self.dense(x)
        return x

tf.keras.backend.clear_session()

model = ImdbModel()
model.build(input_shape=(None, 200))
model.summary()

model.compile(optimizer="Nadam", loss="binary_crossentropy", metrics=["accuracy", "AUC"])
```

```
Model: "imdb_model"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding (Embedding)        multiple                  70000
_________________________________________________________________
res_block (ResBlock)         multiple                  19143
_________________________________________________________________
res_block_1 (ResBlock)       multiple                  13703
_________________________________________________________________
dense (Dense)                multiple                  351
=================================================================
Total params: 103,197
Trainable params: 103,197
Non-trainable params: 0
_________________________________________________________________
```
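The summary prints `multiple` instead of concrete output shapes, so its parameter counts are easiest to confirm by hand. Each `ResBlock` contains three `Conv1D` layers: channels to 64, 64 to 32, and 32 back to the input's 7 channels. Its `Add` and `MaxPool1D` layers carry no weights. The two blocks each halve the sequence length, from 200 to 100 and then to 50, so `Flatten` produces 50 * 7 = 350 features for the `Dense` layer. The check below uses plain Python; the helper names are hypothetical.

```python
MAX_WORDS, EMB_DIM, MAX_LEN = 10000, 7, 200

def conv1d_params(kernel_size, in_ch, filters):
    # one kernel_size x in_ch kernel per filter, plus one bias per filter
    return kernel_size * in_ch * filters + filters

def res_block_params(kernel_size, channels):
    # conv1: channels -> 64, conv2: 64 -> 32, conv3: 32 -> channels
    return (conv1d_params(kernel_size, channels, 64)
            + conv1d_params(kernel_size, 64, 32)
            + conv1d_params(kernel_size, 32, channels))

length = MAX_LEN // 2 // 2  # each ResBlock ends with MaxPool1D(2): 200 -> 100 -> 50
flat = length * EMB_DIM     # Flatten: 50 * 7 = 350

params = {
    "embedding": MAX_WORDS * EMB_DIM,
    "res_block": res_block_params(7, EMB_DIM),    # block1 = ResBlock(7)
    "res_block_1": res_block_params(5, EMB_DIM),  # block2 = ResBlock(5)
    "dense": flat * 1 + 1,
}
print(params)
print("Total params:", sum(params.values()))  # Total params: 103197
```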

```python
import datetime

logdir = "./data/tflogs/keras_model/" + datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
tensorboard_callback = tf.keras.callbacks.TensorBoard(logdir, histogram_freq=1)

history = model.fit(ds_train, validation_data=ds_test, epochs=6, callbacks=[tensorboard_callback])
```

```
Epoch 1/6
1000/1000 [==============================] - 18s 17ms/step - loss: 0.4992 - accuracy: 0.7184 - auc: 0.8215 - val_loss: 0.3192 - val_accuracy: 0.8588 - val_auc: 0.9379
Epoch 2/6
1000/1000 [==============================] - 18s 18ms/step - loss: 0.2512 - accuracy: 0.8989 - auc: 0.9618 - val_loss: 0.3416 - val_accuracy: 0.8594 - val_auc: 0.9449
Epoch 3/6
1000/1000 [==============================] - 17s 17ms/step - loss: 0.1651 - accuracy: 0.9369 - auc: 0.9831 - val_loss: 0.3654 - val_accuracy: 0.8710 - val_auc: 0.9412
Epoch 4/6
1000/1000 [==============================] - 17s 17ms/step - loss: 0.1037 - accuracy: 0.9611 - auc: 0.9930 - val_loss: 0.4717 - val_accuracy: 0.8676 - val_auc: 0.9338
Epoch 5/6
1000/1000 [==============================] - 17s 17ms/step - loss: 0.0606 - accuracy: 0.9777 - auc: 0.9973 - val_loss: 0.5980 - val_accuracy: 0.8584 - val_auc: 0.9230
Epoch 6/6
1000/1000 [==============================] - 17s 17ms/step - loss: 0.0320 - accuracy: 0.9894 - auc: 0.9991 - val_loss: 0.9280 - val_accuracy: 0.8456 - val_auc: 0.9062
```

```python
plot_metric(history, "auc")
```