Lucene就是一个全文检索的工具,建立索引用的,类似于新华字典的目录

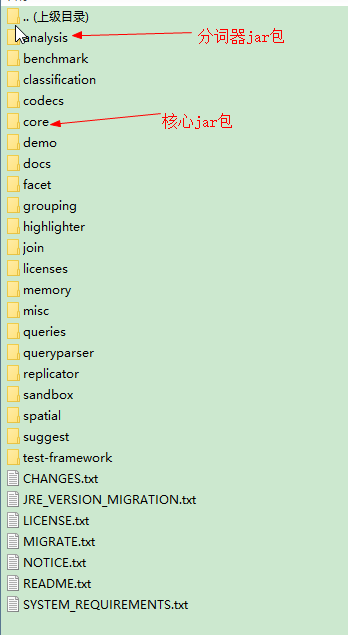

这里使用的是lucene-4.4.0版本,入门代码所需jar包如下图所示(解压lucene-4.4.0后的目录):

入门代码:

import java.io.File; import java.io.IOException; import org.apache.lucene.analysis.Analyzer; import org.apache.lucene.analysis.standard.StandardAnalyzer; import org.apache.lucene.document.Document; import org.apache.lucene.document.IntField; import org.apache.lucene.document.StringField; import org.apache.lucene.document.TextField; import org.apache.lucene.document.Field.Store; import org.apache.lucene.index.DirectoryReader; import org.apache.lucene.index.IndexReader; import org.apache.lucene.index.IndexWriter; import org.apache.lucene.index.IndexWriterConfig; import org.apache.lucene.index.IndexableField; import org.apache.lucene.index.Term; import org.apache.lucene.search.IndexSearcher; import org.apache.lucene.search.Query; import org.apache.lucene.search.ScoreDoc; import org.apache.lucene.search.TermQuery; import org.apache.lucene.search.TopDocs; import org.apache.lucene.store.Directory; import org.apache.lucene.store.FSDirectory; import org.apache.lucene.util.Version; import org.junit.Test; /*8 * luceneDemo * */ public class TestLucene { /** * 通过lucene 提供的api 对数据建立索引,indexWriter * @throws IOException * */ @Test public void testAdd() throws IOException{ //索引在硬盘上面存放的位置.. Directory directory=FSDirectory.open(new File("D:/INDEX")); //lucene 当前使用的版本... Version matchVersion=Version.LUCENE_44; //分词器...(把一段文本分词)(黑马程序员是高端的培训机构) //analzyer 是一个抽象类,具体的切分词规则由子类实现... Analyzer analyzer=new StandardAnalyzer(matchVersion); IndexWriterConfig config=new IndexWriterConfig(matchVersion, analyzer); //构造索引写入的对象.. IndexWriter indexWriter=new IndexWriter(directory, config); //往索引库里面写数据.. //索引库里面的数据都是document 一个document相当于是一条记录 //这个document里面的数据相当于索引结构.. Document document=new Document(); IndexableField indexableField=new IntField("id",1, Store.YES); IndexableField stringfield=new StringField("title","对王召廷的个人评价",Store.YES); IndexableField teIndexableField=new TextField("content","风流倜傥有点黄",Store.YES); document.add(indexableField); document.add(stringfield); document.add(teIndexableField); //索引库里面接收的数据都是document对象 indexWriter.addDocument(document); indexWriter.close(); } /** * 对建立的索引进行搜索... * 通过indexSearcher 去搜索... * @throws IOException */ @Test public void testSearcher() throws IOException{ //索引在硬盘上面存放的位置.. Directory directory=FSDirectory.open(new File("D:/INDEX")); //把索引目录里面的索引读取到IndexReader 当中... IndexReader indexReader=DirectoryReader.open(directory); // /构造搜索索引的对象.. IndexSearcher indexSearcher=new IndexSearcher(indexReader); //Query 它是一个查询条件对象,它是一个抽象类,不同的查询规则就构造不同的子类... Query query=new TermQuery(new Term("title", "对王召廷的个人评价")); //检索符合query 条件的前面N 条记录.. // TopDocs topDocs=indexSearcher.search(query, 10); //返回总记录数... System.out.println(topDocs.totalHits); //存放的都是document 的id ScoreDoc scoreDocs []=topDocs.scoreDocs; for(ScoreDoc scoreDoc:scoreDocs){ //返回的就是document id int docID=scoreDoc.doc; //我还需要根据id 检索到对应的document Document document=indexSearcher.doc(docID); System.out.println("id=="+document.get("id")); System.out.println("title=="+document.get("title")); System.out.println("content=="+document.get("content")); } } }

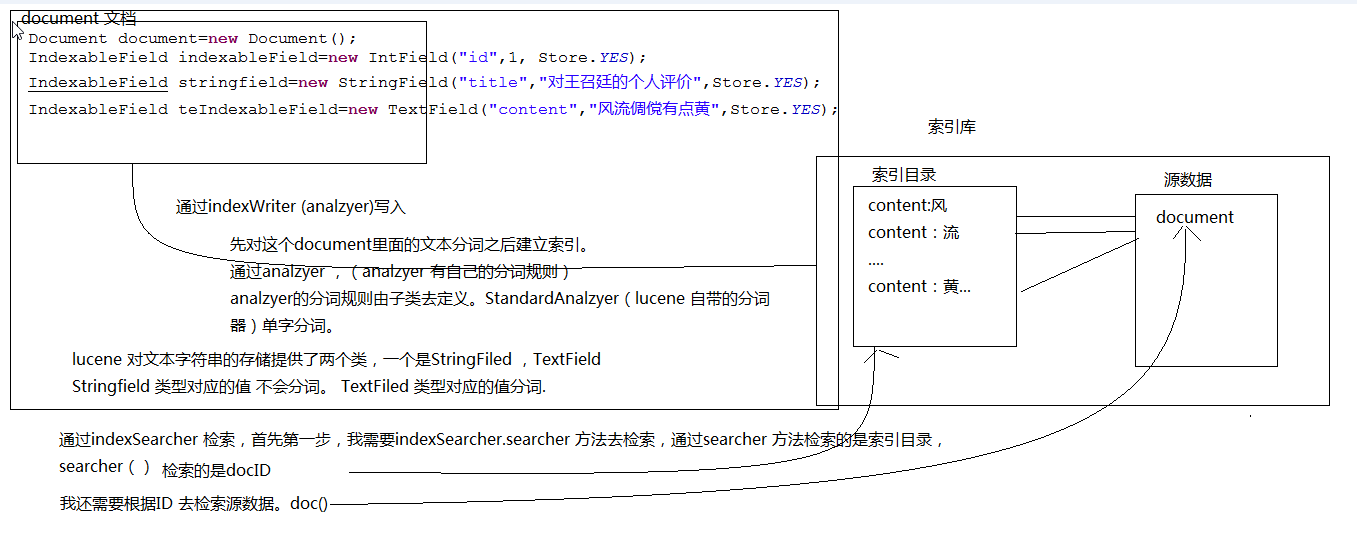

原理分析图:

demo演示:

根据入门代码流程提炼工具类代码:

import java.io.File; import java.io.IOException; import org.apache.lucene.analysis.Analyzer; import org.apache.lucene.analysis.standard.StandardAnalyzer; import org.apache.lucene.index.DirectoryReader; import org.apache.lucene.index.IndexReader; import org.apache.lucene.index.IndexWriter; import org.apache.lucene.index.IndexWriterConfig; import org.apache.lucene.search.IndexSearcher; import org.apache.lucene.store.Directory; import org.apache.lucene.store.FSDirectory; import org.apache.lucene.util.Version; /** * lucene 工具类... * @author Administrator * */ /** * 提炼规则,假设这段代码可以完成一个功能,把这个代码提炼到一个方法里面去,假设这个方法在某个业务罗继承可以共用,那么往上抽取, * 假设在其它逻辑层也可以用,提炼到工具类里面去。 * */ public class LuceneUtils { private static IndexWriter indexWriter=null; private static IndexSearcher indexSearcher=null; //索引存放目录.. private static Directory directory=null; private static IndexWriterConfig indexWriterConfig=null; private static Version version=null; private static Analyzer analyzer=null; static { try { directory=FSDirectory.open(new File(Constants.URL)); version=Version.LUCENE_44; analyzer=new StandardAnalyzer(version); indexWriterConfig=new IndexWriterConfig(version, analyzer); } catch (IOException e) { e.printStackTrace(); } } /** * * @return 返回用于操作索引的对象... * @throws IOException */ public static IndexWriter getIndexWriter() throws IOException{ indexWriter=new IndexWriter(directory, indexWriterConfig); return indexWriter; } /** * 返回用于搜索索引的对象... * @return * @throws IOException */ public static IndexSearcher getIndexSearcher() throws IOException{ IndexReader indexReader=DirectoryReader.open(directory); indexSearcher=new IndexSearcher(indexReader); return indexSearcher; } /** * * 返回lucene 当前的版本... * @return */ public static Version getVersion() { return version; } /** * * 返回lucene 当前使用的分词器.. * @return */ public static Analyzer getAnalyzer() { return analyzer; } }

public class Constants { /** * 索引存放的目录 */ public static final String URL="d:/indexdir/news"; }

bean:

package cn.itcast.bean; public class Article { private int id; public int getId() { return id; } public void setId(int id) { this.id = id; } public String getTitle() { return title; } public void setTitle(String title) { this.title = title; } public String getContent() { return content; } public void setContent(String content) { this.content = content; } public String getAuthor() { return author; } public void setAuthor(String author) { this.author = author; } public String getUrl() { return url; } public void setUrl(String url) { this.url = url; } private String title; private String content; private String author; private String url; }

转换工具类:

package cn.itcast.lucene; import org.apache.lucene.document.Document; import org.apache.lucene.document.IntField; import org.apache.lucene.document.StringField; import org.apache.lucene.document.TextField; import org.apache.lucene.document.Field.Store; import org.apache.lucene.index.IndexableField; import cn.itcast.bean.Article; /*8 * 对象与索引库document 之间的转化 * */ public class ArticleToDocument { public static Document articleToDocument(Article article){ Document document=new Document(); IntField idfield=new IntField("id", article.getId(), Store.YES); //StringField 对应的值不分词,textField 分词.. TextField titleField=new TextField("title", article.getTitle(),Store.YES); TextField contentField=new TextField("content", article.getContent(),Store.YES); //修改这个字段对应的权重值,默认这个值为1f // contentField.setBoost(3f); StringField authorField=new StringField("author", article.getAuthor(), Store.YES); StringField urlField=new StringField("url", article.getUrl(), Store.YES); document.add(idfield); document.add(titleField); document.add(contentField); document.add(authorField); document.add(urlField); return document; } }

Dao层:

package cn.itcast.dao; import java.io.IOException; import org.apache.lucene.document.Document; import org.apache.lucene.index.IndexWriter; import org.apache.lucene.index.Term; import org.apache.lucene.queryparser.classic.MultiFieldQueryParser; import org.apache.lucene.queryparser.classic.QueryParser; import org.apache.lucene.search.IndexSearcher; import org.apache.lucene.search.Query; import org.apache.lucene.search.ScoreDoc; import org.apache.lucene.search.TopDocs; import cn.itcast.bean.Article; import cn.itcast.lucene.ArticleToDocument; import cn.itcast.uitls.LuceneUtils; /** * 使用lucene 的API 来操作索引库.. * @author Administrator * */ public class LuceneDao { public void addIndex(Article article) throws IOException{ IndexWriter indexWriter=LuceneUtils.getIndexWriter(); Document doc=ArticleToDocument.articleToDocument(article); indexWriter.addDocument(doc); indexWriter.close(); } /** * 删除符合条件的记录... * @param fieldName * @param fieldValue * @throws IOException */ public void delIndex(String fieldName,String fieldValue) throws IOException{ IndexWriter indexWriter=LuceneUtils.getIndexWriter(); //一定要梦想,万一实现了勒 Term term=new Term(fieldName, fieldValue); indexWriter.deleteDocuments(term); indexWriter.close(); } /** * * 更新 * * update table set ? where condtion * @throws IOException * * */ public void updateIndex(String fieldName,String fieldValue,Article article) throws IOException{ IndexWriter indexWriter=LuceneUtils.getIndexWriter(); /** * 1:term 设置更新的条件... * * 2:设置更新的内容的对象.. * */ Term term=new Term(fieldName,fieldValue); Document doc=ArticleToDocument.articleToDocument(article); /** * * 在lucene 里面是先删除符合这个条件term 的记录,在创建一个doc 记录... * */ indexWriter.updateDocument(term, doc); indexWriter.close(); } /** * 0,10 * 10,10 * 20,10 * @param keywords * @throws Exception */ public void findIndex(String keywords,int firstResult,int maxResult) throws Exception{ IndexSearcher indexSearcher=LuceneUtils.getIndexSearcher(); //第一个条件.. 单字段查询... // Query query=new TermQuery(new Term("title","梦想")) //select * from table where fieldname="" or content="" String fields []={"title","content"}; //第二种条件:使用查询解析器,多字段。。。 我们需要重新导入一个jar queryParser 的jar... 位置在lucene解压后的queryparser文件夹下 QueryParser queryParser=new MultiFieldQueryParser(LuceneUtils.getVersion(),fields,LuceneUtils.getAnalyzer()); // /这个事一个条件.. Query query=queryParser.parse(keywords); //query 它是一个查询条件,query 是一个抽象类,不同的查询规则构造部同的子类即可 //检索符合query 条件的前面N 条记录... //检索的是索引目录... (总记录数,socreDOC (docID)) //使用lucene 提供的api 进行操作... TopDocs topDocs=indexSearcher.search(query,firstResult+maxResult); // /存放的是docID ScoreDoc scoreDocs []=topDocs.scoreDocs; //判断:scoreDocs 的length (实际取出来的数量..) 与 firstResult+maxResult 的值取小值... //在java jdk 里面提供了一个api int endResult=Math.min(scoreDocs.length, firstResult+maxResult); for(int i=firstResult;i<endResult;i++){ // /取出来的是docID,这个id 是lucene 自己来维护。 int docID=scoreDocs[i].doc; Document document=indexSearcher.doc(docID); System.out.println("id==="+document.get("id")); System.out.println("title==="+document.get("title")); System.out.println("content==="+document.get("content")); System.out.println("url==="+document.get("url")); System.out.println("author==="+document.get("author")); } } }

测试类:

package cn.itcast.junit; import java.io.IOException; import org.junit.Test; import cn.itcast.bean.Article; import cn.itcast.dao.LuceneDao; /** * 测试luceneDao * @author Administrator * */ public class LuceneDaoTest { private LuceneDao luceneDao=new LuceneDao(); @Test public void testCreate() throws IOException{ for(int i=28;i<=28;i++){ Article article=new Article(); article.setId(i); article.setTitle("一定要梦想,万一实现了勒"); article.setContent("矫情我觉得这句话太矫情了矫情矫情矫情矫情矫情矫情"); article.setUrl("http://www.tianmao.com"); article.setAuthor("马云"); luceneDao.addIndex(article); } } @Test public void testsearcher() throws Exception{ // article.setTitle("一定要梦想,万一实现了勒"); textfield 分词 标准分词器 // article.setContent("我觉得这句话太矫情了"); textfield 分词 标准分词器 luceneDao.findIndex("梦想",20,10); } @Test public void testdelete() throws IOException{ String fieldName="title"; String fieldValue="定"; luceneDao.delIndex(fieldName, fieldValue); } @Test public void testUpdate() throws IOException{ String fieldName="title"; String fieldValue="定"; Article article=new Article(); article.setId(9527); article.setTitle("一定要梦想,万一实现了勒"); article.setContent("我觉得这句话太矫情了"); article.setUrl("http://www.tianmao.com"); article.setAuthor("马云"); luceneDao.updateIndex(fieldName, fieldValue, article); } }

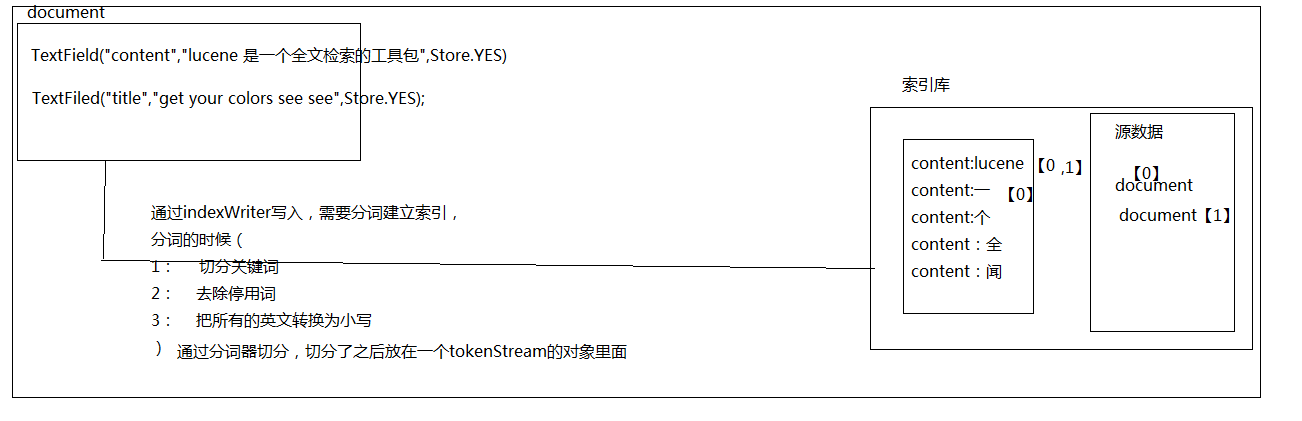

分词器的流程图:

关于分词器,网上可以找到很多种类的分词器配合Lucene使用,相关分词规则查看对应说明。

举例如下:

Analyzer analyzer=new StandardAnalyzer(Version.LUCENE_44);//中文单字切分、英文按空格切分成单词

Analyzer analyzer=new CJKAnalyzer(Version.LUCENE_44);//二分法分词,中文相连的两个词作为一个索引

Analyzer analyzer=new IKAnalyzer();//第三方的分词器,对中文支持较好,可以自定义分词单词与停用词

索引库优化

package cn.itcast.lucene; import java.io.File; import java.io.IOException; import org.apache.lucene.analysis.Analyzer; import org.apache.lucene.analysis.standard.StandardAnalyzer; import org.apache.lucene.index.DirectoryReader; import org.apache.lucene.index.IndexReader; import org.apache.lucene.index.IndexWriter; import org.apache.lucene.index.IndexWriterConfig; import org.apache.lucene.index.LogDocMergePolicy; import org.apache.lucene.index.MergePolicy; import org.apache.lucene.index.Term; import org.apache.lucene.search.IndexSearcher; import org.apache.lucene.search.Query; import org.apache.lucene.search.TermQuery; import org.apache.lucene.search.TopDocs; import org.apache.lucene.store.Directory; import org.apache.lucene.store.FSDirectory; import org.apache.lucene.store.IOContext; import org.apache.lucene.store.RAMDirectory; import org.apache.lucene.util.Version; import org.junit.Test; import cn.itcast.uitls.Constants; public class TestOptimise { /*8 * 优化的第一种方式:通过 IndexWriterConfig 优化设置mergePolicy(合并策略) * * */ public void testoptimise() throws IOException{ Directory directory=FSDirectory.open(new File(Constants.URL)); Analyzer analyzer=new StandardAnalyzer(Version.LUCENE_44); IndexWriterConfig config=new IndexWriterConfig(Version.LUCENE_44, analyzer); LogDocMergePolicy mergePolicy=new LogDocMergePolicy(); /** * 当这个值越小,更少的内存会被运用当创建索引的时候,搜索的时候越快,创建的时候越慢。 * 当这个值越大,更多的内存会被运用当创建索引的时候,搜索的时候越慢,创建的时候越快.. * larger values >10 * * 2<=smaller<=10 * */ //设置合并因子.. mergePolicy.setMergeFactor(10); // /设置索引的合并策略.. config.setMergePolicy(mergePolicy); IndexWriter indexWriter=new IndexWriter(directory, config); } /** * 通过directory 去优化.... * @throws IOException * */ @Test public void testoptimise2() throws IOException{ //现在的索引放在硬盘上面... Directory directory=FSDirectory.open(new File(Constants.URL)); // /通过这个对象吧directory 里面的数据读取到directory1 里面来.. IOContext ioContext=new IOContext(); //相办法吧directory 的索引读取到内存当中来... Directory directory1=new RAMDirectory(directory,ioContext); IndexReader indexReader=DirectoryReader.open(directory1); IndexSearcher indexSearcher=new IndexSearcher(indexReader); Query query=new TermQuery(new Term("title", "想")); TopDocs topDocs=indexSearcher.search(query, 100); System.out.println(topDocs.totalHits); } /** * 索引文件越大,会影响检索的速度.. (减少索引文件的大小) * * 1:排除停用词.. * */ public void testoptimise3(){ } /** * 将索引分目盘存放 将数据归类... * */ public void testoptimise4(){ } }