Today I mainly worked through Lab 7, "Spark Machine Learning Library MLlib Programming Practice".

Main code:

```scala
// Pipeline, models, evaluation and feature transformers used in this lab
import org.apache.spark.ml.{Pipeline, PipelineModel}
import org.apache.spark.ml.classification.{LogisticRegression, LogisticRegressionModel}
import org.apache.spark.ml.evaluation.MulticlassClassificationEvaluator
import org.apache.spark.ml.feature.{IndexToString, PCA, StringIndexer, VectorIndexer}
import org.apache.spark.ml.linalg.{Vector, Vectors}
import spark.implicits._ // needed for .toDF() (spark-shell already imports it automatically)

// One record: a dense vector of numeric features plus the income label
case class Adult(features: org.apache.spark.ml.linalg.Vector, label: String)

// Columns 0, 2, 4, 10, 11, 12 are the numeric attributes (age, fnlwgt, education-num,
// capital-gain, capital-loss, hours-per-week); column 14 is the income label
val df = sc.textFile("adult.data.txt").map(_.split(",")).map(p => Adult(
  Vectors.dense(p(0).toDouble, p(2).toDouble, p(4).toDouble, p(10).toDouble, p(11).toDouble, p(12).toDouble),
  p(14).toString())).toDF()

// The test file is parsed the same way
val test = sc.textFile("adult.test.txt").map(_.split(",")).map(p => Adult(
  Vectors.dense(p(0).toDouble, p(2).toDouble, p(4).toDouble, p(10).toDouble, p(11).toDouble, p(12).toDouble),
  p(14).toString())).toDF()

// Fit PCA on the training set, reducing the 6 features to 3 principal components
val pca = new PCA().setInputCol("features").setOutputCol("pcaFeatures").setK(3).fit(df)

// Apply the same projection to both the training and the test data
val result = pca.transform(df)
val testdata = pca.transform(test)

result.show(false)
testdata.show(false)
```
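The `setK(3)` step keeps the three directions along which the six numeric features vary the most. As a rough illustration of that idea, here is a minimal pure-Scala sketch, assuming small in-memory data: center the rows, build the covariance matrix, and find its leading eigenvector by power iteration. It recovers only the first principal component; Spark's `PCA` computes all k components with its own linear-algebra routines, so `PcaSketch` and its methods are hypothetical names for illustration, not Spark's implementation.

```scala
object PcaSketch {
  type Vec = Array[Double]

  // Subtract the column means so the covariance is computed around the origin
  def center(rows: Array[Vec]): Array[Vec] = {
    val n = rows.length
    val d = rows.head.length
    val means = Array.tabulate(d)(j => rows.map(_(j)).sum / n)
    rows.map(r => Array.tabulate(d)(j => r(j) - means(j)))
  }

  // Sample covariance matrix (d x d) of already-centered rows
  def covariance(centered: Array[Vec]): Array[Vec] = {
    val n = centered.length
    val d = centered.head.length
    Array.tabulate(d, d)((i, j) => centered.map(r => r(i) * r(j)).sum / (n - 1))
  }

  // Power iteration: repeatedly multiply by the covariance matrix and renormalize.
  // Assuming a dominant eigenvalue, this converges to the first principal component.
  def firstComponent(cov: Array[Vec], iters: Int = 200): Vec = {
    val d = cov.length
    var v: Vec = Array.fill(d)(1.0 / math.sqrt(d))
    for (_ <- 1 to iters) {
      val w = Array.tabulate(d)(i => (0 until d).map(j => cov(i)(j) * v(j)).sum)
      val norm = math.sqrt(w.map(x => x * x).sum)
      v = w.map(_ / norm)
    }
    v
  }

  // Project a row onto a component (dot product): one coordinate of "pcaFeatures"
  def project(row: Vec, component: Vec): Double =
    row.zip(component).map { case (a, b) => a * b }.sum

  def main(args: Array[String]): Unit = {
    // Points lying close to the line y = x, so the first component should point along it
    val data = Array(Array(1.0, 1.1), Array(2.0, 1.9), Array(3.0, 3.2), Array(4.0, 3.9))
    val pc = firstComponent(covariance(center(data)))
    println(pc.mkString("first component: [", ", ", "]"))
    println(data.map(project(_, pc)).mkString("projections: ", ", ", ""))
  }
}
```

With k = 3, Spark keeps three such orthogonal directions instead of one, and each row of `pcaFeatures` holds its three projections.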

```scala
// Map the string labels to indices (the most frequent label gets index 0.0)
val labelIndexer = new StringIndexer().setInputCol("label").setOutputCol("indexedLabel").fit(result)
labelIndexer.labels.foreach(println)

// Index the PCA features; features with more than maxCategories (default 20)
// distinct values are left as continuous
val featureIndexer = new VectorIndexer().setInputCol("pcaFeatures").setOutputCol("indexedFeatures").fit(result)
println(featureIndexer.numFeatures)

// Convert numeric predictions back to the original label strings
val labelConverter = new IndexToString().setInputCol("prediction").setOutputCol("predictedLabel").setLabels(labelIndexer.labels)

val lr = new LogisticRegression().setLabelCol("indexedLabel").setFeaturesCol("indexedFeatures").setMaxIter(100)
val lrPipeline = new Pipeline().setStages(Array(labelIndexer, featureIndexer, lr, labelConverter))
val lrPipelineModel = lrPipeline.fit(result)

// Stage 2 of the fitted pipeline is the logistic regression model
val lrModel = lrPipelineModel.stages(2).asInstanceOf[LogisticRegressionModel]
println("Coefficients: " + lrModel.coefficientMatrix + "\nIntercept: " + lrModel.interceptVector +
  "\nnumClasses: " + lrModel.numClasses + "\nnumFeatures: " + lrModel.numFeatures)
```
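The printed coefficients and intercept fully determine the model's predictions. For the binary case here (two income labels), a minimal pure-Scala sketch of how a prediction follows from them, assuming Spark's default threshold of 0.5: the probability of class index 1.0 is the sigmoid of w·x + b. `LogisticSketch` and the numbers in `main` are hypothetical, not output from this lab; index 1.0 is whichever label `labelIndexer.labels` lists second.

```scala
object LogisticSketch {
  // Logistic (sigmoid) function mapping a real-valued margin to a probability in (0, 1)
  def sigmoid(z: Double): Double = 1.0 / (1.0 + math.exp(-z))

  // Probability of class index 1.0 given weights w, intercept b and feature vector x
  def probability(w: Array[Double], b: Double, x: Array[Double]): Double =
    sigmoid(w.zip(x).map { case (wi, xi) => wi * xi }.sum + b)

  // Predicted class index: 1.0 if the probability exceeds the threshold, else 0.0
  def predict(w: Array[Double], b: Double, x: Array[Double], threshold: Double = 0.5): Double =
    if (probability(w, b, x) > threshold) 1.0 else 0.0

  def main(args: Array[String]): Unit = {
    val w = Array(0.5, -0.25, 1.0) // hypothetical coefficients for 3 PCA features
    val b = -1.0                   // hypothetical intercept
    val x = Array(2.0, 4.0, 1.0)   // margin = 1.0 - 1.0 + 1.0 - 1.0 = 0.0
    println(s"p = ${probability(w, b, x)}, prediction = ${predict(w, b, x)}")
  }
}
```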

```scala
val lrPredictions = lrPipelineModel.transform(testdata)

// The evaluator's default metric is weighted F1, so request accuracy explicitly
val evaluator = new MulticlassClassificationEvaluator().setLabelCol("indexedLabel").setPredictionCol("prediction").setMetricName("accuracy")
val lrAccuracy = evaluator.evaluate(lrPredictions)
println("Test Error = " + (1.0 - lrAccuracy))
```
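`MulticlassClassificationEvaluator` reports weighted F1 unless `setMetricName("accuracy")` is set, so it is worth being explicit before computing a test error from its result. The accuracy metric itself is simple; a minimal pure-Scala sketch of it and of the `1.0 - accuracy` test error, over hypothetical (indexedLabel, prediction) pairs:

```scala
object AccuracySketch {
  // Fraction of rows whose predicted index equals the true label index
  def accuracy(pairs: Seq[(Double, Double)]): Double =
    pairs.count { case (label, prediction) => label == prediction }.toDouble / pairs.size

  def main(args: Array[String]): Unit = {
    // Hypothetical (indexedLabel, prediction) pairs: three of the four are correct
    val pairs = Seq((0.0, 0.0), (1.0, 0.0), (0.0, 0.0), (1.0, 1.0))
    val acc = accuracy(pairs)
    println(s"accuracy = $acc, test error = ${1.0 - acc}")
  }
}
```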

```scala
import org.apache.spark.ml.tuning.{CrossValidator, ParamGridBuilder}

// Second version: put PCA inside the pipeline so that k can be tuned by cross-validation
// (spark-shell allows these vals to shadow the earlier definitions)
val pca = new PCA().setInputCol("features").setOutputCol("pcaFeatures")
val labelIndexer = new StringIndexer().setInputCol("label").setOutputCol("indexedLabel").fit(df)
val featureIndexer = new VectorIndexer().setInputCol("pcaFeatures").setOutputCol("indexedFeatures")
val labelConverter = new IndexToString().setInputCol("prediction").setOutputCol("predictedLabel").setLabels(labelIndexer.labels)
val lr = new LogisticRegression().setLabelCol("indexedLabel").setFeaturesCol("indexedFeatures").setMaxIter(100)
val lrPipeline = new Pipeline().setStages(Array(pca, labelIndexer, featureIndexer, lr, labelConverter))

// Grid: 6 values of k x 2 elastic-net mixes x 3 regularization strengths
val paramGrid = new ParamGridBuilder().addGrid(pca.k, Array(1, 2, 3, 4, 5, 6)).addGrid(lr.elasticNetParam, Array(0.2, 0.8)).addGrid(lr.regParam, Array(0.01, 0.1, 0.5)).build()

// 3-fold cross-validation over the whole pipeline
val cv = new CrossValidator().setEstimator(lrPipeline).setEvaluator(new MulticlassClassificationEvaluator().setLabelCol("indexedLabel").setPredictionCol("prediction")).setEstimatorParamMaps(paramGrid).setNumFolds(3)
val cvModel = cv.fit(df)
```
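`ParamGridBuilder.build()` returns every combination of the listed values (a Cartesian product), and `CrossValidator` fits the pipeline once per combination per fold, then refits the best combination on the full training set. A minimal pure-Scala sketch of that bookkeeping for the grid used in this lab; the `Params` case class is hypothetical and only mirrors the three grid axes.

```scala
object GridSketch {
  // Hypothetical stand-in for one ParamMap: one value per grid axis
  case class Params(k: Int, elasticNet: Double, reg: Double)

  // Cartesian product of the three axes, like addGrid(...).addGrid(...).build()
  def grid(ks: Seq[Int], enets: Seq[Double], regs: Seq[Double]): Seq[Params] =
    for { k <- ks; e <- enets; r <- regs } yield Params(k, e, r)

  // Fits done by k-fold cross-validation, plus one final refit of the best params
  def totalFits(numParamMaps: Int, numFolds: Int): Int = numParamMaps * numFolds + 1

  def main(args: Array[String]): Unit = {
    val g = grid(1 to 6, Seq(0.2, 0.8), Seq(0.01, 0.1, 0.5))
    println(s"${g.size} parameter combinations, ${totalFits(g.size, 3)} pipeline fits")
  }
}
```

With 6 × 2 × 3 = 36 combinations and 3 folds this comes to 108 fits plus one refit, which is why `cv.fit(df)` takes far longer than the single `lrPipeline.fit(result)` in the first version.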

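```scala
import org.apache.spark.ml.feature.PCAModel

// The cross-validated model contains PCA, so it is applied to the raw test data
val lrPredictions = cvModel.transform(test)

// Request accuracy explicitly (the default metric is weighted F1)
val evaluator = new MulticlassClassificationEvaluator().setLabelCol("indexedLabel").setPredictionCol("prediction").setMetricName("accuracy")
val lrAccuracy = evaluator.evaluate(lrPredictions)
println("Accuracy: " + lrAccuracy)

// Inspect the best pipeline: stage 3 is the logistic regression model, stage 0 the fitted PCA model
val bestModel = cvModel.bestModel.asInstanceOf[PipelineModel]
val lrModel = bestModel.stages(3).asInstanceOf[LogisticRegressionModel]
println("Coefficients: " + lrModel.coefficientMatrix + "\nIntercept: " + lrModel.interceptVector +
  "\nnumClasses: " + lrModel.numClasses + "\nnumFeatures: " + lrModel.numFeatures)

val pcaModel = bestModel.stages(0).asInstanceOf[PCAModel]
println("Principal components: " + pcaModel.pc)
```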

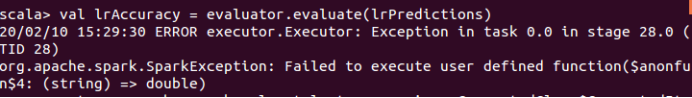

While continuing with the lab, I ran into a problem that I have not solved yet (see the screenshot):

`org.apache.spark.SparkException: Failed to execute user defined function($anonfun$4: (string) => double)`

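From what I found, the error means Spark failed to execute a user-defined function. However, I get it even when I follow the tutorial code exactly, and I could not find an explanation online, so it is still unresolved.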
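One cause worth checking, offered as an assumption rather than a confirmed diagnosis: `StringIndexerModel` maps labels to indices inside a UDF of type `(string) => double`, and in Spark 2.x it throws an `Unseen label` exception (wrapped in this kind of `Failed to execute user defined function` error) when it transforms a label that was not present when it was fitted. In the UCI copy of `adult.test`, the labels carry a trailing period (`<=50K.` / `>50K.`) and the file starts with a non-data line, unlike `adult.data`. A minimal pure-Scala sketch of normalizing a raw label so that both files agree; `normalizeLabel` is a hypothetical helper.

```scala
object LabelCleaning {
  // Hypothetical helper: trim spaces and drop one trailing '.', so that
  // " >50K." (adult.test style) and " >50K" (adult.data style) both become ">50K"
  def normalizeLabel(raw: String): String = {
    val t = raw.trim
    if (t.endsWith(".")) t.dropRight(1) else t
  }

  def main(args: Array[String]): Unit = {
    val trainLabels = Seq(" <=50K", " >50K")
    val testLabels = Seq(" <=50K.", " >50K.")
    // Before cleaning: test labels the indexer has never seen
    println(testLabels.filterNot(l => trainLabels.contains(l)))
    // After cleaning both sides: every test label is known to the indexer
    val cleanTrain = trainLabels.map(normalizeLabel)
    println(testLabels.map(normalizeLabel).forall(l => cleanTrain.contains(l)))
  }
}
```

It would have to be applied to both files (for example `normalizeLabel(p(14))` in place of `p(14).toString()` in both `map` calls), and the non-data first line of the test file removed. `StringIndexer` also has a `handleInvalid` parameter (`setHandleInvalid("skip")`), but if every test label carries the period, skipping would discard the whole test set.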