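In k8s, GPUs cannot be shared between pods. nvidia.com/gpu is allocated in whole GPUs, so eight GPUs can be used by at most eight pods. With docker, by contrast, multiple containers can share the same GPU.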
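To see this limit on a live cluster, you can list how many `nvidia.com/gpu` units each node reports as allocatable. A node with eight GPUs should report 8, and that number caps the number of single-GPU pods it can run. This is a minimal sketch that assumes the NVIDIA device plugin is already running, since that plugin is what exposes `nvidia.com/gpu`. The helper name `gpu_capacity` is hypothetical.

```shell
# Hypothetical helper: print each node's allocatable nvidia.com/gpu count.
# Nodes that do not expose the resource show <none> in the GPU column.
gpu_capacity() {
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,GPU:.status.allocatable.nvidia\.com/gpu'
}
```

The dot inside `nvidia.com/gpu` is escaped so that custom-columns reads it as part of the key, not as a path separator.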

Environment

- Hardware: eight Tesla T4 GPUs
- OS: CentOS 7.9
- The server already has the operating system and the GPU driver installed; for the driver installation, see: https://www.cnblogs.com/lixinliang/p/14705315.html

How to use GPUs in k8s

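To dedicate a GPU server to GPU workloads, first taint that server. The NoSchedule taint keeps every pod without a matching toleration off the node:

shell> kubectl taint node lgy-dev-gpu-k8s-node7-105 server_type=gpu:NoSchedule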
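To check that the taint is in place, or to undo it later, two small helpers can wrap the corresponding kubectl commands. This is a sketch: the function names `show_taints` and `remove_gpu_taint` are hypothetical, and the node name is passed as the first argument.

```shell
# Hypothetical helper: show_taints NODE
# Prints the node's taints as JSON (key, value, effect).
show_taints() {
  kubectl get node "$1" -o jsonpath='{.spec.taints}'
}

# Hypothetical helper: remove_gpu_taint NODE
# The trailing "-" tells kubectl to delete the server_type=gpu:NoSchedule taint.
remove_gpu_taint() {
  kubectl taint node "$1" server_type=gpu:NoSchedule-
}
```

For example, `show_taints lgy-dev-gpu-k8s-node7-105` should list the server_type=gpu taint with effect NoSchedule.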

- Prepare the k8s Deployment manifest

shell> cat pengyun-python-test111.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        name: pengyun-python-test111
      name: pengyun-python-test111
      namespace: pengyun
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: pengyun-python-test111
      strategy:
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%
        type: RollingUpdate
      template:
        metadata:
          creationTimestamp: null
          labels:
            name: pengyun-python-test111
        spec:
          containers:
          - image: harbor.k8s.moviebook.cn/pengyun/dev/000003-pengyun/python_dev:20220106140120
            imagePullPolicy: IfNotPresent
            name: pengyun-python-test111
            resources:
              limits:
                cpu: "1"
                nvidia.com/gpu: "1"
              requests:
                cpu: "1"
                memory: 2Gi
                nvidia.com/gpu: "1"
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
          tolerations: # tolerate the server_type=gpu taint
          - effect: NoSchedule
            key: server_type
            operator: Equal
            value: gpu
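A toleration only permits the pod to run on the tainted node; it does not require it. If the Deployment must also be pinned to that node, one option is to label the node and add a matching nodeSelector. In the sketch below, reusing `server_type=gpu` as the label is an assumption (labels and taints are independent, so any key works), and the helper name `pin_to_gpu_node` is hypothetical.

```shell
# Hypothetical helper: pin_to_gpu_node NODE NAMESPACE DEPLOYMENT
# 1. Label the node (the label key/value is an assumption; labels are separate from taints).
# 2. Add a matching nodeSelector to the Deployment's pod template.
pin_to_gpu_node() {
  kubectl label node "$1" server_type=gpu --overwrite &&
    kubectl patch deployment "$3" -n "$2" \
      -p '{"spec":{"template":{"spec":{"nodeSelector":{"server_type":"gpu"}}}}}'
}
```

Usage: `pin_to_gpu_node lgy-dev-gpu-k8s-node7-105 pengyun pengyun-python-test111`. Patching the pod template triggers a rolling update, so the existing pod is replaced.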

- Create the Deployment from the YAML file

shell> kubectl apply -f pengyun-python-test111.yaml

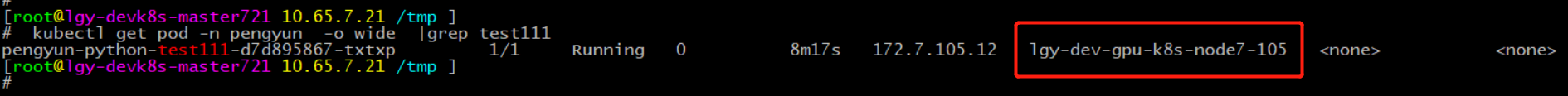
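Instead of polling by hand after `kubectl apply`, you can block until the rollout finishes. This is a minimal sketch: the helper name `wait_rollout` and the 120-second timeout are assumptions.

```shell
# Hypothetical helper: wait_rollout NAMESPACE DEPLOYMENT
# Waits until the Deployment's rollout completes; fails if it takes longer than 120s.
wait_rollout() {
  kubectl rollout status "deployment/$2" -n "$1" --timeout=120s
}
```

Usage: `wait_rollout pengyun pengyun-python-test111`. If no node has a free GPU, the pod stays Pending and this command times out.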

- Check the pod

Confirm that the pod has been scheduled onto the tainted GPU node:

shell> kubectl get pod -n pengyun -o wide | grep test111

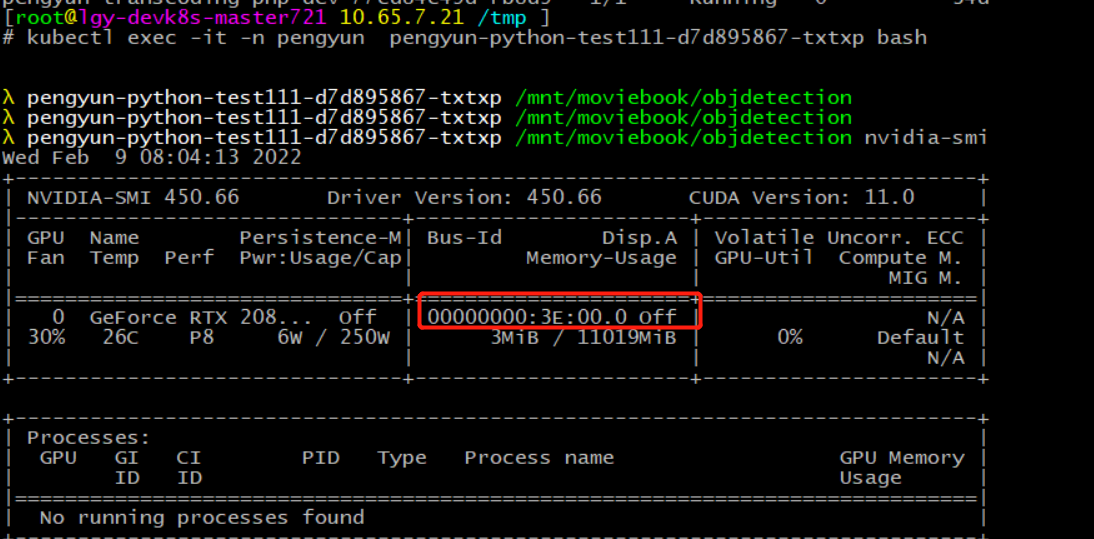
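Rather than grepping, you can select the pods by the label `name: pengyun-python-test111` from the manifest and print only the node each one landed on. The helper name `pod_nodes` is hypothetical.

```shell
# Hypothetical helper: pod_nodes NAMESPACE LABEL_SELECTOR
# Prints "pod-name<TAB>node-name" for every pod matching the selector.
pod_nodes() {
  kubectl get pod -n "$1" -l "$2" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.nodeName}{"\n"}{end}'
}
```

Usage: `pod_nodes pengyun name=pengyun-python-test111`. The node column should read lgy-dev-gpu-k8s-node7-105.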

- Check the GPU inside the pod

shell> kubectl exec -it -n pengyun pengyun-python-test111-d7d895867-txtxp -- bash

Inside the pod, run:

shell> nvidia-smi

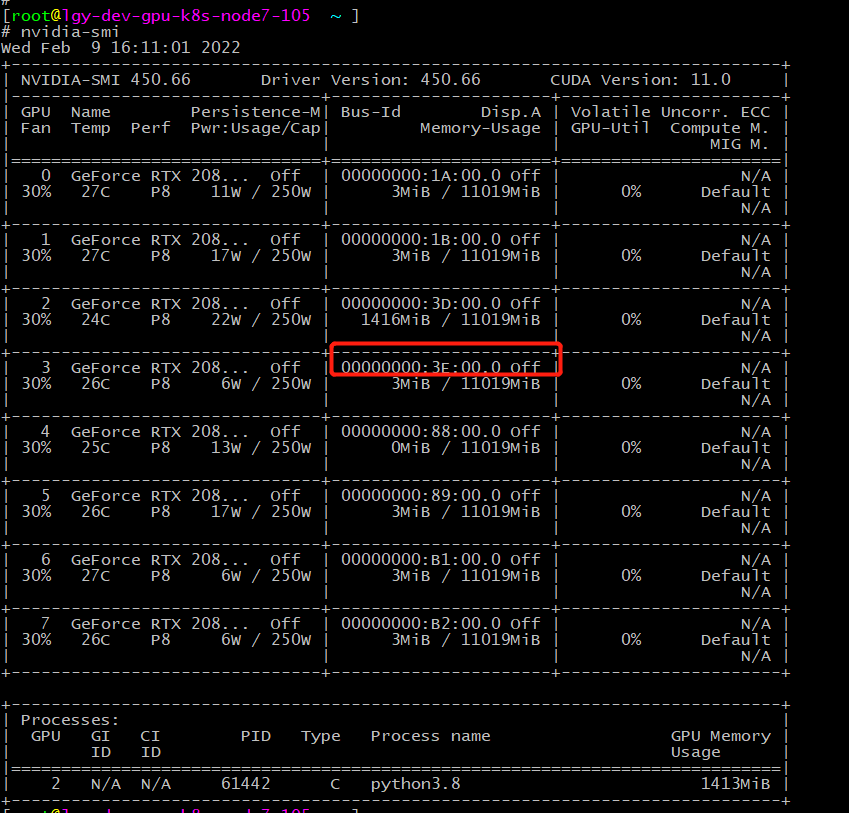
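The same check works without opening an interactive shell or looking up the generated pod name. `kubectl exec` accepts `deploy/NAME` and runs the command in one of that Deployment's pods. The helper name `pod_gpus` is hypothetical. Because the pod requests `nvidia.com/gpu: "1"`, `nvidia-smi -L` should list a single GPU.

```shell
# Hypothetical helper: pod_gpus NAMESPACE DEPLOYMENT
# Lists the GPUs visible inside a pod of the Deployment (one line per GPU).
pod_gpus() {
  kubectl exec -n "$1" "deploy/$2" -- nvidia-smi -L
}
```

Usage: `pod_gpus pengyun pengyun-python-test111`.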

- Check the GPUs on the host
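On the GPU node itself, `nvidia-smi` query mode gives a compact view. It shows per-GPU memory use and the processes holding each GPU, so you can spot which of the eight cards the pod's process occupies. This is a sketch meant to run on the host; the helper name `host_gpu_usage` is hypothetical.

```shell
# Hypothetical helper: host_gpu_usage (run on the GPU node)
# 1. One CSV row per GPU: index, model name, used and total memory.
# 2. One CSV row per compute process currently using a GPU.
host_gpu_usage() {
  nvidia-smi --query-gpu=index,name,memory.used,memory.total --format=csv &&
    nvidia-smi --query-compute-apps=pid,process_name,used_memory --format=csv
}
```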