k8s environment planning

- IP planning

Pod CIDR: 10.0.0.0/16

Service CIDR: 10.255.0.0/16
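
The Service CIDR also fixes two addresses that appear later in this guide: 10.255.0.1, the first address in the range, which goes into the kube-apiserver certificate, and 10.255.0.2, chosen here as the cluster DNS address in kubelet.json. The sketch below is plain bash with hypothetical helper names (ip2int, int2ip, nth_ip, cidrs_overlap). It derives such addresses and checks that the Pod and Service ranges do not overlap. It handles IPv4 only.

```shell
# IPv4 helpers for checking the address plan (hypothetical names, bash only).

# Dotted quad -> 32-bit integer
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# 32-bit integer -> dotted quad
int2ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# nth_ip CIDR N: the N-th address after the network address of CIDR
nth_ip() {
  int2ip $(( $(ip2int "${1%/*}") + $2 ))
}

# cidrs_overlap A B: succeeds if the two CIDR ranges share any address
cidrs_overlap() {
  local alen=${1#*/} blen=${2#*/}
  local len=$(( alen < blen ? alen : blen ))   # compare on the shorter prefix
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip2int "${1%/*}") & mask) == ($(ip2int "${2%/*}") & mask) ))
}
```

For example, `nth_ip 10.255.0.0/16 1` prints 10.255.0.1, and `cidrs_overlap 10.0.0.0/16 10.255.0.0/16 || echo "no overlap"` prints "no overlap".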

- System resource planning

Operating system: CentOS 7.6

Spec: 8 GiB RAM / 4 vCPU / 100 GB disk

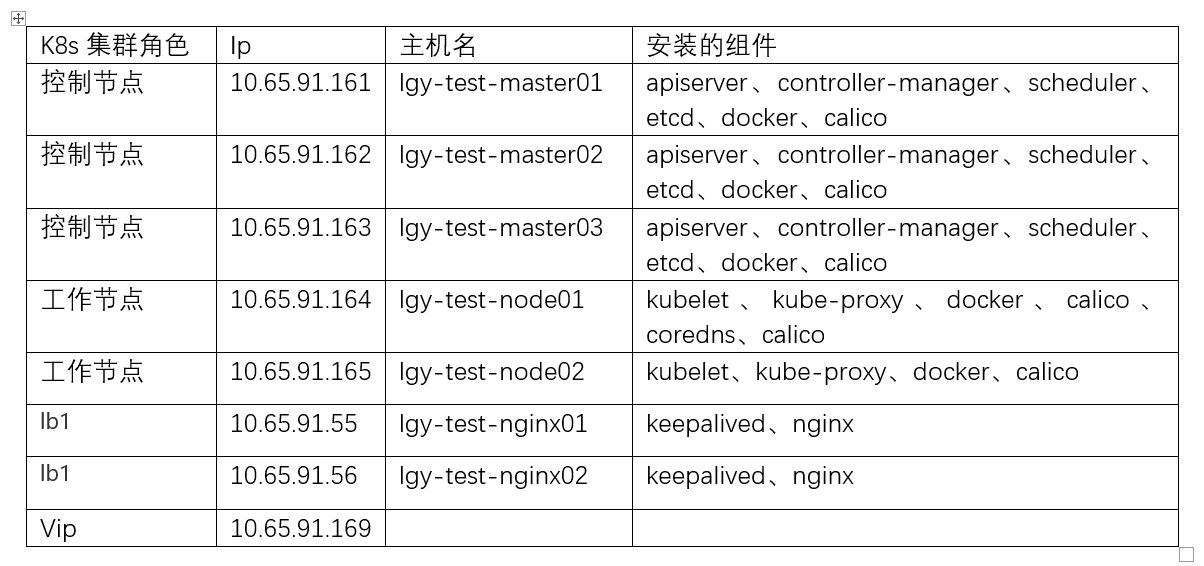

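- Cluster planning

- Architecture diagram

Cluster base configuration

- Configure the hosts file: add the following entries to /etc/hosts on lgy-test-master01, lgy-test-master02, lgy-test-master03, lgy-test-node01 and lgy-test-node02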

10.65.91.161 lgy-test-master01 master01

10.65.91.162 lgy-test-master02 master02

10.65.91.163 lgy-test-master03 master03

10.65.91.164 lgy-test-node01 node01

10.65.91.165 lgy-test-node02 node02
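
A minimal sketch for appending these entries idempotently, so running it twice does not duplicate lines. add_cluster_hosts is a hypothetical helper name. Pass the target file explicitly and run it on every node.

```shell
# Append the cluster's host entries to a hosts file, skipping lines already present.
add_cluster_hosts() {
  local hosts_file="$1" entry
  while IFS= read -r entry; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    grep -qxF -- "$entry" "$hosts_file" || printf '%s\n' "$entry" >> "$hosts_file"
  done <<'EOF'
10.65.91.161 lgy-test-master01 master01
10.65.91.162 lgy-test-master02 master02
10.65.91.163 lgy-test-master03 master03
10.65.91.164 lgy-test-node01 node01
10.65.91.165 lgy-test-node02 node02
EOF
}
```

Usage: `add_cluster_hosts /etc/hosts`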

- Configure passwordless SSH login

It is enough that master01 can log in to every other node without a password.
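
One way to do this from master01, sketched under the assumption that you work as root and that the host names above already resolve. setup_passwordless_ssh and the DRY_RUN switch are illustrative names, not part of any tool. With DRY_RUN=1 the function only prints the commands it would run.

```shell
# Hypothetical helper: create a key pair on master01 (if missing) and copy the
# public key to the other nodes. With DRY_RUN=1 the commands are only printed.
setup_passwordless_ssh() {
  local run="" host
  [ "${DRY_RUN:-0}" = "1" ] && run="echo"
  if [ ! -f "$HOME/.ssh/id_rsa" ]; then
    $run mkdir -p -m 700 "$HOME/.ssh"
    # -N '' = empty passphrase, -f = where to write the key
    $run ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
  fi
  for host in master02 master03 node01 node02; do
    $run ssh-copy-id "root@${host}"   # asks once for each node's root password
  done
}
```

Preview with `DRY_RUN=1 setup_passwordless_ssh`, then run `setup_passwordless_ssh` for real.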

- Stop and disable firewalld on all nodes (masters and workers)

systemctl stop firewalld ; systemctl disable firewalld

- Disable SELinux (masters and workers)

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

- Reboot

reboot

- Upgrade the kernel

# Install the ELRepo repository

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

# List the available kernel packages

yum --disablerepo=* --enablerepo=elrepo-kernel repolist

yum --disablerepo=* --enablerepo=elrepo-kernel list kernel*

# Install the mainline kernel

yum --enablerepo=elrepo-kernel install kernel-ml-devel kernel-ml -y

# Make the first GRUB entry (the newly installed kernel) the default and regenerate the GRUB config

grub2-set-default 0

grub2-mkconfig -o /etc/grub2.cfg

# Remove the old kernel-tools packages

yum remove kernel-tools-libs.x86_64 kernel-tools.x86_64 -y

# Install the matching kernel-ml tools

yum --disablerepo=* --enablerepo=elrepo-kernel install -y kernel-ml-tools.x86_64

# Reboot

reboot

# Check the running kernel version

uname -sr
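
The ipvs.modules script below loads nf_conntrack, which is the module name on kernels 4.19 and newer (older kernels call it nf_conntrack_ipv4), so it is worth confirming that the upgrade took effect. A small sketch, assuming GNU sort -V is available. kernel_at_least is a hypothetical helper.

```shell
# kernel_at_least MIN [VERSION]: succeeds if VERSION (default: the running
# kernel) is >= MIN, using version-aware sorting.
kernel_at_least() {
  local want="$1" have="${2:-$(uname -r)}"
  have="${have%%-*}"   # 5.12.3-1.el7.elrepo.x86_64 -> 5.12.3
  [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n1)" = "$want" ]
}
```

Usage: `kernel_at_least 4.19 || echo "still on an old kernel; check the GRUB default"`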

- Raise the open-file limit (ulimit)

vim /etc/security/limits.conf

# the soft limit must not exceed the hard limit

* soft nofile 65536

* hard nofile 65536

- Disable swap (masters and workers)

# Disable immediately (lasts until the next reboot)

swapoff -a

# Disable permanently: comment out the swap mount line in /etc/fstab

vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0
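
Editing /etc/fstab by hand works. The sketch below does the same non-interactively, assuming the standard fstab layout where the third field is the filesystem type. It keeps a .bak copy and can be re-run safely. comment_out_swap is a hypothetical name.

```shell
# Prefix '#' to every active swap entry in an fstab-style file.
comment_out_swap() {
  local fstab="$1" tmp
  cp -p "$fstab" "${fstab}.bak"   # keep a backup
  tmp="$(mktemp)"
  # Skip lines that are already comments; comment out lines whose 3rd field is "swap".
  awk '!/^[[:space:]]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$fstab" > "$tmp"
  cat "$tmp" > "$fstab"           # rewrite in place, preserving owner and mode
  rm -f "$tmp"
}
```

Usage: `swapoff -a && comment_out_swap /etc/fstab`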

- Set kernel parameters (masters and workers)

# Load the br_netfilter module

modprobe br_netfilter

# Verify that the module is loaded

lsmod |grep br_netfilter

# Write the kernel parameters

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the parameters just written

sysctl -p /etc/sysctl.d/k8s.conf
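
modprobe only loads br_netfilter until the next reboot, and the net.bridge.* keys above only exist while the module is loaded. A minimal sketch that makes it load at boot through systemd's modules-load.d mechanism. The directory argument exists only so the function can be tried on a scratch directory, and persist_br_netfilter is a hypothetical name.

```shell
# Write a modules-load.d entry so br_netfilter is loaded at every boot.
persist_br_netfilter() {
  local dir="${1:-/etc/modules-load.d}"
  mkdir -p "$dir"
  # systemd-modules-load reads one module name per line from *.conf files here.
  printf 'br_netfilter\n' > "$dir/k8s.conf"
}
```

Usage (as root): `persist_br_netfilter`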

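- Configure the Aliyun yum repositories (masters and workers)

yum install lrzsz -y

yum install openssh-clients -y

# Back up the stock repo files

mkdir /root/repo.bak

cd /etc/yum.repos.d/

mv * /root/repo.bak/

# Download Aliyun's CentOS-Base.repo and upload it to /etc/yum.repos.d/ on each host

# Add the Aliyun mirror of the docker-ce repo (yum-config-manager is provided by yum-utils; run yum install -y yum-utils first if it is missing)

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo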

- Configure time synchronization (masters and workers)

# Install ntpdate

yum install ntpdate -y

# Sync once against a public NTP pool

ntpdate cn.pool.ntp.org

# Run the sync hourly as a cron job

crontab -e

0 */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org

# Restart crond

service crond restart
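
crontab -e is interactive. A sketch of a non-interactive alternative: ensure_line (a hypothetical helper) reads the current crontab on stdin and appends the job only if it is not already there, so re-running it is harmless.

```shell
# ensure_line LINE: copy stdin to stdout, appending LINE if it is not present.
ensure_line() {
  local line="$1" existing
  existing="$(cat)"
  [ -n "$existing" ] && printf '%s\n' "$existing"
  grep -qxF -- "$line" <<<"$existing" || printf '%s\n' "$line"
}
```

Usage: `crontab -l 2>/dev/null | ensure_line '0 */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org' | crontab -`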

- Install iptables-services (masters and workers)

# Install iptables

yum install iptables-services -y

# Stop and disable the iptables service

service iptables stop && systemctl disable iptables

# Flush the existing firewall rules

iptables -F

- Enable IPVS: upload ipvs.modules to /etc/sysconfig/modules/ on every machine (masters and workers)

# Contents of ipvs.modules

cat /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"

for kernel_module in ${ipvs_modules}; do

/sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1

if [ $? -eq 0 ]; then   # load the module only if modinfo found it

/sbin/modprobe ${kernel_module}

fi

done

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

- Install the base packages (masters and workers)

yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet rsync

- Install docker-ce (masters and workers)

yum install docker-ce docker-ce-cli containerd.io -y

systemctl start docker && systemctl enable docker.service && systemctl status docker

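- Configure Docker registry mirrors and set Docker's cgroup driver to systemd (masters and workers). Docker defaults to cgroupfs, while the kubelet in this guide is set to systemd ("cgroupDriver" in kubelet.json); the two must match.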

# cat /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://docker.mirrors.ustc.edu.cn","https://8xpk5wnt.mirror.aliyuncs.com"],

"insecure-registries": ["yz.harbor.xxx.com"],

"max-concurrent-downloads": 20,

"live-restore": true,

"max-concurrent-uploads": 10,

"debug": true,

"data-root": "/data/k8s/docker/data",

"log-opts": {

"max-size": "100m",

"max-file": "5"

}

}

systemctl daemon-reload

systemctl restart docker

systemctl status docker

Set up the etcd cluster

# Create the etcd config and certificate directories (on all three masters)

mkdir -p /etc/etcd

mkdir -p /etc/etcd/ssl

# Install the cfssl certificate tools (everything below runs on master01)

mkdir /data/work -p

cd /data/work/

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

# Make the downloaded files executable

chmod +x *

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

# Configure the CA

# CA certificate signing request

# cat ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "k8s",

"OU": "system"

}

],

"ca": {

"expiry": "175200h"

}

}

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

# CA signing policy: the "kubernetes" profile and the certificate lifetime

# cat ca-config.json

{

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "175200h"

}

}

}

}
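
For reference, the expiry value used in ca-csr.json and ca-config.json works out as follows, ignoring leap days: 175200 h / 24 = 7300 days, and 7300 / 365 = 20 years. A trivial bash check with hypothetical helper names:

```shell
# Convert a cfssl expiry in hours to days and to 365-day years (integer division).
hours_to_days()  { echo $(( $1 / 24 )); }
hours_to_years() { echo $(( $1 / 24 / 365 )); }
```

After signing, `openssl x509 -in ca.pem -noout -enddate` prints the actual expiry date of a certificate.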

# Generate the etcd certificate

# etcd CSR: set "hosts" to the IPs of your own etcd nodes

# cat etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"10.65.91.161",

"10.65.91.162",

"10.65.91.163",

"10.65.91.169"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "k8s",

"OU": "system"

}

]

}

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

ls etcd*.pem

etcd-key.pem etcd.pem

# Deploy the etcd cluster: upload etcd-v3.4.13-linux-amd64.tar.gz to /data/work

tar -xf etcd-v3.4.13-linux-amd64.tar.gz

cp -p etcd-v3.4.13-linux-amd64/etcd* /usr/local/bin/

scp -r etcd-v3.4.13-linux-amd64/etcd* master02:/usr/local/bin/

scp -r etcd-v3.4.13-linux-amd64/etcd* master03:/usr/local/bin/

# Create the config file (master01)

# cat etcd.conf

#[Member]

ETCD_NAME="etcd1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://10.65.91.161:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.65.91.161:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.65.91.161:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.65.91.161:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://10.65.91.161:2380,etcd2=https://10.65.91.162:2380,etcd3=https://10.65.91.163:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

# Create the systemd unit file

# cat etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/etc/etcd/etcd.conf

WorkingDirectory=/var/lib/etcd/

ExecStart=/usr/local/bin/etcd \
--cert-file=/etc/etcd/ssl/etcd.pem \
--key-file=/etc/etcd/ssl/etcd-key.pem \
--trusted-ca-file=/etc/etcd/ssl/ca.pem \
--peer-cert-file=/etc/etcd/ssl/etcd.pem \
--peer-key-file=/etc/etcd/ssl/etcd-key.pem \
--peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
--peer-client-cert-auth \
--client-cert-auth

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

cp ca*.pem /etc/etcd/ssl/

cp etcd*.pem /etc/etcd/ssl/

cp etcd.conf /etc/etcd/

cp etcd.service /usr/lib/systemd/system/

for i in master02 master03;do rsync -vaz etcd.conf $i:/etc/etcd/;done

for i in master02 master03;do rsync -vaz etcd*.pem ca*.pem $i:/etc/etcd/ssl/;done

for i in master02 master03;do rsync -vaz etcd.service $i:/usr/lib/systemd/system/;done

# etcd.conf on master02

[root@lgy-test-master02 10.65.91.162 ~ ]

# cat /etc/etcd/etcd.conf

#[Member]

ETCD_NAME="etcd2"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://10.65.91.162:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.65.91.162:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.65.91.162:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.65.91.162:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://10.65.91.161:2380,etcd2=https://10.65.91.162:2380,etcd3=https://10.65.91.163:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

# etcd.conf on master03

[root@lgy-test-master03 10.65.91.163 ~ ]

# cat /etc/etcd/etcd.conf

#[Member]

ETCD_NAME="etcd3"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://10.65.91.163:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.65.91.163:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.65.91.163:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.65.91.163:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://10.65.91.161:2380,etcd2=https://10.65.91.162:2380,etcd3=https://10.65.91.163:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
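
The three etcd.conf files differ only in ETCD_NAME and the local IP, so they can be generated instead of edited by hand. A sketch with a hypothetical render_etcd_conf function, assuming the member names and IPs used above.

```shell
# render_etcd_conf NAME IP: print etcd.conf for one member of this 3-node cluster.
render_etcd_conf() {
  local name="$1" ip="$2"
  local cluster="etcd1=https://10.65.91.161:2380,etcd2=https://10.65.91.162:2380,etcd3=https://10.65.91.163:2380"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

Usage: `render_etcd_conf etcd2 10.65.91.162 > etcd2.conf && rsync -vaz etcd2.conf master02:/etc/etcd/etcd.conf`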

# Start the etcd cluster (on all three masters)

mkdir -p /var/lib/etcd/default.etcd

systemctl daemon-reload

systemctl enable etcd.service

systemctl start etcd.service

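Note: if you start etcd on master01 first, systemctl start appears to hang. The unit is Type=notify, and etcd only reports that it is ready once it can reach a quorum of the cluster, so go on and start etcd on master02; master01 then finishes starting normally.

# Check the status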

systemctl status etcd

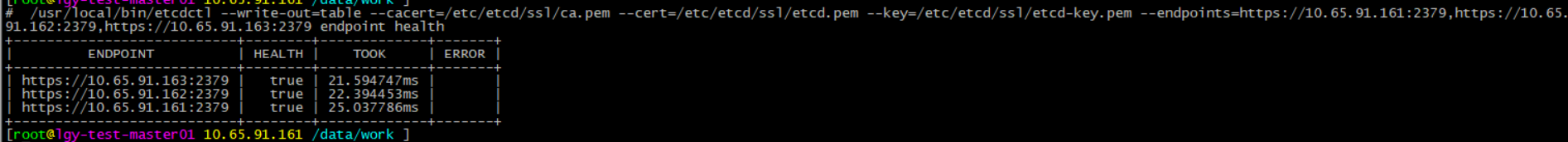

# Check the health of the etcd cluster

export ETCDCTL_API=3

/usr/local/bin/etcdctl --write-out=table --cacert=/etc/etcd/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem --endpoints=https://10.65.91.161:2379,https://10.65.91.162:2379,https://10.65.91.163:2379 endpoint health

# For etcd v3.3.x (e.g. v3.3.13), select the v3 API explicitly with ETCDCTL_API=3

Check whether the etcd cluster is healthy:

ETCDCTL_API=3 etcdctl \
--endpoints=https://10.65.91.161:2379,https://10.65.91.162:2379,https://10.65.91.163:2379 \
--cacert=/etc/etcd/ssl/ca.pem \
--cert=/etc/etcd/ssl/etcd.pem \
--key=/etc/etcd/ssl/etcd-key.pem endpoint health

Show the status of each endpoint as a table:

ETCDCTL_API=3 etcdctl \
-w table --cacert=/etc/etcd/ssl/ca.pem \
--cert=/etc/etcd/ssl/etcd.pem \
--key=/etc/etcd/ssl/etcd-key.pem \
--endpoints=https://10.65.91.161:2379,https://10.65.91.162:2379,https://10.65.91.163:2379 endpoint status

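Install the Kubernetes components

# Download the release binaries

The binary packages are linked from the Kubernetes changelogs on GitHub:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/

# Upload kubernetes-server-linux-amd64.tar.gz to /data/work on master01: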

tar zxvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin/

cp kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/

# Copy kube-apiserver, kube-controller-manager, kube-scheduler and kubectl to master02 and master03

rsync -vaz kube-apiserver kube-controller-manager kube-scheduler kubectl master02:/usr/local/bin/

rsync -vaz kube-apiserver kube-controller-manager kube-scheduler kubectl master03:/usr/local/bin/

# Copy kubelet and kube-proxy to the worker nodes

scp kubelet kube-proxy node01:/usr/local/bin/

scp kubelet kube-proxy node02:/usr/local/bin/

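# Create the config, ssl and log directories (run on master01, and create the same directories on master02 and master03)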

cd /data/work/

mkdir -p /etc/kubernetes/

mkdir -p /etc/kubernetes/ssl

mkdir /var/log/kubernetes

Deploy kube-apiserver

# Create token.csv (format: token,user,uid,"group")

cat > token.csv << EOF

$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
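
The od pipeline above turns 16 random bytes into a 32-character hex token. The sketch below wraps the same command in a hypothetical function so the resulting line can be checked before it is written to token.csv; tr here also strips the newline, which the command substitution above drops anyway.

```shell
# Print one token.csv line for the kubelet-bootstrap user.
make_bootstrap_token_line() {
  local token
  # 16 random bytes -> four 4-byte hex words -> 32 hex characters
  token="$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')"
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}
```

Usage: `make_bootstrap_token_line > token.csv`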

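# Create the CSR and replace the IPs with your own. 10.255.0.1 is the first address of the Service CIDR, which the API server assigns to the in-cluster "kubernetes" Service, so it must be in the certificate.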

# cat kube-apiserver-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"10.65.91.161",

"10.65.91.162",

"10.65.91.163",

"10.65.91.164",

"10.65.91.165",

"10.65.91.169",

"10.255.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "k8s",

"OU": "system"

}

]

}

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

# Create the systemd unit file

# cat kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=etcd.service

Wants=etcd.service

[Service]

ExecStart=/usr/local/bin/kube-apiserver \
--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
--anonymous-auth=false \
--bind-address=10.65.91.161 \
--secure-port=6443 \
--advertise-address=10.65.91.161 \
--insecure-port=0 \
--authorization-mode=Node,RBAC \
--runtime-config=api/all=true \
--enable-bootstrap-token-auth \
--service-cluster-ip-range=10.255.0.0/16 \
--token-auth-file=/etc/kubernetes/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \
--tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \
--client-ca-file=/etc/kubernetes/ssl/ca.pem \
--kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \
--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \
--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
--service-account-issuer=https://kubernetes.default.svc.cluster.local \
--etcd-cafile=/etc/etcd/ssl/ca.pem \
--etcd-certfile=/etc/etcd/ssl/etcd.pem \
--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
--etcd-servers=https://10.65.91.161:2379,https://10.65.91.162:2379,https://10.65.91.163:2379 \
--enable-swagger-ui=true \
--allow-privileged=true \
--apiserver-count=3 \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/var/log/kube-apiserver-audit.log \
--event-ttl=1h \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/var/log/kubernetes \
--v=4

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

# Copy the files into place (no kube-apiserver.conf is needed: all flags are set in the unit file's ExecStart)

cp ca*.pem /etc/kubernetes/ssl

cp kube-apiserver*.pem /etc/kubernetes/ssl/

cp token.csv /etc/kubernetes/

cp kube-apiserver.service /usr/lib/systemd/system/

# Copy the files to master02 and master03

rsync -vaz token.csv master02:/etc/kubernetes/

rsync -vaz token.csv master03:/etc/kubernetes/

rsync -vaz kube-apiserver*.pem master02:/etc/kubernetes/ssl/

rsync -vaz kube-apiserver*.pem master03:/etc/kubernetes/ssl/

rsync -vaz ca*.pem master02:/etc/kubernetes/ssl/

rsync -vaz ca*.pem master03:/etc/kubernetes/ssl/

rsync -vaz kube-apiserver.service master02:/usr/lib/systemd/system/

rsync -vaz kube-apiserver.service master03:/usr/lib/systemd/system/

# Note: on master02 and master03, change the IP in --bind-address and --advertise-address of kube-apiserver.service to that host's own IP

# kube-apiserver.service (master02)

# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=etcd.service

Wants=etcd.service

[Service]

ExecStart=/usr/local/bin/kube-apiserver \
--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
--anonymous-auth=false \
--bind-address=10.65.91.162 \
--secure-port=6443 \
--advertise-address=10.65.91.162 \
--insecure-port=0 \
--authorization-mode=Node,RBAC \
--runtime-config=api/all=true \
--enable-bootstrap-token-auth \
--service-cluster-ip-range=10.255.0.0/16 \
--token-auth-file=/etc/kubernetes/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \
--tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \
--client-ca-file=/etc/kubernetes/ssl/ca.pem \
--kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \
--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \
--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
--service-account-issuer=https://kubernetes.default.svc.cluster.local \
--etcd-cafile=/etc/etcd/ssl/ca.pem \
--etcd-certfile=/etc/etcd/ssl/etcd.pem \
--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
--etcd-servers=https://10.65.91.161:2379,https://10.65.91.162:2379,https://10.65.91.163:2379 \
--enable-swagger-ui=true \
--allow-privileged=true \
--apiserver-count=3 \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/var/log/kube-apiserver-audit.log \
--event-ttl=1h \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/var/log/kubernetes \
--v=4

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

# kube-apiserver.service (master03)

# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=etcd.service

Wants=etcd.service

[Service]

ExecStart=/usr/local/bin/kube-apiserver \
--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
--anonymous-auth=false \
--bind-address=10.65.91.163 \
--secure-port=6443 \
--advertise-address=10.65.91.163 \
--insecure-port=0 \
--authorization-mode=Node,RBAC \
--runtime-config=api/all=true \
--enable-bootstrap-token-auth \
--service-cluster-ip-range=10.255.0.0/16 \
--token-auth-file=/etc/kubernetes/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \
--tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \
--client-ca-file=/etc/kubernetes/ssl/ca.pem \
--kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \
--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \
--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
--service-account-issuer=https://kubernetes.default.svc.cluster.local \
--etcd-cafile=/etc/etcd/ssl/ca.pem \
--etcd-certfile=/etc/etcd/ssl/etcd.pem \
--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
--etcd-servers=https://10.65.91.161:2379,https://10.65.91.162:2379,https://10.65.91.163:2379 \
--enable-swagger-ui=true \
--allow-privileged=true \
--apiserver-count=3 \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/var/log/kube-apiserver-audit.log \
--event-ttl=1h \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/var/log/kubernetes \
--v=4

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target
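
Because the three unit files differ only in --bind-address and --advertise-address, the copies for master02 and master03 can be derived from master01's file with sed. A sketch; render_apiserver_unit is a hypothetical name, and it deliberately leaves --etcd-servers untouched even though that flag also contains 10.65.91.161.

```shell
# render_apiserver_unit SRC IP: print SRC with master01's bind/advertise IP replaced by IP.
render_apiserver_unit() {
  local src="$1" ip="$2"
  sed -e "s/--bind-address=10\.65\.91\.161/--bind-address=${ip}/" \
      -e "s/--advertise-address=10\.65\.91\.161/--advertise-address=${ip}/" "$src"
}
```

Usage: `render_apiserver_unit kube-apiserver.service 10.65.91.162 > kube-apiserver.service.master02`, then copy that file to master02 as /usr/lib/systemd/system/kube-apiserver.service.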

# Start the service on master01, master02 and master03, in that order

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl start kube-apiserver

systemctl status kube-apiserver

# Verify

curl --insecure https://10.65.91.161:6443/

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "Unauthorized",

"reason": "Unauthorized",

"code": 401

}

The 401 above is expected: the request carried no credentials, and anonymous access is disabled (--anonymous-auth=false).

Deploy kubectl

# On master01

#export KUBECONFIG=/etc/kubernetes/admin.conf

#cp /etc/kubernetes/admin.conf /root/.kube/config

# Create the CSR (O=system:masters is the group the built-in cluster-admin binding grants full access to)

# cat admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "system:masters",

"OU": "system"

}

]

}

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

cp admin*.pem /etc/kubernetes/ssl/

# Build the kubeconfig

# Create the kubeconfig file (important): set the cluster entry

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.65.91.161:6443 --kubeconfig=kube.config

# Set the client credentials

kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kube.config

# Set the context

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config

# Switch to the context

kubectl config use-context kubernetes --kubeconfig=kube.config

mkdir ~/.kube -p

cp kube.config ~/.kube/config

# Allow the kube-apiserver certificate (CN=kubernetes) to access the kubelet API

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

# Check the status of the cluster components

kubectl cluster-info

Kubernetes control plane is running at https://10.65.91.161:6443

kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy HTTP probe failed with statuscode: 400

etcd-2 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

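scheduler Healthy ok

Note: the controller-manager 400 above can be ignored. This deprecated check probes plain HTTP on port 10252, while this guide runs kube-controller-manager with --port=0 and serves HTTPS on --secure-port=10252.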

# Copy the kubeconfig to the other masters. First, on master02 and master03:

mkdir /root/.kube/

# Then, on master01, sync the config file:

rsync -vaz /root/.kube/config master02:/root/.kube/

rsync -vaz /root/.kube/config master03:/root/.kube/

Deploy kube-controller-manager

# Everything below runs on master01

# Create the CSR

# cat kube-controller-manager-csr.json

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"10.65.91.161",

"10.65.91.162",

"10.65.91.163",

"10.65.91.169"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "system:kube-controller-manager",

"OU": "system"

}

]

}

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

# Create the kubeconfig for kube-controller-manager

1. Set the cluster entry

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.65.91.161:6443 --kubeconfig=kube-controller-manager.kubeconfig

2. Set the client credentials

kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig

3. Set the context

kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

4. Switch to the context

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

# Create the config file kube-controller-manager.conf

# cat kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="--port=0 \
--secure-port=10252 \
--bind-address=127.0.0.1 \
--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
--service-cluster-ip-range=10.255.0.0/16 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
--allocate-node-cidrs=true \
--cluster-cidr=10.0.0.0/16 \
--experimental-cluster-signing-duration=175200h \
--root-ca-file=/etc/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
--leader-elect=true \
--feature-gates=RotateKubeletServerCertificate=true \
--controllers=*,bootstrapsigner,tokencleaner \
--horizontal-pod-autoscaler-use-rest-clients=true \
--horizontal-pod-autoscaler-sync-period=10s \
--tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \
--tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \
--use-service-account-credentials=true \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/var/log/kubernetes \
--v=2"

# Create the systemd unit file

# cat kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

# Install the files locally and copy them to master02 and master03

cp kube-controller-manager*.pem /etc/kubernetes/ssl/

cp kube-controller-manager.kubeconfig /etc/kubernetes/

cp kube-controller-manager.conf /etc/kubernetes/

cp kube-controller-manager.service /usr/lib/systemd/system/

rsync -vaz kube-controller-manager*.pem master02:/etc/kubernetes/ssl/

rsync -vaz kube-controller-manager*.pem master03:/etc/kubernetes/ssl/

rsync -vaz kube-controller-manager.kubeconfig kube-controller-manager.conf master02:/etc/kubernetes/

rsync -vaz kube-controller-manager.kubeconfig kube-controller-manager.conf master03:/etc/kubernetes/

rsync -vaz kube-controller-manager.service master02:/usr/lib/systemd/system/

rsync -vaz kube-controller-manager.service master03:/usr/lib/systemd/system/

# Start the service (on all three masters)

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl start kube-controller-manager

systemctl status kube-controller-manager

Deploy kube-scheduler

# Everything below runs on master01

# Create the CSR

# cat kube-scheduler-csr.json

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"10.65.91.161",

"10.65.91.162",

"10.65.91.163",

"10.65.91.169"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "system:kube-scheduler",

"OU": "system"

}

]

}

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

# Create the kubeconfig for kube-scheduler

1. Set the cluster entry

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.65.91.161:6443 --kubeconfig=kube-scheduler.kubeconfig

2. Set the client credentials

kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig

3. Set the context

kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

4. Switch to the context

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

# Create the config file kube-scheduler.conf

# cat kube-scheduler.conf

KUBE_SCHEDULER_OPTS="--address=127.0.0.1 \
--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \
--leader-elect=true \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/var/log/kubernetes \
--v=2"

# Create the systemd unit file

# cat kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-scheduler.conf

ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

# Copy the files

cp kube-scheduler*.pem /etc/kubernetes/ssl/

cp kube-scheduler.kubeconfig /etc/kubernetes/

cp kube-scheduler.conf /etc/kubernetes/

cp kube-scheduler.service /usr/lib/systemd/system/

rsync -vaz kube-scheduler*.pem master02:/etc/kubernetes/ssl/

rsync -vaz kube-scheduler*.pem master03:/etc/kubernetes/ssl/

rsync -vaz kube-scheduler.kubeconfig kube-scheduler.conf master02:/etc/kubernetes/

rsync -vaz kube-scheduler.kubeconfig kube-scheduler.conf master03:/etc/kubernetes/

rsync -vaz kube-scheduler.service master02:/usr/lib/systemd/system/

rsync -vaz kube-scheduler.service master03:/usr/lib/systemd/system/

# Start the service (on all three masters)

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl start kube-scheduler

systemctl status kube-scheduler

Deploy CoreDNS

# Upload pause-cordns.tar.gz to node01 and load the images into Docker (on node01)

docker load -i pause-cordns.tar.gz

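Deploy kubelet

# kubelet: the kubelet on each node periodically reports the node's status through the API server's REST API, and the API server stores that status in etcd. The kubelet also watches Pods through the API server and manages the Pods on its node accordingly (create, update, delete).

# The following runs on master01

# Create kubelet-bootstrap.kubeconfig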

cd /data/work/

BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv)

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.65.91.161:6443 --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

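# Create kubelet.json. "cgroupDriver": "systemd" must match Docker's cgroup driver.

# Important: set "address" in kubelet.json to the IP of the node that runs this kubelet (10.65.91.161 below is master01's IP and must be changed); the service is started on each worker node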

# cat kubelet.json

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/etc/kubernetes/ssl/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "10.65.91.161",

"port": 10250,

"readOnlyPort": 10255,

"cgroupDriver": "systemd",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"featureGates": {

"RotateKubeletClientCertificate": true,

"RotateKubeletServerCertificate": true

},

"clusterDomain": "cluster.local.",

"clusterDNS": ["10.255.0.2"]

}
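
Since only "address" changes per worker node, a per-node kubelet.json can be produced with sed. A sketch, assuming the address line keeps the exact `"address": "<ip>",` spacing shown above; render_kubelet_json is a hypothetical helper.

```shell
# render_kubelet_json SRC IP: print SRC with the "address" value replaced by IP.
render_kubelet_json() {
  local src="$1" ip="$2"
  sed -E 's/("address": ")[0-9.]+"/\1'"$ip"'"/' "$src"
}
```

Usage: `render_kubelet_json kubelet.json 10.65.91.164 > kubelet.json.node01 && scp kubelet.json.node01 node01:/etc/kubernetes/kubelet.json`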

# Create the systemd unit file

# cat kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/usr/local/bin/kubelet \
--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \
--cert-dir=/etc/kubernetes/ssl \
--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
--config=/etc/kubernetes/kubelet.json \
--network-plugin=cni \
--pod-infra-container-image=k8s.gcr.io/pause:3.2 \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/var/log/kubernetes \
--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

# On node01 and node02

mkdir /etc/kubernetes/ssl -p

# On master01, copy the files to the expected locations on the worker nodes

scp kubelet-bootstrap.kubeconfig kubelet.json node01:/etc/kubernetes/

scp kubelet-bootstrap.kubeconfig kubelet.json node02:/etc/kubernetes/

scp ca.pem node01:/etc/kubernetes/ssl/

scp ca.pem node02:/etc/kubernetes/ssl/

scp kubelet.service node01:/usr/lib/systemd/system/

scp kubelet.service node02:/usr/lib/systemd/system/

# Start kubelet (on node01 and node02)

mkdir /var/lib/kubelet

mkdir /var/log/kubernetes

systemctl daemon-reload

systemctl enable kubelet

systemctl start kubelet

systemctl status kubelet

# Once kubelet is running, approve its bootstrap request on master01. The command below (run on master01) lists the CSR sent by a worker node; after both nodes are started there will be two CSRs, and one is shown here as an example

kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-SY6gROGEmH0qVZhMVhJKKWN3UaWkKKQzV8dopoIO9Uc 87s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

# Approve

kubectl certificate approve node-csr-SY6gROGEmH0qVZhMVhJKKWN3UaWkKKQzV8dopoIO9Uc

# Check again

kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-SY6gROGEmH0qVZhMVhJKKWN3UaWkKKQzV8dopoIO9Uc 87s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued
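
With several nodes joining, approving CSRs one by one gets tedious. A sketch that extracts the names of Pending CSRs from kubectl get csr output, assuming the CONDITION column is last as shown above; pending_csrs is a hypothetical helper.

```shell
# Read `kubectl get csr` table output on stdin; print names whose last column is Pending.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'   # NR > 1 skips the header row
}
```

Usage: `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve`. Check the REQUESTOR column first, since this approves every pending request.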

# List the nodes

kubectl get nodes

lgy-test-node01 NotReady <none> 3d21h v1.20.7

lgy-test-node02 NotReady <none> 3d19h v1.20.7

# Note: STATUS NotReady means that no network plugin has been installed yet

Deploy kube-proxy

# Create the CSR

# cat kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "k8s",

"OU": "system"

}

]

}

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

# Create the kubeconfig file

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.65.91.161:6443 --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

#Create the kube-proxy configuration file

#Note: set bindAddress, healthzBindAddress and metricsBindAddress to each worker node's own IP, then start the service on each worker node. clusterCIDR is the Pod CIDR from the IP plan (10.0.0.0/16).

# cat kube-proxy.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 10.65.91.161 #change to this node's IP
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.0.0.0/16 #Pod CIDR
healthzBindAddress: 10.65.91.161:10256 #change to this node's IP
kind: KubeProxyConfiguration
metricsBindAddress: 10.65.91.161:10249 #change to this node's IP
mode: "ipvs"
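bindAddress, healthzBindAddress and metricsBindAddress differ from node to node, so generating kube-proxy.yaml per node can be less error-prone than hand-editing a copied file. A sketch, assuming the same field values as above and the Pod CIDR 10.0.0.0/16 from the IP plan as the default clusterCIDR; `render_kube_proxy_conf` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a kube-proxy.yaml for one worker node.
# $1 = the node's own IP (used for all three per-node addresses)
# $2 = clusterCIDR, defaulting to the Pod CIDR 10.0.0.0/16
render_kube_proxy_conf() {
  local node_ip=$1 cluster_cidr=${2:-10.0.0.0/16}
  cat <<EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: ${node_ip}
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: ${cluster_cidr}
healthzBindAddress: ${node_ip}:10256
kind: KubeProxyConfiguration
metricsBindAddress: ${node_ip}:10249
mode: "ipvs"
EOF
}
```

For example, on master01: `render_kube_proxy_conf 10.65.91.164 | ssh node01 'cat > /etc/kubernetes/kube-proxy.yaml'`. Once kube-proxy is running, `curl http://10.65.91.164:10249/proxyMode` on that node should print `ipvs`. If it prints `iptables`, kube-proxy may have fallen back because the IPVS kernel modules were not available.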

#Create the systemd unit file

# cat kube-proxy.service

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.yaml \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

#Copy the files to node01 and node02

scp kube-proxy.kubeconfig kube-proxy.yaml node01:/etc/kubernetes/

scp kube-proxy.kubeconfig kube-proxy.yaml node02:/etc/kubernetes/

scp kube-proxy.service node01:/usr/lib/systemd/system/

scp kube-proxy.service node02:/usr/lib/systemd/system/

#Start the service (on both node01 and node02)

mkdir -p /var/lib/kube-proxy

systemctl daemon-reload

systemctl enable kube-proxy

systemctl start kube-proxy

systemctl status kube-proxy

Deploy the calico component

#Load the offline image archives

#Upload cni.tar.gz and node.tar.gz to node01 and node02, then load them manually

docker load -i cni.tar.gz

docker load -i node.tar.gz

#Upload calico.yaml to the /data/work directory on master01

# cat calico.yaml

# Calico Version v3.5.3
# https://docs.projectcalico.org/v3.5/releases#v3.5.3
# This manifest includes the following component versions:
#   calico/node:v3.5.3
#   calico/cni:v3.5.3

# This ConfigMap is used to configure a self-hosted Calico installation.
kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  # Typha is disabled.
  typha_service_name: "none"
  # Configure the Calico backend to use.
  calico_backend: "bird"
  # Configure the MTU to use
  veth_mtu: "1440"
  # The CNI network configuration to install on each node. The special
  # values in this config will be automatically populated.
  cni_network_config: |-
    {
      "name": "k8s-pod-network",
      "cniVersion": "0.3.0",
      "plugins": [
        {
          "type": "calico",
          "log_level": "info",
          "datastore_type": "kubernetes",
          "nodename": "__KUBERNETES_NODE_NAME__",
          "mtu": __CNI_MTU__,
          "ipam": {
            "type": "host-local",
            "subnet": "usePodCidr"
          },
          "policy": {
            "type": "k8s"
          },
          "kubernetes": {
            "kubeconfig": "__KUBECONFIG_FILEPATH__"
          }
        },
        {
          "type": "portmap",
          "snat": true,
          "capabilities": {"portMappings": true}
        }
      ]
    }
---
# This manifest installs the calico/node container, as well
# as the Calico CNI plugins and network config on
# each master and worker node in a Kubernetes cluster.
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: calico-node
      annotations:
        # This, along with the CriticalAddonsOnly toleration below,
        # marks the pod as a critical add-on, ensuring it gets
        # priority scheduling and that its resources are reserved
        # if it ever gets evicted.
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      nodeSelector:
        beta.kubernetes.io/os: linux
      hostNetwork: true
      tolerations:
        # Make sure calico-node gets scheduled on all nodes.
        - effect: NoSchedule
          operator: Exists
        # Mark the pod as a critical add-on for rescheduling.
        - key: CriticalAddonsOnly
          operator: Exists
        - effect: NoExecute
          operator: Exists
      serviceAccountName: calico-node
      # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force
      # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.
      terminationGracePeriodSeconds: 0
      initContainers:
        # This container installs the Calico CNI binaries
        # and CNI network config file on each node.
        - name: install-cni
          image: xianchao/cni:v3.5.3
          command: ["/install-cni.sh"]
          env:
            # Name of the CNI config file to create.
            - name: CNI_CONF_NAME
              value: "10-calico.conflist"
            # The CNI network config to install on each node.
            - name: CNI_NETWORK_CONFIG
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: cni_network_config
            # Set the hostname based on the k8s node name.
            - name: KUBERNETES_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # CNI MTU Config variable
            - name: CNI_MTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # Prevents the container from sleeping forever.
            - name: SLEEP
              value: "false"
          volumeMounts:
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
      containers:
        # Runs calico/node container on each Kubernetes node. This
        # container programs network policy and routes on each
        # host.
        - name: calico-node
          image: xianchao/node:v3.5.3
          env:
            # Use Kubernetes API as the backing datastore.
            - name: DATASTORE_TYPE
              value: "kubernetes"
            # Wait for the datastore.
            - name: WAIT_FOR_DATASTORE
              value: "true"
            # Set based on the k8s node name.
            - name: NODENAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # Choose the backend to use.
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            # Cluster type to identify the deployment type
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            # Auto-detect the BGP IP address.
            - name: IP
              value: "autodetect"
            - name: IP_AUTODETECTION_METHOD
              value: "can-reach=192.168.40.131"
            # Enable IPIP
            - name: CALICO_IPV4POOL_IPIP
              value: "Always"
            # Set MTU for tunnel device used if ipip is enabled
            - name: FELIX_IPINIPMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # The default IPv4 pool to create on startup if none exists. Pod IPs will be
            # chosen from this range. Changing this value after installation will have
            # no effect. This should fall within `--cluster-cidr`.
            - name: CALICO_IPV4POOL_CIDR
              value: "10.0.0.0/16"
            # Disable file logging so `kubectl logs` works.
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            # Set Felix endpoint to host default action to ACCEPT.
            - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
              value: "ACCEPT"
            # Disable IPv6 on Kubernetes.
            - name: FELIX_IPV6SUPPORT
              value: "false"
            # Set Felix logging to "info"
            - name: FELIX_LOGSEVERITYSCREEN
              value: "info"
            - name: FELIX_HEALTHENABLED
              value: "true"
          securityContext:
            privileged: true
          resources:
            requests:
              cpu: 250m
          livenessProbe:
            httpGet:
              path: /liveness
              port: 9099
              host: localhost
            periodSeconds: 10
            initialDelaySeconds: 10
            failureThreshold: 6
          readinessProbe:
            exec:
              command:
              - /bin/calico-node
              - -bird-ready
              - -felix-ready
            periodSeconds: 10
          volumeMounts:
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - mountPath: /run/xtables.lock
              name: xtables-lock
              readOnly: false
            - mountPath: /var/run/calico
              name: var-run-calico
              readOnly: false
            - mountPath: /var/lib/calico
              name: var-lib-calico
              readOnly: false
      volumes:
        # Used by calico/node.
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: var-run-calico
          hostPath:
            path: /var/run/calico
        - name: var-lib-calico
          hostPath:
            path: /var/lib/calico
        - name: xtables-lock
          hostPath:
            path: /run/xtables.lock
            type: FileOrCreate
        # Used to install CNI.
        - name: cni-bin-dir
          hostPath:
            path: /opt/cni/bin
        - name: cni-net-dir
          hostPath:
            path: /etc/cni/net.d
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-node
  namespace: kube-system
---
# Create all the CustomResourceDefinitions needed for
# Calico policy and networking mode.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: felixconfigurations.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: FelixConfiguration
    plural: felixconfigurations
    singular: felixconfiguration
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: bgppeers.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: BGPPeer
    plural: bgppeers
    singular: bgppeer
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: bgpconfigurations.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: BGPConfiguration
    plural: bgpconfigurations
    singular: bgpconfiguration
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ippools.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: IPPool
    plural: ippools
    singular: ippool
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: hostendpoints.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: HostEndpoint
    plural: hostendpoints
    singular: hostendpoint
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: clusterinformations.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: ClusterInformation
    plural: clusterinformations
    singular: clusterinformation
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: globalnetworkpolicies.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: GlobalNetworkPolicy
    plural: globalnetworkpolicies
    singular: globalnetworkpolicy
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: globalnetworksets.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: GlobalNetworkSet
    plural: globalnetworksets
    singular: globalnetworkset
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: networkpolicies.crd.projectcalico.org
spec:
  scope: Namespaced
  group: crd.projectcalico.org
  version: v1
  names:
    kind: NetworkPolicy
    plural: networkpolicies
    singular: networkpolicy

---

# Include a clusterrole for the calico-node DaemonSet,
# and bind it to the calico-node serviceaccount.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: calico-node
rules:
  # The CNI plugin needs to get pods, nodes, and namespaces.
  - apiGroups: [""]
    resources:
      - pods
      - nodes
      - namespaces
    verbs:
      - get
  - apiGroups: [""]
    resources:
      - endpoints
      - services
    verbs:
      # Used to discover service IPs for advertisement.
      - watch
      - list
      # Used to discover Typhas.
      - get
  - apiGroups: [""]
    resources:
      - nodes/status
    verbs:
      # Needed for clearing NodeNetworkUnavailable flag.
      - patch
      # Calico stores some configuration information in node annotations.
      - update
  # Watch for changes to Kubernetes NetworkPolicies.
  - apiGroups: ["networking.k8s.io"]
    resources:
      - networkpolicies
    verbs:
      - watch
      - list
  # Used by Calico for policy information.
  - apiGroups: [""]
    resources:
      - pods
      - namespaces
      - serviceaccounts
    verbs:
      - list
      - watch
  # The CNI plugin patches pods/status.
  - apiGroups: [""]
    resources:
      - pods/status
    verbs:
      - patch
  # Calico monitors various CRDs for config.
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - globalfelixconfigs
      - felixconfigurations
      - bgppeers
      - globalbgpconfigs
      - bgpconfigurations
      - ippools
      - globalnetworkpolicies
      - globalnetworksets
      - networkpolicies
      - clusterinformations
      - hostendpoints
    verbs:
      - get
      - list
      - watch
  # Calico must create and update some CRDs on startup.
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - ippools
      - felixconfigurations
      - clusterinformations
    verbs:
      - create
      - update
  # Calico stores some configuration information on the node.
  - apiGroups: [""]
    resources:
      - nodes
    verbs:
      - get
      - list
      - watch
  # These permissions are only required for upgrade from v2.6, and can
  # be removed after upgrade or on fresh installations.
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - bgpconfigurations
      - bgppeers
    verbs:
      - create
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: calico-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: calico-node
subjects:
- kind: ServiceAccount
  name: calico-node
  namespace: kube-system

---

#Apply the manifest

kubectl apply -f calico.yaml

#Check the calico Pods

kubectl get pods -n kube-system

calico-node-6ljgc 1/1 Running 0 10s

calico-node-7r69n 1/1 Running 0 11s

#Check the nodes

kubectl get nodes

lgy-test-node01 Ready <none> 3d21h v1.20.7

lgy-test-node02 Ready <none> 3d19h v1.20.7
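kube-proxy's clusterCIDR and Calico's CALICO_IPV4POOL_CIDR are both meant to be the Pod range from the IP plan (10.0.0.0/16). That range must not overlap the Service range (10.255.0.0/16) or the node addresses (10.65.91.x). A small pure-bash sketch for checking two ranges before applying manifests; the helper names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for IPv4 CIDR checks (bash arithmetic only).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit status 0) if the two CIDR ranges share any address.
# Two prefixes overlap exactly when they agree on the bits of the
# shorter (wider) prefix.
cidrs_overlap() {
  local ip1=${1%/*} len1=${1#*/} ip2=${2%/*} len2=${2#*/}
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local n1 n2
  n1=$(ip_to_int "$ip1")
  n2=$(ip_to_int "$ip2")
  (( (n1 & mask) == (n2 & mask) ))
}
```

`cidrs_overlap 10.0.0.0/16 10.255.0.0/16` fails (no overlap, as intended). By contrast, `cidrs_overlap 10.65.91.0/16 10.65.91.161/32` succeeds, which shows that a /16 built from the node prefix would also cover master01's own address.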

Deploy the coredns component

#CoreDNS manifest

# cat coredns.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.7.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.255.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

#Apply the manifest

kubectl apply -f coredns.yaml

#Check that the coredns Pod is running

kubectl get pods -n kube-system

coredns-7bf4bd64bd-dnck5 1/1 Running 0 3d2h

#Check the Service

kubectl get svc -n kube-system

kube-dns ClusterIP 10.255.0.2 <none> 53/UDP,53/TCP,9153/TCP 3d20h

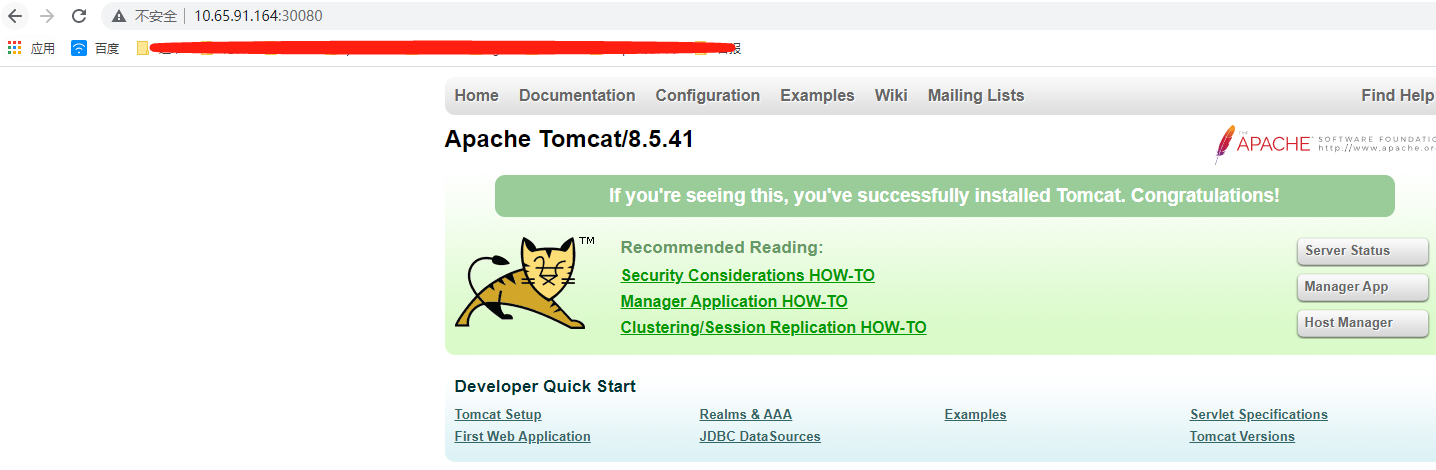

Test the k8s cluster by deploying a tomcat service

#Upload tomcat.tar.gz and busybox-1-28.tar.gz to node01, then load them manually

docker load -i tomcat.tar.gz

docker load -i busybox-1-28.tar.gz

#Prepare tomcat.yaml

# cat tomcat.yaml

apiVersion: v1 #Pods belong to the Kubernetes core API group, v1
kind: Pod #the resource being created is a Pod
metadata: #metadata
  name: demo-pod #Pod name
  namespace: default #namespace the Pod belongs to
  labels:
    app: myapp #Pod label
    env: dev #Pod label
spec:
  containers: #container list; it is a list of objects, so several "- name" entries can follow
  - name: tomcat-pod-java #container name
    ports:
    - containerPort: 8080
    image: tomcat:8.5-jre8-alpine #image used by the container
    imagePullPolicy: IfNotPresent
  - name: busybox
    image: busybox:latest
    command: #command is a list, so each argument below starts with a dash
    - "/bin/sh"
    - "-c"
    - "sleep 3600"

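#Create the Pod from tomcat.yaml

kubectl apply -f tomcat.yaml

#Check the Pod (RESTARTS grows by about one per hour, because the busybox container exits after sleep 3600 and is restarted)

kubectl get pods

demo-pod 2/2 Running 90 3d19h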

#Prepare the Service manifest

# cat tomcat-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: tomcat
spec:
  type: NodePort
  ports:
    - port: 8080
      nodePort: 30080
  selector:
    app: myapp
    env: dev

#Create the Service

kubectl apply -f tomcat-service.yaml

#Check the Service

kubectl get svc

tomcat NodePort 10.255.246.19 <none> 8080:30080/TCP 3d20h

#Verify that CoreDNS works

kubectl run busybox --image busybox:1.28 --restart=Never --rm -it -- sh

ping www.baidu.com

#The ping above shows that the Pod can reach the external network

# nslookup kubernetes.default.svc.cluster.local

Server: 10.255.0.2

Address 1: 10.255.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default.svc.cluster.local

Address 1: 10.255.0.1 kubernetes.default.svc.cluster.local

# nslookup tomcat.default.svc.cluster.local

Server: 10.255.0.2

Address 1: 10.255.0.2 kube-dns.kube-system.svc.cluster.local

Name: tomcat.default.svc.cluster.local

Address 1: 10.255.246.19 tomcat.default.svc.cluster.local

#Note: 10.255.0.2 is the ClusterIP of CoreDNS, so CoreDNS is configured correctly. Names of in-cluster Services are resolved through CoreDNS.
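The same DNS check can also be run non-interactively, for example from a script, by parsing busybox's nslookup output. A sketch, assuming busybox 1.28's output format shown above (the answer follows the `Name:` line as `Address 1: <ip> <name>`); `nslookup_answer` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read busybox 1.28 nslookup output on stdin and print
# the first address listed after the "Name:" line. The "Server:" block that
# precedes it (the DNS server's own address) is skipped.
nslookup_answer() {
  awk '/^Name:/ { in_answer = 1; next }
       in_answer && /^Address/ { print $3; exit }'
}
```

Usage sketch: `kubectl run dns-test --image=busybox:1.28 --restart=Never --rm -i -- nslookup tomcat.default.svc.cluster.local | nslookup_answer` should print the same address as `kubectl get svc tomcat -o jsonpath='{.spec.clusterIP}'` (10.255.246.19 in the output above).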

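#Open port 30080 on node01 in a browser, e.g. http://10.65.91.164:30080

Install keepalived + nginx for kube-apiserver high availability

#Run the following on lb1 and lb2 as well as nginx01 and nginx02 to install Nginx + Keepalived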

rpm -Uvh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm

yum install nginx keepalived nginx-mod-stream -y

#Edit nginx.conf on both the primary and the backup nginx; the content is identical

# cat /etc/nginx/nginx.conf

# For more information on configuration, see:

# * Official English Documentation: http://nginx.org/en/docs/

# * Official Russian Documentation: http://nginx.org/ru/docs/

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 4096;

include /etc/nginx/mime.types;

default_type application/octet-stream;

# Load modular configuration files from the /etc/nginx/conf.d directory.

# See http://nginx.org/en/docs/ngx_core_module.html#include

# for more information.

include /etc/nginx/conf.d/*.conf;

server {

listen 80;

listen [::]:80;

server_name _;

root /usr/share/nginx/html;

# Load configuration files for the default server block.

include /etc/nginx/default.d/*.conf;

error_page 404 /404.html;

location = /404.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

# Settings for a TLS enabled server.

#

# server {

# listen 443 ssl http2;

# listen [::]:443 ssl http2;

# server_name _;

# root /usr/share/nginx/html;

#

# ssl_certificate "/etc/pki/nginx/server.crt";

# ssl_certificate_key "/etc/pki/nginx/private/server.key";

# ssl_session_cache shared:SSL:1m;

# ssl_session_timeout 10m;

# ssl_ciphers HIGH:!aNULL:!MD5;

# ssl_prefer_server_ciphers on;

#

# # Load configuration files for the default server block.

# include /etc/nginx/default.d/*.conf;

#

# error_page 404 /404.html;

# location = /40x.html {

# }

#

# error_page 500 502 503 504 /50x.html;

# location = /50x.html {

# }

# }

}

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 10.65.91.161:6443 max_fails=3 fail_timeout=30s;

server 10.65.91.162:6443 max_fails=3 fail_timeout=30s;

server 10.65.91.163:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 16443;

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass k8s-apiserver;

}

}

#Start nginx (on lb1 and lb2)

nginx

#keepalived configuration

#Primary keepalived configuration (MASTER)

# cat /etc/keepalived/keepalived.conf

global_defs {

router_id master01

}

vrrp_script check_nginx {

script /etc/keepalived/check_nginx.sh

interval 3

}

vrrp_instance VI_1 {

state MASTER

interface ens192

virtual_router_id 93

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.65.91.169

}

track_script {

check_nginx

}

}

#Health-check script

# cat /etc/keepalived/check_nginx.sh

#!/bin/bash

count=`ps -C nginx --no-header |wc -l`

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

#Make the script executable

chmod +x /etc/keepalived/check_nginx.sh

#Backup keepalived configuration (BACKUP)

# cat /etc/keepalived/keepalived.conf

global_defs {

router_id master02

}

vrrp_script check_nginx {

script /etc/keepalived/check_nginx.sh

interval 3

}

vrrp_instance VI_1 {

state BACKUP

interface ens192

virtual_router_id 93

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.65.91.169

}

track_script {

check_nginx

}

}

#Health-check script

# cat /etc/keepalived/check_nginx.sh

#!/bin/bash

count=`ps -C nginx --no-header |wc -l`

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

#Make the script executable

chmod +x /etc/keepalived/check_nginx.sh

#Start the services (on lb1 and lb2)

systemctl daemon-reload

systemctl start keepalived

systemctl enable nginx keepalived

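#Test VIP failover: stop nginx on the MASTER node. nginx was started directly with the nginx command above rather than through systemd, so stop it the same way.

nginx -s stop

#Then check whether the nginx VIP fails over to the BACKUP node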
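To see which load balancer currently holds the VIP, check the interface addresses on lb1 and lb2. A sketch, assuming the interface name ens192 from the keepalived configuration and iproute2's `ip -4 addr show` output format; `has_vip` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ip -4 addr show` output on stdin and succeed
# if any "inet" line carries the given address (the prefix length after
# the slash is ignored).
has_vip() {
  awk -v vip="$1" '
    $1 == "inet" { split($2, parts, "/"); if (parts[1] == vip) found = 1 }
    END { exit found ? 0 : 1 }'
}
```

Usage: `ip -4 addr show ens192 | has_vip 10.65.91.169 && echo "VIP is on $(hostname)"`. After nginx is stopped on the MASTER, the check script stops keepalived there and this command should start succeeding on the BACKUP.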

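#At this point all Worker Node components still connect directly to master01. Unless they are switched to the VIP behind the load balancer, the master remains a single point of failure. The next step is therefore to update the component configuration files on every Worker Node (the nodes listed by kubectl get node), replacing 10.65.91.161:6443 with the VIP endpoint 10.65.91.169:16443. Run on every Worker Node: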

cd /root/.kube

sed -i 's#10.65.91.161:6443#10.65.91.169:16443#' config

cd /etc/kubernetes

sed -i 's#10.65.91.161:6443#10.65.91.169:16443#' kubelet-bootstrap.kubeconfig

sed -i 's#10.65.91.161:6443#10.65.91.169:16443#' kubelet.kubeconfig

sed -i 's#10.65.91.161:6443#10.65.91.169:16443#' kube-proxy.kubeconfig

#Restart kubelet and kube-proxy

systemctl restart kubelet kube-proxy
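It can be worth confirming that no file on a worker node still points at the single master. A sketch, assuming the four file paths edited above; `stale_endpoints` is a hypothetical helper built on `grep -l`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every given file that still contains the OLD
# endpoint string. No output means all files were switched to the VIP.
# Missing files are skipped (grep's error messages are discarded).
stale_endpoints() {
  local old=$1
  shift
  grep -lF -- "$old" "$@" 2> /dev/null
  return 0
}
```

Usage on each worker node: `stale_endpoints 10.65.91.161:6443 /root/.kube/config /etc/kubernetes/kubelet-bootstrap.kubeconfig /etc/kubernetes/kubelet.kubeconfig /etc/kubernetes/kube-proxy.kubeconfig`.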

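#Next, kubelet, kube-proxy and calico can also be installed on the master nodes (master01, master02 and master03) so that they join the cluster as nodes

#Set the ROLES of the newly joined master nodes to master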

kubectl label no lgy-test-master01 kubernetes.io/role=master

kubectl label no lgy-test-master02 kubernetes.io/role=master

kubectl label no lgy-test-master03 kubernetes.io/role=master

#Prevent Pods from being scheduled on the master nodes

kubectl taint node lgy-test-master01 node-role.kubernetes.io/master=:NoSchedule

kubectl taint node lgy-test-master02 node-role.kubernetes.io/master=:NoSchedule

kubectl taint node lgy-test-master03 node-role.kubernetes.io/master=:NoSchedule
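The six label and taint commands above can be folded into one loop. A sketch, assuming the node names shown by `kubectl get node`. `mark_masters` and the `RUN=echo` dry-run switch are hypothetical, while `--overwrite` is a standard flag of both `kubectl label` and `kubectl taint` that lets the loop be re-run without "already has a value" errors:

```shell
#!/usr/bin/env bash
# Hypothetical helper: label each given node with role "master" and taint it
# NoSchedule so ordinary Pods are not scheduled there.
# Set RUN=echo to print the kubectl commands instead of running them.
mark_masters() {
  local run=${RUN:-} node
  for node in "$@"; do
    $run kubectl label node "$node" kubernetes.io/role=master --overwrite
    $run kubectl taint node "$node" node-role.kubernetes.io/master=:NoSchedule --overwrite
  done
}
```

Usage: `mark_masters lgy-test-master01 lgy-test-master02 lgy-test-master03`.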

#Final node list

# kubectl get node

NAME STATUS ROLES AGE VERSION

lgy-test-master01 Ready master 3d19h v1.20.7

lgy-test-master02 Ready master 3d19h v1.20.7

lgy-test-master03 Ready master 3d19h v1.20.7

lgy-test-node01 Ready <none> 3d22h v1.20.7

lgy-test-node02 Ready <none> 3d19h v1.20.7

Troubleshooting

- Rancher 2.4.11 reports "Alert: Component controller-manager is unhealthy."

#Symptom: Rancher 2.4.11 shows "Alert: Component controller-manager is unhealthy."; checking with kubectl:

kubectl get componentstatuses

controller-manager Unhealthy HTTP probe failed with statuscode: 400

#Fix: log in to each of the three master nodes and perform the same steps on all of them

cd /etc/kubernetes

vim kube-controller-manager.conf

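Comment out the following two parameters. With --port=0 the insecure port 10252 is disabled and --secure-port=10252 moves the HTTPS port onto 10252, so the plain-HTTP health probe used by componentstatuses gets a 400 response.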

#--port=0

#--secure-port=10252

#Restart kube-controller-manager and kubelet

systemctl restart kube-controller-manager

systemctl restart kubelet

#Run kubectl get componentstatuses again

controller-manager Healthy ok

scheduler Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

#Refresh Rancher; the problem is resolved
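Because the same edit has to be made on all three masters, a quick check that no active line still sets either flag can help. A sketch, assuming /etc/kubernetes/kube-controller-manager.conf is a shell-style options file in which commented lines start with `#`; `offending_cm_flags` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "LINE: text" for every non-comment line in the
# given file that still sets --port=0 or --secure-port=10252.
offending_cm_flags() {
  awk '!/^[ \t]*#/ && /--(port=0|secure-port=10252)([^0-9]|$)/ {
         print FNR ": " $0
       }' "$1"
}
```

`offending_cm_flags /etc/kubernetes/kube-controller-manager.conf` prints each remaining match with its line number. No output means both flags are commented out. With the insecure port restored, `curl -s http://127.0.0.1:10252/healthz` on the master should return `ok`.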