Deploying a Kubernetes cluster with kubeadm

https://kubernetes.io/zh/docs/tasks/

1: Prepare the environment

yum install vim wget bash-completion lrzsz nmap nc tree htop iftop net-tools ipvsadm -y

2: Set the hostname and disable the firewall

hostnamectl set-hostname master

systemctl disable firewalld.service

systemctl stop firewalld.service

setenforce 0

3: Disable swap

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab
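The `sed` command above prefixes every line that contains `swap` with `#`, so running it a second time stacks another `#` on lines that are already commented. A minimal sketch of a repeatable variant follows, assuming GNU sed; the function name `comment_out_swap` is illustrative and not part of the original procedure.

```shell
# Comment out active swap entries in an fstab-style file.
# Lines that already start with '#' are skipped, so the function
# can be run repeatedly without stacking comment markers.
# Assumes GNU sed (for -i and -E).
comment_out_swap() {
    fstab_file="$1"
    sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab_file"
}

# Usage on a node (try it on a copy first):
#   cp /etc/fstab /tmp/fstab.test && comment_out_swap /tmp/fstab.test
#   comment_out_swap /etc/fstab
```

Only lines with `swap` as a whitespace-delimited field are touched, which avoids commenting out unrelated entries that merely mention the word in a path.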

4: Disable SELinux

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config
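On CentOS 7, `/etc/sysconfig/selinux` is normally a symbolic link to `/etc/selinux/config`, and a plain `sed -i` replaces a symlink with a regular file. The sketch below handles both `enforcing` and `permissive` in one pass and keeps the link intact. It assumes GNU sed (which provides `--follow-symlinks`), and the function name is hypothetical. The config change takes effect at the next reboot; `setenforce 0` from step 2 covers the running system.

```shell
# Set SELINUX=disabled in an SELinux config file.
# --follow-symlinks makes sed edit the link target instead of
# replacing the link itself (GNU sed only).
set_selinux_disabled() {
    sed -i --follow-symlinks -E \
        's/^SELINUX=(enforcing|permissive)[[:space:]]*$/SELINUX=disabled/' "$1"
}

# Usage on a node:
#   set_selinux_disabled /etc/selinux/config
```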

### Additional notes

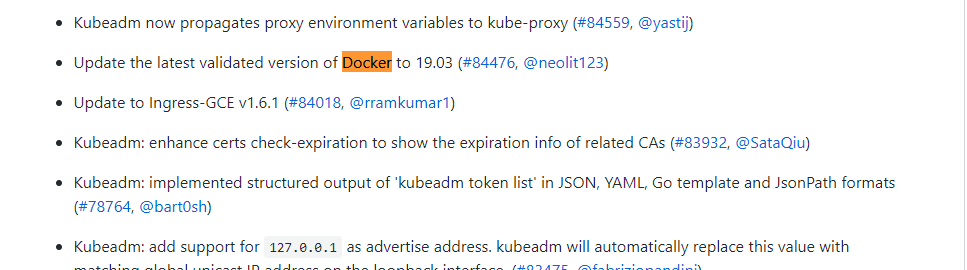

Docker version requirements:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.17.md

Docker requirement for v1.17:

Docker versions docker-ce-19.03

Requirement for v1.16:

Drop the support for docker 1.9.x. Docker versions 1.10.3, 1.11.2, 1.12.6 have been validated.

List the available Docker versions:

yum list docker-ce --showduplicates|sort -r

5: Install dependencies

yum install yum-utils device-mapper-persistent-data lvm2 -y

6: Add the Docker repository

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

7: Update the package index and install a specific version

yum update && yum install docker-ce-18.06.3.ce -y

8: Configure Docker for Kubernetes and set a registry mirror

sudo mkdir -p /etc/docker

cat << EOF > /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://0bb06s1q.mirror.aliyuncs.com"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF

systemctl daemon-reload && systemctl restart docker && systemctl enable docker.service
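After Docker restarts, it is worth confirming that the `native.cgroupdriver=systemd` option actually took effect, since the kubelet and Docker need to agree on the cgroup driver. The sketch below extracts the driver from `docker info` text; the helper name `cgroup_driver` is an assumption for illustration.

```shell
# Read `docker info` output on stdin and print the cgroup driver.
# Leading whitespace is tolerated because newer Docker releases
# indent this line.
cgroup_driver() {
    sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}

# Usage on a node with Docker running:
#   docker info 2>/dev/null | cgroup_driver
```

If the command prints `cgroupfs` instead of `systemd`, recheck the syntax of `/etc/docker/daemon.json` and restart Docker again.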

9: Install and configure containerd

### Newer Docker versions install this package automatically, so this step can be skipped

yum install containerd.io -y

mkdir -p /etc/containerd

containerd config default > /etc/containerd/config.toml

systemctl restart containerd

10: Add the Kubernetes repository

Kubernetes installation:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

11: Install the latest version or a specific version

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable --now kubelet

Specific version:

yum install kubeadm-1.18.0-0 kubelet-1.18.0-0 kubectl-1.18.0-0 --disableexcludes=kubernetes

systemctl enable --now kubelet

12: Enable kernel IP forwarding

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

sysctl --system
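The two `net.bridge.*` keys are typically only present once the `br_netfilter` kernel module is loaded, so `sysctl --system` can silently skip them on a fresh host. The following sketch reads the live values back from procfs to confirm the settings applied; the function name and the optional root-directory argument are assumptions added for illustration.

```shell
# Print the current value of a sysctl key by reading it from procfs.
# $1: key such as net.ipv4.ip_forward
# $2: optional root directory (defaults to /proc/sys), useful for testing
sysctl_value() {
    key_path="$(printf '%s' "$1" | tr '.' '/')"
    cat "${2:-/proc/sys}/$key_path"
}

# Usage on a node (load br_netfilter first so the bridge keys exist):
#   modprobe br_netfilter
#   for k in net.bridge.bridge-nf-call-iptables \
#            net.bridge.bridge-nf-call-ip6tables \
#            net.ipv4.ip_forward; do
#       printf '%s = %s\n' "$k" "$(sysctl_value "$k")"
#   done
```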

13: Check and load the IPVS kernel modules

Check:

lsmod | grep ip_vs

Load:

modprobe ip_vs
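`modprobe ip_vs` loads only the core module. kube-proxy in IPVS mode also relies on scheduler modules such as `ip_vs_rr`, `ip_vs_wrr`, and `ip_vs_sh`. The module list below is an assumption based on the kube-proxy IPVS documentation, so adjust it for your kernel. The sketch reports which of them are missing from `lsmod` output; the function name is hypothetical.

```shell
# Read `lsmod` output on stdin and print each expected IPVS module
# that is not loaded. The header line ("Module Size Used by") is ignored.
missing_ipvs_modules() {
    loaded="$(awk '$1 != "Module" { print $1 }')"
    for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
        printf '%s\n' "$loaded" | grep -qx "$m" || printf '%s\n' "$m"
    done
}

# Usage on a node:
#   for m in $(lsmod | missing_ipvs_modules); do modprobe "$m"; done
```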

### Custom configuration file

Master node deployment:

14: Export the default kubeadm cluster configuration

kubeadm config print init-defaults > init.default.yaml   # export the configuration file

15: Edit the configuration file

A: Set the master node IP in advertiseAddress:

B: Point imageRepository: at the Aliyun mirror registry in China

C: Set a custom pod address range with podSubnet: "192.168.0.0/16"

D: Enable IPVS mode by adding a KubeProxyConfiguration document after ---

[root@master ~]# cat init.default.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  # master node IP
  advertiseAddress: 192.168.31.147
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
# pull container images from a mirror registry in China
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.17.0
networking:
  dnsDomain: cluster.local
  # custom pod IP range
  podSubnet: "192.168.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
# enable IPVS mode
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs
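One thing to check before running `kubeadm init` is whether the pod range overlaps the network the nodes live on, since overlapping ranges are a common source of routing problems. In this walkthrough the node addresses (192.168.31.x) fall inside the chosen `podSubnet` of 192.168.0.0/16. The sketch below tests whether an IPv4 address lies inside a CIDR block; both function names are hypothetical helpers, not kubeadm features.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs="$IFS"
    IFS=.
    set -- $1          # split the address into its four octets
    IFS="$old_ifs"
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed (exit 0) when address $1 is inside CIDR block $2.
ip_in_cidr() {
    cidr_net="${2%/*}"
    cidr_bits="${2#*/}"
    mask=$(( (0xFFFFFFFF << (32 - $cidr_bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & $mask )) -eq $(( $(ip_to_int "$cidr_net") & $mask )) ]
}

# Check the node address from this walkthrough against the pod range.
if ip_in_cidr 192.168.31.90 192.168.0.0/16; then
    echo "node address 192.168.31.90 is inside pod CIDR 192.168.0.0/16"
fi
```

If the check reports an overlap, choosing a different `podSubnet` (and the matching pool in the network plugin manifest) before initialization is usually simpler than changing it afterwards.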

16: List the images that need to be pulled

kubeadm config images list --config init.default.yaml

17: Pull the Kubernetes container images from the Aliyun registry

kubeadm config images pull --config init.default.yaml

[root@master ~]# kubeadm config images list --config init.default.yaml

W0204 00:37:10.879146 30608 validation.go:28] Cannot validate kubelet config - no validator is available

W0204 00:37:10.879175 30608 validation.go:28] Cannot validate kube-proxy config - no validator is available

registry.aliyuncs.com/google_containers/kube-apiserver:v1.17.2

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.17.2

registry.aliyuncs.com/google_containers/kube-scheduler:v1.17.2

registry.aliyuncs.com/google_containers/kube-proxy:v1.17.2

registry.aliyuncs.com/google_containers/pause:3.1

registry.aliyuncs.com/google_containers/etcd:3.4.3-0

registry.aliyuncs.com/google_containers/coredns:1.6.5

[root@master ~]# kubeadm config images pull --config init.default.yaml

W0204 00:37:25.590147 30636 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0204 00:37:25.590179 30636 validation.go:28] Cannot validate kubelet config - no validator is available

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.17.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.17.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.17.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.17.2

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.4.3-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.6.5

[root@master ~]#

18: Deploy the Kubernetes cluster

# When deploying on Aliyun, use the internal network address

# Start the cluster deployment

kubeadm init --config=init.default.yaml

[root@master ~]# kubeadm init --config=init.default.yaml | tee kubeadm-init.log

W0204 00:39:48.825538 30989 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0204 00:39:48.825592 30989 validation.go:28] Cannot validate kubelet config - no validator is available

[init] Using Kubernetes version: v1.17.2

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "master" could not be reached

[WARNING Hostname]: hostname "master": lookup master on 114.114.114.114:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.31.90]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [master localhost] and IPs [192.168.31.90 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [master localhost] and IPs [192.168.31.90 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

W0204 00:39:51.810550 30989 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0204 00:39:51.811109 30989 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 35.006692 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.31.90:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:09959f846dba6a855fbbd090e99b4ba1df4e643ec1a1578c28eaf9a9d3ea6a03

[root@master ~]#
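The bootstrap token in this configuration has a `ttl` of 24h, so nodes added later need a fresh token. `kubeadm token create --print-join-command` prints a complete join command, and the CA certificate hash can also be recomputed with `openssl`, as sketched below. The function name is illustrative, and the pipeline assumes an RSA CA key, which is what the log above suggests but is not verified here.

```shell
# Print the SHA-256 hash of a CA certificate's public key, in the
# form expected after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Usage on the master:
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
#   kubeadm token create --print-join-command
```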

19: Configure the user's kubeconfig credentials

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

20: Check the cluster status

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 2m34s v1.17.2

[root@master ~]#

[root@master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

21: kubectl command completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

22: Deploy the Kubernetes network

Calico

https://www.projectcalico.org/

https://docs.projectcalico.org/getting-started/kubernetes/

Calico network deployment:

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

[root@master ~]# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

[root@master ~]#

23: Check the network plugin deployment status

[root@master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-77c4b7448-hfqws 1/1 Running 0 32s

calico-node-59p6f 1/1 Running 0 32s

coredns-9d85f5447-6wgkd 1/1 Running 0 2m5s

coredns-9d85f5447-bkjj8 1/1 Running 0 2m5s

etcd-master 1/1 Running 0 2m2s

kube-apiserver-master 1/1 Running 0 2m2s

kube-controller-manager-master 1/1 Running 0 2m2s

kube-proxy-lwww6 1/1 Running 0 2m5s

kube-scheduler-master 1/1 Running 0 2m2s

24: Add worker nodes

[root@node02 ~]# kubeadm join 192.168.31.90:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:09959f846dba6a855fbbd090e99b4ba1df4e643ec1a1578c28eaf9a9d3ea6a03

W0204 00:46:53.878006 30928 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "node02" could not be reached

[WARNING Hostname]: hostname "node02": lookup node02 on 114.114.114.114:53: no such host

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

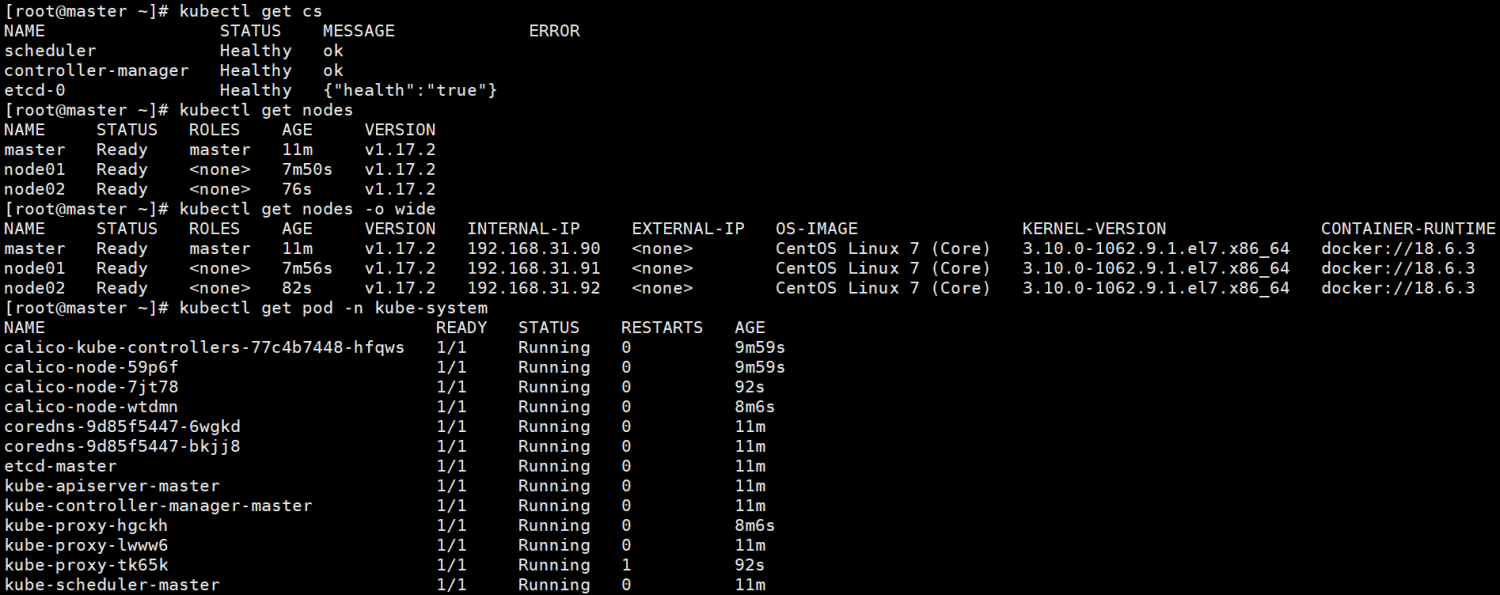

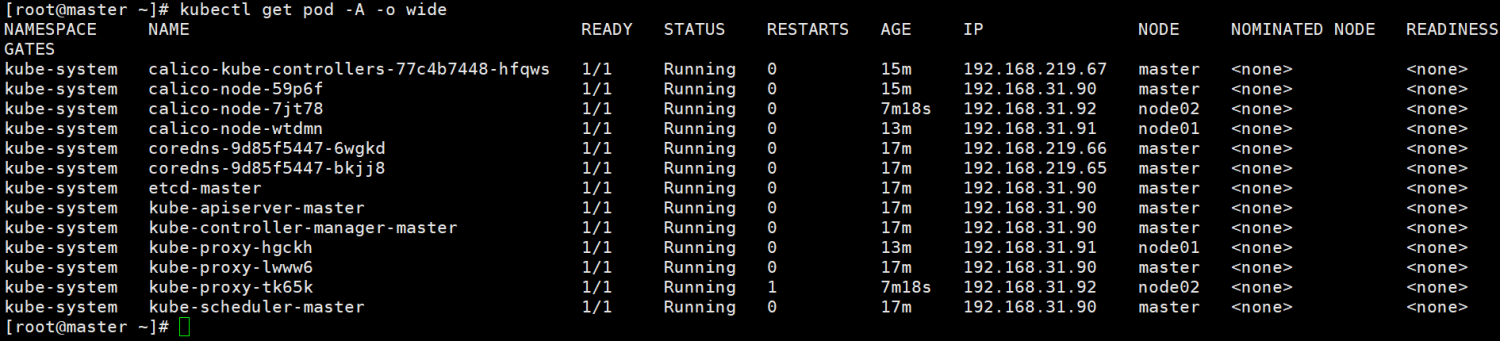

25: Verify the cluster

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 19m v1.17.2

node01 Ready <none> 15m v1.17.2

node02 Ready <none> 13m v1.17.2

[root@master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

[root@master ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-77c4b7448-zd6dt 0/1 Error 1 119s

kube-system calico-node-2fcbs 1/1 Running 0 119s

kube-system calico-node-56f95 1/1 Running 0 119s

kube-system calico-node-svlg9 1/1 Running 0 119s

kube-system coredns-9d85f5447-4f4hq 0/1 Running 0 19m

kube-system coredns-9d85f5447-n68wd 0/1 Running 0 19m

kube-system etcd-master 1/1 Running 0 19m

kube-system kube-apiserver-master 1/1 Running 0 19m

kube-system kube-controller-manager-master 1/1 Running 0 19m

kube-system kube-proxy-ch4vl 1/1 Running 1 15m

kube-system kube-proxy-fjl5c 1/1 Running 1 19m

kube-system kube-proxy-hhsqc 1/1 Running 1 13m

kube-system kube-scheduler-master 1/1 Running 0 19m

[root@master ~]#
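In the listing above, `calico-kube-controllers` and both `coredns` pods report `0/1` in the READY column, so the cluster is not fully healthy yet even though every node is `Ready`. A small filter like the following prints the pods whose ready count differs from the total; the function name is hypothetical.

```shell
# Read `kubectl get pod` output on stdin and print the name of every
# pod whose READY column (ready/total) shows fewer ready containers
# than total. Works with or without the NAMESPACE column, because it
# locates READY by its n/m shape and takes the field before it as NAME.
not_ready_pods() {
    awk '{
        for (i = 2; i <= NF; i++) {
            if ($i ~ /^[0-9]+\/[0-9]+$/) {
                split($i, r, "/")
                if (r[1] != r[2]) print $(i - 1)
                break
            }
        }
    }'
}

# Usage on the master:
#   kubectl get pod -A | not_ready_pods
```

For any pod it lists, `kubectl -n kube-system describe pod <name>` and `kubectl -n kube-system logs <name>` are the usual next steps.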

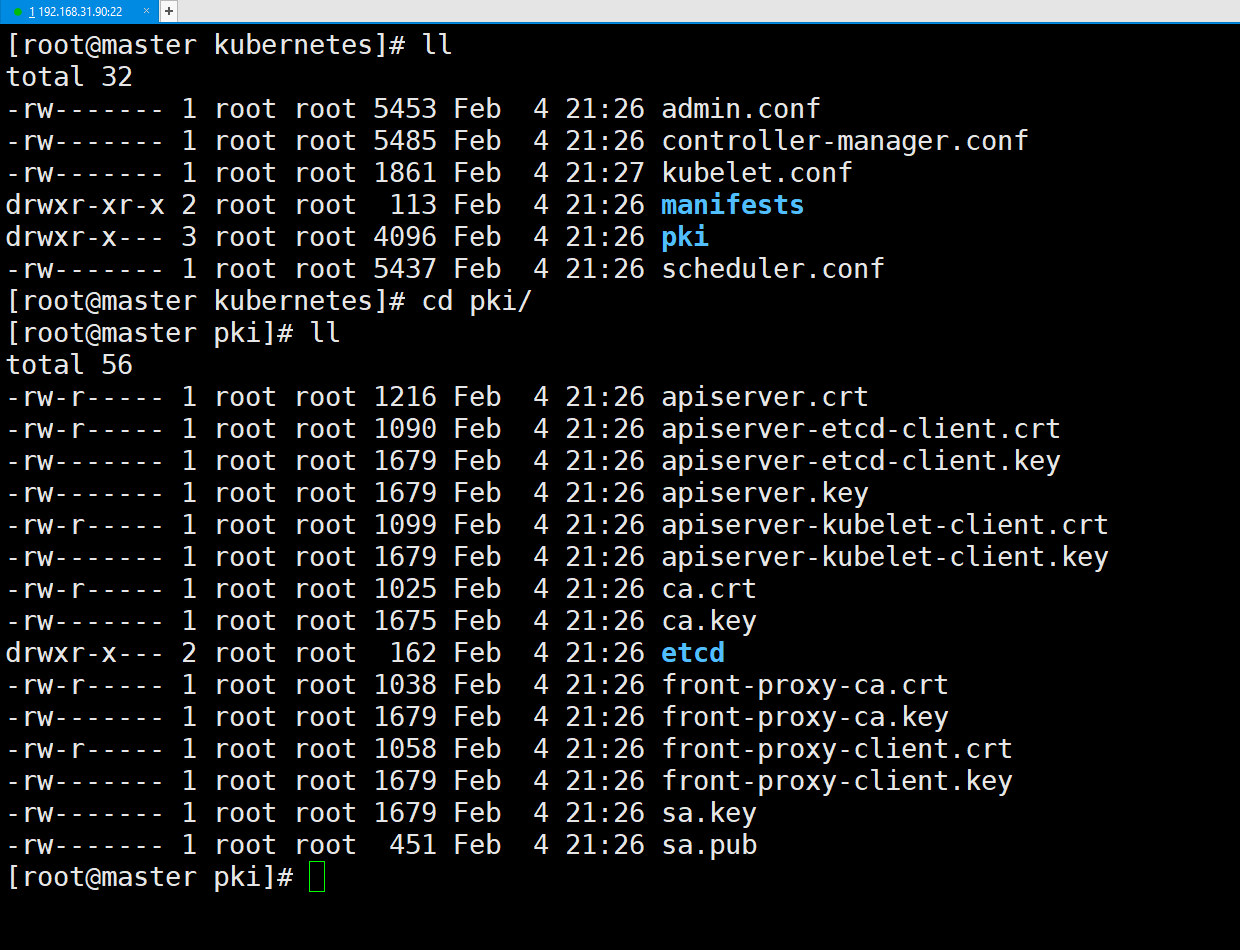

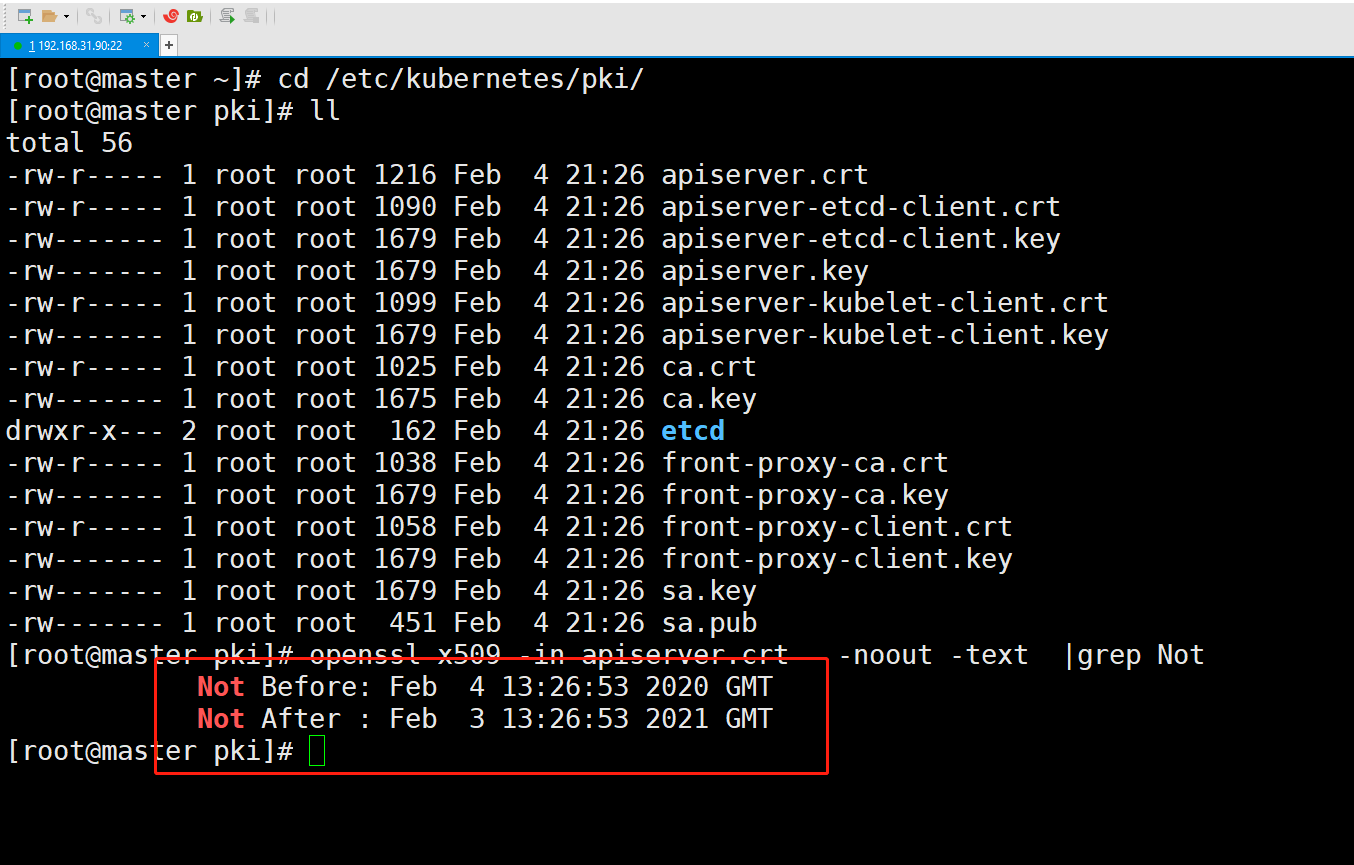

26: View the certificates

[root@master ~]# cd /etc/kubernetes/

[root@master kubernetes]# ll

total 32

-rw------- 1 root root 5453 Feb 4 21:26 admin.conf

-rw------- 1 root root 5485 Feb 4 21:26 controller-manager.conf

-rw------- 1 root root 1861 Feb 4 21:27 kubelet.conf

drwxr-xr-x 2 root root 113 Feb 4 21:26 manifests

drwxr-x--- 3 root root 4096 Feb 4 21:26 pki

-rw------- 1 root root 5437 Feb 4 21:26 scheduler.conf

[root@master kubernetes]# tree

.

├── admin.conf

├── controller-manager.conf

├── kubelet.conf

├── manifests

│ ├── etcd.yaml

│ ├── kube-apiserver.yaml

│ ├── kube-controller-manager.yaml

│ └── kube-scheduler.yaml

├── pki

│ ├── apiserver.crt

│ ├── apiserver-etcd-client.crt

│ ├── apiserver-etcd-client.key

│ ├── apiserver.key

│ ├── apiserver-kubelet-client.crt

│ ├── apiserver-kubelet-client.key

│ ├── ca.crt

│ ├── ca.key

│ ├── etcd

│ │ ├── ca.crt

│ │ ├── ca.key

│ │ ├── healthcheck-client.crt

│ │ ├── healthcheck-client.key

│ │ ├── peer.crt

│ │ ├── peer.key

│ │ ├── server.crt

│ │ └── server.key

│ ├── front-proxy-ca.crt

│ ├── front-proxy-ca.key

│ ├── front-proxy-client.crt

│ ├── front-proxy-client.key

│ ├── sa.key

│ └── sa.pub

└── scheduler.conf

3 directories, 30 files

[root@master kubernetes]#

27: Check the certificate validity period

[root@master ~]# cd /etc/kubernetes/pki/

[root@master pki]# ll

total 56

-rw-r----- 1 root root 1216 Feb 4 21:26 apiserver.crt

-rw-r----- 1 root root 1090 Feb 4 21:26 apiserver-etcd-client.crt

-rw------- 1 root root 1679 Feb 4 21:26 apiserver-etcd-client.key

-rw------- 1 root root 1679 Feb 4 21:26 apiserver.key

-rw-r----- 1 root root 1099 Feb 4 21:26 apiserver-kubelet-client.crt

-rw------- 1 root root 1679 Feb 4 21:26 apiserver-kubelet-client.key

-rw-r----- 1 root root 1025 Feb 4 21:26 ca.crt

-rw------- 1 root root 1675 Feb 4 21:26 ca.key

drwxr-x--- 2 root root 162 Feb 4 21:26 etcd

-rw-r----- 1 root root 1038 Feb 4 21:26 front-proxy-ca.crt

-rw------- 1 root root 1679 Feb 4 21:26 front-proxy-ca.key

-rw-r----- 1 root root 1058 Feb 4 21:26 front-proxy-client.crt

-rw------- 1 root root 1679 Feb 4 21:26 front-proxy-client.key

-rw------- 1 root root 1679 Feb 4 21:26 sa.key

-rw------- 1 root root 451 Feb 4 21:26 sa.pub

[root@master pki]# openssl x509 -in apiserver.crt -noout -text |grep Not

Not Before: Feb 4 13:26:53 2020 GMT

Not After : Feb 3 13:26:53 2021 GMT

[root@master pki]#
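The `Not After` date above shows that the API server certificate is valid for one year. Rather than reading dates by eye, `openssl x509 -checkend` can answer whether a certificate expires within a given window. A minimal sketch follows, with a hypothetical function name and an example 30-day threshold.

```shell
# Succeed (exit 0) when certificate $1 expires within $2 days.
# If openssl cannot read the file, the function also returns 0,
# which errs on the side of flagging the certificate.
cert_expires_within_days() {
    if openssl x509 -in "$1" -noout -checkend "$(( $2 * 86400 ))" >/dev/null 2>&1; then
        return 1   # still valid at the end of the window
    fi
    return 0
}

# Usage on the master:
#   for crt in /etc/kubernetes/pki/*.crt; do
#       cert_expires_within_days "$crt" 30 && echo "renew soon: $crt"
#   done
```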

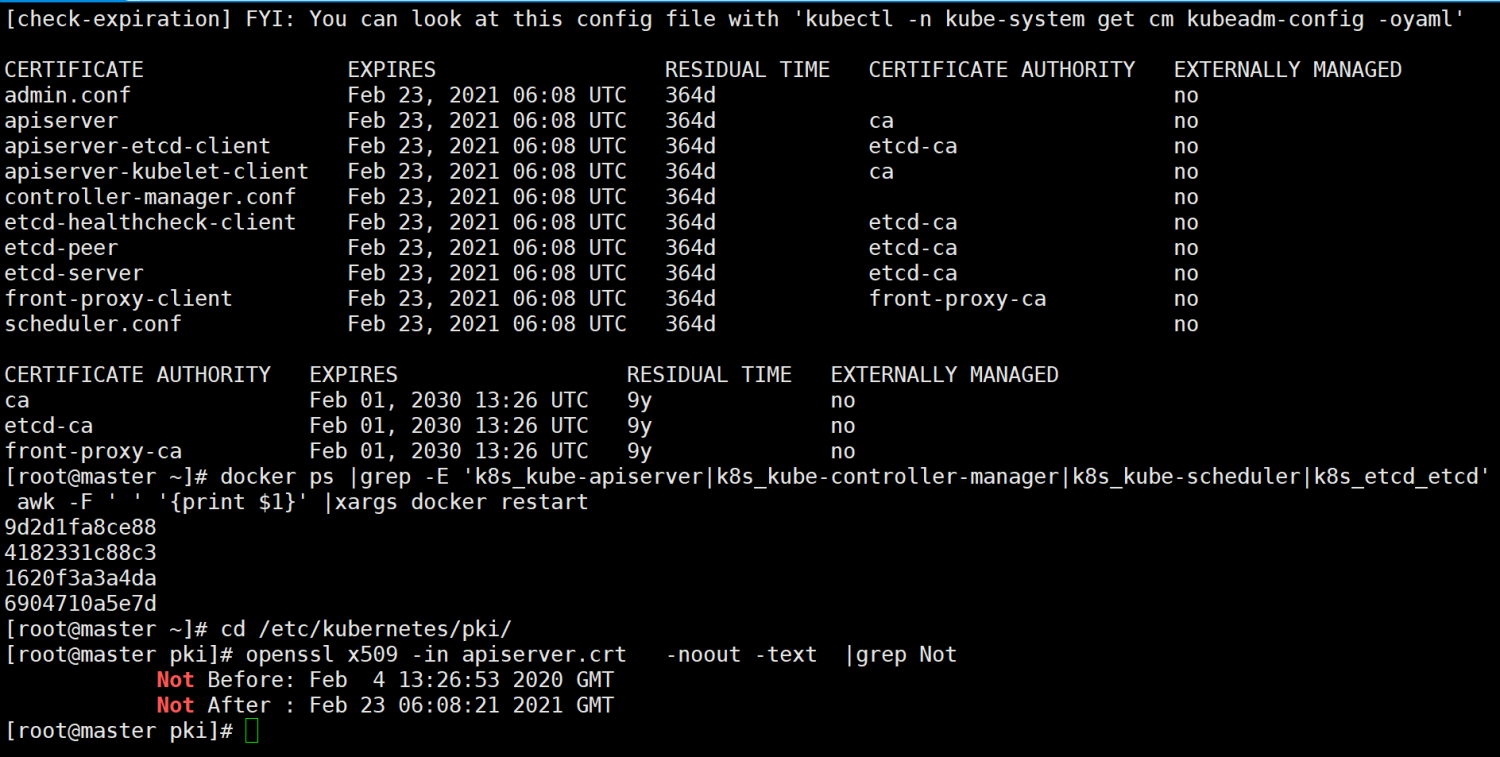

28: Renew the certificates

[root@master ~]# kubeadm config view > /root/kubeadm.yaml

[root@master ~]# ll /root/kubeadm.yaml

-rw-r----- 1 root root 492 Feb 24 14:07 /root/kubeadm.yaml

[root@master ~]# cat /root/kubeadm.yaml

apiServer:
  extraArgs:
    authorization-mode: Node,RBAC
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.17.2
networking:
  dnsDomain: cluster.local
  podSubnet: 192.168.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}

[root@master ~]# kubeadm alpha certs renew all --config=/root/kubeadm.yaml

W0224 14:08:21.385077 47490 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0224 14:08:21.385111 47490 validation.go:28] Cannot validate kubelet config - no validator is available

certificate embedded in the kubeconfig file for the admin to use and for kubeadm itself renewed

certificate for serving the Kubernetes API renewed

certificate the apiserver uses to access etcd renewed

certificate for the API server to connect to kubelet renewed

certificate embedded in the kubeconfig file for the controller manager to use renewed

certificate for liveness probes to healthcheck etcd renewed

certificate for etcd nodes to communicate with each other renewed

certificate for serving etcd renewed

certificate for the front proxy client renewed

certificate embedded in the kubeconfig file for the scheduler manager to use renewed

[root@master ~]# kubeadm alpha certs check-expiration

[check-expiration] Reading configuration from the cluster...

[check-expiration] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

CERTIFICATE EXPIRES RESIDUAL TIME CERTIFICATE AUTHORITY EXTERNALLY MANAGED

admin.conf Feb 23, 2021 06:08 UTC 364d no

apiserver Feb 23, 2021 06:08 UTC 364d ca no

apiserver-etcd-client Feb 23, 2021 06:08 UTC 364d etcd-ca no

apiserver-kubelet-client Feb 23, 2021 06:08 UTC 364d ca no

controller-manager.conf Feb 23, 2021 06:08 UTC 364d no

etcd-healthcheck-client Feb 23, 2021 06:08 UTC 364d etcd-ca no

etcd-peer Feb 23, 2021 06:08 UTC 364d etcd-ca no

etcd-server Feb 23, 2021 06:08 UTC 364d etcd-ca no

front-proxy-client Feb 23, 2021 06:08 UTC 364d front-proxy-ca no

scheduler.conf Feb 23, 2021 06:08 UTC 364d no

CERTIFICATE AUTHORITY EXPIRES RESIDUAL TIME EXTERNALLY MANAGED

ca Feb 01, 2030 13:26 UTC 9y no

etcd-ca Feb 01, 2030 13:26 UTC 9y no

front-proxy-ca Feb 01, 2030 13:26 UTC 9y no

[root@master ~]# docker ps |grep -E 'k8s_kube-apiserver|k8s_kube-controller-manager|k8s_kube-scheduler|k8s_etcd_etcd' | awk -F ' ' '{print $1}' |xargs docker restart

9d2d1fa8ce88

4182331c88c3

1620f3a3a4da

6904710a5e7d

[root@master ~]# cd /etc/kubernetes/pki/

[root@master pki]# openssl x509 -in apiserver.crt -noout -text |grep Not

Not Before: Feb 4 13:26:53 2020 GMT

Not After : Feb 23 06:08:21 2021 GMT

[root@master pki]#

29: Kubernetes management platform

Rancher

https://docs.rancher.cn/rancher2x/

30: Cluster upgrade from v1.17.2 to v1.17.4 (upgrades must not skip a minor version)

Check for new versions: kubeadm upgrade plan

[root@master ~]# kubeadm upgrade plan

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.17.2

[upgrade/versions] kubeadm version: v1.17.2

I0326 09:28:34.816310 3805 version.go:251] remote version is much newer: v1.18.0; falling back to: stable-1.17

[upgrade/versions] Latest stable version: v1.17.4

[upgrade/versions] Latest version in the v1.17 series: v1.17.4

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 3 x v1.17.2 v1.17.4

Upgrade to the latest version in the v1.17 series:

COMPONENT CURRENT AVAILABLE

API Server v1.17.2 v1.17.4

Controller Manager v1.17.2 v1.17.4

Scheduler v1.17.2 v1.17.4

Kube Proxy v1.17.2 v1.17.4

CoreDNS 1.6.5 1.6.5

Etcd 3.4.3 3.4.3-0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.17.4

Note: Before you can perform this upgrade, you have to update kubeadm to v1.17.4.

_____________________________________________________________________

[root@master ~]#

Upgrade kubeadm and kubectl:

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

[root@master ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.4", GitCommit:"8d8aa39598534325ad77120c120a22b3a990b5ea", GitTreeState:"clean", BuildDate:"2020-03-12T21:03:42Z", GoVersion:"go1.13.8", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.2", GitCommit:"59603c6e503c87169aea6106f57b9f242f64df89", GitTreeState:"clean", BuildDate:"2020-01-18T23:22:30Z", GoVersion:"go1.13.5", Compiler:"gc", Platform:"linux/amd64"}

[root@master ~]#

Preview the upgrade using the cluster configuration file:

kubeadm upgrade apply v1.17.4 --config init.default.yaml --dry-run

Upgrade to the specified version:

[root@master ~]# kubeadm upgrade apply v1.17.4 --config init.default.yaml

W0326 09:42:59.690575 25446 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0326 09:42:59.690611 25446 validation.go:28] Cannot validate kubelet config - no validator is available

[upgrade/config] Making sure the configuration is correct:

W0326 09:42:59.701115 25446 common.go:94] WARNING: Usage of the --config flag for reconfiguring the cluster during upgrade is not recommended!

W0326 09:42:59.701862 25446 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0326 09:42:59.701870 25446 validation.go:28] Cannot validate kubelet config - no validator is available

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/version] You have chosen to change the cluster version to "v1.17.4"

[upgrade/versions] Cluster version: v1.17.2

[upgrade/versions] kubeadm version: v1.17.4

[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y

[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]

[upgrade/prepull] Prepulling image for component etcd.

[upgrade/prepull] Prepulling image for component kube-apiserver.

[upgrade/prepull] Prepulling image for component kube-controller-manager.

[upgrade/prepull] Prepulling image for component kube-scheduler.

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-etcd

[upgrade/prepull] Prepulled image for component etcd.

[upgrade/prepull] Prepulled image for component kube-scheduler.

[upgrade/prepull] Prepulled image for component kube-apiserver.

[upgrade/prepull] Prepulled image for component kube-controller-manager.

[upgrade/prepull] Successfully prepulled the images for all the control plane components

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.17.4"...

Static pod: kube-apiserver-master hash: 221485f981f15fee9c0123f34ca082cd

Static pod: kube-controller-manager-master hash: 8d9fdb8447a20709c28d62b361e21c5c

Static pod: kube-scheduler-master hash: 5fd6ddfbc568223e0845f80bd6fd6a1a

[upgrade/etcd] Upgrading to TLS for etcd

[upgrade/etcd] Non fatal issue encountered during upgrade: the desired etcd version for this Kubernetes version "v1.17.4" is "3.4.3-0", but the current etcd version is "3.4.3". Won't downgrade etcd, instead just continue

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests668951726"

[upgrade/staticpods] Preparing for "kube-apiserver" upgrade

[upgrade/staticpods] Renewing apiserver certificate

[upgrade/staticpods] Renewing apiserver-kubelet-client certificate

[upgrade/staticpods] Renewing front-proxy-client certificate

[upgrade/staticpods] Renewing apiserver-etcd-client certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-03-26-09-43-17/kube-apiserver.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-apiserver-master hash: 221485f981f15fee9c0123f34ca082cd

Static pod: kube-apiserver-master hash: 5fc5a9f3b46c1fd494c3e99e0c7d307c

[apiclient] Found 1 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade

[upgrade/staticpods] Renewing controller-manager.conf certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-03-26-09-43-17/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-controller-manager-master hash: 8d9fdb8447a20709c28d62b361e21c5c

Static pod: kube-controller-manager-master hash: 31ffb42eb9357ac50986e0b46ee527f8

[apiclient] Found 1 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-scheduler" upgrade

[upgrade/staticpods] Renewing scheduler.conf certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-03-26-09-43-17/kube-scheduler.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-scheduler-master hash: 5fd6ddfbc568223e0845f80bd6fd6a1a

Static pod: kube-scheduler-master hash: b265ed564e34d3887fe43a6a6210fbd4

[apiclient] Found 1 Pods for label selector component=kube-scheduler

[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[addons]: Migrating CoreDNS Corefile

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.17.4". Enjoy!

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

[root@master ~]#

The components were upgraded successfully.

Restart the kubelet on the master node:

systemctl daemon-reload

systemctl restart kubelet

After a short wait, the master components show as updated:

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-77c4b7448-9nrx2 1/1 Running 6 50d

calico-node-mc7s7 0/1 Running 6 50d

calico-node-p8rm7 1/1 Running 5 50d

calico-node-wwtkl 1/1 Running 5 50d

coredns-9d85f5447-ht4c4 1/1 Running 6 50d

coredns-9d85f5447-wvjlb 1/1 Running 6 50d

etcd-master 1/1 Running 6 50d

kube-apiserver-master 1/1 Running 0 2m59s

kube-controller-manager-master 1/1 Running 0 2m55s

kube-proxy-5hdvx 1/1 Running 0 2m1s

kube-proxy-7hdzf 1/1 Running 0 2m7s

kube-proxy-v4hjt 1/1 Running 0 2m17s

kube-scheduler-master 1/1 Running 0 2m52s

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl restart kubelet

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 50d v1.17.2

node01 Ready <none> 50d v1.17.2

node02 Ready <none> 50d v1.17.2

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 50d v1.17.4

node01 Ready <none> 50d v1.17.2

node02 Ready <none> 50d v1.17.2

[root@master ~]#

Upgrade the kubelet on the worker nodes

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl daemon-reload

systemctl restart kubelet
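The official kubeadm upgrade guide additionally drains each worker before its packages are touched and runs `kubeadm upgrade node` on it. The sketch below only prints that sequence for review and executes nothing. The function name, node name, and package version are placeholders, and the package naming follows the `kubeadm-1.18.0-0` pattern used elsewhere in this document.

```shell
# Print a per-node upgrade sequence for review.
# $1: node name as shown by `kubectl get nodes`
# $2: package version such as 1.17.4-0
# The drain and uncordon lines are meant for the control plane;
# the lines in between are meant for the worker itself.
print_node_upgrade_plan() {
    node="$1"
    version="$2"
    cat <<EOF
kubectl drain $node --ignore-daemonsets
yum install -y kubeadm-$version --disableexcludes=kubernetes
kubeadm upgrade node
yum install -y kubelet-$version kubectl-$version --disableexcludes=kubernetes
systemctl daemon-reload
systemctl restart kubelet
kubectl uncordon $node
EOF
}

print_node_upgrade_plan node01 1.17.4-0
```

Draining first moves workloads off the node, so the kubelet restart does not interrupt running pods.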

Once the updated node data has been written to etcd, the query shows that all nodes are upgraded:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 50d v1.17.4

node01 Ready <none> 50d v1.17.2

node02 Ready <none> 50d v1.17.2

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 50d v1.17.4

node01 Ready <none> 50d v1.17.4

node02 Ready <none> 50d v1.17.4

[root@master ~]#

Upgrade to the latest version

Official documentation:

https://kubernetes.io/zh/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

yum list --showduplicates kubeadm --disableexcludes=kubernetes

yum install kubeadm-1.18.0-0 --disableexcludes=kubernetes

yum install kubelet-1.18.0-0 kubectl-1.18.0-0 --disableexcludes=kubernetes
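Because an upgrade must not skip a minor version, it helps to check the current and target versions before installing packages. A minimal sketch follows, assuming both versions share the same major number; it compares only the minor component and ignores patch levels. Both function names are hypothetical.

```shell
# Extract the minor number from a version such as v1.17.4 or 1.18.0-0.
minor_of() {
    printf '%s\n' "$1" | sed -E 's/^v?[0-9]+\.([0-9]+).*$/\1/'
}

# Succeed (exit 0) when going from version $1 to version $2 stays
# within the same minor release or moves up by exactly one.
can_upgrade() {
    step=$(( $(minor_of "$2") - $(minor_of "$1") ))
    [ "$step" -ge 0 ] && [ "$step" -le 1 ]
}

# Example: the step taken in this section, and one that skips v1.17.
can_upgrade v1.17.4 v1.18.0 && echo "v1.17.4 -> v1.18.0: allowed"
can_upgrade v1.16.0 v1.18.0 || echo "v1.16.0 -> v1.18.0: skips a minor version"
```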